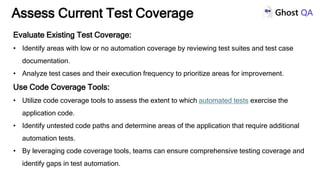

The document outlines strategies for improving automation test coverage, that is, the share of software functionality verified by automated tests. Key methods include assessing current testing practices, prioritizing high-risk and frequently repeated test cases for automation, and applying sound test design techniques such as data-driven testing and cross-browser testing. Continuous integration and ongoing monitoring of test results are also highlighted as crucial for improving software quality and enabling rapid deployment.
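To make the measurement and prioritization ideas concrete, here is a minimal Python sketch under stated assumptions. Coverage is taken to be the percentage of test cases that are automated. Manual cases are ranked by a hypothetical priority score of risk multiplied by execution frequency. The `SuiteEntry` type, its fields, and the scoring formula are illustrative only and do not come from the document.

```python
from dataclasses import dataclass


@dataclass
class SuiteEntry:
    """Hypothetical record describing one test case in a suite."""
    name: str
    automated: bool
    risk: int              # assumed scale: 1 (low) to 5 (high)
    runs_per_release: int  # how often the case is executed per release


def automation_coverage(cases: list[SuiteEntry]) -> float:
    """Percentage of test cases that are automated (0.0 for an empty suite)."""
    if not cases:
        return 0.0
    automated = sum(1 for c in cases if c.automated)
    return 100.0 * automated / len(cases)


def automation_candidates(cases: list[SuiteEntry]) -> list[SuiteEntry]:
    """Manual cases, highest hypothetical priority (risk x repetition) first."""
    manual = [c for c in cases if not c.automated]
    return sorted(manual, key=lambda c: c.risk * c.runs_per_release, reverse=True)


# Example suite with made-up values, for illustration only.
suite = [
    SuiteEntry("login", automated=True, risk=5, runs_per_release=20),
    SuiteEntry("checkout", automated=False, risk=5, runs_per_release=15),
    SuiteEntry("profile_edit", automated=False, risk=2, runs_per_release=3),
    SuiteEntry("search", automated=False, risk=3, runs_per_release=30),
]

print(f"Automation coverage: {automation_coverage(suite):.1f}%")  # 25.0%
print([c.name for c in automation_candidates(suite)])  # ['search', 'checkout', 'profile_edit']
```

A multiplicative score is only one possible choice. Teams may instead weight risk more heavily or factor in the cost of automating each case. Tracking the coverage figure across builds in a CI pipeline is one way to carry out the ongoing monitoring the summary describes.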