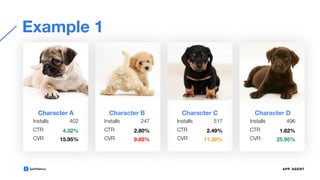

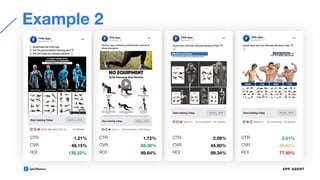

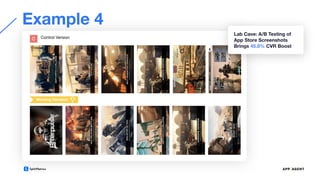

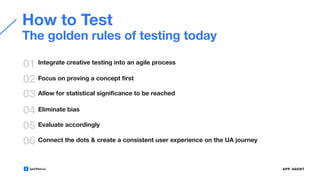

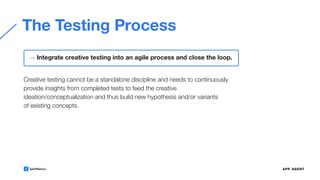

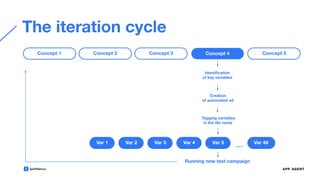

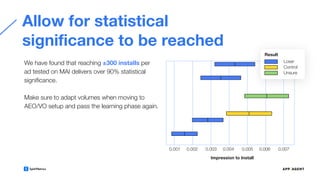

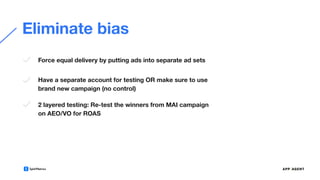

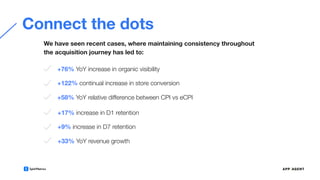

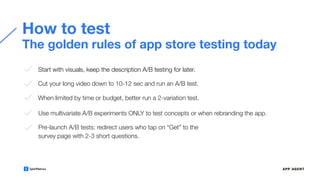

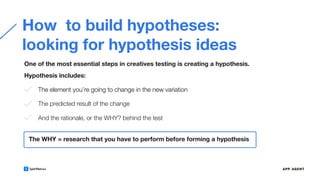

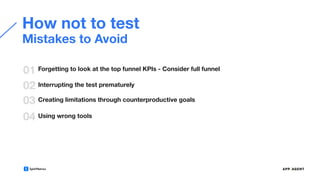

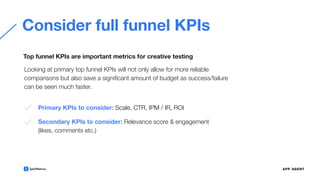

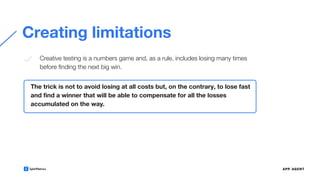

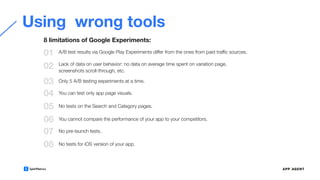

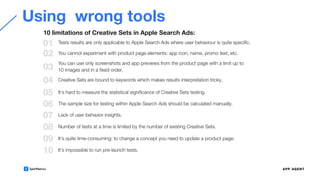

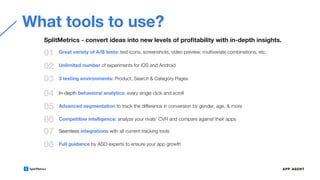

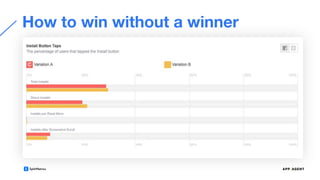

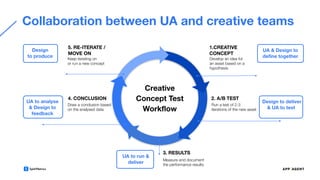

The document emphasizes the importance of creative testing in user acquisition (UA), offers strategies for effective A/B testing, and highlights the need for collaboration between UA and creative teams. It outlines key metrics for evaluation, common pitfalls to avoid, and the impact of iOS updates such as IDFA deprecation on UA practices. It also stresses the need for continuous testing and the value of tests that do not yield a clear winner.