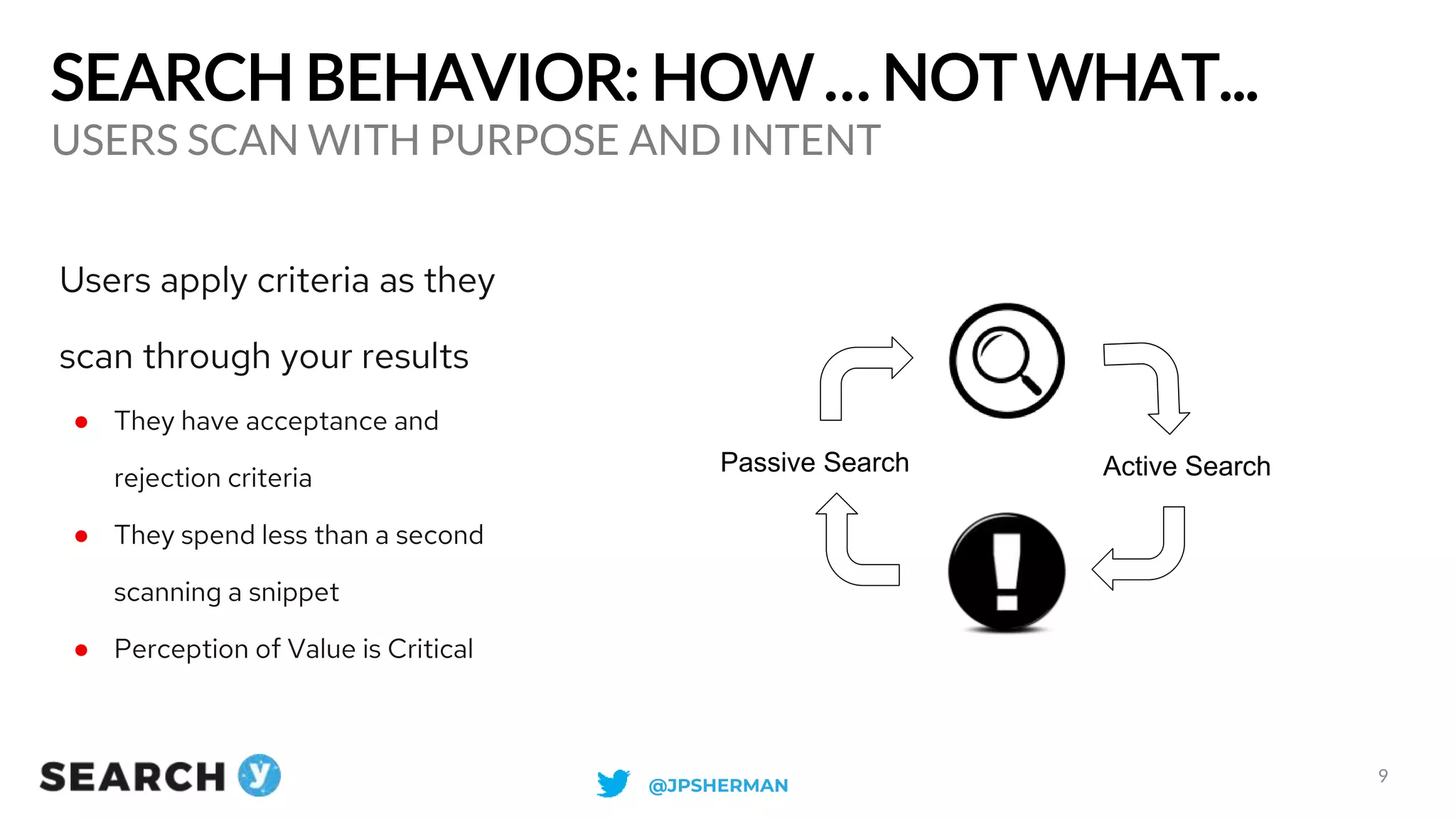

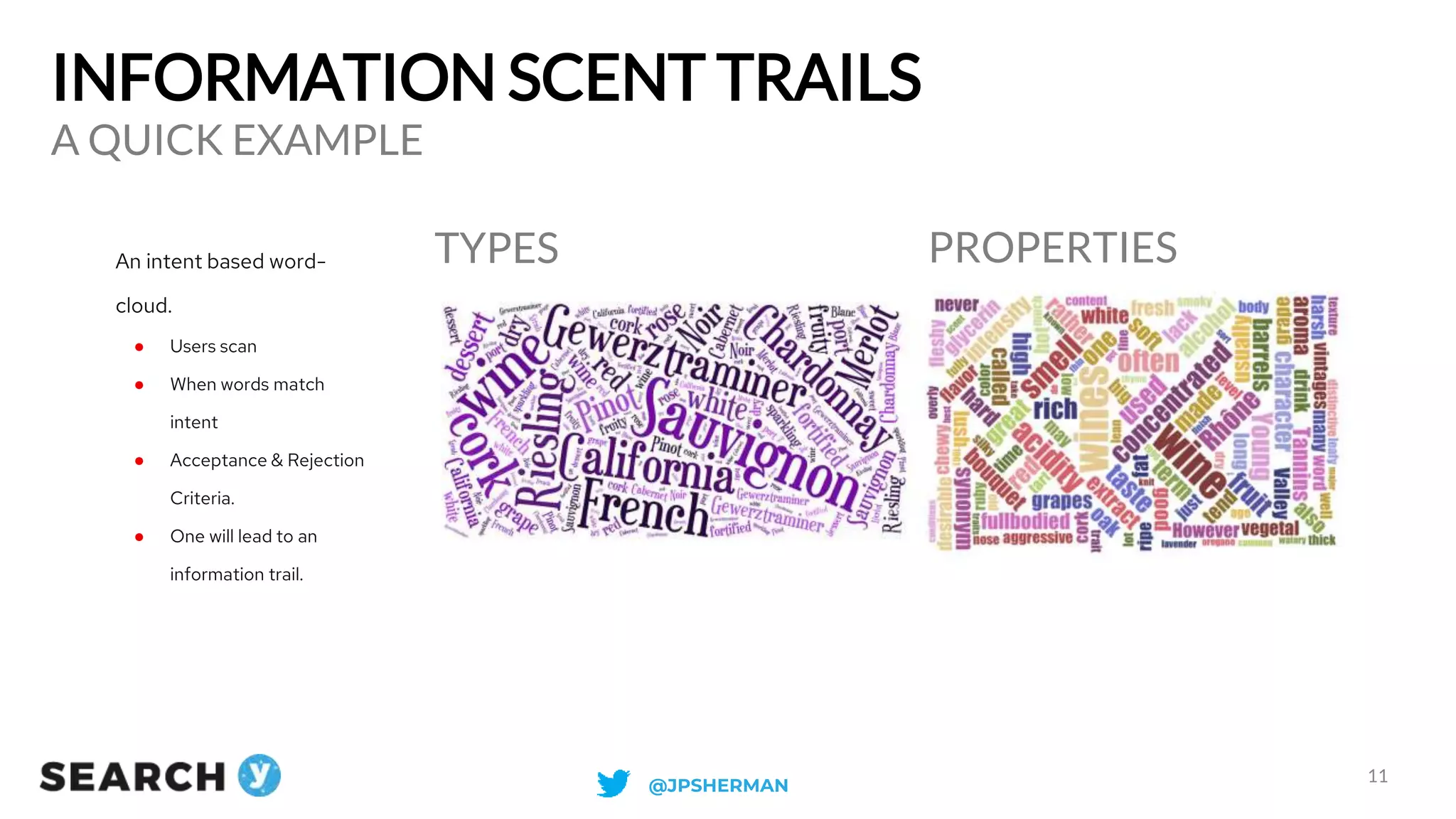

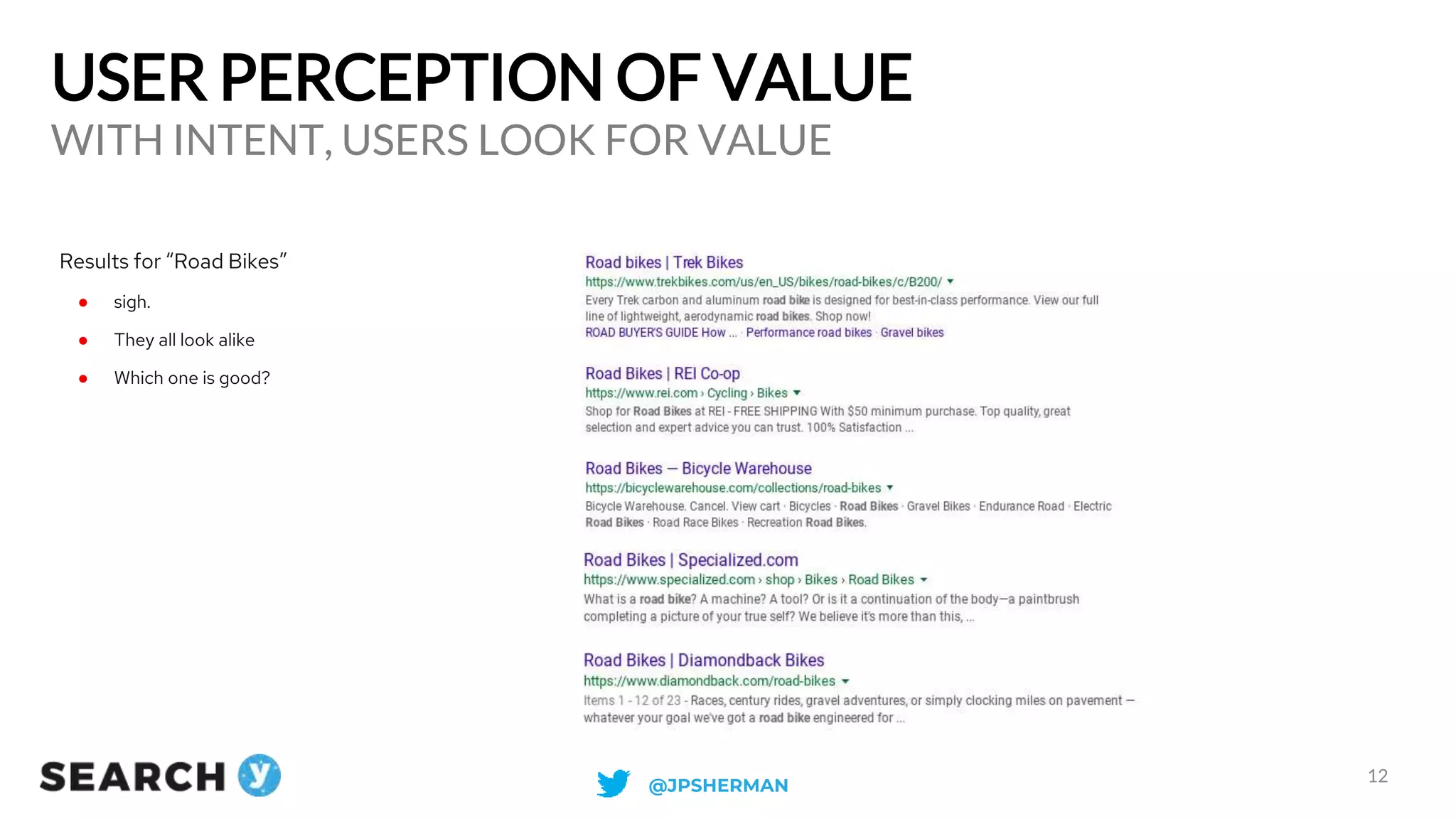

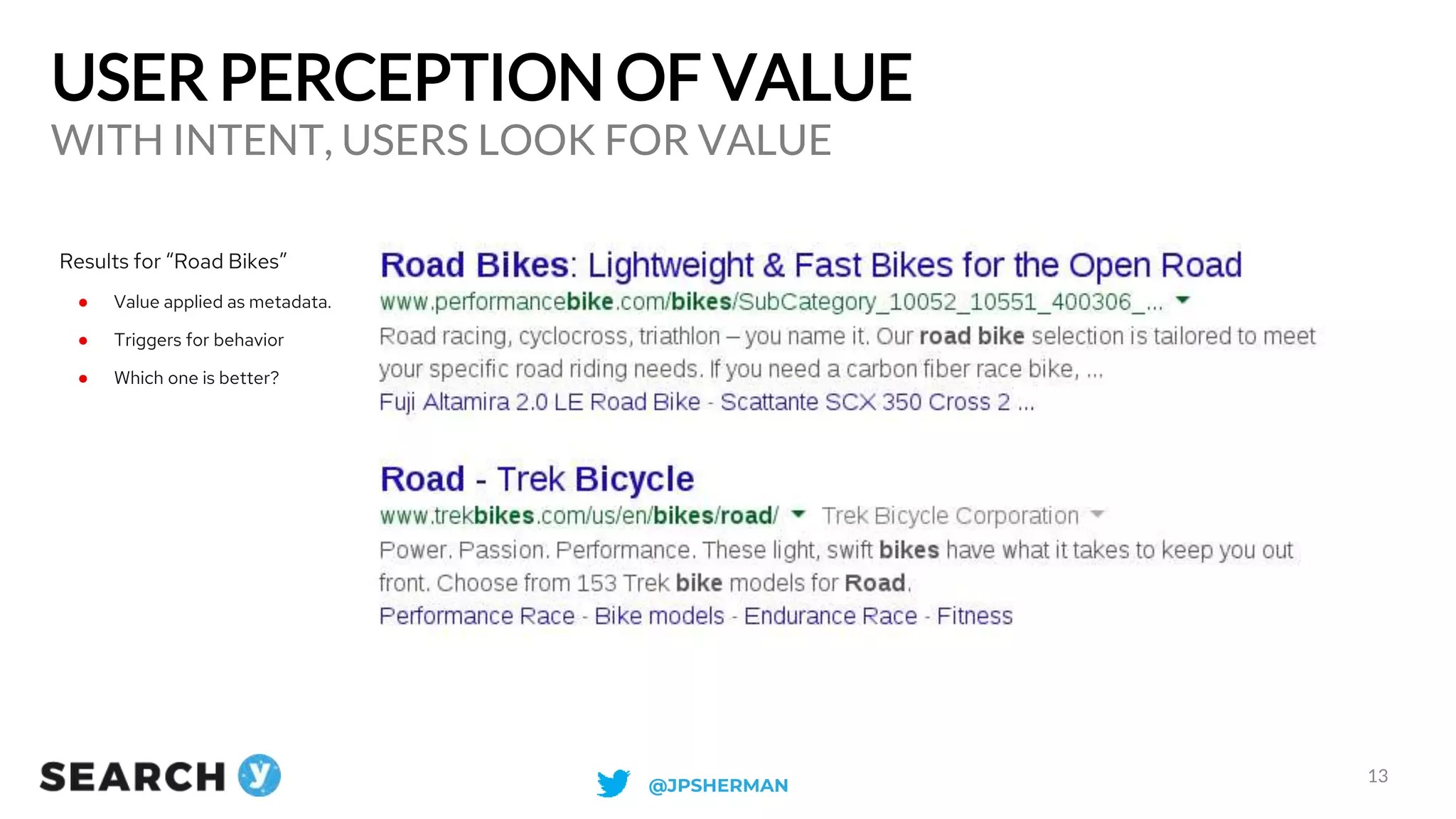

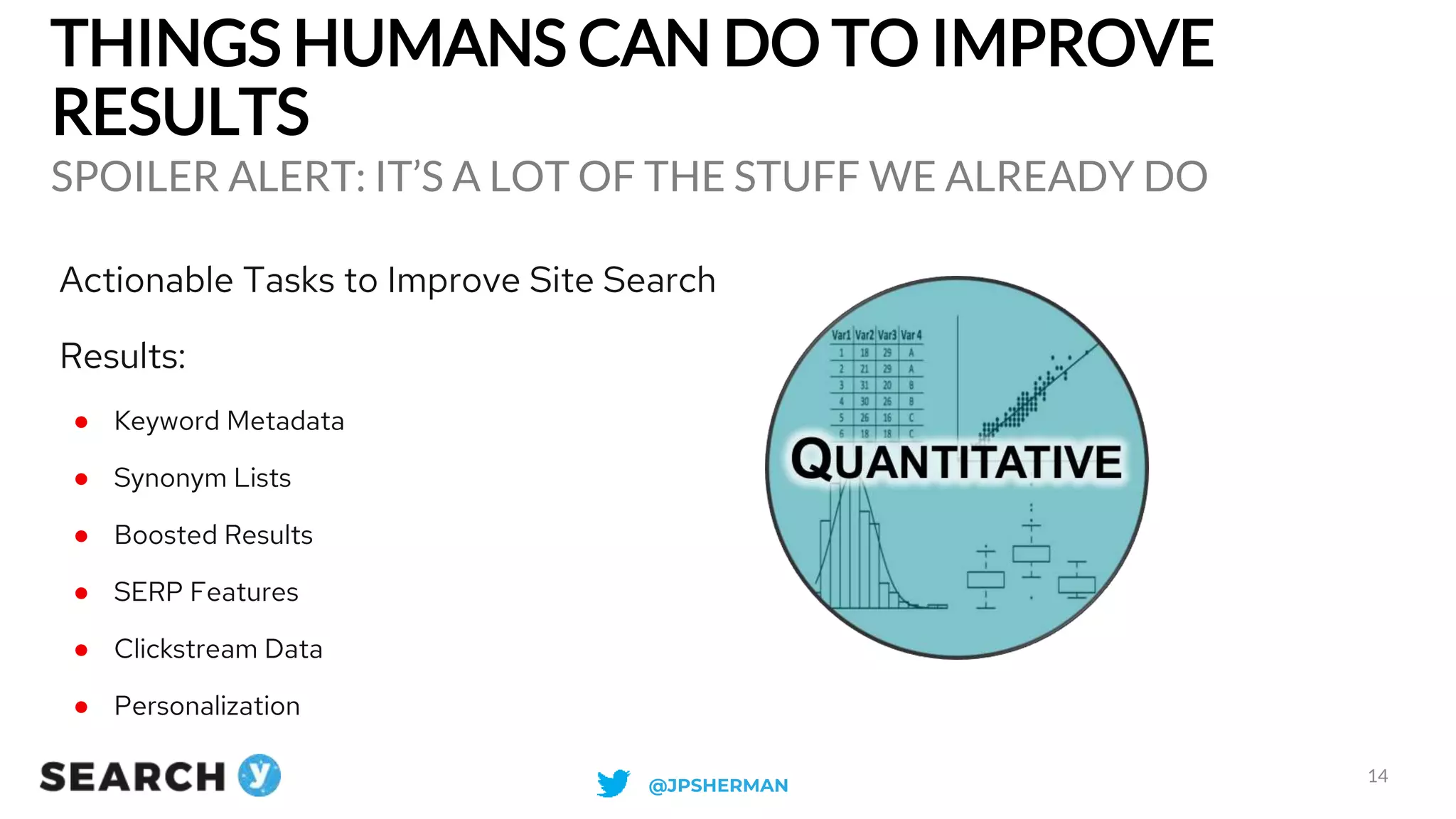

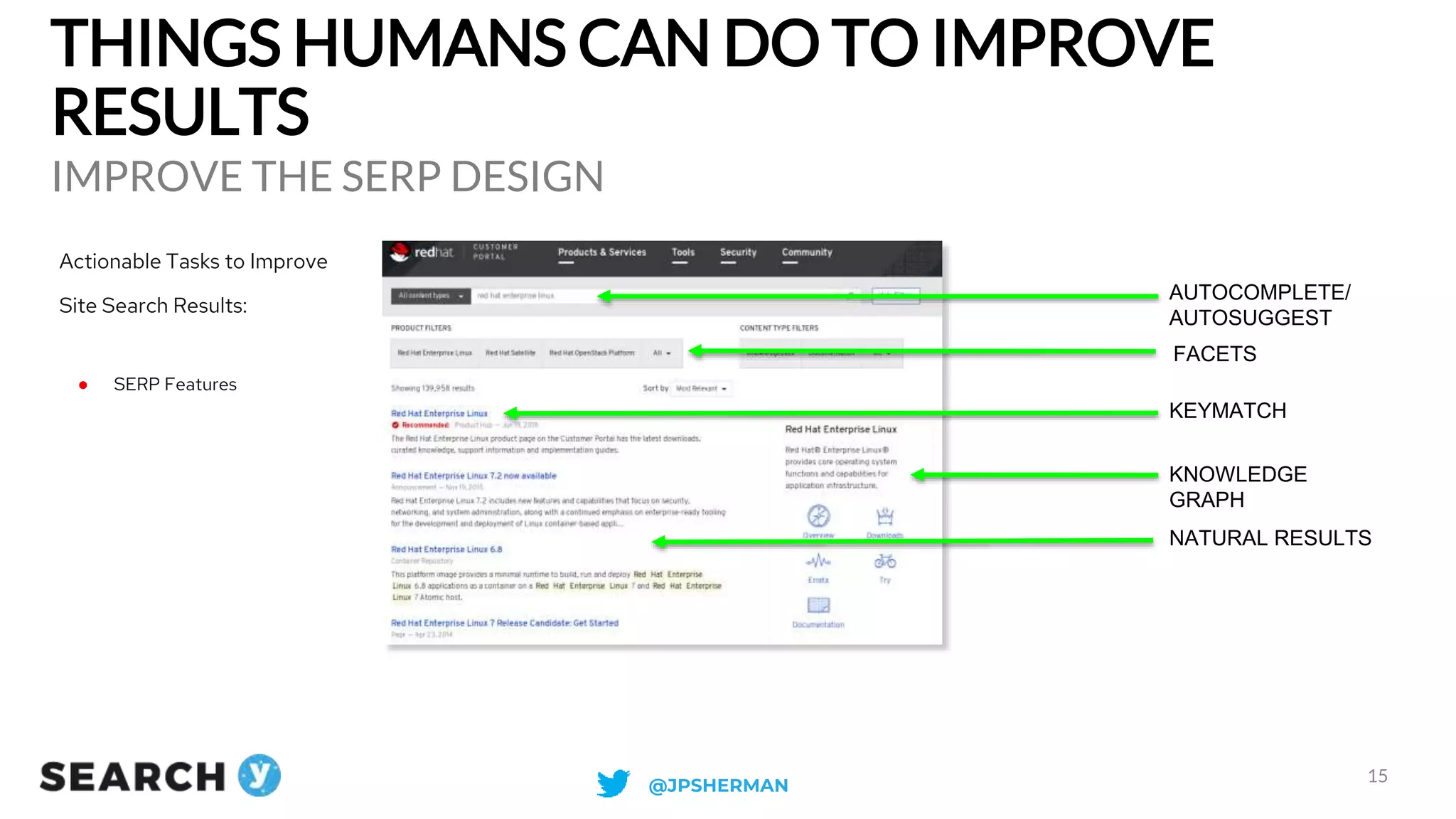

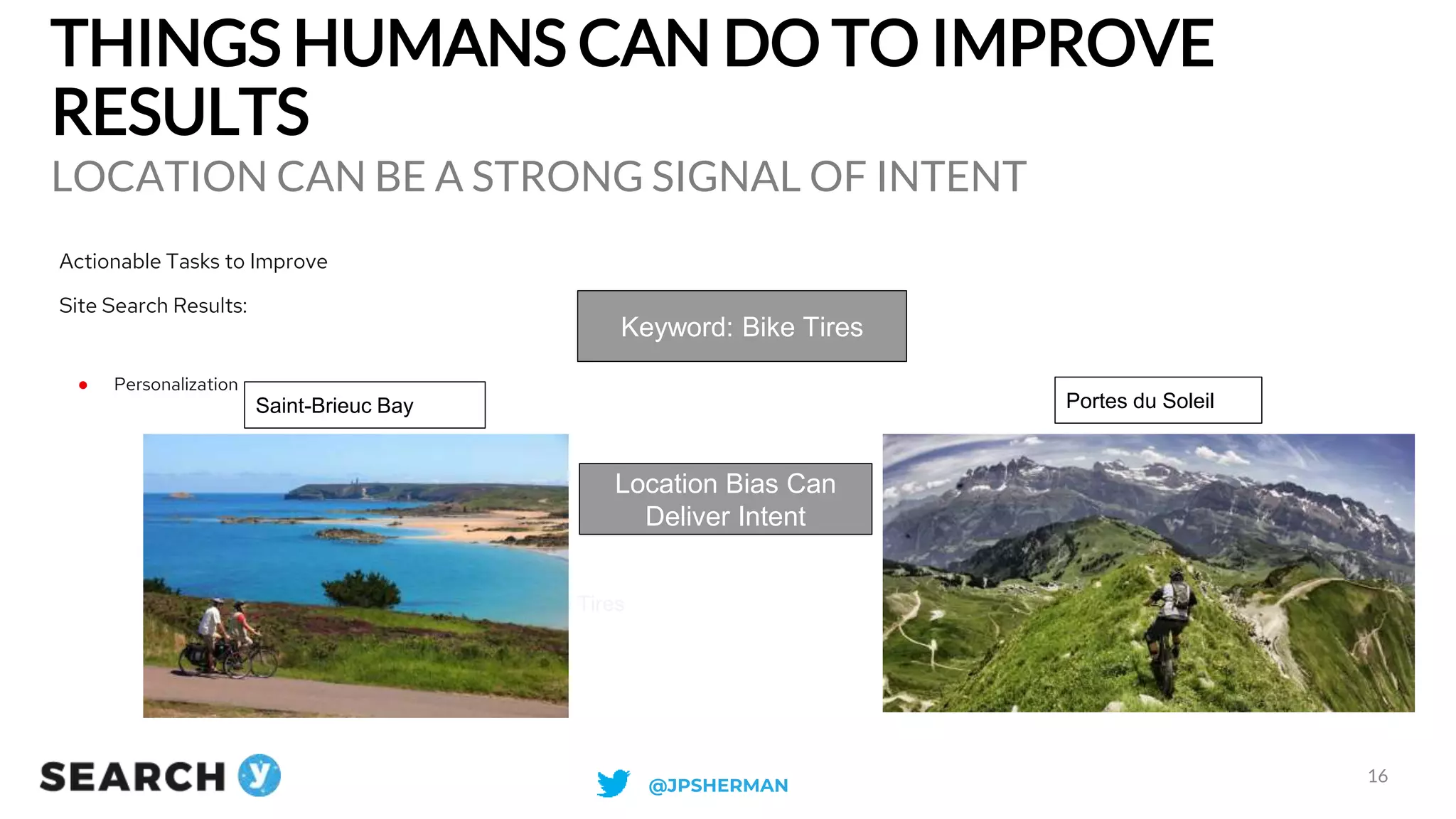

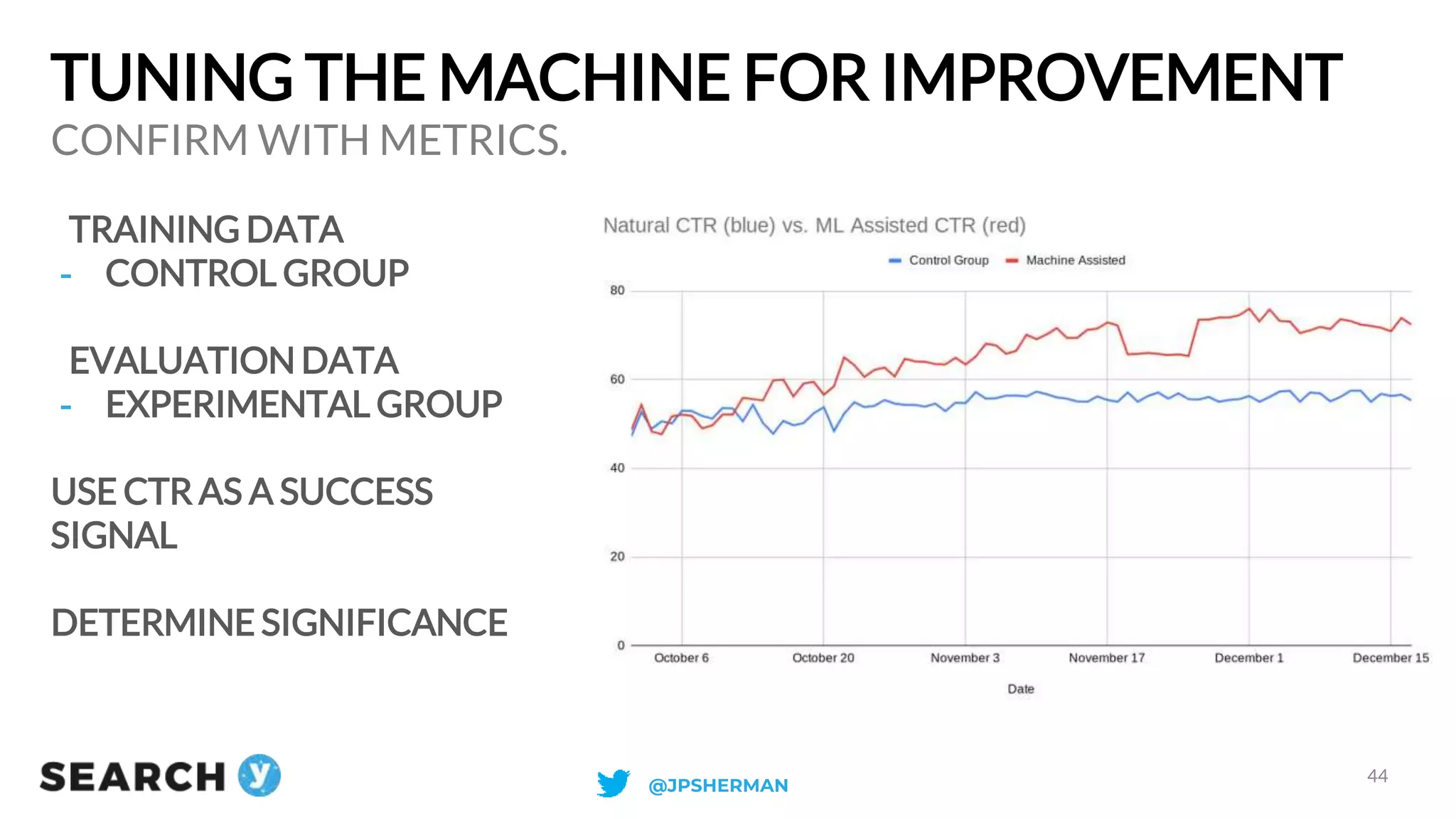

The document discusses why improving site search results matters for user experience and conversion rates. Key strategies include understanding user behavior, leveraging metadata, and refining search engine results pages (SERPs). It emphasizes that organizations need to adapt their search functionality to match user intent and improve overall findability.