If you're leading a modernization project, the stakes are high, and the pressure’s real. This guide was built with that in mind. It’s for CIOs, CTOs, and IT leads who need clear, honest advice before diving into a COBOL-to-Java migration.

We’ve stripped out the jargon and the inflated promises. Instead, you’ll get practical strategies, the missteps to watch out for, and real-world wins, like how one global automaker boosted batch performance by 67%.

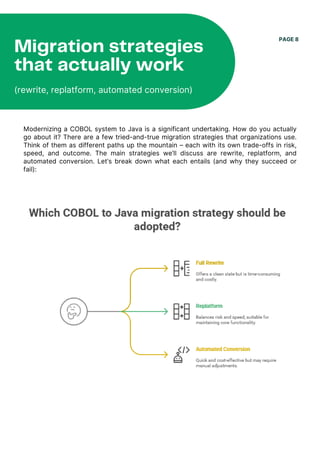

Whether you're leaning toward a full rewrite, a replatform, or automated tools, this guide will help you stay focused, avoid surprises, and keep your team moving in the right direction.