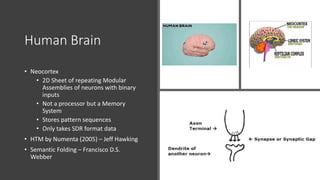

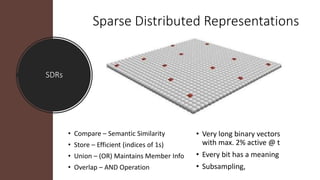

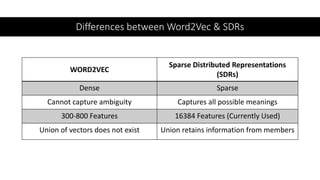

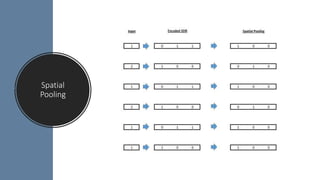

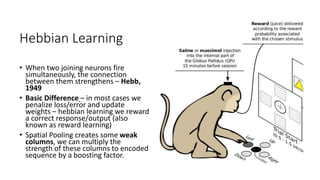

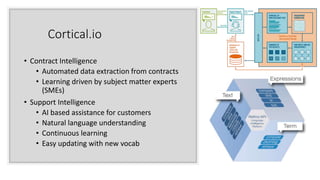

The document discusses Hierarchical Temporal Memory (HTM), developed by Numenta. HTM is inspired by the neocortex of the human brain and learns through sparse distributed representations (SDRs), in which semantically similar inputs share active bits. The document contrasts HTM's approach with Word2Vec, highlighting differences in how the two handle ambiguity, how they represent data, and how they adjust weights, with HTM relying on Hebbian learning. It also touches on automated data extraction and AI support systems in contract intelligence.
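The two HTM ideas named above can be illustrated with a minimal sketch. Here an SDR is modeled as a set of active bit indices, semantic similarity is measured as the overlap (number of shared active bits), and a Hebbian-style rule raises the permanence of synapses whose inputs were active and lowers the rest. The function names `overlap` and `hebbian_update`, the `inc`/`dec` values, and the toy word SDRs are all hypothetical illustrations, not Numenta's API or parameters. Real SDRs are far wider (often thousands of bits with only a small fraction active).

```python
from typing import Dict, Set


def overlap(a: Set[int], b: Set[int]) -> int:
    """Similarity of two SDRs = count of active bits they share."""
    return len(a & b)


def hebbian_update(permanences: Dict[int, float], active_inputs: Set[int],
                   inc: float = 0.05, dec: float = 0.01) -> Dict[int, float]:
    """Hebbian-style rule (illustrative values): strengthen synapses whose
    input bit was active, weaken the others, and clamp to [0.0, 1.0]."""
    updated = {}
    for bit, p in permanences.items():
        if bit in active_inputs:
            updated[bit] = min(1.0, p + inc)  # "fire together, wire together"
        else:
            updated[bit] = max(0.0, p - dec)  # unused connections decay
    return updated


# Hypothetical toy SDRs (active bit indices) for three words.
cat = {3, 17, 42, 99, 256, 511, 1024, 1500}
dog = {3, 17, 42, 99, 300, 600, 1100, 1600}
car = {5, 20, 77, 400, 800, 1200, 1700, 2000}

print(overlap(cat, dog))  # 4 shared bits: semantically related
print(overlap(cat, car))  # 0 shared bits: unrelated

# One learning step on a segment connected to bits 3, 17 and 256,
# when only bits 3 and 17 are active in the input.
print(hebbian_update({3: 0.5, 17: 0.5, 256: 0.5}, {3, 17}))
```

Word2Vec, by contrast, represents words as dense real-valued vectors that are usually compared with cosine similarity, so no individual dimension carries a shared, interpretable "bit" of meaning the way an SDR's active bits do.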