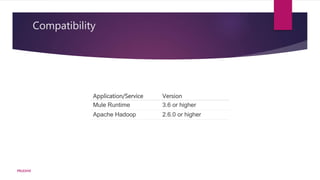

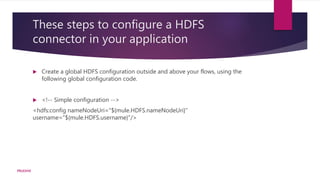

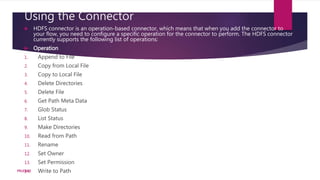

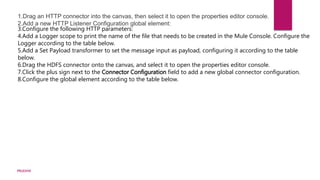

The HDFS connector provides bi-directional integration between Mule applications and the Hadoop Distributed File System (HDFS) of an Apache Hadoop instance. To use the connector, you must define a global HDFS configuration containing parameters such as `nameNodeUri` and `username`. The connector supports operations such as writing, reading, and deleting files in HDFS. The following example flow creates a file in HDFS: it reads the `msg` query parameter of an incoming HTTP request, sets it as the message payload, and writes that payload to the HDFS path given by the `path` query parameter.
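A global configuration of this kind might look like the minimal sketch below. It assumes a Mule 3 HDFS connector version whose global element is `hdfs:config` (the element name may differ between connector releases, so check the schema of the version you install). The property placeholders `${hdfs.nameNodeUri}` and `${hdfs.username}` are hypothetical. The `name` is set to `hdfs-conf` so that it matches the `config-ref` used by the `hdfs:write` operation in the example flow.

```xml
<!-- Hypothetical sketch: global HDFS configuration, declared at the top level of <mule>. -->
<!-- ASSUMPTION: element name hdfs:config; verify against your connector version's schema. -->
<!-- nameNodeUri: URI of the HDFS NameNode, in the form hdfs://<host>:<port> -->
<!-- username: the Hadoop user identity the connector uses when accessing HDFS -->
<hdfs:config name="hdfs-conf"
             nameNodeUri="${hdfs.nameNodeUri}"
             username="${hdfs.username}"
             doc:name="HDFS Configuration"/>
```

The placeholders must be resolved at startup, for example from a properties file loaded with Spring's `context:property-placeholder`, or replaced with literal values for a quick local test.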

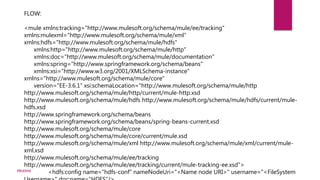

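```xml
<mule xmlns="http://www.mulesoft.org/schema/mule/core"
      xmlns:http="http://www.mulesoft.org/schema/mule/http"
      xmlns:hdfs="http://www.mulesoft.org/schema/mule/hdfs"
      xmlns:doc="http://www.mulesoft.org/schema/mule/documentation"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      xsi:schemaLocation="
        http://www.mulesoft.org/schema/mule/core http://www.mulesoft.org/schema/mule/core/current/mule.xsd
        http://www.mulesoft.org/schema/mule/http http://www.mulesoft.org/schema/mule/http/current/mule-http.xsd
        http://www.mulesoft.org/schema/mule/hdfs http://www.mulesoft.org/schema/mule/hdfs/current/mule-hdfs.xsd">

    <!-- Listens on http://localhost:8090/filecreate -->
    <http:listener-config name="HTTP_Listener_Configuration" host="localhost" port="8090"
                          basePath="filecreate" doc:name="HTTP Listener Configuration"/>

    <http:connector name="HTTP_HTTPS" cookieSpec="netscape" validateConnections="true"
                    sendBufferSize="0" receiveBufferSize="0" receiveBacklog="0"
                    clientSoTimeout="10000" serverSoTimeout="10000" socketSoLinger="0"
                    doc:name="HTTP-HTTPS"/>

    <!-- The global HDFS configuration "hdfs-conf" referenced below must also be defined in this file. -->

    <flow name="Create_File_Flow" doc:name="Create_File_Flow">
        <!-- Expects two query parameters: path (HDFS target path) and msg (file content) -->
        <http:listener config-ref="HTTP_Listener_Configuration" path="/" doc:name="HTTP"/>

        <logger message="Creating file: #[message.inboundProperties['http.query.params'].path] with message: #[message.inboundProperties['http.query.params'].msg]"
                level="INFO" doc:name="Write to Path Log"/>

        <!-- Use the msg query parameter as the content to write -->
        <set-payload value="#[message.inboundProperties['http.query.params'].msg]"
                     doc:name="Set the message input as payload"/>

        <!-- Write the payload to the HDFS path given by the path query parameter -->
        <hdfs:write config-ref="hdfs-conf"
                    path="#[message.inboundProperties['http.query.params'].path]" doc:name="Write to Path"/>
    </flow>
</mule>
```

PRUDHVI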