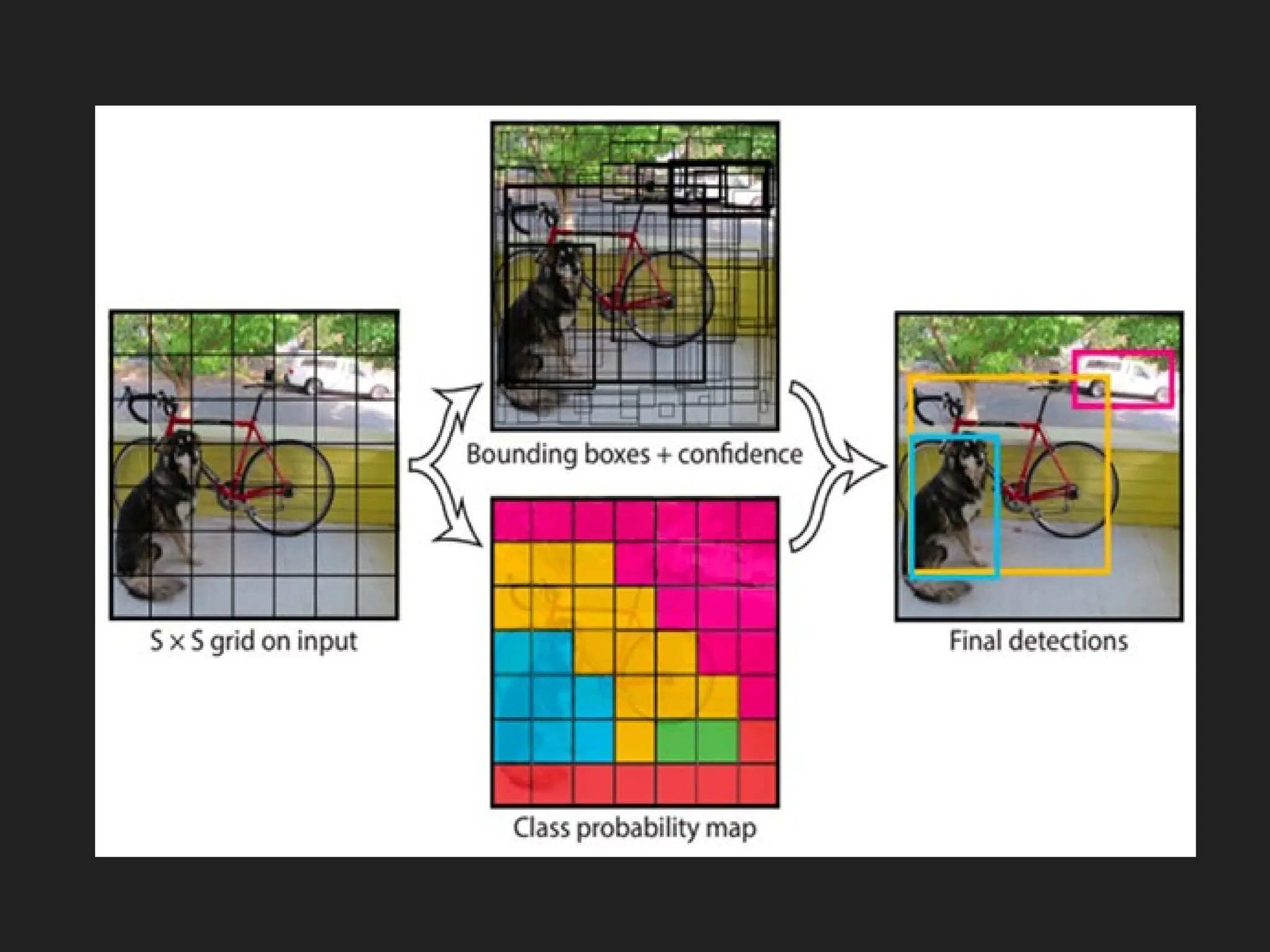

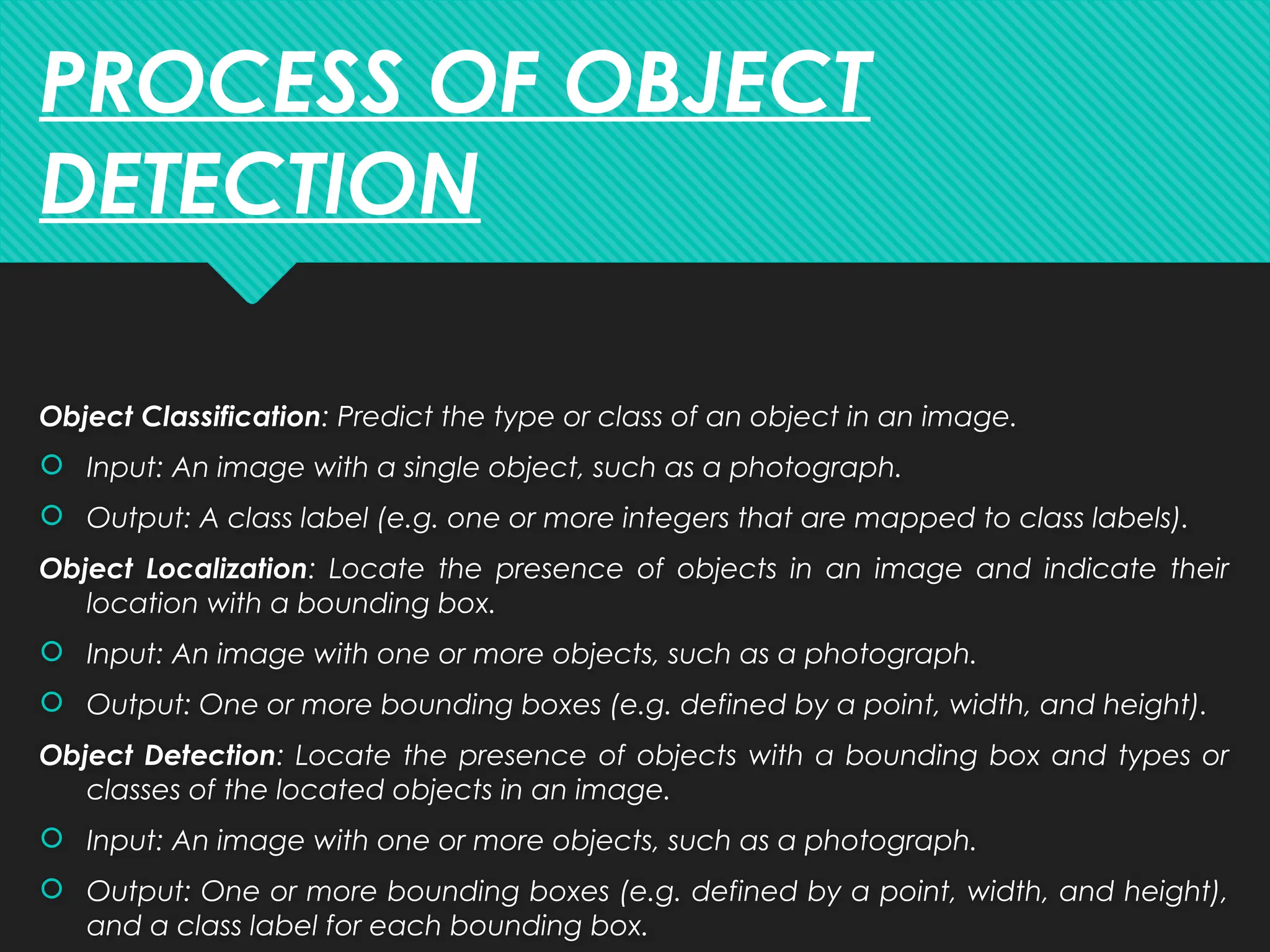

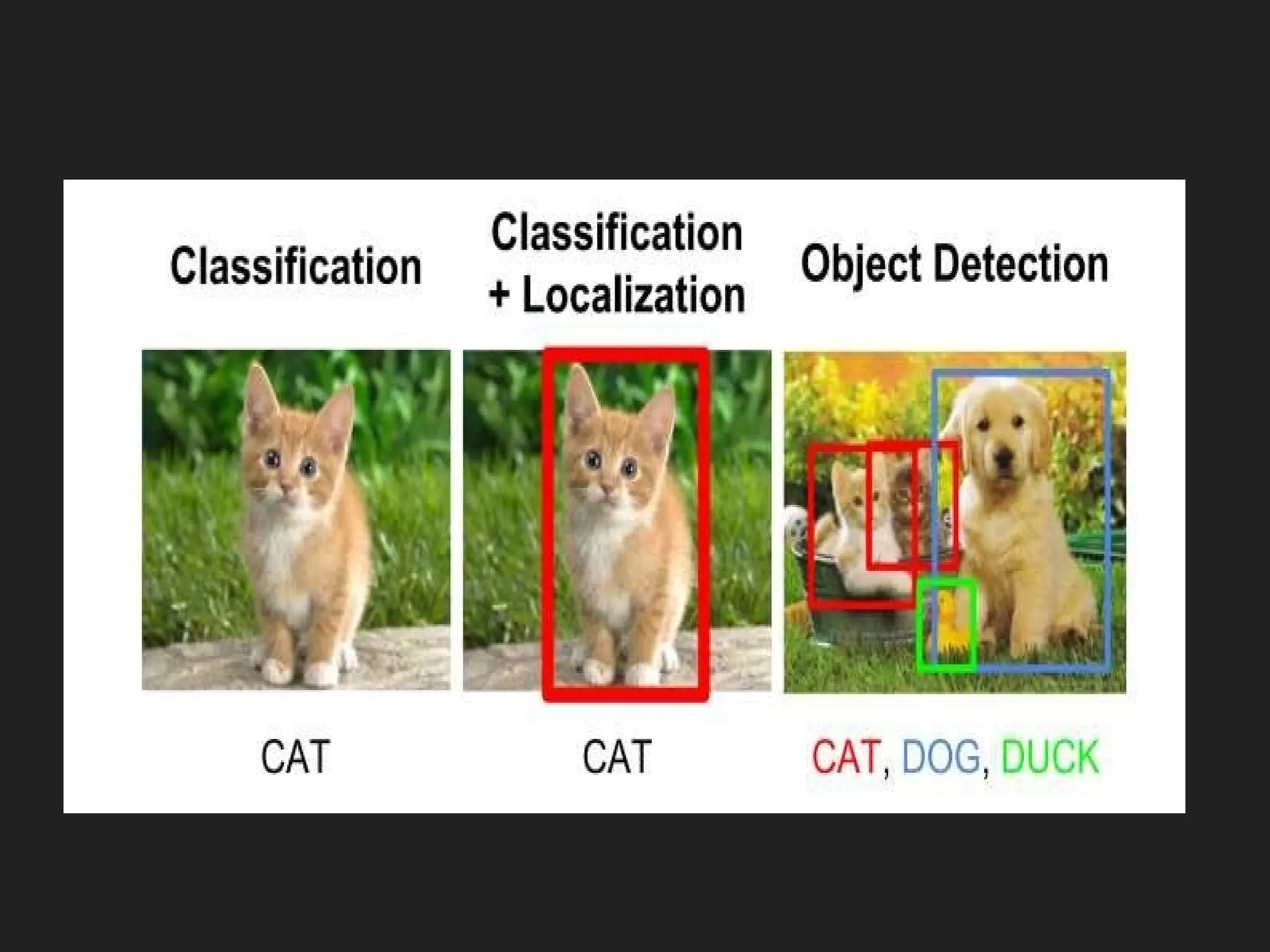

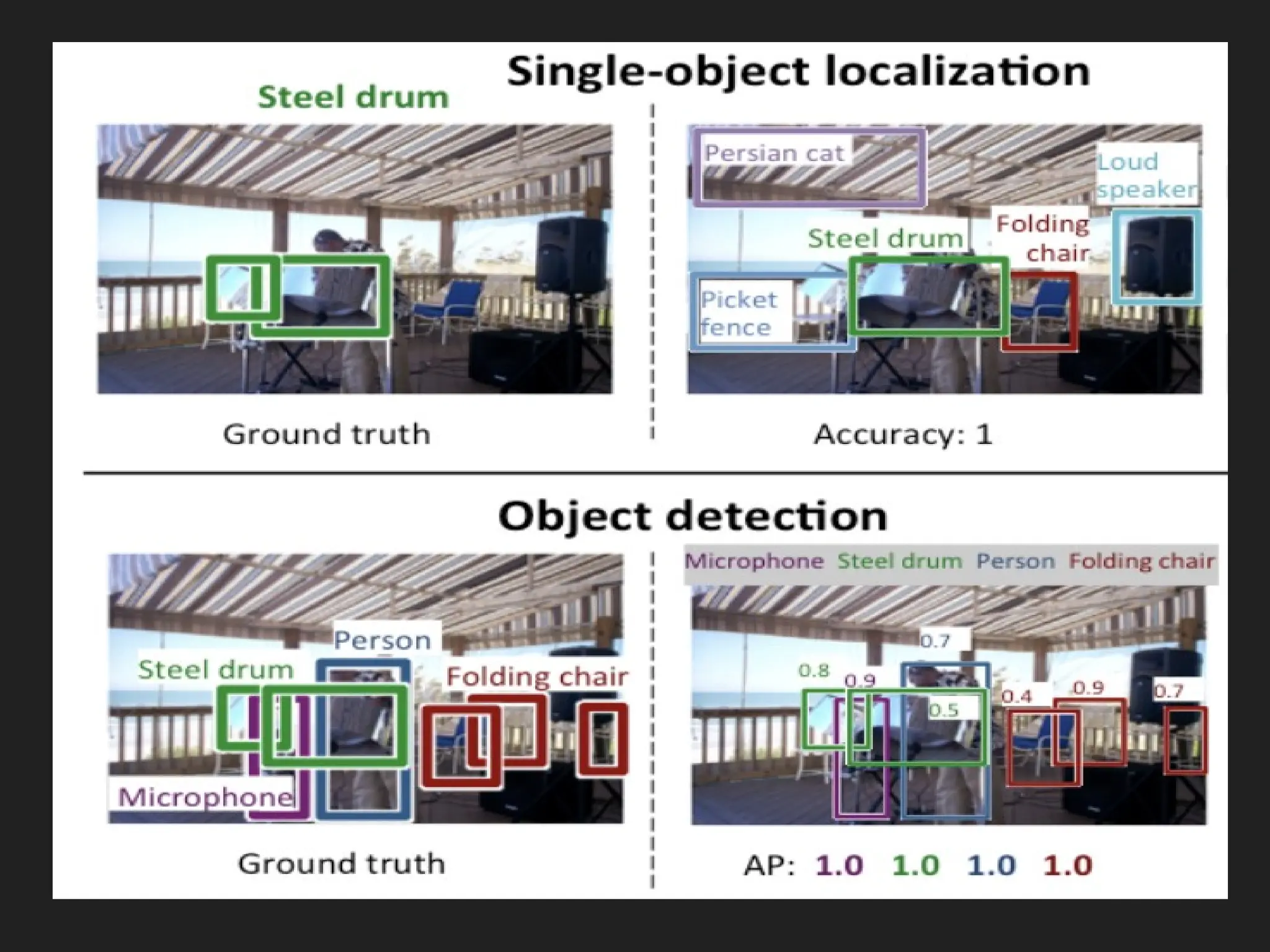

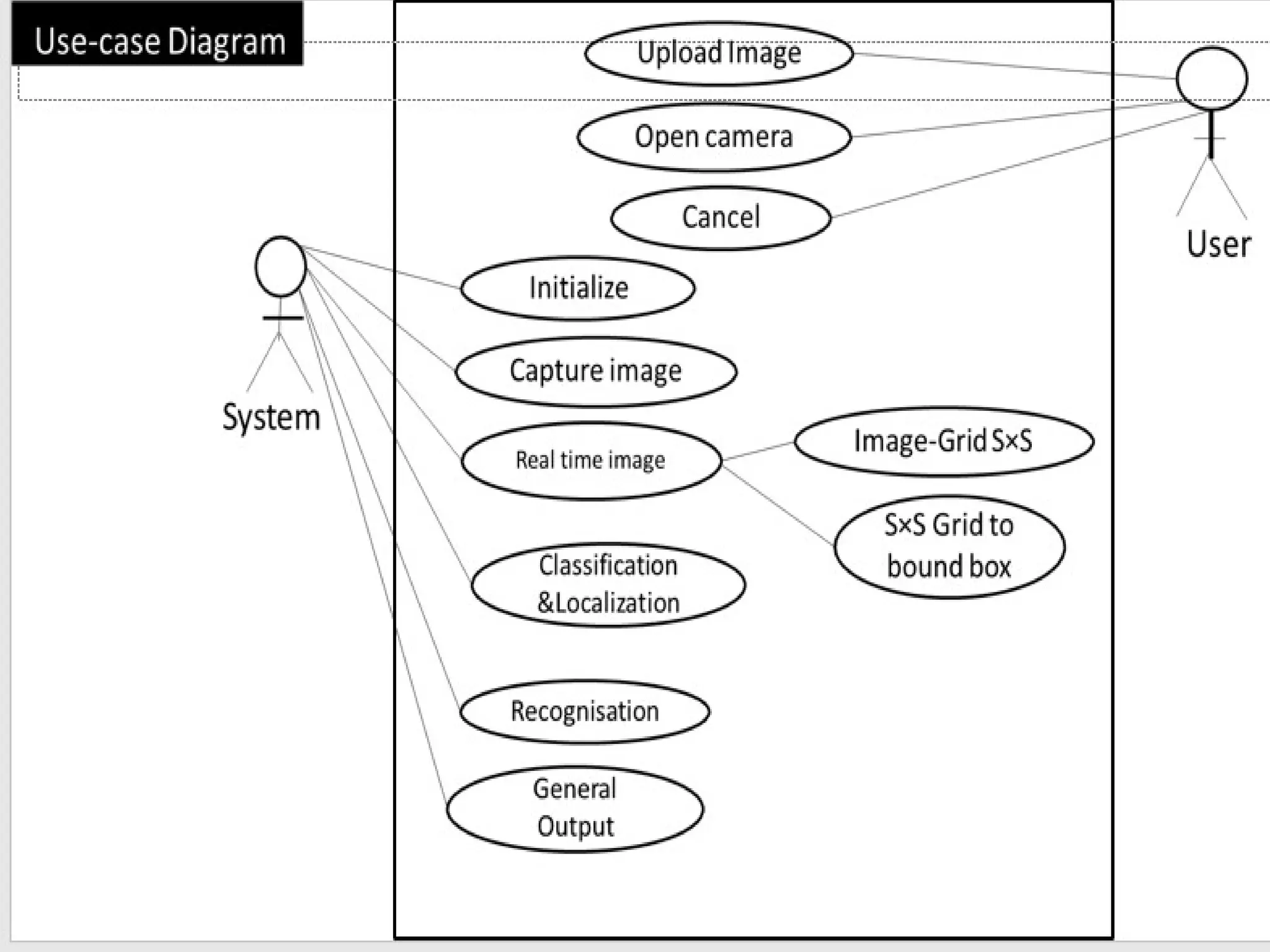

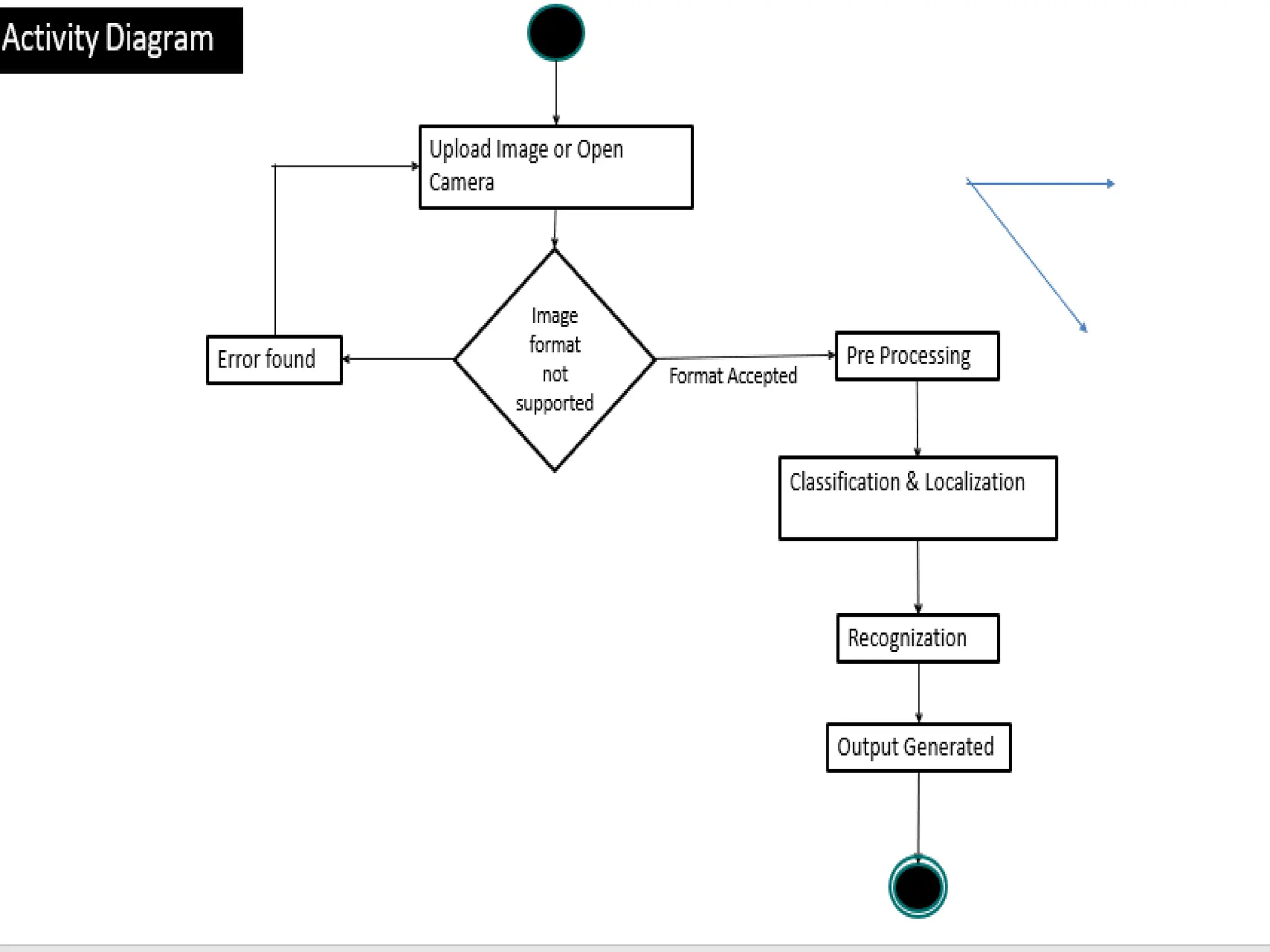

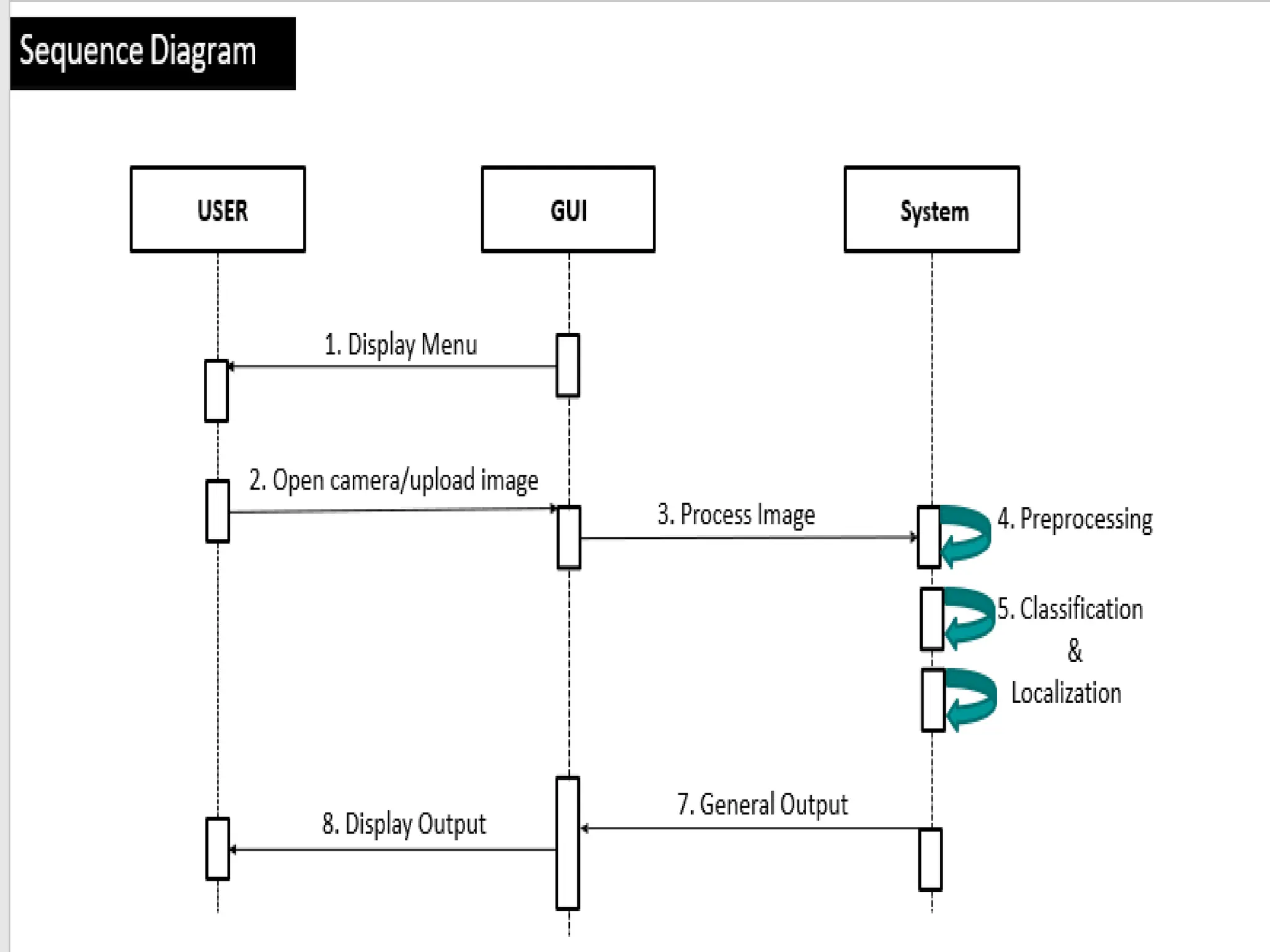

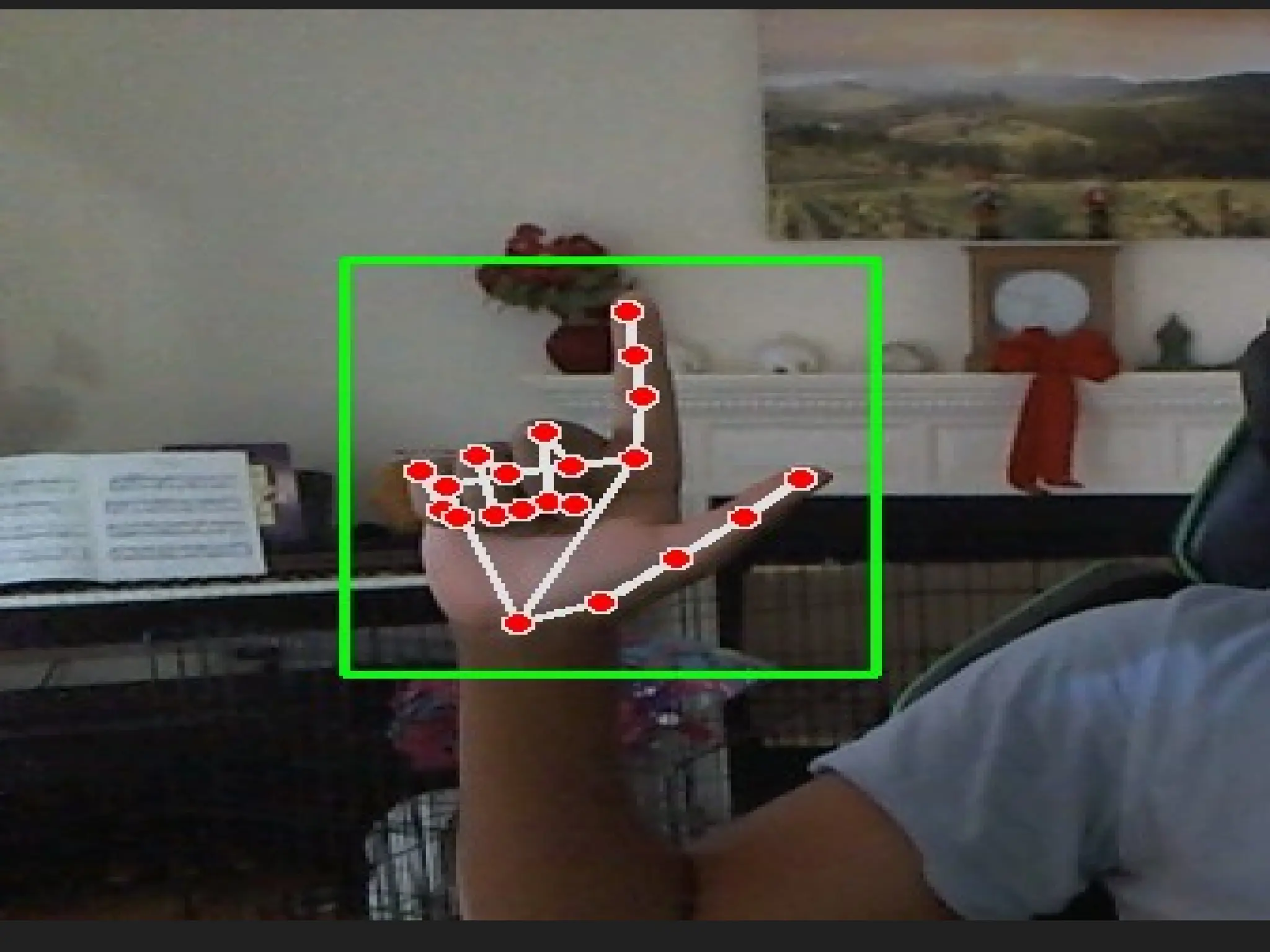

The document outlines a project on end-to-end sign language detection that uses deep learning to recognize gestures and translate them into written or spoken language. The objective is to bridge the communication gap between hearing individuals and those who are deaf or hard of hearing. The approach combines object detection, classification, and localization using the YOLO algorithm. The project is built with Python, OpenCV, and TensorFlow, and it aims to improve communication accessibility in settings such as hospitals and offices.
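To make the localization step more concrete, here is a minimal sketch of intersection over union (IoU) and non-maximum suppression, which YOLO-style detectors commonly use to collapse overlapping candidate boxes into a single detection per gesture. The source does not describe its post-processing, so everything here is an assumption for illustration: the `Detection` type, the sign labels, and the 0.5 threshold are hypothetical, and the code uses plain Python rather than any OpenCV or TensorFlow API.

```python
from typing import List, NamedTuple


class Detection(NamedTuple):
    """One candidate box: corner coordinates, a class label, and a confidence."""
    x1: float
    y1: float
    x2: float
    y2: float
    label: str    # hypothetical sign class, e.g. "hello"
    score: float  # detector confidence in [0, 1]


def iou(a: Detection, b: Detection) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    inter_h = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = inter_w * inter_h
    area_a = (a.x2 - a.x1) * (a.y2 - a.y1)
    area_b = (b.x2 - b.x1) * (b.y2 - b.y1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(
    detections: List[Detection], iou_threshold: float = 0.5
) -> List[Detection]:
    """Keep the highest-scoring box of each group of overlapping same-label boxes."""
    kept: List[Detection] = []
    # Visit boxes from most to least confident.
    for det in sorted(detections, key=lambda d: d.score, reverse=True):
        # Keep a box only if it does not heavily overlap a kept box of the same label.
        if all(det.label != k.label or iou(det, k) < iou_threshold for k in kept):
            kept.append(det)
    return kept


# Hypothetical detector output for one video frame.
candidates = [
    Detection(10, 10, 110, 110, "hello", 0.9),
    Detection(20, 20, 120, 120, "hello", 0.8),     # overlaps the first box
    Detection(200, 200, 300, 300, "thanks", 0.7),  # separate gesture
]
final = non_max_suppression(candidates)
```

In this example the second "hello" box overlaps the first with an IoU of about 0.68, so it is suppressed and `final` holds one box per gesture. A real pipeline would more likely call the equivalent routine provided by its detection framework than reimplement it.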