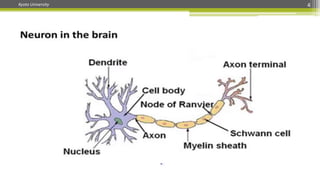

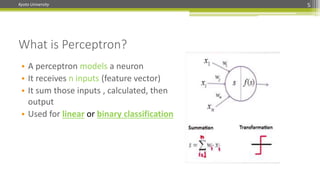

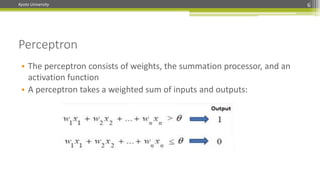

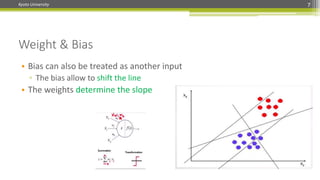

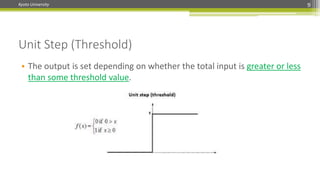

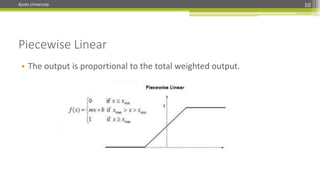

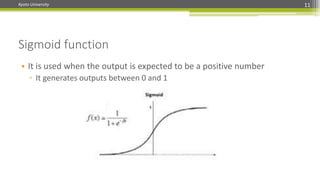

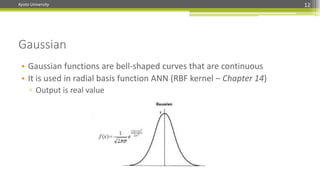

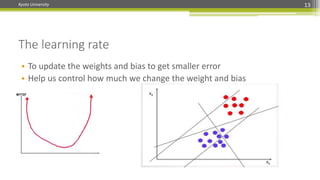

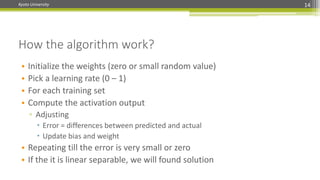

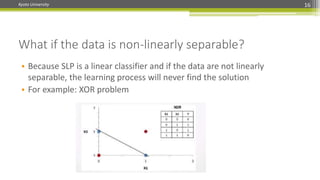

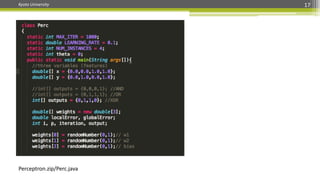

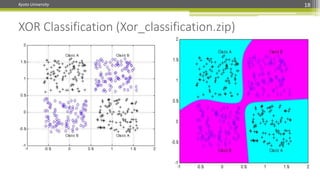

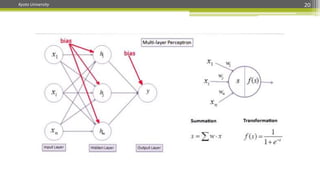

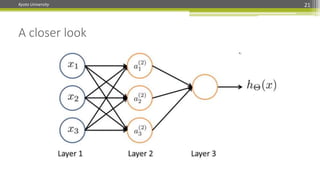

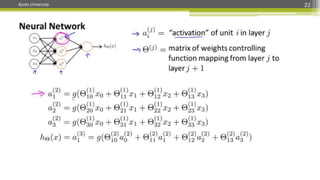

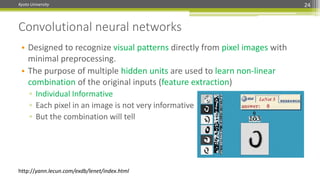

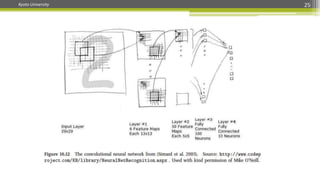

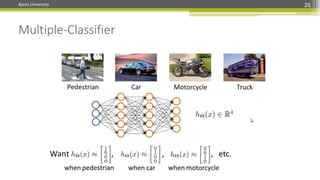

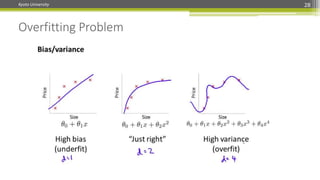

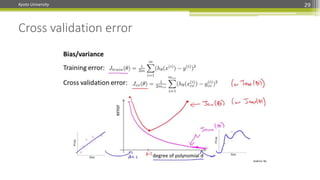

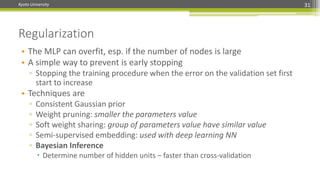

The document discusses feedforward neural networks, specifically multilayer perceptrons (MLPs), and explains their structure, how they compute their outputs, and the activation functions they commonly use. It covers the single-layer perceptron model and its inability to classify data that is not linearly separable, such as the XOR problem, as well as methods for preventing overfitting in MLPs. It also covers how the network's weights are adjusted during training, including the backpropagation algorithm, which is used to reduce the network's error.
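The two central ideas in that summary, that a single perceptron cannot learn XOR while an MLP trained with backpropagation can, can be illustrated with a minimal pure-Python sketch. It is not the method from the slides (which are only images here). The function names, the 4-unit tanh hidden layer, the sigmoid output, the cross-entropy loss, the learning rates, epoch counts, and the seed are all illustrative assumptions.

```python
import math
import random

# Truth tables as ((x1, x2), target). AND is linearly separable; XOR is not.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def step_output(w, b, x):
    """Perceptron output: step activation on the weighted sum."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(data, epochs=50, lr=1.0):
    """Rosenblatt perceptron learning rule, starting from zero weights."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, t in data:
            err = t - step_output(w, b, x)  # 0 if correct, +1 or -1 if wrong
            w[0] += lr * err * x[0]
            w[1] += lr * err * x[1]
            b += lr * err
    return w, b


def perceptron_accuracy(data, w, b):
    return sum(step_output(w, b, x) == t for x, t in data) / len(data)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mlp_forward(params, x):
    """Forward pass: tanh hidden layer, single sigmoid output unit."""
    W1, b1, W2, b2 = params
    h = [math.tanh(w[0] * x[0] + w[1] * x[1] + bj) for w, bj in zip(W1, b1)]
    y = sigmoid(sum(v * hj for v, hj in zip(W2, h)) + b2)
    return h, y


def train_mlp(data, hidden=4, epochs=5000, lr=0.5, seed=0):
    """Full-batch gradient descent with backpropagation, binary cross-entropy loss."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses, n = [], len(data)
    for _ in range(epochs):
        gW1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1, gW2, gb2, loss = [0.0] * hidden, [0.0] * hidden, 0.0, 0.0
        for x, t in data:
            h, y = mlp_forward((W1, b1, W2, b2), x)
            yc = min(max(y, 1e-12), 1 - 1e-12)  # clamp to keep log() finite
            loss -= t * math.log(yc) + (1 - t) * math.log(1 - yc)
            d_out = y - t  # dL/dz for a sigmoid output with cross-entropy loss
            gb2 += d_out
            for j in range(hidden):
                gW2[j] += d_out * h[j]
                d_h = d_out * W2[j] * (1 - h[j] ** 2)  # chain rule through tanh
                gb1[j] += d_h
                gW1[j][0] += d_h * x[0]
                gW1[j][1] += d_h * x[1]
        losses.append(loss / n)
        # Weight update: step against the batch-averaged gradient
        b2 -= lr * gb2 / n
        for j in range(hidden):
            W2[j] -= lr * gW2[j] / n
            b1[j] -= lr * gb1[j] / n
            W1[j][0] -= lr * gW1[j][0] / n
            W1[j][1] -= lr * gW1[j][1] / n
    return (W1, b1, W2, b2), losses


w, b = train_perceptron(AND)
print("perceptron on AND:", perceptron_accuracy(AND, w, b))  # 1.0
w, b = train_perceptron(XOR)
print("perceptron on XOR:", perceptron_accuracy(XOR, w, b))  # always below 1.0
params, losses = train_mlp(XOR)
print("MLP loss: first epoch %.3f, last epoch %.3f" % (losses[0], losses[-1]))
print("MLP outputs:", [round(mlp_forward(params, x)[1], 2) for x, _ in XOR])
```

The perceptron reaches 100% accuracy on AND but can never do so on XOR, because no single line separates XOR's two classes. The hidden layer gives the MLP a nonlinear decision boundary, so its outputs should move toward 0, 1, 1, 0 as the loss falls. A network this small can occasionally settle in a local minimum, so trying another `seed` is a reasonable first step if it does.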