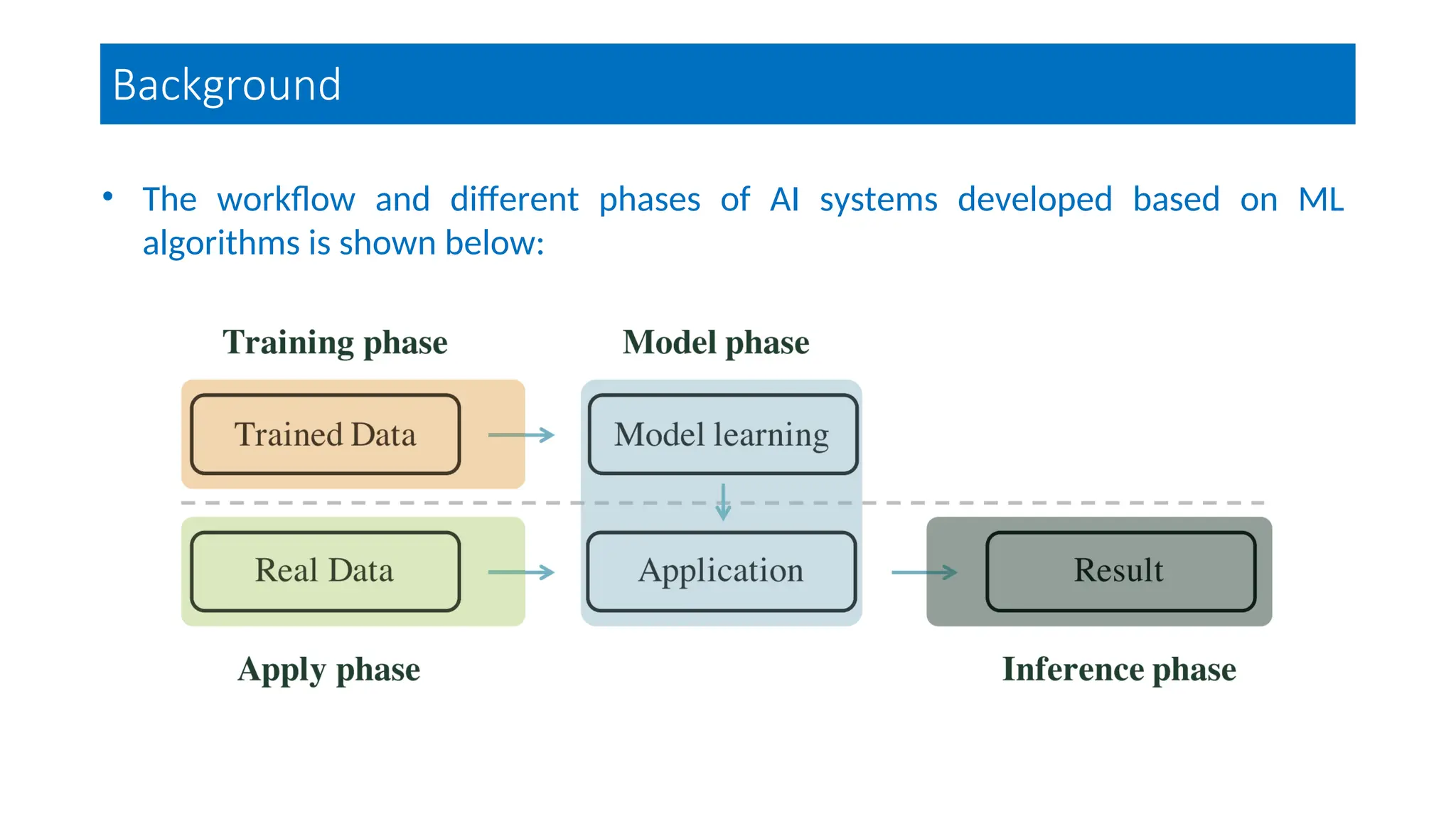

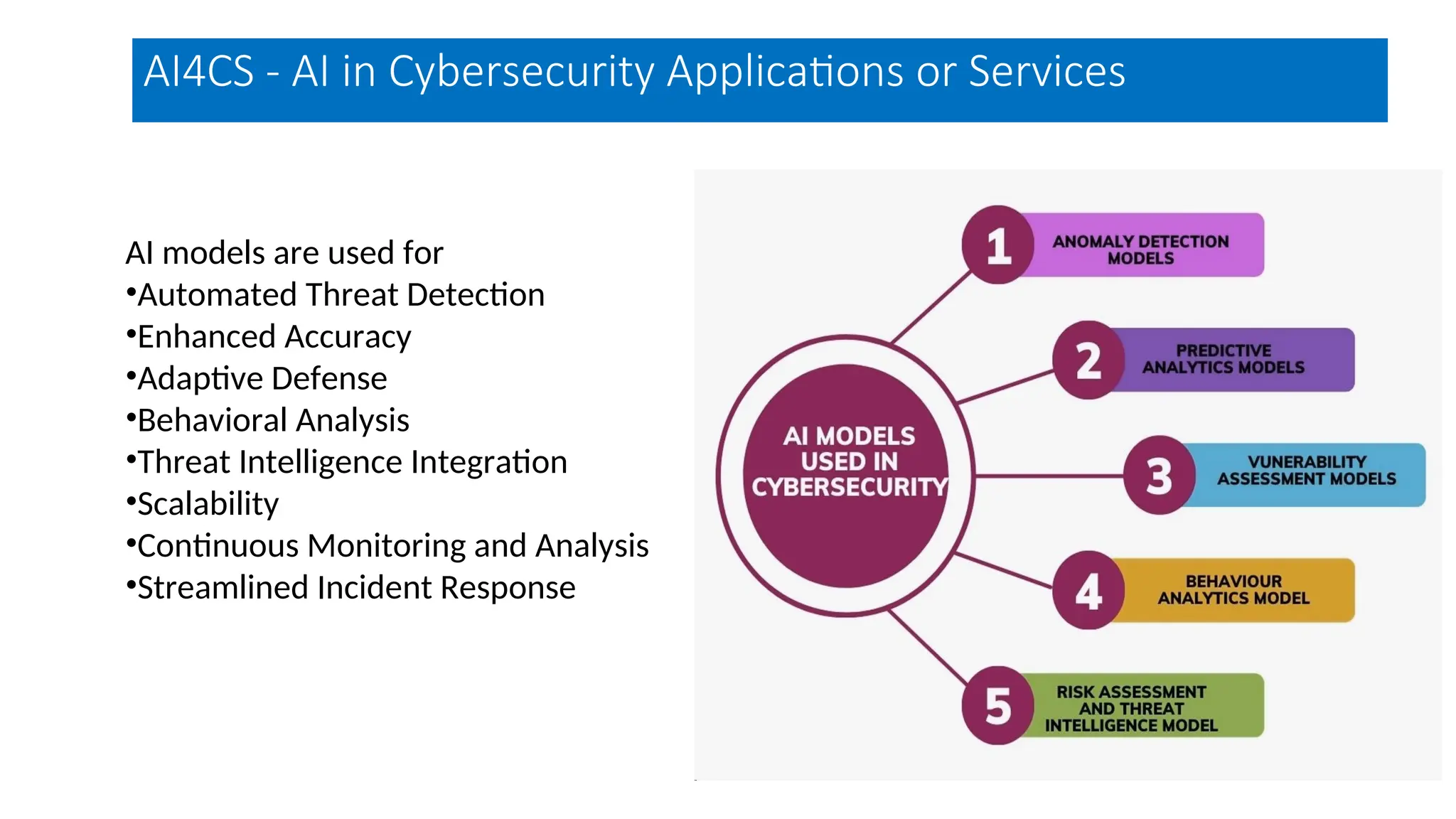

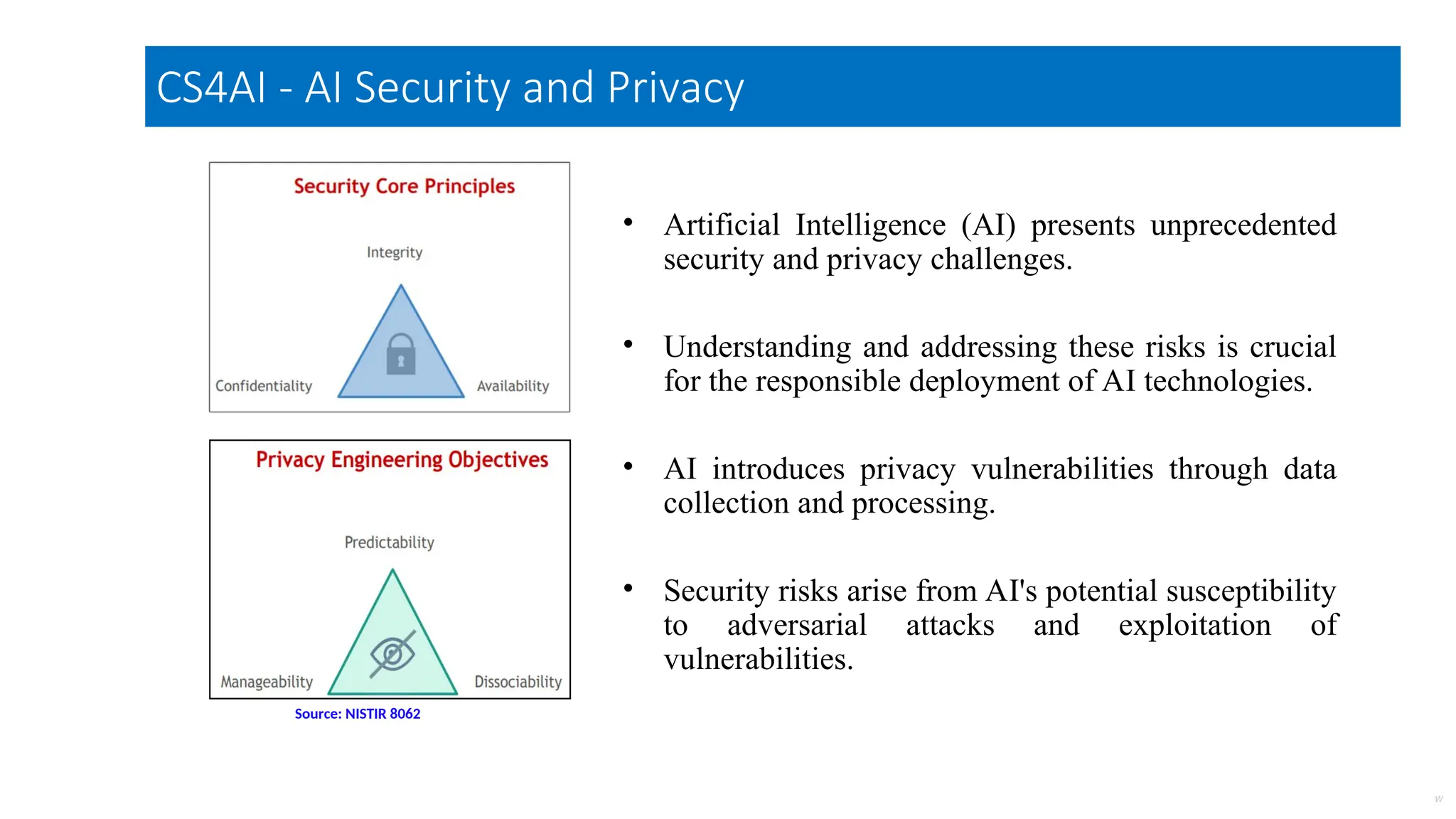

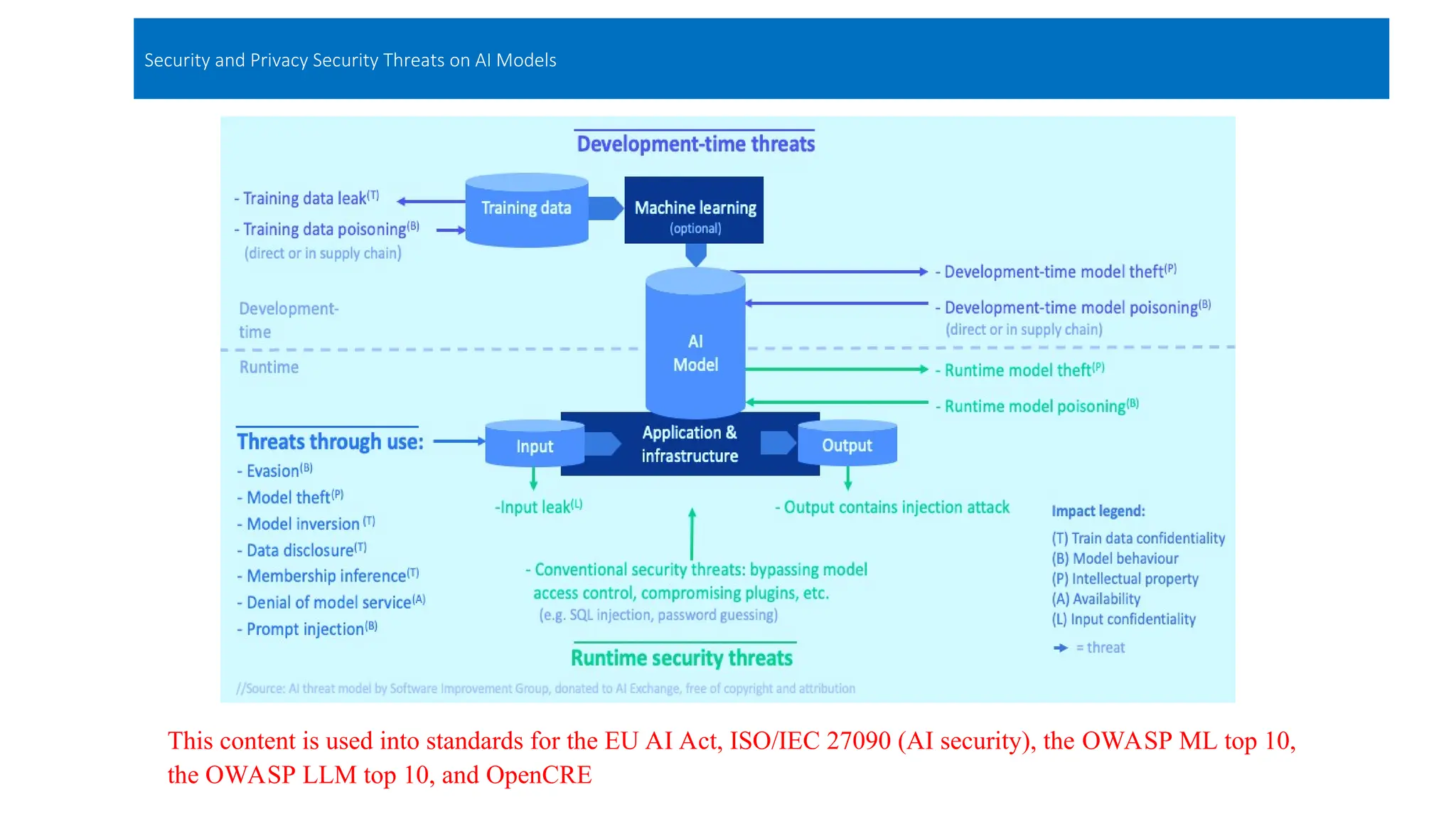

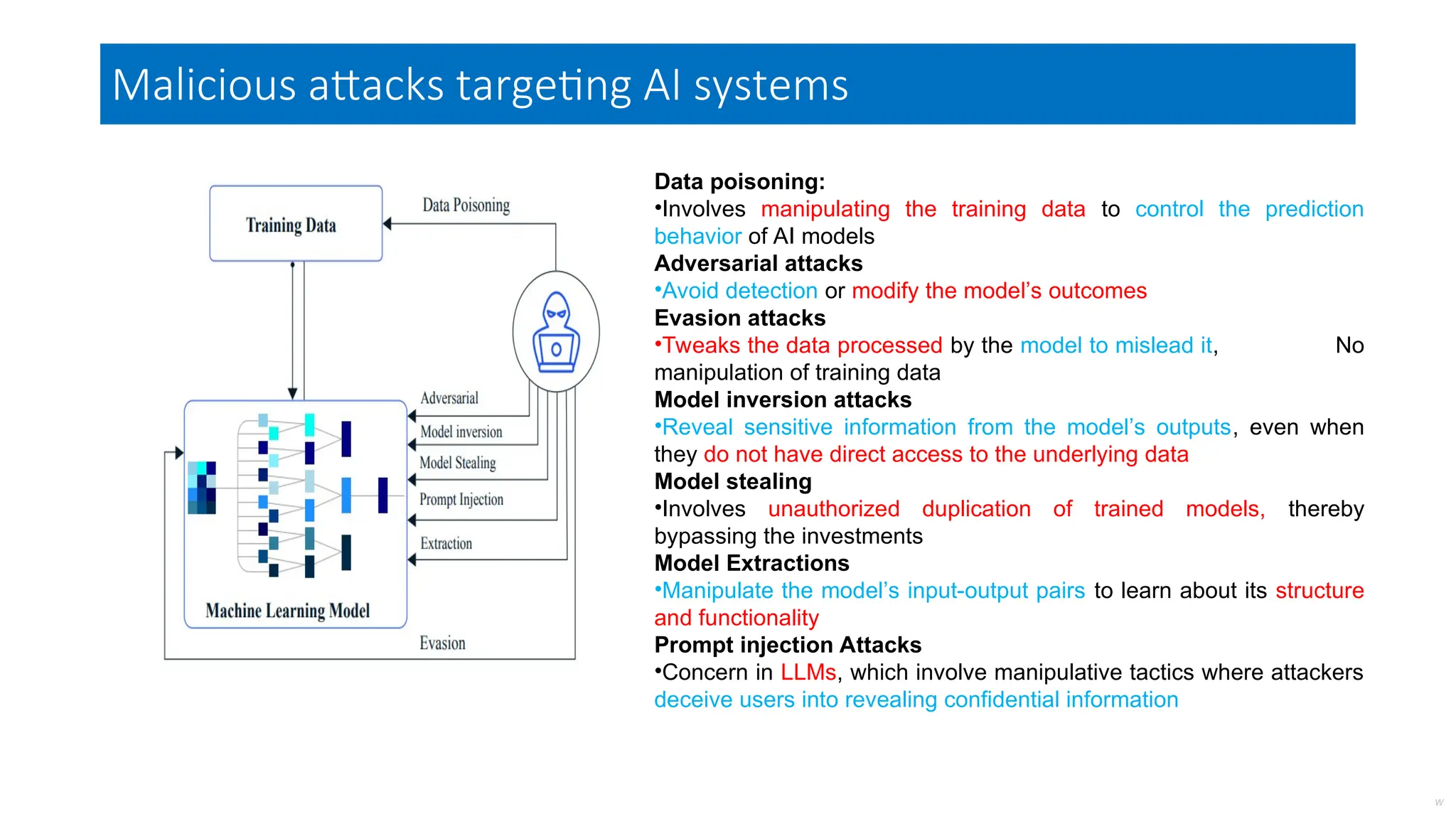

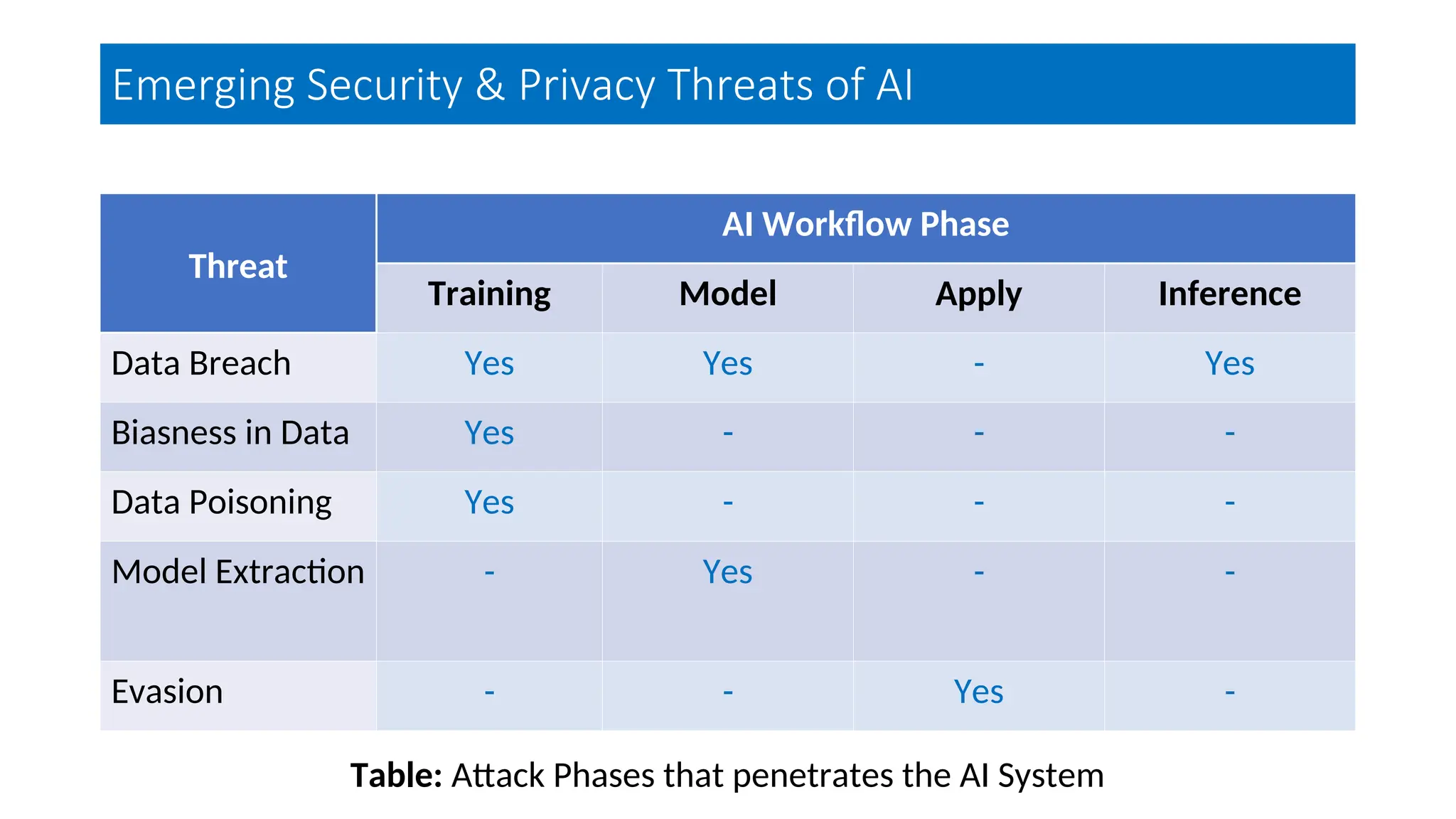

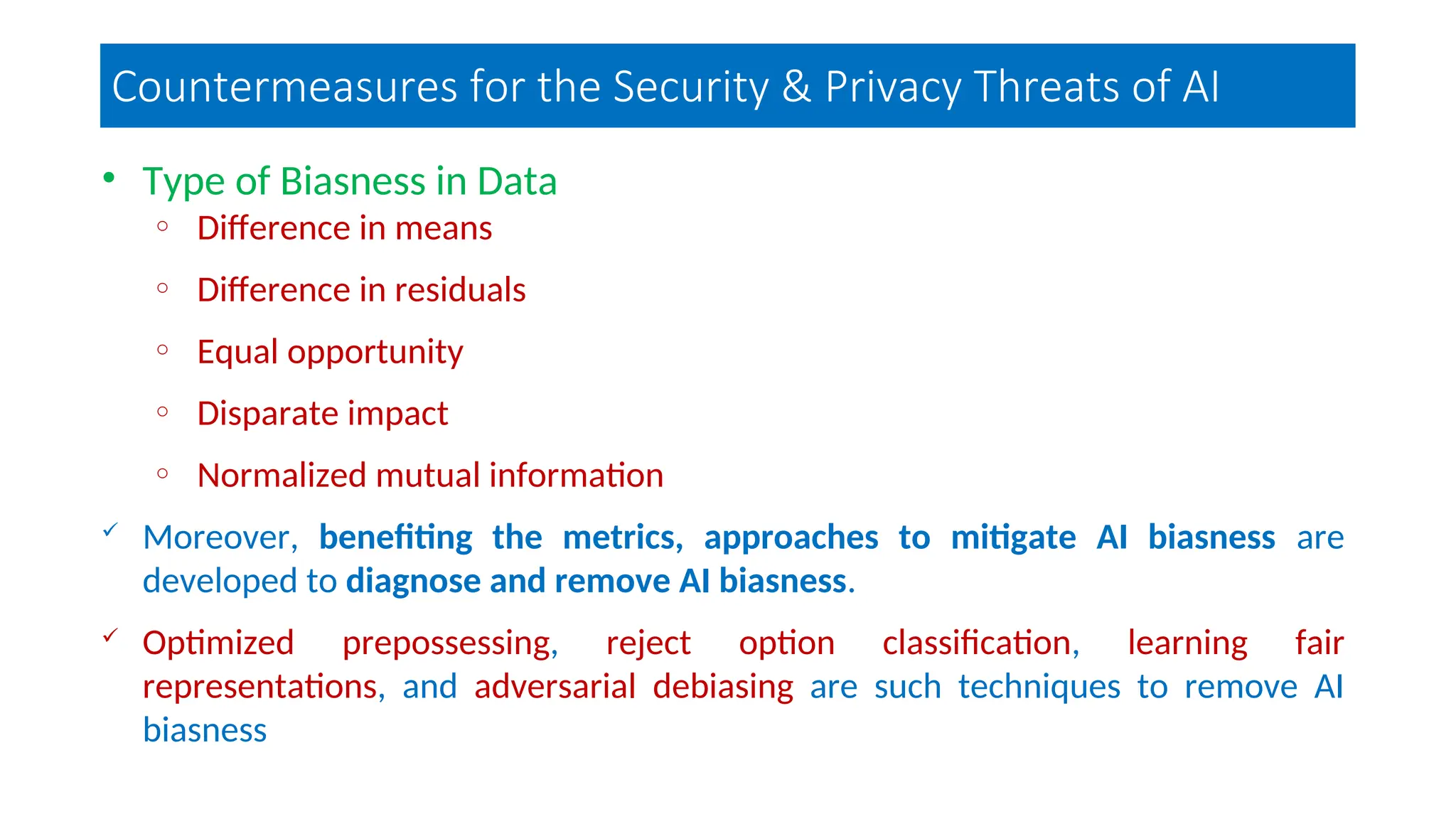

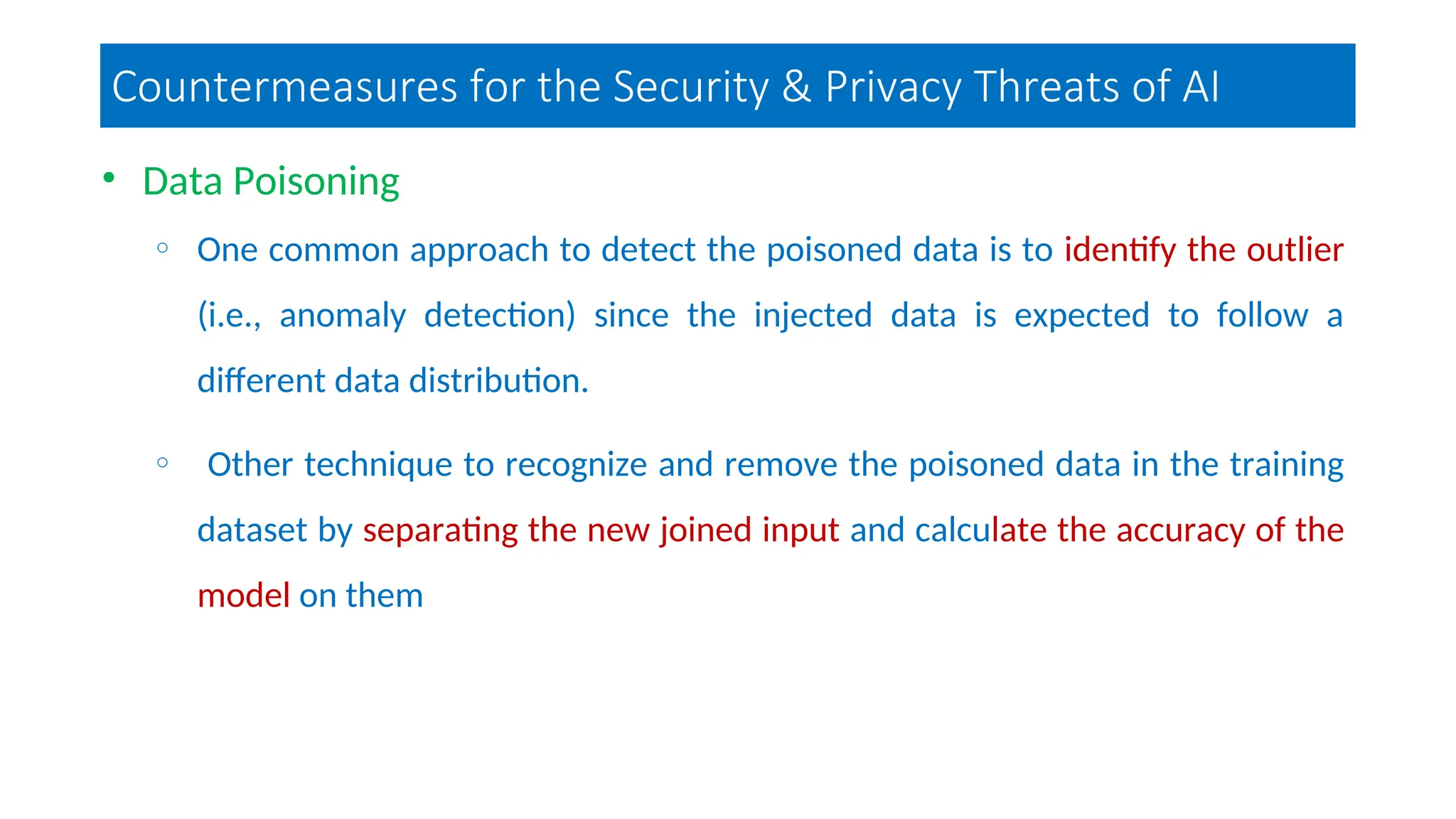

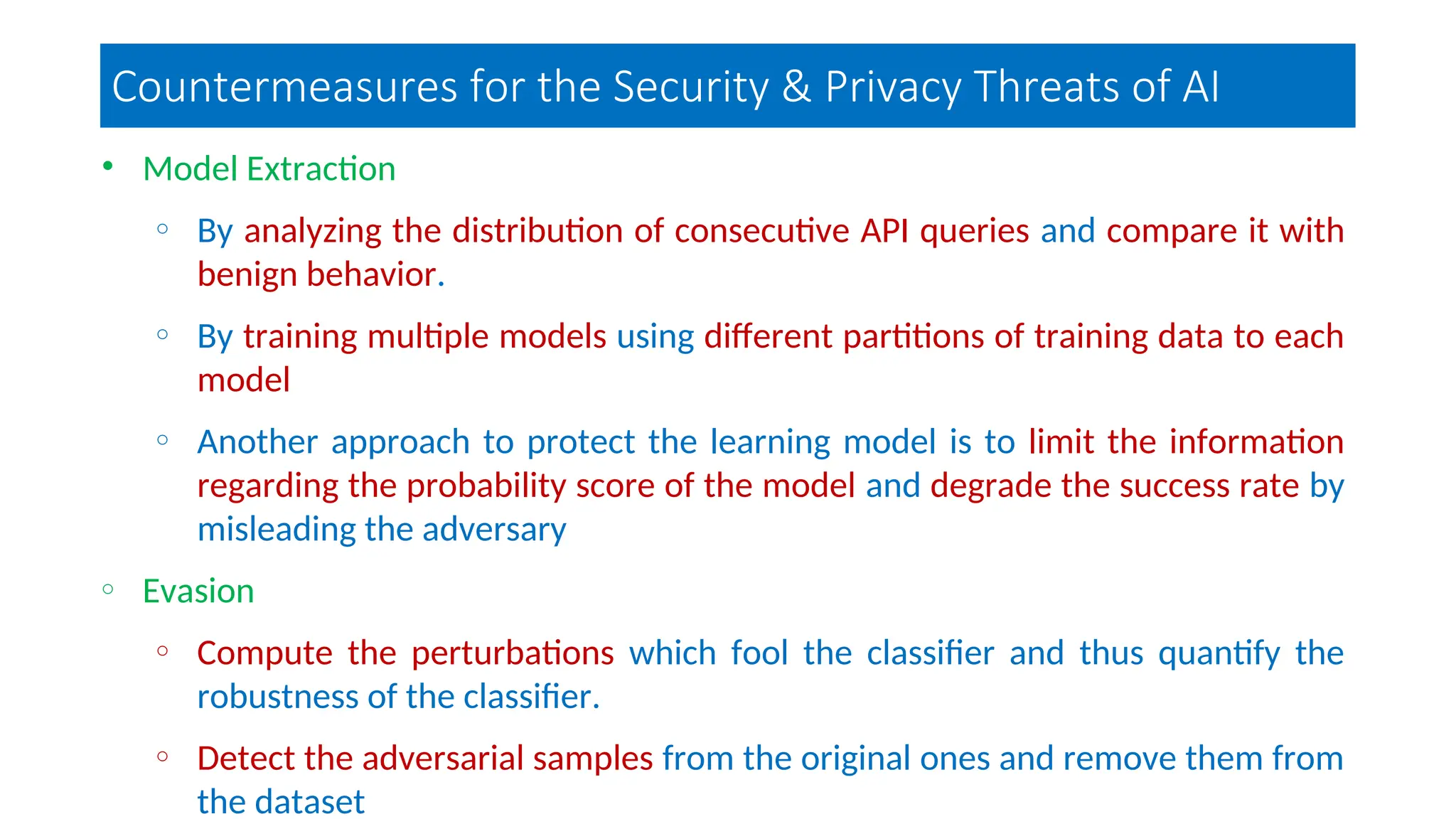

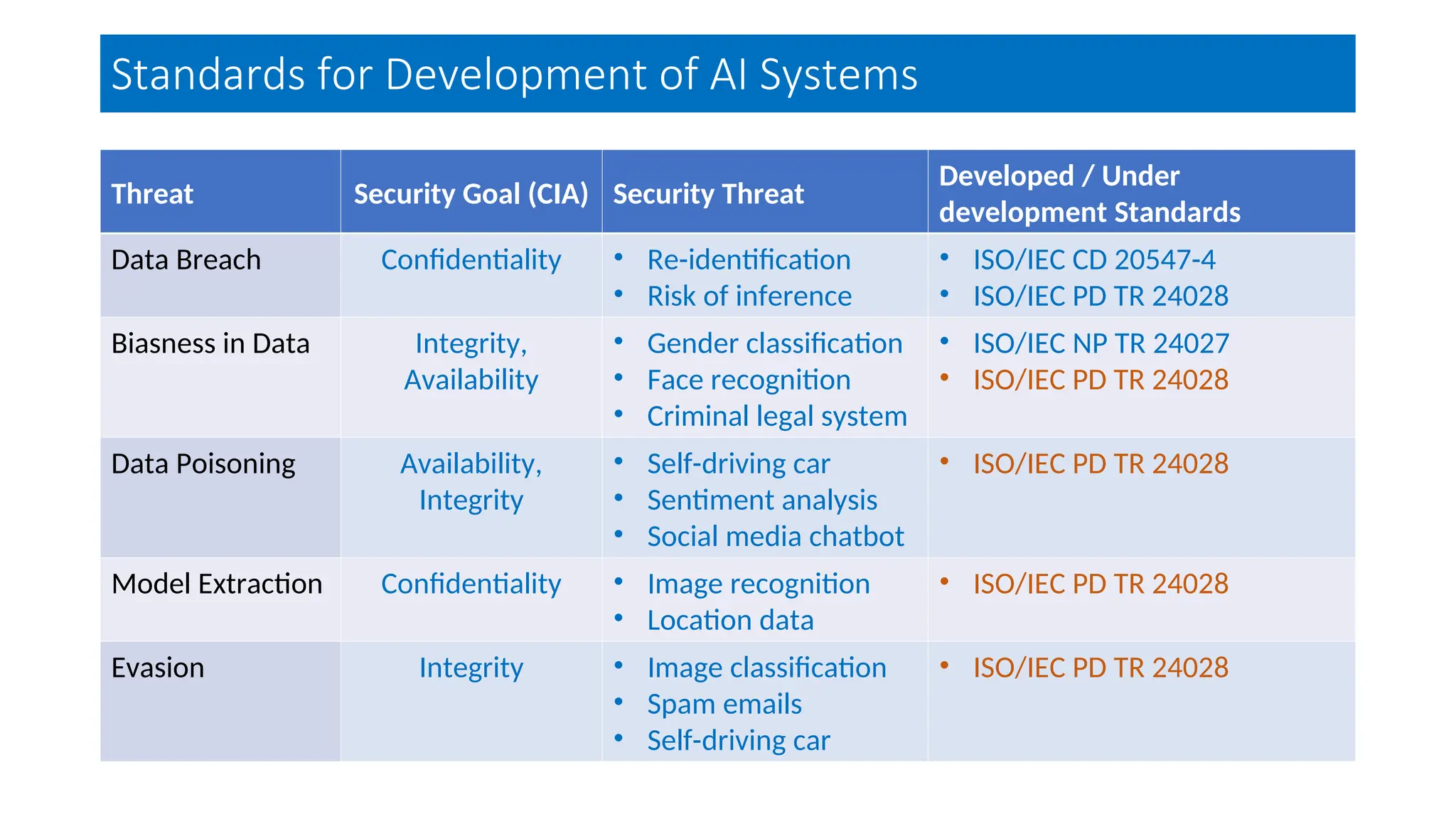

The document discusses emerging security and privacy threats in AI systems, including data breaches, bias, data poisoning, and model extraction. It outlines countermeasures to mitigate these risks and stresses the importance of building trustworthy AI through standards and collaboration. It also mentions ongoing research and initiatives aimed at strengthening cybersecurity in AI applications.
