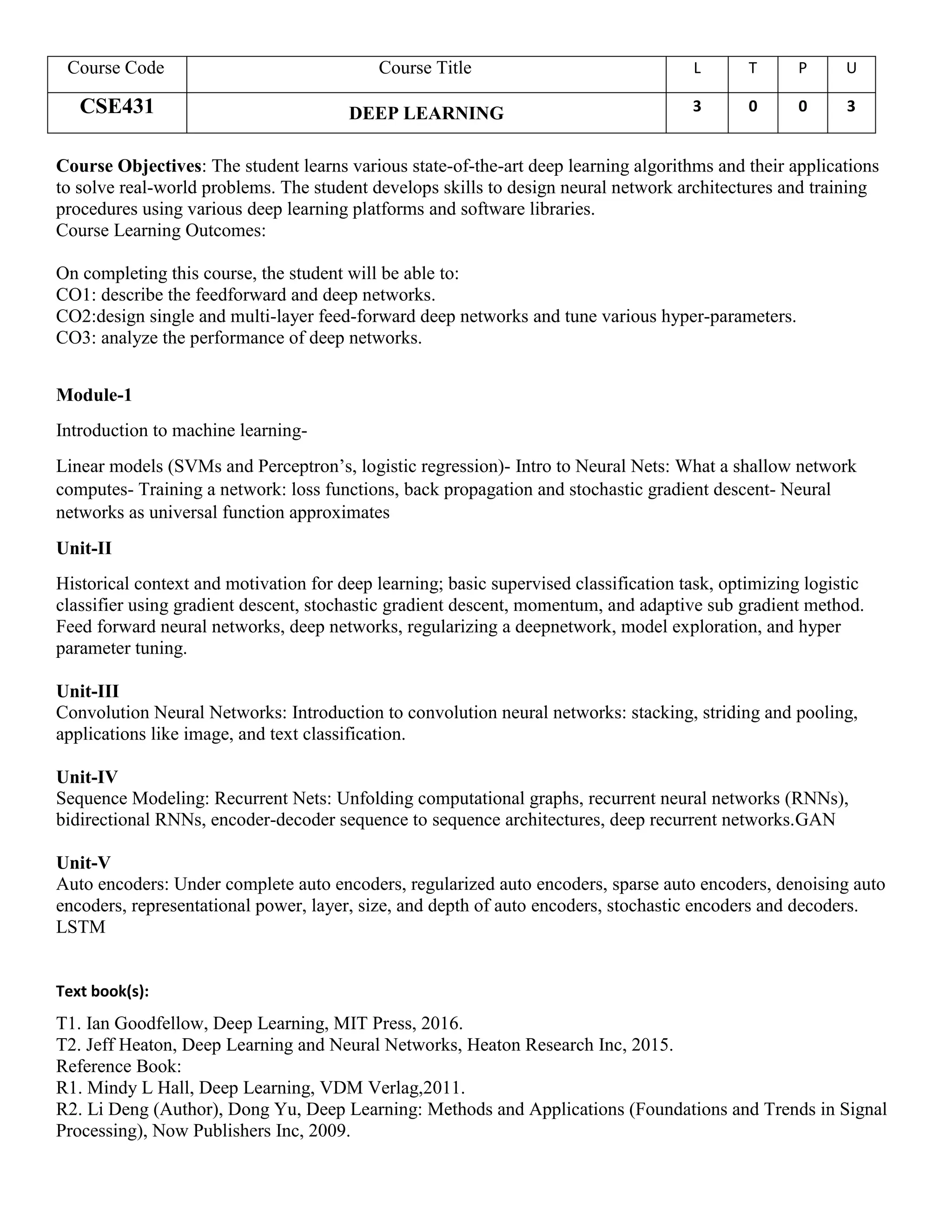

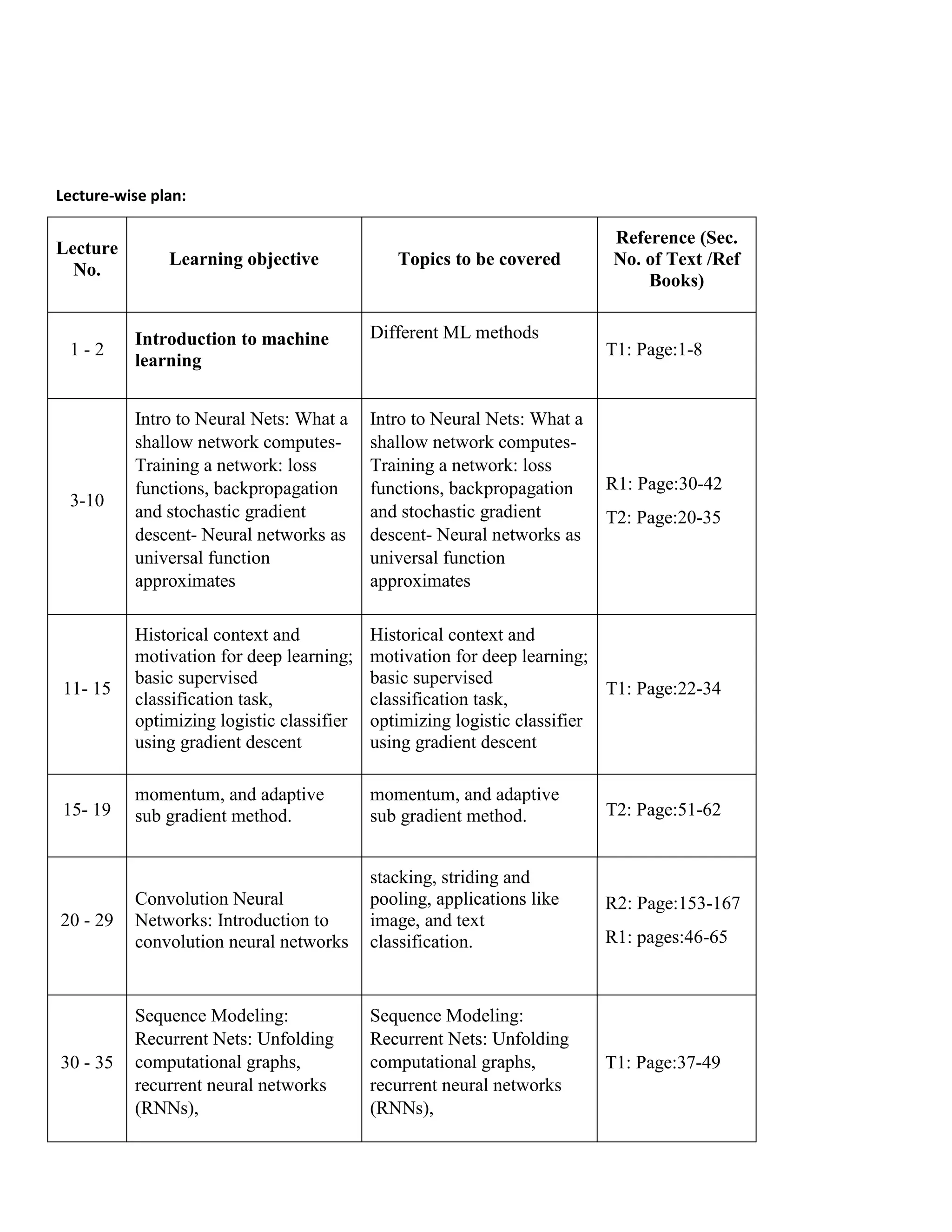

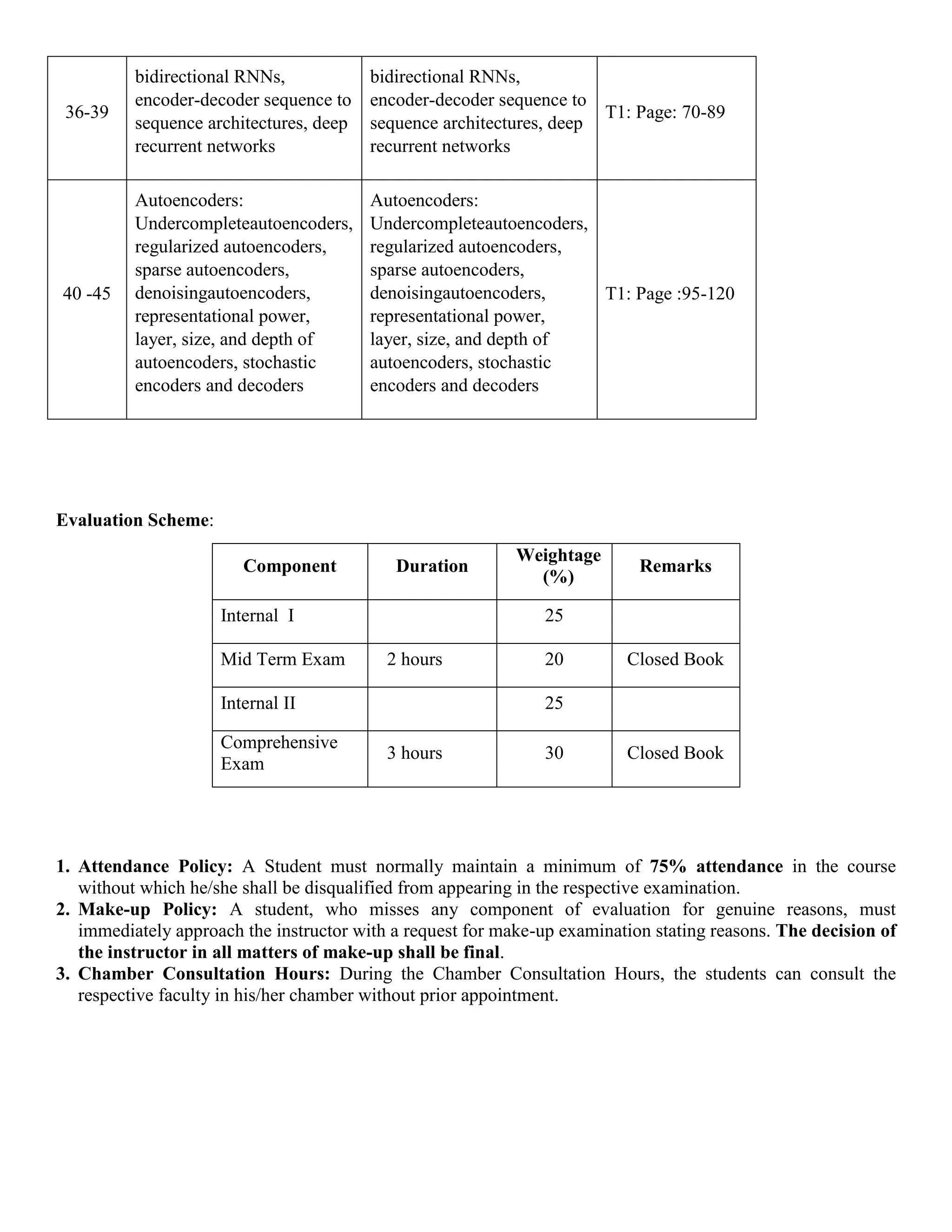

This document outlines a deep learning course: its objectives, learning outcomes, modules, textbook and reference materials, lecture plan, and evaluation scheme. The course aims to teach state-of-the-art deep learning algorithms and their applications through the design of neural network architectures. Key topics include feedforward networks, convolutional neural networks, recurrent networks, autoencoders, and sequence modeling. Student performance is evaluated through internal assessments, a midterm exam, and a comprehensive exam.