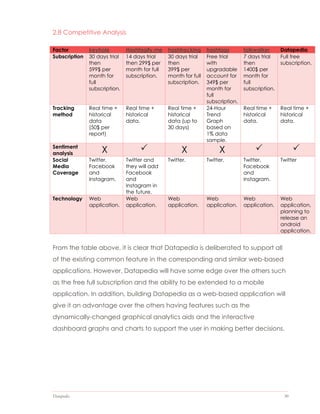

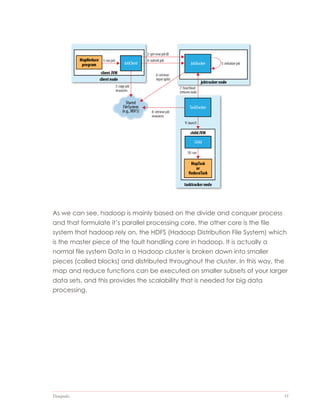

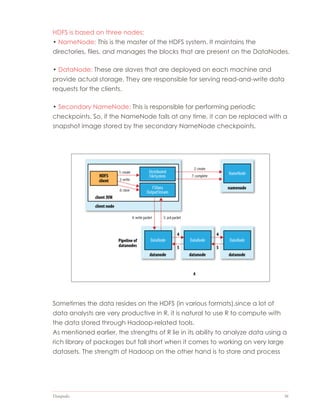

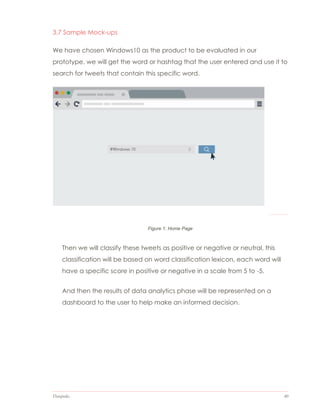

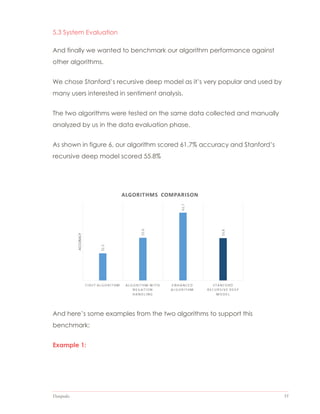

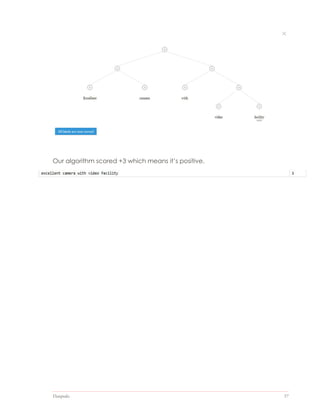

The report describes the design and implementation of a web application that simplifies product purchasing decisions by analyzing big data from product reviews and social media. It covers the project's justification, scope, and methodology, along with the technologies used for data analytics and presentation, including R, Apache Hadoop, and PHP. The report emphasizes the need for an integrated tool that streamlines information gathering and improves the user's experience of choosing a product.