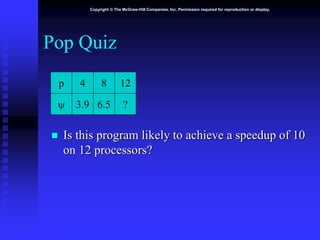

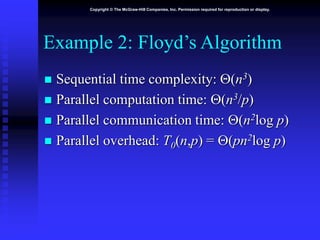

This document discusses performance analysis of parallel programs. It covers the general speedup formula, Amdahl's Law, Gustafson-Barsis' Law, and two further tools for predicting parallel performance: the Karp-Flatt metric and the isoefficiency relation. Amdahl's Law bounds the maximum speedup of a fixed-size problem by the serial fraction of the program, while Gustafson-Barsis' Law lets the problem size grow with the number of processors and predicts the resulting scaled speedup. The Karp-Flatt metric uses measured speedup to compute the experimentally determined serial fraction, which also absorbs parallel overhead. The isoefficiency relation describes how fast the problem size must grow with the number of processors to keep efficiency constant.
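As a rough illustration of the three closed-form results named above, here is a minimal Python sketch. The function names and the symbols `f` (serial fraction of the sequential run, Amdahl), `s` (fraction of the parallel run spent in serial code, Gustafson-Barsis), `p` (number of processors), and `speedup` (measured speedup) are assumptions chosen for this example, not notation taken from the document itself.

```python
def amdahl_speedup(f: float, p: int) -> float:
    """Upper bound on speedup for a fixed problem size.

    f is the fraction of the sequential execution time that is
    inherently serial (assumed notation); p is the processor count.
    """
    return 1.0 / (f + (1.0 - f) / p)


def gustafson_barsis_speedup(s: float, p: int) -> float:
    """Scaled speedup when the problem grows with the processor count.

    s is the fraction of the *parallel* execution time spent in
    serial code (assumed notation).
    """
    return p + (1 - p) * s


def karp_flatt(speedup: float, p: int) -> float:
    """Experimentally determined serial fraction from a measured speedup.

    Requires p > 1, since the formula divides by (1 - 1/p).
    """
    if p <= 1:
        raise ValueError("Karp-Flatt metric needs more than one processor")
    return (1.0 / speedup - 1.0 / p) / (1.0 - 1.0 / p)


if __name__ == "__main__":
    p = 8
    print(amdahl_speedup(0.1, p))
    print(gustafson_barsis_speedup(0.1, p))
    # Feeding an ideal Amdahl speedup back in recovers the serial fraction.
    print(karp_flatt(amdahl_speedup(0.1, p), p))
```

With a serial fraction of 0.1 on 8 processors, Amdahl's Law gives a speedup of about 4.71, whereas Gustafson-Barsis' Law gives a scaled speedup of about 7.3. The two values differ because the fraction is measured against different baselines (the sequential run versus the parallel run). In practice the Karp-Flatt value is computed from timings, and a value that rises with `p` suggests growing parallel overhead rather than inherently serial code.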