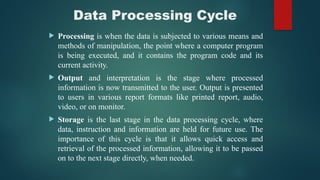

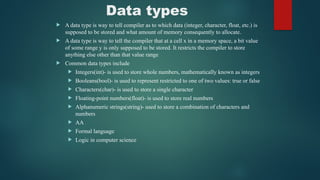

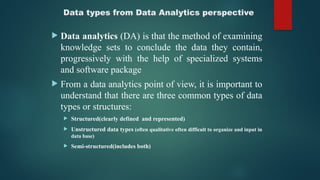

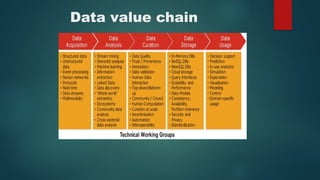

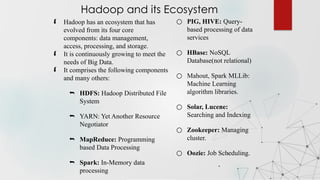

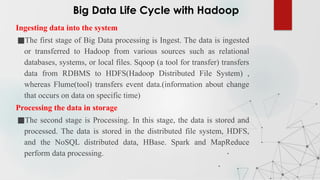

Chapter 2 discusses the significance of data science, highlighting its role in extracting knowledge from various forms of data and the importance of data scientists in that process. It explains the data processing cycle and the types of data (structured, semi-structured, and unstructured), then introduces big data and its characteristics. It also covers technologies such as Hadoop and the stages of big data processing, including ingestion, storage, and analysis.
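
As a rough illustration of the data types named above, the following sketch loads one tiny sample of each kind. The sample values and variable names are made up for this example and do not come from the chapter; it only assumes the Python standard library.

```python
import csv
import io
import json

# Structured: fixed columns, every row has the same fields (hypothetical sample).
structured_csv = "id,name,age\n1,Alice,34\n2,Bob,29\n"
rows = list(csv.DictReader(io.StringIO(structured_csv)))

# Semi-structured: self-describing keys, but fields may differ between records.
semi_structured_json = (
    '[{"id": 1, "tags": ["a", "b"]}, {"id": 2, "email": "x@example.com"}]'
)
records = json.loads(semi_structured_json)

# Unstructured: free text with no predefined schema; here we only count words.
unstructured_text = "Customer called to say the delivery arrived late."
word_count = len(unstructured_text.split())

print(rows[0]["name"], sorted(records[1].keys()), word_count)
```

The structured rows can be addressed by column name, the semi-structured records must be inspected per record because their keys vary, and the free text needs some form of processing before any fields can be extracted at all.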