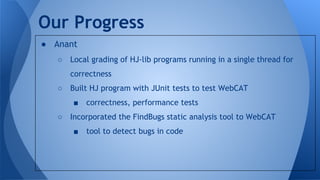

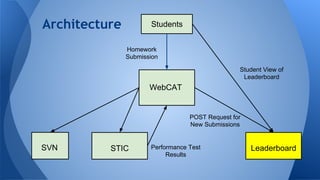

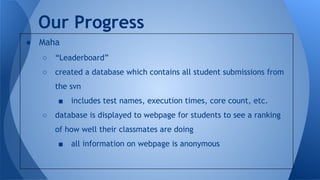

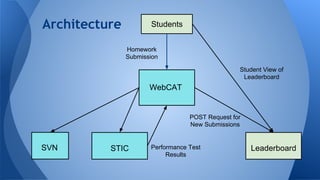

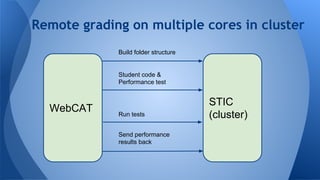

This document summarizes work on developing plugins for the WebCAT autograding system. The team researched existing autograding systems, set up WebCAT, and developed a feature table and a grading workflow. Anant implemented local single-threaded grading and incorporated static analysis. Maha created a leaderboard that ranks student performance while keeping students anonymous. Future plans include improving remote grading on clusters, writing documentation, and integrating with EduHPC. The overall goal is to grade parallel programs for MOOCs automatically and transparently.