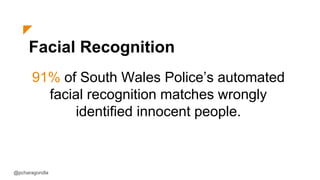

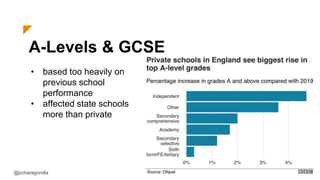

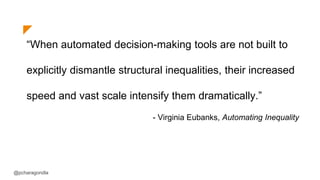

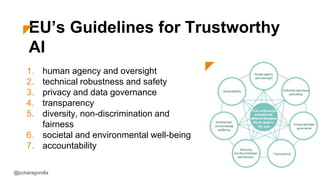

The document discusses algorithmic bias and its role in producing unfair outcomes in systems such as facial recognition and academic score prediction. It argues that this bias should be addressed by improving data sourcing, building fairness into system design, establishing ethical frameworks, and promoting diversity in teams. Preventing algorithmic bias effectively requires a multifaceted approach that accounts for human values and social inequalities.