akanimohadeleye_finalposter_1

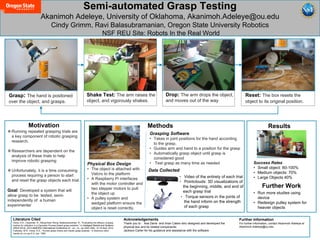

Semi-automated Grasp Testing

Akanimoh Adeleye, University of Oklahoma, Akanimoh.Adeleye@ou.edu
Cindy Grimm, Ravi Balasubramanian, Oregon State University Robotics
NSF REU Site: Robots In the Real World

Motivation

Running repeated grasping trials is a key component of robotic grasping research. Researchers depend on the analysis of these trials to improve robotic grasping. Unfortunately, it is a time-consuming process that requires a person to start each trial and reset the grasp object.

Goal: Develop a system that allows grasps to be tested semi-independently of a human experimenter.

Methods

- Reset: The box resets the object to its original position.
- Grasp: The hand is positioned over the object and grasps it.
- Shake Test: The arm raises the object and shakes it vigorously.
- Drop: The arm drops the object and moves out of the way.

Physical Box Design

- The object is attached to the platform with Velcro.
- A Raspberry Pi interfaces with the motor controller and two stepper motors to pull the object up.
- A pulley system and wedged platform ensure the object is reset correctly.

Grasping Software

- Takes in joint positions for the hand according to the grasp.
- Guides the arm and hand to a position for the grasp.
- Automatically grasps the object until the grasp is considered good.
- Tests the grasp as many times as needed.

Data Collected

- Video of the entirety of each trial.
- Point clouds: 3D visualizations of the beginning, middle, and end of each grasp trial.
- Torque sensors in the joints of the hand report the strength of each grasp.

Results

Success rates:

- Small objects: 80-100%
- Medium objects: 70%
- Large objects: 40%

Further Work

- Run more studies using the device.
- Redesign the pulley system for heavier objects.

Acknowledgements

Thank you to Asa Davis and Iman Catein, who designed and developed the physical box and its related components, and to Jackson Carter for his guidance and assistance with the software.

Further Information

For further information, contact Akanimoh Adeleye at Akanimoh.Adeleye@ou.edu.
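
The trial cycle described on the poster (reset, grasp, shake test, drop) and the success-rate tally can be sketched roughly as follows. This is a minimal illustration, not the authors' software: the `GraspRig` interface, the `ScriptedRig` stand-in, the `max_grasp_attempts` limit, and the choice to count a trial as successful when the object survives the shake test are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Protocol


class GraspRig(Protocol):
    """Hypothetical interface to the arm, hand, and reset box."""

    def reset_object(self) -> None: ...
    def grasp(self) -> bool: ...        # True if the grasp is considered good
    def shake_test(self) -> bool: ...   # True if the object is still held
    def drop(self) -> None: ...


@dataclass
class TrialResult:
    trial: int
    grasp_attempts: int
    held_after_shake: bool


def run_trials(rig: GraspRig, n_trials: int,
               max_grasp_attempts: int = 3) -> List[TrialResult]:
    """Run the reset -> grasp -> shake -> drop cycle n_trials times."""
    results: List[TrialResult] = []
    for i in range(n_trials):
        rig.reset_object()
        attempts = 0
        good = False
        # Retry the grasp until it is considered good or attempts run out.
        while attempts < max_grasp_attempts and not good:
            attempts += 1
            good = rig.grasp()
        # Only shake if a good grasp was achieved.
        held = rig.shake_test() if good else False
        rig.drop()
        results.append(TrialResult(i, attempts, held))
    return results


def success_rate(results: List[TrialResult]) -> float:
    """Fraction of trials in which the object survived the shake test."""
    if not results:
        return 0.0
    return sum(r.held_after_shake for r in results) / len(results)


class ScriptedRig:
    """Stand-in rig that replays predefined shake-test outcomes."""

    def __init__(self, shake_outcomes: List[bool]) -> None:
        self._outcomes = list(shake_outcomes)
        self.log: List[str] = []

    def reset_object(self) -> None:
        self.log.append("reset")

    def grasp(self) -> bool:
        self.log.append("grasp")
        return True

    def shake_test(self) -> bool:
        self.log.append("shake")
        return self._outcomes.pop(0)

    def drop(self) -> None:
        self.log.append("drop")
```

With a real rig, the four methods would wrap the arm, hand, and Raspberry Pi box controllers; the scripted version only exists so the loop can be exercised without hardware.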