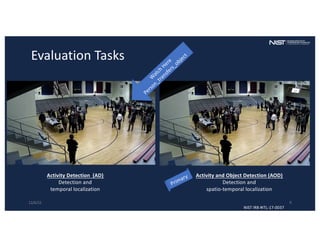

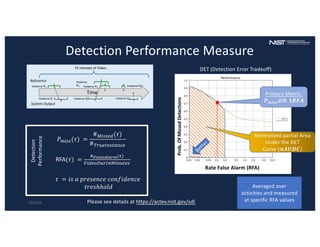

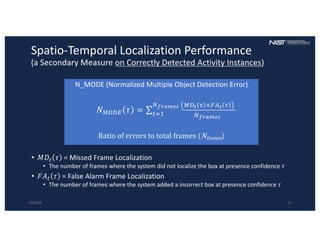

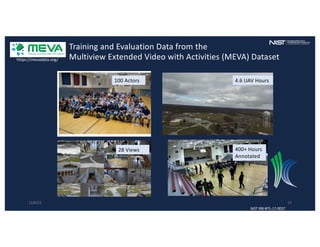

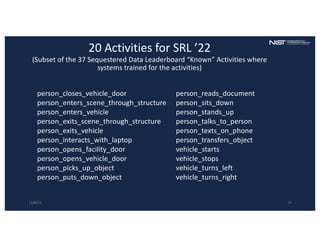

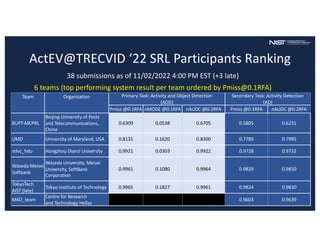

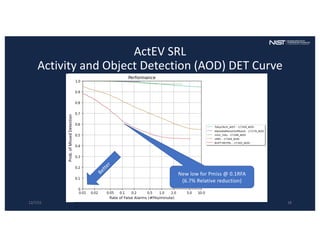

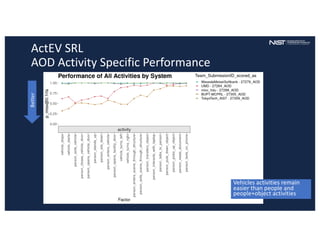

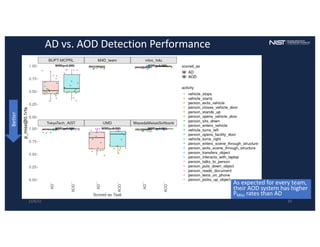

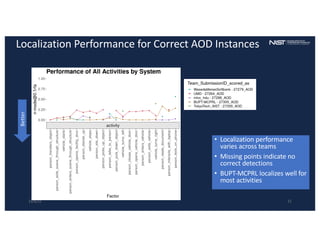

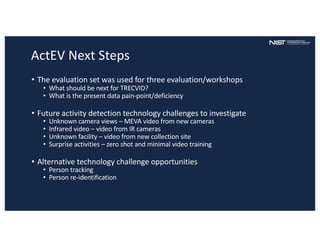

The document summarizes the TRECVID 2022 activity detection in extended video (ActEV) task, presenting an overview of the evaluation methods, datasets, and results from the participating teams. The ActEV challenge focused on robust detection of activities in multi-camera environments and used a self-reported leaderboard for participants. Key findings include improved detection performance over prior evaluations. The document also outlines future technology challenges such as tracking and surprise activity detection.