Deduplication nhnent

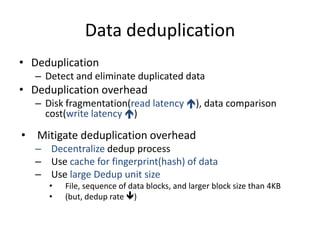

Deduplication detects and eliminates duplicated data, but it adds overhead: disk fragmentation increases read latency, and the cost of comparing data increases write latency. To mitigate this overhead, the deduplication process can be decentralized, fingerprints (hashes) of data can be cached, and a larger deduplication unit can be used (whole files, sequences of data blocks, or blocks larger than 4 KB), although a larger unit may lower the deduplication rate.
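A minimal sketch of the mechanism described above, assuming fixed-size blocks, SHA-256 fingerprints, and an in-memory dict standing in for the on-disk fingerprint index, with a small LRU cache of fingerprints consulted first. `DedupStore`, `write`, `read`, `cache_capacity`, and `index_lookups` are hypothetical names chosen for illustration, not taken from the slides. The sketch treats a fingerprint match as a duplicate without comparing bytes, which relies on SHA-256 collision resistance; a system that also verifies bytes pays more of the comparison cost.

```python
import hashlib
from collections import OrderedDict


class DedupStore:
    """Hypothetical fixed-size block dedup store (illustrative sketch only)."""

    def __init__(self, block_size=4096, cache_capacity=1024):
        self.block_size = block_size
        self.blocks = []                 # unique block contents (stands in for disk)
        self.index = {}                  # full fingerprint index: fingerprint -> block id
        self.cache = OrderedDict()       # LRU fingerprint cache: fingerprint -> block id
        self.cache_capacity = cache_capacity
        self.index_lookups = 0           # cache misses that had to consult the full index

    def _cache_put(self, fp, block_id):
        self.cache[fp] = block_id
        self.cache.move_to_end(fp)       # mark as most recently used
        if len(self.cache) > self.cache_capacity:
            self.cache.popitem(last=False)   # evict the least recently used entry

    def _lookup(self, fp):
        if fp in self.cache:
            self.cache.move_to_end(fp)
            return self.cache[fp]
        self.index_lookups += 1          # in a real system this could be a disk read
        block_id = self.index.get(fp)
        if block_id is not None:
            self._cache_put(fp, block_id)
        return block_id

    def write(self, data: bytes) -> list:
        """Store data and return its recipe: the list of block ids it references."""
        recipe = []
        for off in range(0, len(data), self.block_size):
            chunk = data[off:off + self.block_size]
            fp = hashlib.sha256(chunk).digest()
            block_id = self._lookup(fp)
            if block_id is None:         # new content: store it exactly once
                block_id = len(self.blocks)
                self.blocks.append(chunk)
                self.index[fp] = block_id
                self._cache_put(fp, block_id)
            recipe.append(block_id)
        return recipe

    def read(self, recipe: list) -> bytes:
        """Reassemble data by following the recipe's block ids."""
        return b"".join(self.blocks[i] for i in recipe)


store = DedupStore(block_size=4)         # tiny blocks keep the example readable
r1 = store.write(b"AAAABBBBAAAA")
r2 = store.write(b"BBBBCCCC")
print(r1, r2, len(store.blocks))         # [0, 1, 0] [1, 2] 3
```

The second write reuses block 1, which was stored by the first write, so reading `r2` back touches non-contiguous locations. On a real disk, that scattering is the fragmentation that raises read latency.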

1. Data deduplication
   - Deduplication: detect and eliminate duplicated data
   - Deduplication overhead
     - Disk fragmentation (read latency increases)
     - Data comparison cost (write latency increases)
   - Mitigating deduplication overhead
     - Decentralize the dedup process
     - Cache fingerprints (hashes) of data
     - Use a large dedup unit size
       - Whole files, sequences of data blocks, or blocks larger than 4 KB
       - (trade-off: the dedup rate decreases)
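To illustrate the unit-size trade-off in the last bullet, the sketch below chunks a synthetic workload at several block sizes and reports the dedup ratio (logical bytes divided by stored bytes) and how many fingerprints must be indexed. The workload (1 MiB of random data plus a copy with one byte changed every 64 KiB) and the function name `dedup_stats` are assumptions made for illustration, not measurements from the slides.

```python
import hashlib
import random


def dedup_stats(data: bytes, block_size: int):
    """Hypothetical helper: return (logical bytes, stored bytes, fingerprint count)
    for fixed-size chunking of data at the given block size."""
    seen = set()
    stored = 0
    for off in range(0, len(data), block_size):
        chunk = data[off:off + block_size]
        fp = hashlib.sha256(chunk).digest()
        if fp not in seen:               # only the first copy of a block is stored
            seen.add(fp)
            stored += len(chunk)
    return len(data), stored, len(seen)


# Assumed workload: 1 MiB of random bytes, then a copy with one byte flipped
# at the start of every 64 KiB region.
n = 1 << 20
rng = random.Random(0)
base = rng.getrandbits(8 * n).to_bytes(n, "little")
modified = bytearray(base)
for pos in range(0, n, 64 * 1024):
    modified[pos] ^= 0xFF
data = base + bytes(modified)

for bs in (4 * 1024, 16 * 1024, 64 * 1024, 256 * 1024):
    logical, stored, entries = dedup_stats(data, bs)
    print(f"{bs // 1024:>4} KiB blocks: dedup ratio {logical / stored:.2f}, "
          f"{entries} fingerprints")
```

With this workload, 4 KiB blocks keep 272 unique blocks (ratio about 1.88) and 16 KiB blocks keep 80 (ratio 1.60). At 64 KiB and 256 KiB every block of the copy contains a flipped byte, so nothing is deduplicated (ratio 1.00). In exchange, the larger units need only 32 and 8 fingerprints, which shrinks the index and lets a fingerprint cache cover more of it.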