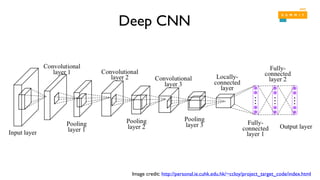

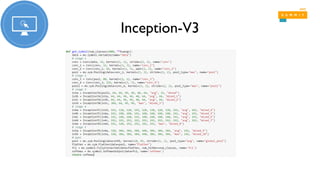

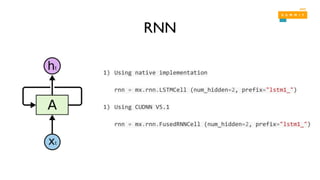

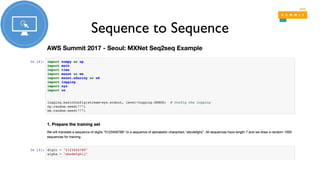

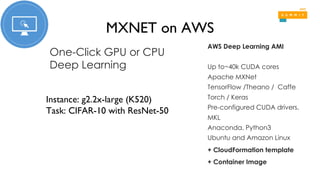

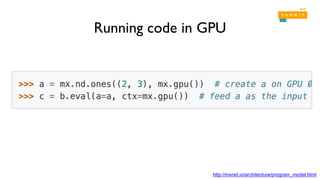

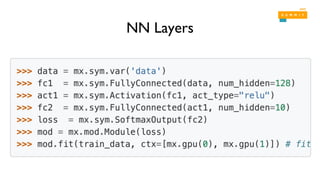

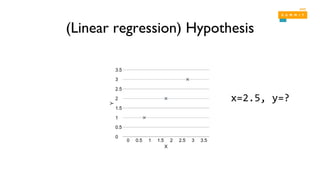

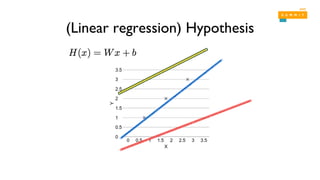

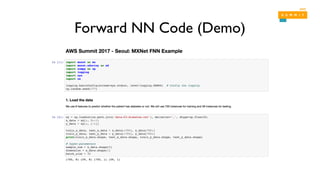

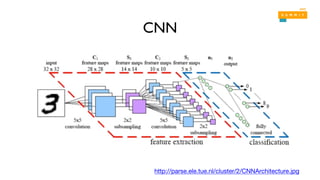

This document summarizes a presentation about MXNet, an open-source deep learning framework. It covers using MXNet for linear regression, logistic regression, deep neural networks (DNNs), convolutional neural networks (CNNs), and recurrent neural networks (RNNs). It also covers running MXNet on Amazon Web Services (AWS), using the Deep Learning AMI and GPU instances to train deep learning models efficiently.

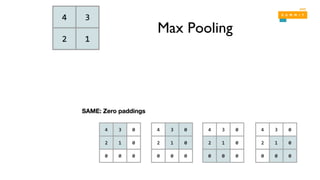

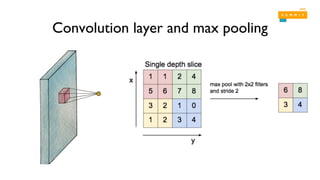

![Simple convolution layer](https://image.slidesharecdn.com/2mxnet-170418075815/85/MxNET-AWS-Summit-Seoul-2017-21-320.jpg)

Simple convolution layer. Image: shape (1,3,3,1); Filter: shape (2,2,1,1); Stride: 1x1; Padding: VALID. Filter values (a 2x2 grid of ones): `[[[[1.]],[[1.]]], [[[1.]],[[1.]]]]`, `shape=(2,2,1,1)`.
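The convolution described on this slide can be reproduced by hand with a minimal NumPy sketch. It assumes the slide's shapes follow the (batch, height, width, channels) layout for the image and (height, width, in_channels, out_channels) for the filter, and that "VALID" means no padding. The slide text does not give the input pixel values, so the values 1 through 9 are an assumption chosen for illustration, and `conv2d_valid` is a hypothetical helper, not an MXNet function. As in most deep learning frameworks, the filter is applied without flipping (cross-correlation).

```python
import numpy as np


def conv2d_valid(image, kernel, stride=(1, 1)):
    """Hypothetical helper: 2-D convolution with no padding ("VALID").

    image:  array of shape (batch, height, width, in_channels)
    kernel: array of shape (k_height, k_width, in_channels, out_channels)
    """
    n, h, w, c_in = image.shape
    kh, kw, k_in, c_out = kernel.shape
    assert c_in == k_in, "image and kernel channel counts must match"
    sh, sw = stride

    # With no padding, the filter must fit entirely inside the image.
    out_h = (h - kh) // sh + 1
    out_w = (w - kw) // sw + 1
    out = np.zeros((n, out_h, out_w, c_out), dtype=image.dtype)

    for i in range(out_h):
        for j in range(out_w):
            # Window of shape (batch, k_height, k_width, in_channels).
            patch = image[:, i * sh:i * sh + kh, j * sw:j * sw + kw, :]
            # Multiply the window by the filter and sum over height, width, channels.
            out[:, i, j, :] = np.tensordot(patch, kernel, axes=([1, 2, 3], [0, 1, 2]))
    return out


# Assumed input: the slide only gives the shape (1,3,3,1), not the pixel values.
image = np.arange(1, 10, dtype=np.float32).reshape(1, 3, 3, 1)
# Filter from the slide: all ones, shape (2,2,1,1).
kernel = np.ones((2, 2, 1, 1), dtype=np.float32)

result = conv2d_valid(image, kernel, stride=(1, 1))
print(result.shape)
print(result[0, :, :, 0])
```

With a 3x3 image, a 2x2 filter, stride 1, and no padding, the output has shape (1, 2, 2, 1). Because the filter is all ones, each output value is the sum of one 2x2 window of the input: 12, 16, 24, and 28 for the assumed values.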