Machine Learning Lab

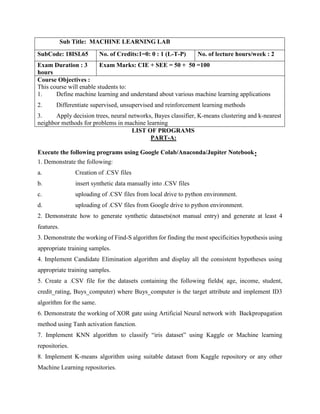

- 1. Sub Title: MACHINE LEARNING LAB

     - SubCode: 18ISL65
     - No. of Credits: 1 = 0 : 0 : 1 (L-T-P)
     - No. of lecture hours/week: 2
     - Exam Duration: 3 hours
     - Exam Marks: CIE + SEE = 50 + 50 = 100

     Course Objectives: This course will enable students to:

     1. Define machine learning and understand various machine learning applications.
     2. Differentiate supervised, unsupervised and reinforcement learning methods.
     3. Apply decision trees, neural networks, Bayes classifier, K-means clustering and k-nearest neighbor methods to problems in machine learning.

     LIST OF PROGRAMS

     PART-A: Execute the following programs using Google Colab/Anaconda/Jupyter Notebook:

     1. Demonstrate the following:
        a. Creation of .CSV files.
        b. Inserting synthetic data manually into .CSV files.
        c. Uploading of .CSV files from local drive to Python environment.
        d. Uploading of .CSV files from Google Drive to Python environment.
     2. Demonstrate how to generate synthetic datasets (not manual entry) with at least 4 features.
     3. Demonstrate the working of the Find-S algorithm for finding the most specific hypothesis using appropriate training samples.
     4. Implement the Candidate Elimination algorithm and display all the consistent hypotheses using appropriate training samples.
     5. Create a .CSV file for a dataset containing the fields (age, income, student, credit_rating, Buys_computer), where Buys_computer is the target attribute, and implement the ID3 algorithm for it.
     6. Demonstrate the working of an XOR gate using an Artificial Neural Network with the Backpropagation method and the Tanh activation function.
     7. Implement the KNN algorithm to classify the "iris dataset" from Kaggle or another machine learning repository.
     8. Implement the K-means algorithm using a suitable dataset from the Kaggle repository or any other machine learning repository.

- 2. PART-B: Virtual Lab

     1. Implementation of AND/OR/NOT Gate using Single Layer Perceptron.
     2. Understanding the concepts of the Perceptron Learning Rule.
     3. Understanding the concepts of the Correlation Learning Rule.
     4. Neural networks simulation.

     Web link for 1, 2 and 3: http://vlabs.iitb.ac.in/vlabs-dev/labs/machine_learning/labs/index.php

     Web link for 4: https://playground.tensorflow.org/

     Course Outcomes: After completion of the course, students will be able to:

     - CO1: Identify problems of machine learning and its methods
     - CO2: Apply an apt machine learning strategy for any given problem
     - CO3: Design systems that use appropriate models of machine learning
     - CO4: Solve problems related to various learning techniques

     COs Mapping with POs

     | CO  | POs            |
     |-----|----------------|
     | CO1 | PO1, PO2       |
     | CO2 | PO3, PO4       |
     | CO3 | PO3, PO5, PO6  |
     | CO4 | PO4, PO9, PO12 |

- 3. PART-A: Execute the following programs using Google Colab/Anaconda/Jupyter Notebook:

     Program 1. Demonstrate the following:

     a. Creation of .CSV files

        1. Open Microsoft Excel >> New.
        2. Add contents in Excel.
        3. File >> Save As → enter (file name), Save as Type → select (CSV (comma delimited)) >> click (Save).

        Another way to create a .csv file:

        1. Open Microsoft Excel >> File >> Open → (choose the desired .xls or .csv file).
        2. File >> Save As → enter (file name), Save as Type → select (CSV (comma delimited)) >> click (Save).

     b. Insert synthetic data manually into .CSV files

        1. Open Microsoft Excel >> New.
        2. Add contents in Excel manually.
        3. File >> Save As → enter (file name), Save as Type → select (CSV (comma delimited)) >> click (Save).
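
The same two steps (creating a .csv file and entering rows by hand) can also be done from Python instead of Excel. The sketch below is only an illustration using the standard `csv` module; the file name `sample_data.csv` and the three rows are made-up values, while the column names are the ones listed for Program 5.

```python
import csv
import os
import tempfile

# Column names taken from the Program 5 description; the rows are hypothetical.
header = ["age", "income", "student", "credit_rating", "Buys_computer"]
rows = [
    ["youth", "high", "no", "fair", "no"],
    ["middle_aged", "high", "no", "fair", "yes"],
    ["senior", "medium", "no", "fair", "yes"],
]

# Hypothetical output location: a file in the system temp directory.
path = os.path.join(tempfile.gettempdir(), "sample_data.csv")

# newline="" lets the csv module control line endings itself.
with open(path, "w", newline="") as f:
    writer = csv.writer(f)
    writer.writerow(header)   # first line: column names
    writer.writerows(rows)    # remaining lines: manually entered data

# Read the file back to confirm what was written.
with open(path, newline="") as f:
    loaded = list(csv.reader(f))

print(loaded[0])
print(len(loaded) - 1, "data rows")
```

Every value read back by `csv.reader` is a string, so numeric columns would need to be converted explicitly.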

- 4. c. Uploading of .CSV files from local drive to Python environment

     1. Open a web browser → type (colab.research.google.com) → log in with your Google (Gmail) account.
     2. Click → New Notebook.
     3. Click → Files (left-hand side of the new notebook window) → select (Upload to session storage icon).
     4. Enter the commands below in a code cell of your Colab notebook to load the .csv file contents into a pandas DataFrame:

            import pandas as pd
            names = ['sl.no', 'sl', 'sw', 'pl', 'pw', 'Class']
            df = pd.read_csv('/content/filename.csv', names=names)
            print(df)

     d. Uploading of .CSV files from Google Drive to Python environment

     1. Click → Files (left-hand side of the new notebook window) → select (Mount Drive icon).
     2. A pop-up appears → connect to Google Drive.
     3. Select → Google account (Gmail) in the newly opened window tab.
     4. Click → drive (from the options that appear under 'Mount Drive') >> right-click on the desired .csv file >> Copy path.
     5. Enter the commands below in a code cell of your Colab notebook to load the .csv file contents into a pandas DataFrame:

            import pandas as pd
            names = ['sl.no', 'sl', 'sw', 'pl', 'pw', 'Class']
            df = pd.read_csv('/content/drive/MyDrive/file_name.csv', names=names)
            print(df)
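
One detail of the `read_csv(..., names=names)` calls above is worth checking on your own file: when `names` is passed without `header`, pandas treats every line as data, so a file that already has a header row gets that row loaded as the first record. The sketch below illustrates this with an in-memory file; the CSV text and the five column names are hypothetical stand-ins, not the lab's actual file.

```python
import io

import pandas as pd

# Hypothetical file contents: one header row followed by two data rows.
csv_text = (
    "sl,sw,pl,pw,Class\n"
    "5.1,3.5,1.4,0.2,setosa\n"
    "7.0,3.2,4.7,1.4,versicolor\n"
)
names = ["sl", "sw", "pl", "pw", "Class"]

# names= alone: the file's own header line is kept as a data row.
df_with_header_row = pd.read_csv(io.StringIO(csv_text), names=names)

# names= together with header=0: the first line is consumed and replaced by names.
df_clean = pd.read_csv(io.StringIO(csv_text), names=names, header=0)

print(len(df_with_header_row), "rows when the header line is read as data")
print(len(df_clean), "rows when header=0 is passed")
```

If the file has no header line at all, as in the later programs that use `header=None`, passing `names` alone is the right call.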

- 5. Program 2. Demonstrate how to generate synthetic datasets (not manual entry) with at least 4 features.

         # Example of a three-class classification task with four features
         from numpy import where
         from collections import Counter
         from sklearn.datasets import make_classification
         from matplotlib import pyplot

         # Define the dataset.
         # For an imbalanced dataset, pass class weights instead of None,
         # e.g. weights=[0.99, 0.01] for a two-class problem.
         X, y = make_classification(n_samples=1000, n_features=4, n_informative=2,
                                    n_redundant=0, n_classes=3, n_clusters_per_class=1,
                                    weights=None, random_state=1)

         # Summarize the dataset shape
         print(X.shape, y.shape)

         # Summarize observations by class label
         counter = Counter(y)   # counter behaves like a dictionary: label -> count
         print(counter)

         # Summarize the first few examples
         for i in range(10):
             print(X[i], y[i])

         # Plot the first two features and color the points by class label
         for label, _ in counter.items():
             row_ix = where(y == label)[0]
             pyplot.scatter(X[row_ix, 0], X[row_ix, 1], label=str(label))
         pyplot.legend()
         pyplot.show()

     Output:

         (1000, 4) (1000,)
         Counter({2: 335, 0: 334, 1: 331})
         [ 1.4135301 -0.3023854 1.47357393 -1.58963384] 1
         [ 0.15856436 0.80252668 0.58734305 -2.05552344] 2
         [-1.211337 1.78859265 0.58428577 0.16807262] 2
         [ 0.06837628 -1.9027168 -0.09096474 -1.99639086] 2
         [ 0.55920658 -1.99674554 -0.08170227 -0.94733523] 1
         [ 0.57335921 -0.29377695 -0.03294189 -1.15071447] 1
         [ 1.10313927 1.70798618 0.00726048 -1.35141914] 1
         [ 1.03803798 0.78493772 1.88302954 -2.071511 ] 1
         [-1.76115588 2.52912789 -0.56393527 -2.58495132] 2
         [-0.41755451 -1.24187994 0.03671233 -0.14417975] 2
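
If scikit-learn is not available, a synthetic dataset with four features can also be produced with the standard library alone. The following is a rough sketch under stated assumptions: the class means, the sample count of 300, the column names `f1` to `f4` and the use of Gaussian noise are all illustrative choices, not part of the lab program above.

```python
import csv
import io
import random
from collections import Counter

random.seed(1)  # fixed seed so the generated rows are repeatable

# Assumed setup: three classes, each with its own mean for all four features.
class_means = {0: 0.0, 1: 3.0, 2: 6.0}

rows = []
for _ in range(300):
    label = random.choice(list(class_means))
    # Four features drawn from a normal distribution centred on the class mean.
    features = [round(random.gauss(class_means[label], 1.0), 4) for _ in range(4)]
    rows.append(features + [label])

# Write the dataset as CSV text (an in-memory buffer stands in for a file).
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(["f1", "f2", "f3", "f4", "label"])
writer.writerows(rows)

print(Counter(row[-1] for row in rows))   # observations per class
print(buffer.getvalue().splitlines()[0])  # header line of the CSV
```

Replacing the `io.StringIO` buffer with `open("name.csv", "w", newline="")` would store the same rows in a real file.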

- 7. Program 3. Demonstrate the working of the Find-S algorithm for finding the most specific hypothesis using appropriate training samples.

         from google.colab import files
         # Files picked from the local drive are loaded into "uploaded"
         # (a dictionary: file name -> file contents)
         uploaded = files.upload()

         import io            # wraps the uploaded bytes in a file-like object
         import numpy as np   # "np" and "pd" are the usual short names (aliases)
         import pandas as pd

         # header=None: the data file has no row of column names
         file_name = next(iter(uploaded))   # name of the file that was uploaded
         df2 = pd.read_csv(io.BytesIO(uploaded[file_name]), header=None)

         print("\n The Given Training Data Set \n")
         print(df2)   # contents of dataframe df2

         # Display the most general and the most specific hypothesis
         print("\n The most general hypothesis : ['?','?','?','?','?','?']\n")
         print("\n The most specific hypothesis : ['0','0','0','0','0','0']\n")

         # iloc is position based, from 0 to length-1 of the axis
         X = np.array(df2.iloc[:, :-1])   # all rows, every column except the last
         y = np.array(df2.iloc[:, -1])    # all rows, last column (target)
         print(X)   # 2-D matrix
         print(y)   # vector

         m, n = X.shape   # m = number of rows, n = number of attribute columns
         print(m, n)

         print("\n The initial value of hypothesis: ")
         hypothesis = ['0'] * n   # one entry per attribute
         print(hypothesis)

         # Compare the hypothesis with each training example
         print("\n Find S: Finding a Maximally Specific Hypothesis\n")
         print(X)
         for i in range(m):   # move to the next instance
             # Only positive instances update the hypothesis;
             # the comparison ignores letter case and surrounding spaces
             if str(y[i]).strip().lower() == 'yes':
                 for j in range(n):   # n => number of attribute columns
                     if X[i][j] != hypothesis[j]:
                         if hypothesis[j] == '0':
                             hypothesis[j] = X[i][j]   # still empty: copy the value
                         else:
                             hypothesis[j] = '?'       # otherwise generalize to '?'
             print(" the hypothesis {0} for training set{0}: ".format(i + 1), hypothesis)

         print("\n The Maximally Specific Hypothesis for a given Training Examples :\n")
         print(hypothesis)

     Output (recorded from a run that compared the labels with 'Yes' and used a hypothesis of length n-1; because the file stores the labels as 'yes', no row counted as positive and the hypothesis stayed at its initial value):

         Saving target.csv to target.csv

          The Given Training Data Set

                 0     1        2       3     4       5    6
         0  sunny   warm  normal   strong  warm    same  yes
         1  sunny   warm     high  strong  warm    same  yes
         2   rainy  cold     high  strong  warm  change   no
         3  sunny   warm     high  strong  cool  change  yes

          The most general hypothesis : ['?','?','?','?','?','?']

          The most specific hypothesis : ['0','0','0','0','0','0']

         [['sunny ' 'warm' 'normal ' 'strong' 'warm' 'same']
          ['sunny ' 'warm' 'high' 'strong' 'warm' 'same']
          ['rainy' 'cold' 'high' 'strong' 'warm' 'change']
          ['sunny ' 'warm' 'high' 'strong' 'cool' 'change']]
         ['yes' 'yes' 'no' 'yes']
         4 6

          The initial value of hypothesis:
         ['0', '0', '0', '0', '0']

          Find S: Finding a Maximally Specific Hypothesis

         [['sunny ' 'warm' 'normal ' 'strong' 'warm' 'same']
          ['sunny ' 'warm' 'high' 'strong' 'warm' 'same']
          ['rainy' 'cold' 'high' 'strong' 'warm' 'change']
          ['sunny ' 'warm' 'high' 'strong' 'cool' 'change']]
          the hypothesis 1 for training set1: ['0', '0', '0', '0', '0']
          the hypothesis 2 for training set2: ['0', '0', '0', '0', '0']
          the hypothesis 3 for training set3: ['0', '0', '0', '0', '0']
          the hypothesis 4 for training set4: ['0', '0', '0', '0', '0']

          The Maximally Specific Hypothesis for a given Training Examples :

         ['0', '0', '0', '0', '0']

     With the case-insensitive label comparison, the same data should end at the hypothesis ['sunny ', 'warm', '?', 'strong', '?', '?'].
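
For checking results by hand, Find-S can also be written without pandas or Colab. The sketch below is one possible compact version, not the lab's official listing; the function name `find_s` and the decision to strip spaces and lowercase the label are assumptions made here, and the four rows mirror the training table shown above.

```python
def find_s(examples, positive_label="yes"):
    """Return the maximally specific hypothesis covering all positive examples."""
    hypothesis = None  # None plays the role of the all-'0' starting hypothesis
    for *attributes, label in examples:
        if label.strip().lower() != positive_label:
            continue  # Find-S ignores negative examples
        attributes = [a.strip() for a in attributes]
        if hypothesis is None:
            hypothesis = attributes  # first positive example is copied as-is
        else:
            # Keep values that still agree, generalize the rest to '?'
            hypothesis = [h if h == a else "?" for h, a in zip(hypothesis, attributes)]
    return hypothesis


training = [
    ("sunny", "warm", "normal", "strong", "warm", "same", "yes"),
    ("sunny", "warm", "high", "strong", "warm", "same", "yes"),
    ("rainy", "cold", "high", "strong", "warm", "change", "no"),
    ("sunny", "warm", "high", "strong", "cool", "change", "yes"),
]

print(find_s(training))
# ['sunny', 'warm', '?', 'strong', '?', '?']
```

If no positive example is present, this version returns `None` rather than a list of '0' entries.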

- 10. 4. Implement the Candidate Elimination algorithm and display all the consistent hypotheses using appropriate training samples.

          # from google.colab import files
          # uploaded = files.upload()
          import io
          import numpy as np
          import pandas as pd

          # df2 = pd.read_csv(io.BytesIO(uploaded['ws.csv']), header=None)
          df2 = pd.read_csv('/content/ws.csv', header=None)
          print(df2)   # <input, output>

      Output:

                 0     1       2       3     4       5    6
          0  Sunny  Warm    High  Strong  Warm    Same  Yes
          1  Sunny  Warm  Normal  Strong  Warm    Same  Yes
          2  Rainy  Cold    High  Strong  Warm  Change   No
          3  Sunny  Warm    High  Strong  Cool  Change  Yes

      The target column can be selected by label or by position:

          df2.loc[0:, 6]     # label based; df2.loc[0:, -1] throws an error
          df2.iloc[0:, -1]   # position based; does not throw an error

      Both expressions return:

          0    Yes
          1    Yes
          2     No
          3    Yes
          Name: 6, dtype: object

      Split the data into attributes and target:

          # iloc is position based, from 0 to length-1 of the axis
          X = np.array(df2.iloc[0:, :-1])   # rows (start:stop:step), columns (start:stop:step)
          y = np.array(df2.iloc[0:, -1])
          print(X)   # 2-D matrix
          print(y)   # vector

          m, n = X.shape
          print(m, n)

          print("most specific hypothesis: ['0','0','0','0','0','0']")
          print("most general hypothesis: ['?','?','?','?','?','?']")

      Output:

          [['Sunny' 'Warm' 'High' 'Strong' 'Warm' 'Same']
           ['Sunny' 'Warm' 'Normal' 'Strong' 'Warm' 'Same']
           ['Rainy' 'Cold' 'High' 'Strong' 'Warm' 'Change']
           ['Sunny' 'Warm' 'High' 'Strong' 'Cool' 'Change']]
          ['Yes' 'Yes' 'No' 'Yes']
          4 6
          most specific hypothesis: ['0','0','0','0','0','0']
          most general hypothesis: ['?','?','?','?','?','?']

      The algorithm itself:

          def candi(X, y):
              m, n = X.shape
              print("initialization of specific_h and general_h")
              specific_h = ['0'] * n   # model/hypothesis that the algorithm should output
              print(specific_h)
              g_h = ['?' for _ in range(n)]
              general_h = []
              print(g_h, "\n")
              for i in range(m):   # training examples / rows
                  if y[i] == "Yes":
                      for j in range(n):   # attributes / columns
                          if specific_h[j] != X[i][j]:
                              if specific_h[j] == '0':
                                  specific_h = X[i].copy()
                              else:
                                  specific_h[j] = '?'
                      print(specific_h)
                  if y[i] == "No":
                      print("\n -ve training example:", X[i], "\n")
                      for j in range(n):   # attributes / columns
                          if X[i][j] != specific_h[j] and specific_h[j] != '?':
                              g_h = ['?' for _ in range(n)]
                              if specific_h[j] != '0':
                                  g_h[j] = specific_h[j]
                              general_h.append(g_h)
                              print(general_h)
              # Remove general hypotheses that contradict the specific hypothesis.
              # A new list is built because deleting while iterating can skip entries.
              general_h = [g for g in general_h
                           if not any(g[j] != '?' and specific_h[j] == '?' for j in range(n))]
              return specific_h, general_h

          s_final, g_final = candi(X, y)
          print("Final Specific_h:", s_final, sep="\n")
          print("Final General_h:", g_final, sep="\n")

      Output:

          initialization of specific_h and general_h
          ['0', '0', '0', '0', '0', '0']
          ['?', '?', '?', '?', '?', '?']

          ['Sunny' 'Warm' 'High' 'Strong' 'Warm' 'Same']
          ['Sunny' 'Warm' '?' 'Strong' 'Warm' 'Same']

           -ve training example: ['Rainy' 'Cold' 'High' 'Strong' 'Warm' 'Change']

          [['Sunny', '?', '?', '?', '?', '?']]
          [['Sunny', '?', '?', '?', '?', '?'], ['?', 'Warm', '?', '?', '?', '?']]
          [['Sunny', '?', '?', '?', '?', '?'], ['?', 'Warm', '?', '?', '?', '?'], ['?', '?', '?', '?', '?', 'Same']]
          ['Sunny' 'Warm' '?' 'Strong' '?' '?']
          Final Specific_h:
          ['Sunny' 'Warm' '?' 'Strong' '?' '?']
          Final General_h:
          [['Sunny', '?', '?', '?', '?', '?'], ['?', 'Warm', '?', '?', '?', '?']]
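
A quick way to sanity-check the final boundaries is to test each hypothesis against every training example: a consistent hypothesis covers all "Yes" rows and none of the "No" rows. The sketch below is an illustrative check, with `covers` and `is_consistent` as helper names introduced here; the data and hypotheses are copied from the output above.

```python
def covers(hypothesis, instance):
    """A hypothesis covers an instance if every attribute is '?' or matches."""
    return all(h == "?" or h == x for h, x in zip(hypothesis, instance))


def is_consistent(hypothesis, examples):
    """Consistent = covers exactly the positive ('Yes') examples."""
    return all(covers(hypothesis, x) == (label == "Yes") for *x, label in examples)


training = [
    ("Sunny", "Warm", "High", "Strong", "Warm", "Same", "Yes"),
    ("Sunny", "Warm", "Normal", "Strong", "Warm", "Same", "Yes"),
    ("Rainy", "Cold", "High", "Strong", "Warm", "Change", "No"),
    ("Sunny", "Warm", "High", "Strong", "Cool", "Change", "Yes"),
]

specific_h = ["Sunny", "Warm", "?", "Strong", "?", "?"]
general_h = [
    ["Sunny", "?", "?", "?", "?", "?"],
    ["?", "Warm", "?", "?", "?", "?"],
]
# The hypothesis that was removed from the general boundary in the run above.
removed = ["?", "?", "?", "?", "?", "Same"]

print(is_consistent(specific_h, training))
print([is_consistent(g, training) for g in general_h])
print(is_consistent(removed, training))
```

The removed hypothesis fails the check because it does not cover the fourth (positive) example, whose last attribute is "Change".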

- 13. 5. Create a .CSV file for a dataset containing the fields (age, income, student, credit_rating, Buys_computer), where Buys_computer is the target attribute, and implement the ID3 algorithm for it.

          import pandas as pd
          import numpy as np

          dataset = pd.read_csv("/content/drive/MyDrive/id3dataset.csv - Sheet1.csv",
                                names=['age', 'income', 'student', 'credit_rating', 'buys_computer'])

          def entropy(target_col):
              elements, counts = np.unique(target_col, return_counts=True)
              entropy = np.sum([(-counts[i] / np.sum(counts)) * np.log2(counts[i] / np.sum(counts))
                                for i in range(len(elements))])
              return entropy

          def InfoGain(data, split_attribute_name, target_name="buys_computer"):
              # Entropy of the whole dataset
              total_entropy = entropy(data[target_name])
              # Values of the split attribute and their counts
              vals, counts = np.unique(data[split_attribute_name], return_counts=True)
              # Weighted entropy of the subsets produced by the split
              Weighted_Entropy = np.sum([
                  (counts[i] / np.sum(counts)) *
                  entropy(data.where(data[split_attribute_name] == vals[i]).dropna()[target_name])
                  for i in range(len(vals))])
              # Information gain
              Information_Gain = total_entropy - Weighted_Entropy
              return Information_Gain

          def ID3(data, originaldata, features, target_attribute_name="buys_computer",
                  parent_node_class=None):
              # Empty subset: return the most common target value of the original data
              if len(data) == 0:
                  return np.unique(originaldata[target_attribute_name])[
                      np.argmax(np.unique(originaldata[target_attribute_name], return_counts=True)[1])]
              # All rows share one target value: return it
              elif len(np.unique(data[target_attribute_name])) <= 1:
                  return np.unique(data[target_attribute_name])[0]
              # No features left: return the majority class of the parent node
              elif len(features) == 0:
                  return parent_node_class
              else:
                  # Default value for this node: the most common target value of the current data
                  parent_node_class = np.unique(data[target_attribute_name])[
                      np.argmax(np.unique(data[target_attribute_name], return_counts=True)[1])]
                  item_values = [InfoGain(data, feature, target_attribute_name) for feature in features]
                  best_feature_index = np.argmax(item_values)
                  best_feature = features[best_feature_index]
                  tree = {best_feature: {}}
                  # Remove the feature with the best information gain from the feature space
                  features = [i for i in features if i != best_feature]
                  for value in np.unique(data[best_feature]):
                      # Split the dataset along the values of the best feature
                      sub_data = data.where(data[best_feature] == value).dropna()
                      # Recursive call on each sub-dataset
                      subtree = ID3(sub_data, dataset, features, target_attribute_name, parent_node_class)
                      # Attach the subtree under the root node
                      tree[best_feature][value] = subtree
                  return tree

          tree = ID3(dataset, dataset, dataset.columns[:-1])
          print('\nDisplay Tree \n', tree)

      Output:

          Display Tree
          {'age': {'middle_aged': 'yes', 'senior': {'credit_rating': {'excellent': 'no', 'fair': 'yes'}}, 'youth': {'student': {'no': 'no', 'yes': 'yes'}}}}
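
The nested dictionary printed by the program can be used directly to classify a new record by following one branch per attribute. The sketch below assumes the tree shape shown in the output; `predict` and its `default` argument are names introduced here for illustration and are not part of the lab listing.

```python
# Tree copied from the program output above.
tree = {
    "age": {
        "middle_aged": "yes",
        "senior": {"credit_rating": {"excellent": "no", "fair": "yes"}},
        "youth": {"student": {"no": "no", "yes": "yes"}},
    }
}


def predict(tree, sample, default=None):
    """Walk the nested dict until a leaf (a plain class label) is reached."""
    node = tree
    while isinstance(node, dict):
        attribute = next(iter(node))      # each internal node tests one attribute
        branches = node[attribute]
        value = sample.get(attribute)
        if value not in branches:         # unseen or missing attribute value
            return default
        node = branches[value]
    return node


print(predict(tree, {"age": "youth", "student": "yes"}))
print(predict(tree, {"age": "senior", "credit_rating": "excellent"}))
print(predict(tree, {"age": "middle_aged"}))
```

Returning `default` for an unseen value is one simple policy; falling back to the majority class of the training data would be another reasonable choice.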

- 16. 6. Demonstrate the working of an XOR gate using an Artificial Neural Network with the Backpropagation method and the Tanh activation function.

      The listing below uses the sigmoid activation function and its derivative. To use tanh, as the problem statement asks, replace `sigmoid` with `np.tanh` and the derivative with `1 - a**2`, where `a` is the activation output.

          import numpy as np
          # np.random.seed(0)

          def sigmoid(x):
              return 1 / (1 + np.exp(-x))

          def sigmoid_derivative(x):
              # x is the sigmoid output, so the derivative is x * (1 - x)
              return x * (1 - x)

          # Input datasets
          inputs = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
          expected_output = np.array([[0], [1], [1], [0]])

          epochs = 10000
          lr = 0.1
          inputLayerNeurons, hiddenLayerNeurons, outputLayerNeurons = 2, 2, 1

          # Random weights and bias initialization
          hidden_weights = np.random.uniform(size=(inputLayerNeurons, hiddenLayerNeurons))
          hidden_bias = np.random.uniform(size=(1, hiddenLayerNeurons))
          output_weights = np.random.uniform(size=(hiddenLayerNeurons, outputLayerNeurons))
          output_bias = np.random.uniform(size=(1, outputLayerNeurons))

          print("Initial hidden weights: ", end='')
          print(*hidden_weights)
          print("Initial hidden biases: ", end='')
          print(*hidden_bias)
          print("Initial output weights: ", end='')
          print(*output_weights)
          print("Initial output biases: ", end='')
          print(*output_bias)

          # Training algorithm
          for _ in range(epochs):
              # Forward propagation
              hidden_layer_activation = np.dot(inputs, hidden_weights)
              hidden_layer_activation += hidden_bias
              hidden_layer_output = sigmoid(hidden_layer_activation)

              output_layer_activation = np.dot(hidden_layer_output, output_weights)
              output_layer_activation += output_bias
              predicted_output = sigmoid(output_layer_activation)

              # Backpropagation
              error = expected_output - predicted_output
              d_predicted_output = error * sigmoid_derivative(predicted_output)

              error_hidden_layer = d_predicted_output.dot(output_weights.T)
              d_hidden_layer = error_hidden_layer * sigmoid_derivative(hidden_layer_output)

              # Updating weights and biases
              output_weights += hidden_layer_output.T.dot(d_predicted_output) * lr
              output_bias += np.sum(d_predicted_output, axis=0, keepdims=True) * lr
              hidden_weights += inputs.T.dot(d_hidden_layer) * lr
              hidden_bias += np.sum(d_hidden_layer, axis=0, keepdims=True) * lr

          print("Final hidden weights: ", end='')
          print(*hidden_weights)
          print("Final hidden bias: ", end='')
          print(*hidden_bias)
          print("Final output weights: ", end='')
          print(*output_weights)
          print("Final output bias: ", end='')
          print(*output_bias)

          print("\nOutput from neural network after 10,000 epochs: ", end='')
          print(*predicted_output)

      Output:

          Output from neural network after 10,000 epochs: [0.05770383] [0.9470198] [0.9469948] [0.05712647]
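
Since the problem statement asks for the Tanh activation, here is a hedged sketch of the same training loop with tanh in both layers. Several details are assumptions made for this illustration rather than part of the lab listing: four hidden units instead of two, weights drawn from [-1, 1], zero initial biases, a fixed seed, and a recorded mean-squared-error history (`losses`). Whether the outputs land close to 0 and 1 depends on the initialization, so no specific output is claimed.

```python
import numpy as np


def tanh_derivative(activated):
    # Derivative of tanh written in terms of its output a = tanh(z): 1 - a^2
    return 1.0 - activated ** 2


rng = np.random.default_rng(0)  # assumed fixed seed for repeatability

inputs = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
expected_output = np.array([[0], [1], [1], [0]], dtype=float)

hidden_units = 4          # assumption: a slightly wider hidden layer
lr, epochs = 0.1, 10000

hidden_weights = rng.uniform(-1, 1, size=(2, hidden_units))
hidden_bias = np.zeros((1, hidden_units))
output_weights = rng.uniform(-1, 1, size=(hidden_units, 1))
output_bias = np.zeros((1, 1))

losses = []
for _ in range(epochs):
    # Forward propagation with tanh in the hidden and output layers
    hidden_output = np.tanh(inputs @ hidden_weights + hidden_bias)
    predicted_output = np.tanh(hidden_output @ output_weights + output_bias)

    # Backpropagation of the error through both tanh layers
    error = expected_output - predicted_output
    losses.append(float(np.mean(error ** 2)))
    d_predicted_output = error * tanh_derivative(predicted_output)
    d_hidden_layer = (d_predicted_output @ output_weights.T) * tanh_derivative(hidden_output)

    # Gradient-descent updates
    output_weights += hidden_output.T @ d_predicted_output * lr
    output_bias += np.sum(d_predicted_output, axis=0, keepdims=True) * lr
    hidden_weights += inputs.T @ d_hidden_layer * lr
    hidden_bias += np.sum(d_hidden_layer, axis=0, keepdims=True) * lr

print("Loss at first epoch:", losses[0])
print("Loss at last epoch: ", losses[-1])
print("Predictions:", predicted_output.ravel())
```

Printing the first and last loss values is a simple way to confirm that training made progress before reading the predictions.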

- 18. 7. Implement the KNN algorithm to classify the "iris dataset" from Kaggle or another machine learning repository.

          # 1. Import data
          import numpy as np
          from sklearn.datasets import load_iris
          iris = load_iris()
          # print("Feature Names:", iris.feature_names, "Iris Data:", iris.data,
          #       "Target Names:", iris.target_names, "Target:", iris.target)

          # 2. Split the data into training and test sets
          from sklearn.model_selection import train_test_split
          X_train, X_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=.25)
          # neighbors_settings = range(1, 11)
          # for n_neighbors in neighbors_settings:

          # 3. Build the model
          from sklearn.neighbors import KNeighborsClassifier
          clf = KNeighborsClassifier()
          clf.fit(X_train, y_train)

          # 4. Predict the test results and evaluate them
          from sklearn.metrics import confusion_matrix, classification_report, accuracy_score
          y_pred = clf.predict(X_test)
          cm = confusion_matrix(y_test, y_pred)
          print('Confusion matrix is as follows\n', cm)
          print('Accuracy Metrics')
          print(classification_report(y_test, y_pred))
          print(" correct prediction", accuracy_score(y_test, y_pred))
          print(" wrong prediction", (1 - accuracy_score(y_test, y_pred)))

          # 5. Compare the predictions with the labels of the test data
          print("Predicted Data")
          print(clf.predict(X_test))
          prediction = clf.predict(X_test)
          print("Test data :")
          print(y_test)

          # 6. Identify the misclassifications
          diff = prediction - y_test
          print("Result is ")
          print(diff)
          # Count non-zero differences; summing abs(diff) would count a label gap of 2 twice
          print('Total no of samples misclassified =', np.count_nonzero(diff))
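
To see what the k-nearest-neighbor classifier does internally, the sketch below classifies a query point by majority vote among its k closest training points, using only the standard library. The six (petal length, petal width) points are hypothetical values chosen for illustration, not rows taken from the iris dataset, and `knn_predict` is a name introduced here.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Pair every training point's Euclidean distance with its label, nearest first.
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical (petal length, petal width) measurements in cm.
train_points = [(1.4, 0.2), (1.3, 0.2), (1.5, 0.3), (4.5, 1.5), (4.7, 1.4), (4.0, 1.3)]
train_labels = ["setosa", "setosa", "setosa", "versicolor", "versicolor", "versicolor"]

print(knn_predict(train_points, train_labels, (1.6, 0.4)))
print(knn_predict(train_points, train_labels, (4.4, 1.4)))
```

An odd `k` avoids tied votes in a two-class problem; with more classes, ties are still possible and are resolved here by whichever label `Counter` encountered first.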

- 20. 8. Implement the K-means algorithm using a suitable dataset from the Kaggle repository or any other machine learning repository.

          # Importing the libraries
          import numpy as np
          import matplotlib.pyplot as plt
          import pandas as pd

          # Importing the Iris dataset with pandas
          dataset = pd.read_csv('/content/Iris.csv')
          x = dataset.iloc[:, [1, 2, 3, 4]].values   # columns 1-4 hold the four measurements

          # Finding the optimum number of clusters for k-means
          from sklearn.cluster import KMeans
          wcss = []
          for i in range(1, 11):
              kmeans = KMeans(n_clusters=i, init='k-means++', max_iter=300, n_init=10, random_state=0)
              kmeans.fit(x)
              wcss.append(kmeans.inertia_)

          # Plotting the results onto a line graph, allowing us to observe 'the elbow'
          plt.plot(range(1, 11), wcss)
          plt.title('The elbow method')
          plt.xlabel('Number of clusters')
          plt.ylabel('WCSS')   # within-cluster sum of squares
          plt.show()

          # Applying k-means to the dataset with 3 clusters
          kmeans = KMeans(n_clusters=3, init='k-means++', max_iter=300, n_init=10, random_state=0)
          y_kmeans = kmeans.fit_predict(x)

          # Visualizing the clusters on the first two features.
          # Cluster numbers are arbitrary, so the species names in the legend are
          # only a guess at which cluster corresponds to which species.
          plt.scatter(x[y_kmeans == 0, 0], x[y_kmeans == 0, 1], s=100, c='red', label='Iris-setosa')
          plt.scatter(x[y_kmeans == 1, 0], x[y_kmeans == 1, 1], s=100, c='blue', label='Iris-versicolour')
          plt.scatter(x[y_kmeans == 2, 0], x[y_kmeans == 2, 1], s=100, c='green', label='Iris-virginica')

          # Plotting the centroids of the clusters
          plt.scatter(kmeans.cluster_centers_[:, 0], kmeans.cluster_centers_[:, 1],
                      s=100, c='yellow', label='Centroids')
          plt.legend()
          plt.show()
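
The iteration that `KMeans` performs can be sketched in a few lines of plain Python: assign every point to its nearest centroid, recompute each centroid as the mean of its points, and stop when nothing changes. This is a simplified illustration of that basic loop (often called Lloyd's algorithm), not scikit-learn's implementation; the six 2-D points and the two starting centroids are made-up values, and there is no k-means++ initialization here.

```python
import math


def kmeans(points, centroids, max_iter=100):
    """Basic k-means: alternate assignment and centroid update until stable."""
    for _ in range(max_iter):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)

        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:   # converged: assignments can no longer change
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical data: two visibly separated groups of points.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
initial_centroids = [(1.0, 1.0), (8.0, 8.0)]

centroids, clusters = kmeans(points, initial_centroids)
print(centroids)
print([len(c) for c in clusters])
```

The result depends on the starting centroids, which is why the scikit-learn call above uses `init='k-means++'` and `n_init=10` to try several initializations.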