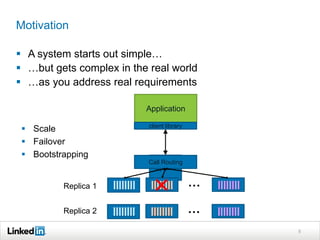

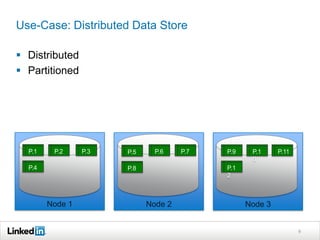

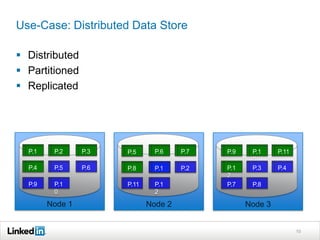

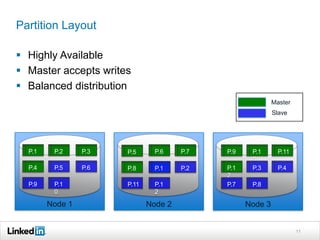

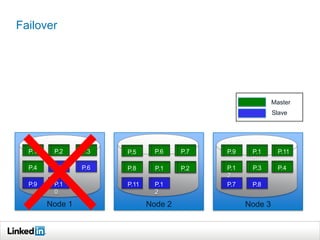

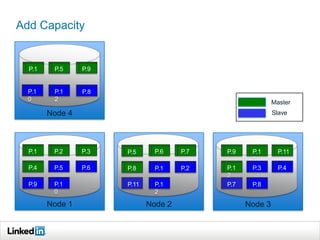

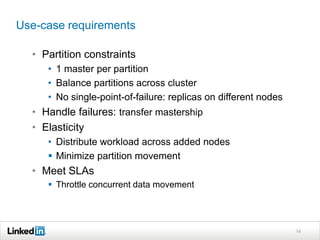

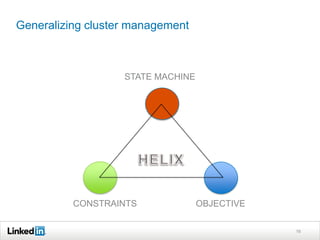

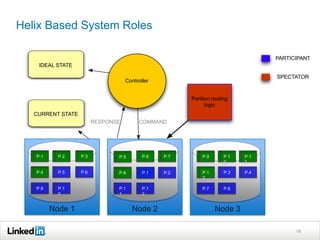

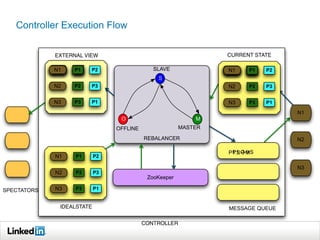

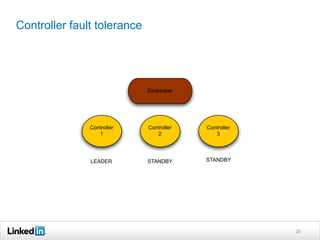

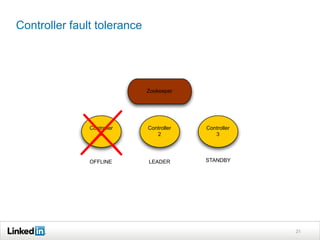

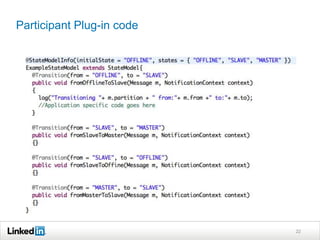

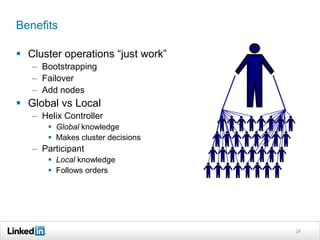

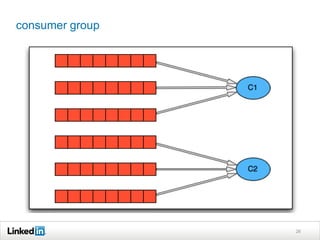

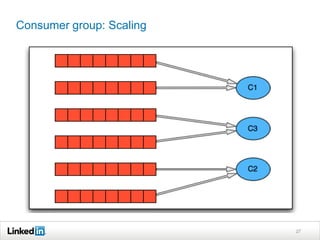

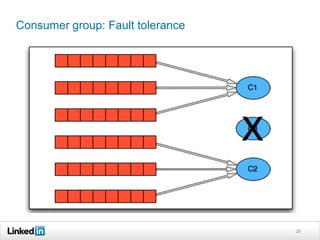

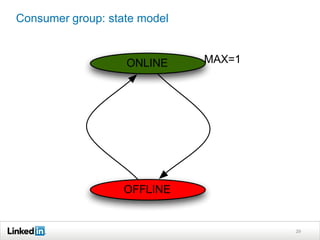

Helix is a cluster management framework from LinkedIn that helps distributed systems handle scaling, failover, and bootstrapping through declarative state models and partitioning. It provides abstractions for these common cluster management problems, which makes distributed systems easier to build. A Helix controller manages the participant nodes and makes global cluster decisions by comparing each resource's ideal state with its current state, and the controller itself is designed to be fault tolerant. At LinkedIn, Helix has been used for systems such as distributed data stores and consumer groups.
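To make the ideal-state versus current-state idea concrete, here is a minimal sketch of one reconciliation pass. It assumes a small master/slave style state model with the states `OFFLINE`, `SLAVE`, and `MASTER`, and it represents both states as a mapping from partition to `{instance: state}`. The names `TRANSITIONS`, `transition_path`, and `plan` are hypothetical and are not Helix's API. The sketch only shows how a controller can turn the difference between the two states into a list of legal state transitions.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Hypothetical state model: the legal single-step transitions for one replica.
# This is an illustrative master/slave style model, not Helix's actual API.
TRANSITIONS: Dict[str, List[str]] = {
    "OFFLINE": ["SLAVE"],
    "SLAVE": ["MASTER", "OFFLINE"],
    "MASTER": ["SLAVE"],
}

# partition -> {instance: state}
Assignment = Dict[str, Dict[str, str]]


def transition_path(src: str, dst: str) -> List[Tuple[str, str]]:
    """Shortest chain of legal transitions from src to dst (BFS over the state model)."""
    if src == dst:
        return []
    prev: Dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        state = queue.popleft()
        for nxt in TRANSITIONS.get(state, []):
            if nxt in prev:
                continue
            prev[nxt] = state
            if nxt == dst:
                # Walk back from dst to src to rebuild the path in order.
                path = []
                cur = dst
                while prev[cur] is not None:
                    path.append((prev[cur], cur))
                    cur = prev[cur]
                return list(reversed(path))
            queue.append(nxt)
    raise ValueError(f"no legal path from {src} to {dst}")


def plan(ideal: Assignment, current: Assignment) -> List[Tuple[str, str, str, str]]:
    """List (partition, instance, from_state, to_state) steps that move current toward ideal."""
    steps = []
    for partition in sorted(set(ideal) | set(current)):
        want = ideal.get(partition, {})
        have = current.get(partition, {})
        for instance in sorted(set(want) | set(have)):
            # Assumption: a replica missing from either map is treated as OFFLINE.
            src = have.get(instance, "OFFLINE")
            dst = want.get(instance, "OFFLINE")
            for a, b in transition_path(src, dst):
                steps.append((partition, instance, a, b))
    return steps


if __name__ == "__main__":
    ideal = {"db_0": {"node1": "MASTER", "node2": "SLAVE"}}
    current = {"db_0": {"node1": "SLAVE", "node3": "MASTER"}}
    for step in plan(ideal, current):
        print(step)
    # ('db_0', 'node1', 'SLAVE', 'MASTER')
    # ('db_0', 'node2', 'OFFLINE', 'SLAVE')
    # ('db_0', 'node3', 'MASTER', 'SLAVE')
    # ('db_0', 'node3', 'SLAVE', 'OFFLINE')
```

In this example, `node3` cannot go straight from `MASTER` to `OFFLINE`, so the planner routes it through `SLAVE`, as the state model requires. A production controller would also need to order these steps across instances, for example demoting the old master before promoting a new one, and to enforce constraints such as a single master per partition. This sketch deliberately leaves those concerns out.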