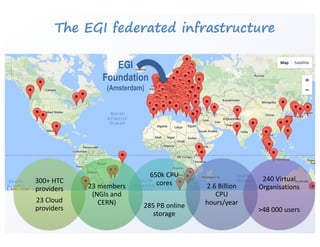

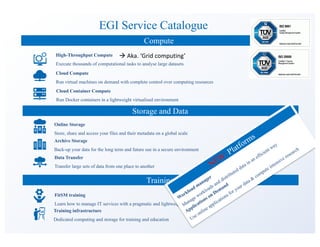

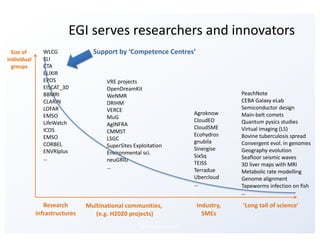

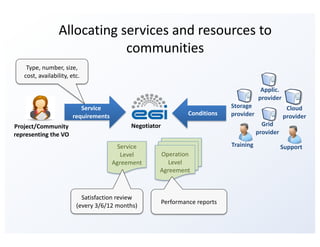

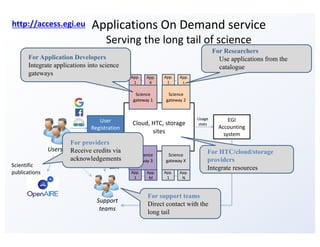

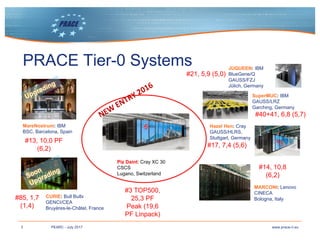

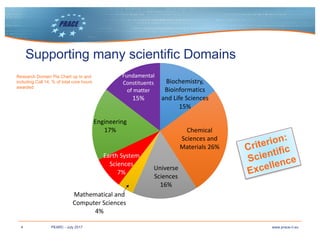

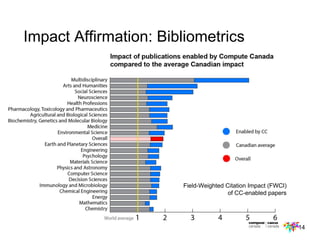

The panel discussed opportunities for, and challenges to, collaboration among national and international research computing organizations. Panelists represented Compute Canada, PRACE, EGI, and XSEDE. They described their organizations' user communities, which span many scientific domains, and practices such as resource allocation and user support that could benefit from being shared across organizations. Major challenges include securing long-term funding and balancing local needs against international cooperation. The panel saw potential for collaboration on operational practices, on shared resources, and on challenges that no single organization can solve alone.