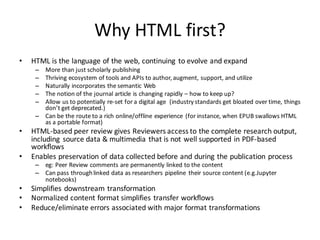

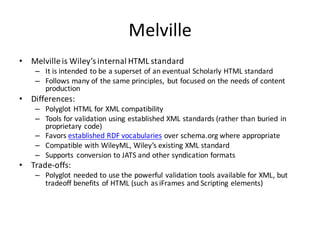

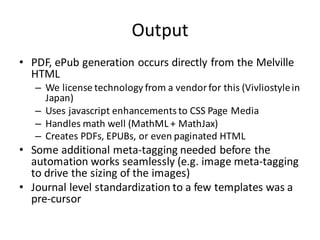

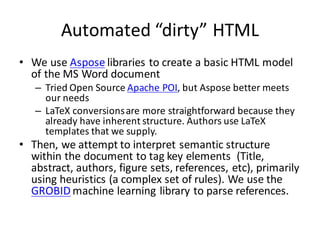

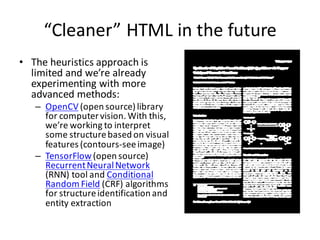

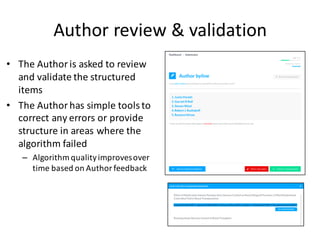

The document outlines the advantages of adopting an HTML-first approach for journal workflows, emphasizing the evolution of HTML as a standard for digital publishing. It details Wiley's internal 'Melville' standard, which streamlines content production, facilitates the integration of multimedia and data in peer review, and addresses format transformations. It also discusses validation and enhancement processes that draw on author feedback and machine learning to improve the quality of published articles.