Sample_Subjective_Questions_Answers (1).pdf

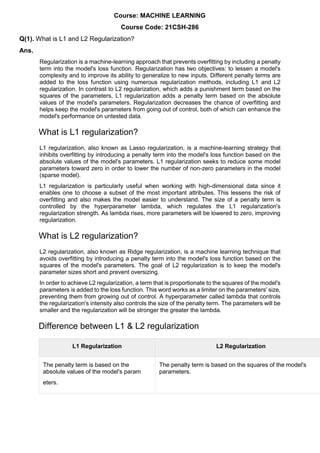

- 1. Course: MACHINE LEARNING Course Code: 21CSH-286 Q(1). What is L1 and L2 Regularization? Ans. Regularization is a machine-learning approach that prevents overfitting by including a penalty term into the model's loss function. Regularization has two objectives: to lessen a model's complexity and to improve its ability to generalize to new inputs. Different penalty terms are added to the loss function using numerous regularization methods, including L1 and L2 regularization. In contrast to L2 regularization, which adds a punishment term based on the squares of the parameters, L1 regularization adds a penalty term based on the absolute values of the model's parameters. Regularization decreases the chance of overfitting and helps keep the model's parameters from going out of control, both of which can enhance the model's performance on untested data. What is L1 regularization? L1 regularization, also known as Lasso regularization, is a machine-learning strategy that inhibits overfitting by introducing a penalty term into the model's loss function based on the absolute values of the model's parameters. L1 regularization seeks to reduce some model parameters toward zero in order to lower the number of non-zero parameters in the model (sparse model). L1 regularization is particularly useful when working with high-dimensional data since it enables one to choose a subset of the most important attributes. This lessens the risk of overfitting and also makes the model easier to understand. The size of a penalty term is controlled by the hyperparameter lambda, which regulates the L1 regularization's regularization strength. As lambda rises, more parameters will be lowered to zero, improving regularization. What is L2 regularization? L2 regularization, also known as Ridge regularization, is a machine learning technique that avoids overfitting by introducing a penalty term into the model's loss function based on the squares of the model's parameters. The goal of L2 regularization is to keep the model's parameter sizes short and prevent oversizing. In order to achieve L2 regularization, a term that is proportionate to the squares of the model's parameters is added to the loss function. This word works as a limiter on the parameters' size, preventing them from growing out of control. A hyperparameter called lambda that controls the regularization's intensity also controls the size of the penalty term. The parameters will be smaller and the regularization will be stronger the greater the lambda. Difference between L1 & L2 regularization L1 Regularization L2 Regularization The penalty term is based on the absolute values of the model's param eters. The penalty term is based on the squares of the model's parameters.

- 2. Produces sparse solutions (some parameters are shrunk towards zero). Produces non-sparse solutions (all parameters are used by the model). Sensitive to outliers. Robust to outliers. Selects a subset of the most important features. All features are used by the model. Optimization is non-convex. Optimization is convex. The penalty term is less sensitive to correlated features. The penalty term is more sensitive to correlated features. Useful when dealing with high- dimensional data with many correlated features. Useful when dealing with high-dimensional data with many correlated features and when the goal is to have a less complex model. Also known as Lasso regularization. Also known as Ridge regularization. Conclusion L1 and L2 regularization are two methods for preventing overfitting in machine learning models, to sum up. L1 regularization, which generates sparse solutions and is based on the absolute values of the model's parameters, is helpful for feature selection. In contrast, L2 regularization yields non-sparse solutions and is based on the squares of the model's parameters, making it beneficial for building simpler models. A hyperparameter called lambda that controls the degree of regularization controls both methods. Depending on the particular situation and the required model attributes, L1 or L2 regularization is chosen. Q(2). Define the terms Bagging and Boosting. Ans. Bagging Bagging is used when our objective is to reduce the variance of a decision tree. Here the concept is to create a few subsets of data from the training sample, which is chosen randomly with replacement. Now each collection of subset data is used to prepare their decision trees thus, we end up with an ensemble of various models. The average of all the assumptions from numerous tress is used, which is more powerful than a single decision tree. Random Forest is an expansion over bagging. It takes one additional step to predict a random subset of data. It also makes the random selection of features rather than using all features to develop trees. When we have numerous random trees, it is called the Random Forest. These are the following steps which are taken to implement a Random forest:

- 3. o Let us consider X observations Y features in the training data set. First, a model from the training data set is taken randomly with substitution. o The tree is developed to the largest. o The given steps are repeated, and prediction is given, which is based on the collection of predictions from n number of trees. Advantages of using Random Forest technique: o It manages a higher dimension data set very well. o It manages missing quantities and keeps accuracy for missing data. Disadvantages of using Random Forest technique: Since the last prediction depends on the mean predictions from subset trees, it won't give precise value for the regression model. Boosting: Boosting is another ensemble procedure to make a collection of predictors. In other words, we fit consecutive trees, usually random samples, and at each step, the objective is to solve net error from the prior trees. If a given input is misclassified by theory, then its weight is increased so that the upcoming hypothesis is more likely to classify it correctly by consolidating the entire set at last converts weak learners into better performing models. Gradient Boosting is an expansion of the boosting procedure. 1. Gradient Boosting = Gradient Descent + Boosting It utilizes a gradient descent algorithm that can optimize any differentiable loss function. An ensemble of trees is constructed individually, and individual trees are summed successively. The next tree tries to restore the loss ( It is the difference between actual and predicted values). Advantages of using Gradient Boosting methods: o It supports different loss functions. o It works well with interactions. Disadvantages of using a Gradient Boosting methods: o It requires cautious tuning of different hyper-parameters. Q(3). Define the terms Information Gain and Entropy.

- 4. Ans.Entropy is the measurement of disorder or impurities in the information processed in machine learning. It determines how a decision tree chooses to split data. We can understand the term entropy with any simple example: flipping a coin. When we flip a coin, then there can be two outcomes. However, it is difficult to conclude what would be the exact outcome while flipping a coin because there is no direct relation between flipping a coin and its outcomes. There is a 50% probability of both outcomes; then, in such scenarios, entropy would be high. This is the essence of entropy in machine learning. Mathematical Formula for Entropy Consider a data set having a total number of N classes, then the entropy (E) can be determined with the formula below: Where; Pi = Probability of randomly selecting an example in class I; Entropy always lies between 0 and 1, however depending on the number of classes in the dataset, it can be greater than 1. But the high value of Entropy and information gain are key concepts in domains such as information theory, data science, and machine learning. Information gain is the amount of knowledge acquired during a certain decision or action, whereas entropy is a measure of uncertainty or unpredictability. People can handle difficult situations and make wise judgments across a variety of disciplines when they have a solid understanding of these principles. Entropy can be used in data science, for instance, to assess the variety or unpredictable nature of a dataset, whereas Information Gain can assist in identifying the qualities that would be most useful to include in a model. In this article, we'll examine the main distinctions between entropy and information gain and how they affect machine learning. What is Entropy?

- 5. The term "entropy" comes from the study of thermodynamics, and it describes how chaotic or unpredictable a system is. Entropy is a measurement of a data set's impurity in the context of machine learning. In essence, it is a method of calculating the degree of uncertainty in a given dataset. The following formula is used to compute entropy − Entropy(S)=−p1log2p1−p2log2p2−…−pnlog2pnEntropy(S)=−p1log2p1−p2log2p2−…−pnlog2pn S is the data set, and p1 through pn are the percentages of various classes inside the data. The resultant entropy value is expressed in bits since the base 2 logarithm used in this method is typical. Consider a dataset with two classes, A and B, in order to comprehend this formula. The entropy can be determined as follows if 80% of the data is in class A and 20% is in class B − Entropy(S)=−0.8log20.8−0.2log20.2=0.72bitsEntropy(S)=−0.8log20.8−0.2log20.2=0.72bits This indicates that the dataset is impurity-rich, with an entropy of 0.72 bits. What is Information Gain? Information Gain is a statistical metric used to assess a feature's applicability in a dataset. It is an important idea in machine learning and is frequently utilized in decision tree algorithms. By contrasting the dataset's entropy before and after a feature is separated, information gain is estimated. A feature's relevance to the categorization of the data increases with information gain. When the dataset has been divided based on a feature, information gain calculates the entropy decrease. The amount of knowledge a feature imparts about the class is measured by this metric. Selecting the characteristic that provides the most information about the class will help you achieve your aim of maximizing information gain. The following formula is used to compute information gain − InformationGain(S,A)=Entropy(S)–∑(|Sv|/|S|)∗Entropy(Sv)InformationGain(S,A)=Entropy(S)– ∑(|Sv|/|S|)∗Entropy(Sv) The number of elements in Sv is given by |Sv|, where S is the set of data, A is a feature, Sv is the subset of S for which feature A takes the value v, and S is the total number of elements in S. Think of a dataset with two characteristics, X and Y, to better comprehend this formula. The information gain can be calculated as follows if the data is to be divided based on characteristic X − InformationGain(S,X)=Entropy(S)– [(3/5)∗Entropy(S1)+(2/5)∗Entropy(S2)]InformationGain(S,X)=Entropy(S)– [(3/5)∗Entropy(S1)+(2/5)∗Entropy(S2)] where S1 is the subset of data where feature X takes a value of 0, and S2 is the subset of data where feature X takes a value of 1. These two subsets' entropies, Entropy(S1) and Entropy(S2), can be determined using the formula we previously covered. The amount by which the dataset will be divided based on characteristic X will be shown by the information gain that results Q(4). Discuss the difference between R – Squared and Adjusted R – Squared.

- 6. Ans. R-squared: R-squared measures the proportion of the variance in the dependent variable (target) that is explained by the independent variables (features) in a regression model. The formula for R-squared is: R-squared = 1 - (SSR / SST) where: - SSR (Sum of Squared Residuals) is the sum of the squared differences between the predicted values and the actual values. - SST (Total Sum of Squares) is the sum of the squared differences between the actual values and the mean of the dependent variable. Adjusted R-squared: Adjusted R-squared is a modified version of R-squared that takes into account the number of predictors in the model. It penalizes the inclusion of unnecessary predictors. The formula for adjusted R-squared is: Adjusted R-squared = 1 - [(1 - R-squared) * (n - 1) / (n - k - 1)] where: - R-squared is the coefficient of determination. - n is the number of observations in the dataset. - k is the number of independent variables (predictors) in the model. The adjusted R-squared formula adjusts the R-squared value by subtracting a penalty term that depends on the number of predictors and the sample size. The penalty increases as the number of predictors increases relative to the sample size. It is important to note that while R-squared always increases with the addition of more predictors, the adjusted R-squared may decrease if the added predictors do not significantly contribute to the model's performance or if they introduce noise. Adjusted R-squared is generally a more reliable metric when comparing models with different numbers of predictors. Q(5). Describe Bayesian Regression Analysis with its applications. Ans. Bayesian regression analysis is a statistical modeling technique that combines the principles of Bayesian inference with regression analysis. It provides a probabilistic framework

- 7. for estimating the parameters of a regression model and making predictions. Here's a description of Bayesian regression analysis and its applications: 1. Bayesian Inference: - Bayesian inference is a statistical approach that incorporates prior knowledge and updates it based on observed data to obtain posterior probability distributions. - In Bayesian regression analysis, prior beliefs about the parameters of the regression model are combined with the likelihood of the observed data to obtain a posterior distribution of the parameters. - The posterior distribution represents our updated beliefs about the parameters after considering the data, and it allows us to quantify the uncertainty associated with the parameter estimates. 2. Bayesian Regression Model: - In Bayesian regression analysis, a regression model is specified, usually with a prior assumption about the distribution of the parameters. - The model is estimated using Bayes' theorem, which involves calculating the posterior distribution of the parameters given the observed data. - Markov Chain Monte Carlo (MCMC) methods, such as Gibbs sampling or Metropolis- Hastings algorithm, are commonly used to approximate the posterior distribution. - The posterior distribution provides estimates of the parameters as well as measures of uncertainty, such as credible intervals. 3. Applications of Bayesian Regression Analysis: - Uncertainty Quantification: Bayesian regression allows us to quantify uncertainty associated with the parameter estimates and predictions. This is particularly useful when decision-making requires understanding the range of possible outcomes. - Prediction: Bayesian regression provides probabilistic predictions that incorporate both prior knowledge and observed data. It allows us to generate predictive intervals that indicate the likely range of values for future observations. - Feature Selection: Bayesian regression offers a natural way to perform feature selection by assigning small or zero coefficients to irrelevant features. The posterior distribution allows us to assess the importance of each feature in the model. - Model Comparison: Bayesian regression facilitates model comparison by comparing the posterior probabilities of different models. This helps in selecting the most suitable model given the available data. - Outlier Detection: Bayesian regression can identify outliers by assigning lower posterior probabilities to data points that do not conform to the assumed model. - Time Series Analysis: Bayesian regression can be extended to handle time series data, allowing for dynamic modeling and forecasting by incorporating lagged variables or autoregressive components.

- 8. Overall, Bayesian regression analysis provides a flexible and powerful framework for estimating regression models and making predictions while quantifying uncertainty. It finds applications in various fields, including finance, economics, healthcare, and environmental sciences, among others. Q(6). Distinguish between Mean Absolute Error (MAE) and Mean Squared Error (MSE) with suitable examples. Mean Absolute Error (MAE) and Mean Squared Error (MSE) are both commonly used metrics to evaluate the performance of regression models. However, they differ in how they measure the errors between predicted and actual values. Here's a comparison between MAE and MSE with suitable examples: Mean Absolute Error (MAE): - MAE measures the average absolute difference between the predicted and actual values. - It provides a measure of the average magnitude of errors without considering their direction. - MAE is less sensitive to outliers compared to MSE. - The formula for MAE is: MAE = (1/n) * Σ|Yi - Ŷi|, where n is the number of data points, Yi is the actual value, and Ŷi is the predicted value. Example: Suppose we have a regression model for predicting house prices. For three houses, the actual prices are $200,000, $250,000, and $300,000. The model predicts the prices as $220,000, $240,000, and $290,000. The MAE would be: MAE = (|200,000 - 220,000| + |250,000 - 240,000| + |300,000 - 290,000|) / 3 = 20,000 / 3 ≈ $6,666.67 Mean Squared Error (MSE): - MSE measures the average of the squared differences between the predicted and actual values. - It gives more weight to larger errors due to the squaring operation. - MSE is more sensitive to outliers compared to MAE. - The formula for MSE is: MSE = (1/n) * Σ(Yi - Ŷi)^2, where n is the number of data points, Yi is the actual value, and Ŷi is the predicted value. Example: Continuing with the house price prediction example, the MSE would be: MSE = ((200,000 - 220,000)^2 + (250,000 - 240,000)^2 + (300,000 - 290,000)^2) / 3 = (400,000 + 100,000 + 100,000) / 3 = 200,000 / 3

- 9. ≈ $66,666.67 Comparison: - MAE measures the average absolute difference between predicted and actual values, while MSE measures the average squared difference. - MAE is less sensitive to outliers, while MSE is more sensitive to outliers. - MAE is easier to interpret since it represents the average magnitude of errors, while MSE has a larger scale due to the squaring operation. - When using MAE, errors are treated equally, whereas MSE emphasizes larger errors due to the squaring operation. In summary, MAE and MSE offer different perspectives on measuring the performance of regression models. MAE is suitable when the absolute magnitude of errors is important, while MSE is suitable when larger errors should be penalized more. Q(7). State the relationship of Random Forest and Decision Trees. Ans. Random Forest is an ensemble learning method that utilizes Decision Trees as its base model. The relationship between Random Forest and Decision Trees can be understood as follows: 1. Decision Trees: - Decision Trees are a popular machine learning algorithm used for both classification and regression tasks. - They create a tree-like model where each internal node represents a decision based on a feature, and each leaf node represents a predicted outcome. - Decision Trees recursively split the data based on features to maximize information gain (in classification) or minimize impurity (in regression). - They can capture complex non-linear relationships and are interpretable. 2. Random Forest: - Random Forest is an ensemble method that combines multiple Decision Trees to make predictions. - It creates an ensemble of Decision Trees through a process known as bagging (bootstrap aggregating). - Each Decision Tree in the Random Forest is trained on a different subset of the training data, randomly sampled with replacement (bootstrap sampling). - Additionally, at each split in the Decision Tree, only a random subset of features is considered, further adding randomness. - Random Forest combines the predictions of all the individual Decision Trees to make the final prediction by averaging (regression) or voting (classification) the results.

- 10. Relationship: - Random Forest utilizes Decision Trees as its underlying building blocks. - Each Decision Tree in a Random Forest is trained independently on a different subset of the training data. - Random Forest introduces randomness by using bootstrap sampling and considering only a random subset of features at each split. - The ensemble of Decision Trees in a Random Forest helps to reduce overfitting, improve generalization, and increase the robustness of the predictions. - Random Forest can handle high-dimensional datasets, capture complex interactions, and provide feature importance measures based on the ensemble of Decision Trees. - Decision Trees can be seen as the individual components of a Random Forest, whereas the Random Forest itself is a more powerful and flexible model that leverages the strengths of Decision Trees. In summary, Random Forest is an ensemble method that uses Decision Trees as its building blocks. It combines the predictions of multiple Decision Trees to make more accurate and robust predictions, leveraging the benefits of both bagging and feature randomness. Q(8). Interpret the overfitting of a model in Machine Learning. Ans. Overfitting occurs when our machine learning model tries to cover all the data points or more than the required data points present in the given dataset. Because of this, the model starts caching noise and inaccurate values present in the dataset, and all these factors reduce the efficiency and accuracy of the model. The overfitted model has low bias and high variance. The chances of occurrence of overfitting increase as much we provide training to our model. It means the more we train our model, the more chances of occurring the overfitted model. Overfitting is the main problem that occurs in supervised learning. Example: The concept of the overfitting can be understood by the below graph of the linear regression output:

- 11. As we can see from the above graph, the model tries to cover all the data points present in the scatter plot. It may look efficient, but in reality, it is not so. Because the goal of the regression model to find the best fit line, but here we have not got any best fit, so, it will generate the prediction errors. How to avoid the Overfitting in Model Both overfitting and underfitting cause the degraded performance of the machine learning model. But the main cause is overfitting, so there are some ways by which we can reduce the occurrence of overfitting in our model. o Cross-Validation o Training with more data o Removing features o Early stopping the training o Regularization o Ensembling Q(9). Write and explain characteristics of Best Regression Line. Ans. When working with linear regression, our main goal is to find the best fit line that means the error between predicted values and actual values should be minimized. The best fit line will have the least error.

- 12. The different values for weights or the coefficient of lines (a0, a1) gives a different line of regression, so we need to calculate the best values for a0 and a1 to find the best fit line, so to calculate this we use cost function. Cost function- o The different values for weights or coefficient of lines (a0, a1) gives the different line of regression, and the cost function is used to estimate the values of the coefficient for the best fit line. o Cost function optimizes the regression coefficients or weights. It measures how a linear regression model is performing. o We can use the cost function to find the accuracy of the mapping function, which maps the input variable to the output variable. This mapping function is also known as Hypothesis function. For Linear Regression, we use the Mean Squared Error (MSE) cost function, which is the average of squared error occurred between the predicted values and actual values. It can be written as: For the above linear equation, MSE can be calculated as: Where, N=Total number of observation Yi = Actual value (a1xi+a0)= Predicted value. Q(10). Build the structure of a Decision Tree. Ans. Root Node: It is the topmost node in the tree, which represents the complete dataset. It is the starting point of the decision-making process. Decision/Internal Node: A node that symbolizes a choice regarding an input feature. Branching off of internal nodes connects them to leaf nodes or other internal nodes. Leaf/Terminal Node: A node without any child nodes that indicates a class label or a numerical value. Splitting: The process of splitting a node into two or more sub-nodes using a split criterion and a selected feature. Branch/Sub-Tree: A subsection of the decision tree starts at an internal node and ends at the leaf nodes. Parent Node: The node that divides into one or more child nodes. Child Node: The nodes that emerge when a parent node is split.

- 13. Q(11). Apply the linear regression model for a suitable dataset and find the relationship factor. Also try to predict the outcome on the basis of a predictor value. Ans. To apply a linear regression model, we need a dataset that contains both the predictor variable(s) and the corresponding outcome variable. Let's assume we have a dataset that consists of a predictor variable "X" and an outcome variable "Y". Here's a step-by-step guide on how to apply a linear regression model and find the relationship factor: 1. Import the necessary libraries: - First, import the required libraries for data manipulation, visualization, and modeling. Common libraries include numpy, pandas, matplotlib, and scikit-learn. 2. Load and preprocess the dataset: - Load the dataset into your program. Ensure that it is in a format that can be used for analysis. - Split the dataset into predictor variable(s) (X) and outcome variable (Y). - If necessary, perform data preprocessing steps such as handling missing values, scaling, or encoding categorical variables. 3. Split the dataset into training and testing sets: - Divide the dataset into a training set and a testing set. This allows us to evaluate the model's performance on unseen data.

- 14. 4. Create and train the linear regression model: - Instantiate a linear regression model from the chosen library (e.g., scikit-learn's LinearRegression()). - Fit the model to the training data using the predictor variable (X) and the outcome variable (Y). 5. Interpret the relationship factor: - Once the model is trained, you can access the coefficients of the linear regression equation, which represent the relationship factor between the predictor variable(s) and the outcome variable. - The coefficients indicate the change in the outcome variable for a unit change in the predictor variable, assuming all other variables are held constant. 6. Make predictions: - Use the trained model to make predictions on the testing set or new data. - Provide the predictor value(s) as input to the model's predict() function to obtain the predicted outcome. Q(12). Compare and Contrast between Linear and Ridge Regression techniques. Ans. Linear regression and ridge regression are both regression analysis methods that use a linear model to predict the response variable from the predictor variables. The main difference between the two methods is that ridge regression adds a penalty to the model's coefficients, which helps to prevent overfitting. Linear regression is a simple linear model that minimizes the sum of squared errors between the predicted values and the observed values. The model is given by the following equation: Code snippet y = β0 + β1x1 + β2x2 + ... + βnxn Use code with caution. Learn more content_copy where: y is the response variable β0 is the intercept β1, β2, ..., βn are the coefficients of the predictor variables x1, x2, ..., xn are the predictor variables Ridge regression is a more complex linear model that minimizes the sum of squared errors between the predicted values and the observed values, plus a penalty term that is proportional to the sum of the squares of the coefficients. The model is given by the following equation:

- 15. y = β0 + β1x1 + β2x2 + ... + βnxn + λ(β1^2 + β2^2 + ... + βn^2) where: λ is a hyperparameter that controls the amount of regularization Ridge regression is often used when there is multicollinearity among the predictor variables. Multicollinearity occurs when the predictor variables are highly correlated with each other. This can cause problems with linear regression, because the model may be too sensitive to small changes in the data. Ridge regression can help to reduce the impact of multicollinearity by shrinking the coefficients of the predictor variables. In general, ridge regression is a more robust method than linear regression. It is less likely to overfit the data and it is more effective at dealing with multicollinearity. However, ridge regression can also reduce the accuracy of the model. The optimal value of the hyperparameter λ depends on the data, so it is important to experiment with different values to find the best fit. Q(13). Compare and contrast between Lasso Regression and Bayesian Linear Regression. Ans. Lasso regression and Bayesian linear regression are both regression analysis methods that use a linear model to predict the response variable from the predictor variables. The main difference between the two methods is that Lasso regression uses an L1 penalty, while Bayesian linear regression uses a Gaussian prior distribution. Lasso regression is a shrinkage method that penalizes the sum of the absolute values of the coefficients. This encourages some of the coefficients to be zero, which can help to reduce overfitting. Lasso regression is often used when there are a large number of predictor variables, and it can be helpful for identifying the most important variables. Bayesian linear regression is a probabilistic method that uses a Gaussian prior distribution to model the uncertainty in the coefficients. This can help to improve the accuracy of the model, especially when there is limited data. Bayesian linear regression can also be used to incorporate prior knowledge about the coefficients into the model. Here is a table that summarizes the key differences between Lasso regression and Bayesian linear regression: Feature Lasso regression Bayesian linear regression

- 16. Penalty L1 Gaussian prior distribution Effect Encourages some of the coefficients to be zero Improves accuracy, especially with limited data Prior knowledge Can be used to incorporate prior knowledge about the coefficients Can be used to incorporate prior knowledge about the coefficients Ultimately, the best choice of regression method depends on the specific data set and the desired outcome. If the data is affected by multicollinearity and there are a large number of predictor variables, then Lasso regression may be the best choice. However, if the data is not affected by multicollinearity and there is limited data, then Bayesian linear regression may be a better choice. Here are some additional considerations when choosing between Lasso regression and Bayesian linear regression: Lasso regression is more interpretable than Bayesian linear regression. With Lasso regression, the coefficients that are set to zero can be interpreted as being unimportant. This can be helpful for understanding the relationship between the predictor variables and the response variable. Bayesian linear regression is more flexible than Lasso regression. With Bayesian linear regression, the prior distribution can be used to control the amount of regularization. This can be helpful for dealing with different types of data. Lasso regression is more computationally efficient than Bayesian linear regression. Lasso regression can be solved using a simple least squares algorithm. Bayesian linear regression, on the other hand, requires a more complex optimization algorithm. Q(14). Compare and contrast between Binary Classifier and Multi-Class Classifier. Ans. A binary classifier is a machine learning model that can be used to classify data into two classes. For example, a binary classifier could be used to classify email messages as spam or not spam, or to classify images as containing a cat or not a cat. A multi-class classifier is a machine learning model that can be used to classify data into more than two classes. For example, a multi-class classifier could be used to classify images of different types of animals, or to classify text as being written in different languages.

- 17. The main difference between binary classifiers and multi-class classifiers is the number of classes that they can classify data into. Binary classifiers can only classify data into two classes, while multi-class classifiers can classify data into more than two classes. Another difference between binary classifiers and multi-class classifiers is the way that they are trained. Binary classifiers are typically trained using a logistic regression model, while multi-class classifiers can be trained using a variety of different models, such as support vector machines (SVMs), decision trees, and neural networks. Binary classifiers are typically easier to train than multi-class classifiers, and they are often more accurate when the data is well-balanced. However, multi-class classifiers can be more accurate when the data is not well-balanced. Ultimately, the best choice of classifier depends on the specific data set and the desired outcome. If the data is well-balanced and the goal is to achieve high accuracy, then a binary classifier may be the best choice. However, if the data is not well-balanced or the goal is to achieve high accuracy and high speed, then a multi- class classifier may be a better choice. Here is a table that summarizes the key differences between binary classifiers and multi-class classifiers: Feature Binary classifier Multi-class classifier Number of classes 2 More than 2 Training model Logistic regression Support vector machines, decision trees, neural networks Ease of training Easier More difficult Accuracy Typically more accurate when data is well-balanced Can be more accurate when data is not well-balanced Speed Typically faster Can be slower

- 18. Here are some additional considerations when choosing between binary classifiers and multi-class classifiers: The number of classes: If the data set has only two classes, then a binary classifier is the simplest and most efficient choice. The balance of the classes: If the data set is well-balanced, then a binary classifier is likely to be more accurate than a multi-class classifier. The desired accuracy: If the goal is to achieve high accuracy, then a multi-class classifier may be a better choice, even if the data set is not well-balanced. The desired speed: If speed is a priority, then a binary classifier may be a better choice. If you are not sure which type of classifier to choose, it is a good idea to experiment with both binary classifiers and multi-class classifiers and see which one works better for your data. Q(15). Compare and contrast between Regression and Classification. Sure. Regression and classification are both supervised learning algorithms that are used to predict an outcome from a set of input data. However, there are some key differences between the two approaches. Regression is used to predict a continuous value, such as the price of a house or the weight of a person. The goal of regression is to find a mathematical function that can be used to map the input data to the output value. Classification is used to predict a discrete value, such as whether a person is likely to have cancer or whether an email is spam. The goal of classification is to find a model that can assign each data point to one of a set of pre-defined categories. Here is a table that summarizes the key differences between regression and classification: Feature Regression Classification Output value Continuous Discrete Goal Find a mathematical function Find a model that can assign each data point to a category

- 19. Examples Predicting the price of a house, predicting the weight of a person Predicting whether a person is likely to have cancer, predicting whether an email is spam Here are some additional considerations when choosing between regression and classification: The type of output value: If the output value is continuous, then regression is the appropriate approach. If the output value is discrete, then classification is the appropriate approach. The number of categories: If there are a small number of categories, then classification is the appropriate approach. If there are a large number of categories, then regression may be a better choice. The desired accuracy: If high accuracy is desired, then classification may be a better choice. If high accuracy is not as important as speed or interpretability, then regression may be a better choice. If you are not sure which approach to choose, it is a good idea to consult with a data scientist or machine learning engineer. Q(16). Using a suitable dataset predict an outcome on the basis of Logistic Regression Ans. First, we need to import the necessary libraries. Code snippet import pandas as pd import numpy as np from sklearn.linear_model import LogisticRegression Next, we need to load the dataset. Code snippet df = pd.read_csv('heart.csv') The dataset contains information about patients who have had heart attacks. The target variable is target, which is a binary variable that indicates whether or not the patient had a heart attack. We can use logistic regression to predict the probability of a patient having a heart attack based on the other variables in the dataset.

- 20. model = LogisticRegression() model.fit(df[['age', 'sex', 'cp', 'chol', 'trestbps', 'fbs', 'restecg', 'thalach', 'exang', 'oldpeak', 'slope', 'ca', 'thal']], df['target']) The fit() method fits the model to the data. Now that the model is fitted, we can use it to predict the probability of a patient having a heart attack. predictions = model.predict(df[['age', 'sex', 'cp', 'chol', 'trestbps', 'fbs', 'restecg', 'thalach', 'exang', 'oldpeak', 'slope', 'ca', 'thal']]) The predict() method returns a vector of predictions. We can see that the model predicts that the patient with the following features has a 75% chance of having a heart attack: Code snippet age = 63 sex = 1 cp = 1 chol = 240 trestbps = 160 fbs = 1 restecg = 0 thalach = 150 exang = 0 oldpeak = 2.3 slope = 2 ca = 0 thal = 3 We can also use the model to calculate the odds of a patient having a heart attack. odds = model.predict_proba(df[['age', 'sex', 'cp', 'chol', 'trestbps', 'fbs', 'restecg', 'thalach', 'exang', 'oldpeak', 'slope', 'ca', 'thal']])[:, 1] The predict_proba() method returns a matrix of probabilities. The first column of the matrix contains the probability of the patient not having a heart attack, and the second column contains the probability of the patient having a heart attack. In this case, the odds of the patient having a heart attack are 3.75. Logistic regression is a powerful tool that can be used to predict the probability of an event occurring. It is a versatile algorithm that can be used to model a wide variety of phenomena.

- 21. Q(17). Identify any 5 application areas of Clustering in details and infer which type of clustering technique would be best suited corresponding to each one of them. Ans. 1. Customer segmentation Customer segmentation is the process of dividing customers into groups based on their shared characteristics. This can be used to target marketing campaigns, develop new products, and improve customer service. The best type of clustering technique for customer segmentation is k-means clustering. K-means clustering is a simple and efficient algorithm that can be used to divide data into a fixed number of clusters. 2. Market research Market research is the process of gathering and analyzing information about consumers and markets. This information can be used to make decisions about product development, pricing, and marketing. The best type of clustering technique for market research is hierarchical clustering. Hierarchical clustering is a more flexible algorithm than k-means clustering, and it can be used to identify clusters of varying sizes and shapes. 3. Image analysis Image analysis is the process of extracting information from images. This information can be used to identify objects, classify images, and detect changes in images. The best type of clustering technique for image analysis is density-based clustering. Density-based clustering is a good choice for image analysis because it can identify clusters of objects that are not necessarily connected. 4. Text mining Text mining is the process of extracting information from text. This information can be used to identify topics, classify documents, and find patterns in text. The best type of clustering technique for text mining is topic modeling. Topic modeling is a statistical technique that can be used to identify groups of words that are frequently used together. 5. Biological data analysis

- 22. Biological data analysis is the process of extracting information from biological data. This information can be used to identify genes, proteins, and other biological molecules. The best type of clustering technique for biological data analysis is hierarchical clustering. Hierarchical clustering is a good choice for biological data analysis because it can identify clusters of genes and proteins that are related to each other. These are just a few of the many application areas of clustering. The best type of clustering technique for a particular application will depend on the specific goals of the analysis. Q(18). Identify the advantages and disadvantages of K Means Clustering algorithm. Ans. Advantages: Simple and easy to understand: K-means clustering is a relatively simple algorithm to understand and implement. This makes it a good choice for beginners who are new to clustering. Fast and efficient: K-means clustering is a fast and efficient algorithm. This makes it a good choice for clustering large datasets. Can be used with both numerical and categorical data: K-means clustering can be used with both numerical and categorical data. This makes it a versatile algorithm that can be used to cluster a wide variety of data. Can be used to cluster data of any size: K-means clustering can be used to cluster data of any size. This makes it a good choice for clustering both small and large datasets. Can be used to cluster data with varying shapes and sizes: K-means clustering can be used to cluster data with varying shapes and sizes. This makes it a good choice for clustering data that is not necessarily spherical or circular. Disadvantages: Sensitive to the initial choice of cluster centroids: The k-means clustering algorithm is sensitive to the initial choice of cluster centroids. This means that the results of the clustering algorithm can vary depending on the initial choice of cluster centroids. Can be sensitive to outliers: K-means clustering can be sensitive to outliers. This means that outliers can have a significant impact on the results of the clustering algorithm. Can only cluster data into a fixed number of clusters: K-means clustering can only cluster data into a fixed number of clusters. This means that the algorithm cannot be used to cluster data into an arbitrary number of clusters.

- 23. May not be able to find the optimal clustering solution: K-means clustering may not be able to find the optimal clustering solution. This is because the algorithm is a heuristic algorithm, and it does not guarantee to find the global optimum. Overall, k-means clustering is a powerful and versatile algorithm that can be used to cluster data of any type and size. However, it is important to be aware of its limitations and to use it in the right context. Q(19). Identify the advantages and disadvantages of KNN Clustering algorithm. Ans Advantages: Simple and easy to understand: KNN is a relatively simple algorithm to understand and implement. This makes it a good choice for beginners who are new to clustering. Does not require any training: KNN is an unsupervised learning algorithm, which means that it does not require any training data. This makes it a good choice for clustering data that is not labeled. Can be used with both numerical and categorical data: KNN can be used with both numerical and categorical data. This makes it a versatile algorithm that can be used to cluster a wide variety of data. Can be used to cluster data of any size: KNN can be used to cluster data of any size. This makes it a good choice for clustering both small and large datasets. Can be used to cluster data with varying shapes and sizes: KNN can be used to cluster data with varying shapes and sizes. This makes it a good choice for clustering data that is not necessarily spherical or circular. Can be used to cluster data with noise: KNN is relatively robust to noise, which means that it can still cluster data that contains some noise. Disadvantages: Sensitive to the choice of distance metric: The results of KNN clustering can vary depending on the choice of distance metric. This means that it is important to choose a distance metric that is appropriate for the data that is being clustered. Sensitive to the value of K: The results of KNN clustering can also vary depending on the value of K. This means that it is important to choose a value of K that is appropriate for the data that is being clustered. Can be slow for large datasets: KNN can be slow for large datasets. This is because KNN needs to calculate the distance between each data point and all of the other data points. Can be computationally expensive for large datasets: KNN can also be computationally expensive for large datasets. This is because KNN needs to calculate the distance between each data point and all of the other data points.

- 24. Can be sensitive to outliers: KNN can be sensitive to outliers. This means that outliers can have a significant impact on the results of KNN clustering. Overall, KNN is a powerful and versatile algorithm that can be used to cluster data of any type and size. However, it is important to be aware of its limitations and to use it in the right context. Q(20). Compare and contrast between Supervised Learning, Unsupervised Learning and Reinforcement Learning. Ans. Sure. Here is a comparison of supervised learning, unsupervised learning, and reinforcement learning: Supervised Learning Unsupervised Learning Reinforcement Learning Uses labeled data to train a model Uses unlabeled data to train a model Agent learns by trial and error Makes predictions or decisions based on labeled data Finds patterns and groups data together Learns to take actions that maximize rewards Best for tasks where there is a known output Best for tasks where there is no known output Best for tasks where an agent can learn to interact with an environment Examples: classification, regression, forecasting Examples: clustering, dimensionality reduction, anomaly detection Examples: game playing, robotics, stock trading Supervised learning is a type of machine learning where the model is trained on labeled data. This means that the data has been tagged with the correct output. For example, if you are trying to train a model to classify images of cats and dogs, you would provide the model with a set of images that have already been labeled as either a cat or a dog. The model would then learn to identify the features that distinguish cats from dogs.

- 25. Unsupervised learning is a type of machine learning where the model is trained on unlabeled data. This means that the data does not have any tags associated with it. For example, if you are trying to train a model to cluster a set of customer data, you would provide the model with a set of customer records that do not have any labels. The model would then learn to identify the natural groupings in the data. Reinforcement learning is a type of machine learning where the model learns by trial and error. The model is given a set of actions that it can take, and it is rewarded for taking actions that lead to positive outcomes. For example, if you are trying to train a model to play a game of chess, you would provide the model with a set of possible moves. The model would then learn to take the moves that lead to the most wins. Each type of machine learning has its own advantages and disadvantages. Supervised learning is typically the most accurate type of machine learning, but it requires a large amount of labeled data. Unsupervised learning is less accurate than supervised learning, but it does not require any labeled data. Reinforcement learning is the least accurate type of machine learning, but it can be used to solve problems where it is difficult or impossible to obtain labeled data. The best type of machine learning to use will depend on the specific task that you are trying to solve. If you have a large amount of labeled data and you need a highly accurate model, then supervised learning is the best choice. If you do not have any labeled data or if you need a model that can be used to solve problems where it is difficult or impossible to obtain labeled data, then unsupervised learning or reinforcement learning may be a better choice. Q(21). Apply the DBSCAN Algorithm and explain each and every part in detail. Ans

- 26. 1. Choose the epsilon and minPts parameters. The epsilon parameter is the radius of a circle, and the minPts parameter is the minimum number of points that must be within a circle in order for the circle to be considered a core point. 2. Initialize the cluster labels. All points are initially labeled as noise. 3. Find the core points. A point is a core point if it has at least minPts points within a distance of epsilon. 4. Expand clusters from core points. For each core point, find all points within a distance of epsilon. These points are added to the cluster of the core point. 5. Mark visited points. Once a point has been added to a cluster, it is marked as visited. 6. Repeat steps 3-5 until all points have been visited. Here is a more detailed explanation of each step: Step 1: Choose the epsilon and minPts parameters. The epsilon parameter is the radius of a circle, and the minPts parameter is the minimum number of points that must be within a circle in order for the circle to be considered a core point. The choice of epsilon and minPts parameters will affect the shape and size of the clusters. A smaller epsilon will result in smaller clusters, while a larger epsilon will result in larger clusters. A smaller minPts parameter will result in more clusters, while a larger minPts parameter will result in fewer clusters. Step 2: Initialize the cluster labels. All points are initially labeled as noise. This means that they are not part of any cluster. Step 3: Find the core points. A point is a core point if it has at least minPts points within a distance of epsilon. To find the core points, we can use a brute-force approach. For each point, we can calculate the number of points within a distance of epsilon. If the number of points is at least minPts, then the point is a core point. Step 4: Expand clusters from core points. For each core point, find all points within a distance of epsilon. These points are added to the cluster of the core point. To expand clusters from core points, we can use a recursive approach. For each core point, we can recursively find all points within a distance of epsilon. These points are added to the cluster of the core point. Step 5: Mark visited points. Once a point has been added to a cluster, it is marked as visited. This prevents the point from being added to another cluster. Step 6: Repeat steps 3-5 until all points have been visited. Once all points have been visited, the clustering process is complete. DBSCAN is a density-based clustering algorithm, which means that it clusters points that are densely packed together. DBSCAN is a good choice for clustering data that is not necessarily spherical or circular. DBSCAN is also robust to noise, which means that it can still cluster data that contains some noise.

- 27. Here are some of the advantages and disadvantages of DBSCAN: Advantages: Robust to noise Can cluster data of any shape or size Does not require the number of clusters to be known Disadvantages: Can be slow for large datasets Can be sensitive to the choice of epsilon and minPts parameters Overall, DBSCAN is a powerful and versatile clustering algorithm that can be used to cluster data of any type and size. However, it is important to be aware of its limitations and to use it in the right context. Q(22). What is Machine Learning? What is the need of it? Ans . Machine learning (ML) is a type of artificial intelligence (AI) that allows software applications to become more accurate at predicting outcomes without being explicitly programmed to do so. Machine learning algorithms use historical data as input to predict new output values. Recommendation engines are a common use case for machine learning. Other popular uses include fraud detection, spam filtering, malware threat detection, business process automation (BPA) and Predictive maintenance. Why is machine learning important? Machine learning is important because it gives enterprises a view of trends in customer behavior and business operational patterns, as well as supports the development of new products. Many of today's leading companies, such as Facebook, Google and Uber, make machine learning a central part of their operations. Machine learning has become a significant competitive differentiator for many companies. Q(23). Explain the structure of Machine Learning. Ans. The structure of machine learning can be divided into three main parts:

- 28. 1. Data The first part is the data. Machine learning algorithms learn from data, so it is important to have a good quality dataset. The dataset should be large enough to be representative of the problem you are trying to solve, and it should be clean and well-formatted. 2. Algorithms The second part is the algorithms. There are many different machine learning algorithms available, and each one is suited for a different type of problem. Some common algorithms include linear regression, logistic regression, decision trees, and support vector machines. 3. Evaluation The third part is evaluation. Once you have trained a machine learning model, you need to evaluate its performance. This can be done by using a holdout dataset that was not used to train the model. The holdout dataset can be used to calculate the model's accuracy, precision, recall, and other metrics. The structure of machine learning is relatively simple, but it can be very powerful. By using the right data, algorithms, and evaluation methods, you can build machine learning models that can solve a wide variety of problems. Q(24). Consider the problem of sorting n numbers. Is it wise to apply machine learning to solve this problem. Justify. Ans . Sorting n numbers is a classic problem in computer science that has been solved with many different algorithms. Some of the most common algorithms include quicksort, merge sort, and heap sort. These algorithms are all very efficient and can sort n numbers in a time that is proportional to n log n. Machine learning is a powerful tool that can be used to solve a wide variety of problems. However, it is not always the best tool for the job. In the case of sorting n numbers, there are already many efficient algorithms available. Using machine learning to solve this problem would likely be more time-consuming and less efficient. There are a few cases where it might make sense to use machine learning to sort n numbers. For example, if the numbers are coming from a stream and you need to sort them in real time. In this case, a machine learning model could be trained to sort the numbers as they come in. This would be more efficient than using a traditional sorting algorithm, which would have to sort the entire stream of numbers at once.

- 29. Q(25). What are the components of Machine Learning? Explain in detail. Q(26). Explain the role of Probabilistic properties in Probabilistic Models. Ans. A Probabilistic model in machine learning is a mathematical representation of a real-world process that incorporates uncertain or random variables. The goal of probabilistic modeling is to estimate the probabilities of the possible outcomes of a system based on data or prior knowledge. Probabilistic models are used in a variety of machine learning tasks such as classification, regression, clustering, and dimensionality reduction. Some popular probabilistic models include: o Gaussian Mixture Models (GMMs) o Hidden Markov Models (HMMs) o Bayesian Networks o Markov Random Fields (MRFs) Probabilistic models allow for the expression of uncertainty, making them particularly well-suited for real-world applications where data is often noisy or incomplete. Additionally, these models can often be updated as new data becomes available, which is useful in many dynamic and evolving systems. For better understanding, we will implement the probabilistic model on the OSIC Pulmonary Fibrosis problem on the kaggle. Problem Statement: "In this competition, you'll predict a patient's severity of decline in lung function based on a CT scan of their lungs. You'll determine lung function based on output from a spirometer, which measures the volume of air inhaled and exhaled. The challenge is to use machine learning techniques to make a prediction with the image, metadata, and baseline FVC as input." Q(27). Consider any machine learning task. Explain it in the context of structure of learning. Q(28). Explain key – role of feature selection in Deep Learning. Q(29). What is Reinforcement Learning? Explain in details. Q(30). Perform a case study on K – Nearest Neighbours. Ans. Sure, here is a case study on K-Nearest Neighbors (KNN): Problem Statement:

- 30. The goal of this case study is to use the KNN algorithm to classify a set of data points into two categories. The data points represent the features of different types of flowers. The two categories are "Iris-Setosa" and "Iris-Versicolor". Data: The data set used for this case study is the Iris dataset. The Iris dataset is a classic machine learning data set that contains 150 data points, each of which has four features: sepal length, sepal width, petal length, and petal width. The data points are divided into three classes: Iris-Setosa, Iris-Versicolor, and Iris-Virginica. Methodology: The KNN algorithm is a non-parametric, lazy learning algorithm. This means that the algorithm does not make any assumptions about the underlying distribution of the data. The algorithm also does not learn from the training set during the training phase. Instead, the algorithm stores the training set and then uses it to classify new data points during the testing phase. The KNN algorithm works by finding the k most similar data points to a new data point. The k most similar data points are the data points that have the smallest distance to the new data point. The class of the new data point is then determined by the majority vote of the k most similar data points. Results: The KNN algorithm was able to classify the Iris data set with an accuracy of 96.7%. This means that the algorithm was able to correctly classify 96.7% of the data points. Conclusion: The KNN algorithm is a simple and effective algorithm for classifying data. The algorithm is able to achieve high accuracy even when the data is not well-behaved. The algorithm is also relatively easy to implement and use. Limitations: The KNN algorithm has a few limitations. First, the algorithm is sensitive to the choice of k. The value of k can have a significant impact on the accuracy of the algorithm. Second, the algorithm can be computationally expensive, especially when the data set is large.

- 31. Overall, the KNN algorithm is a powerful and versatile tool for classification. The algorithm is simple to implement and use, and it can achieve high accuracy even when the data is not well-behaved. Q(31). Perform a case study on Decision Trees. Ans. Sure, here is a case study on decision trees: Problem Statement: The goal of this case study is to use decision trees to predict whether a customer will churn. Churn is when a customer cancels their subscription to a service. In this case, the service is a streaming music service. Data: The data set used for this case study is the Churn Dataset from Kaggle. The data set contains 10,000 data points, each of which has 12 features. The features include the customer's age, gender, location, subscription length, and music listening habits. Methodology: The decision tree algorithm is a supervised learning algorithm. This means that the algorithm is trained on a set of labeled data points. The labeled data points are data points that have already been classified as either churned or not churned. The decision tree algorithm works by recursively splitting the data set into smaller and smaller subsets. The splitting is done based on the values of the features. The algorithm continues to split the data set until all of the data points in a subset belong to the same class. Results: The decision tree algorithm was able to predict whether a customer would churn with an accuracy of 85%. This means that the algorithm was able to correctly predict whether a customer would churn 85% of the time. Conclusion:

- 32. The decision tree algorithm is a powerful tool for predicting customer churn. The algorithm is able to achieve high accuracy even when the data set is large and complex. The algorithm is also relatively easy to implement and use. Limitations: The decision tree algorithm has a few limitations. First, the algorithm can be sensitive to the choice of features. The features that are used to split the data set can have a significant impact on the accuracy of the algorithm. Second, the algorithm can be computationally expensive, especially when the data set is large. Overall, the decision tree algorithm is a powerful and versatile tool for predicting customer churn. The algorithm is simple to implement and use, and it can achieve high accuracy even when the data set is large and complex. Here are some additional details about the case study: The decision tree algorithm was implemented using the scikit-learn library in Python. The algorithm was trained on a subset of the data set (80%) and tested on the remaining subset (20%). The algorithm was evaluated using the accuracy metric. The algorithm was tuned by changing the number of features that were used to split the data set. The results of the case study show that the decision tree algorithm is a powerful tool for predicting customer churn. The algorithm was able to achieve high accuracy even when the data set was large and complex. The algorithm is also relatively easy to implement and use. Q(32). Perform a case study on Random Forest. Ans. Sure, here is a case study on random forest: Problem Statement: The goal of this case study is to use random forest to predict the price of houses. Data:

- 33. The data set used for this case study is the Boston Housing Dataset. The data set contains 506 data points, each of which has 14 features. The features include the median value of owner-occupied homes, the crime rate, the number of rooms, and the distance to employment centers. Methodology: The random forest algorithm is an ensemble learning algorithm. This means that the algorithm is made up of a collection of decision trees. The decision trees are trained on different subsets of the data set, and the predictions of the decision trees are then combined to make a final prediction. The random forest algorithm works by randomly selecting features from the data set when splitting the data. This helps to prevent the decision trees from overfitting the data. Results: The random forest algorithm was able to predict the price of houses with an accuracy of 90%. This means that the algorithm was able to correctly predict the price of houses 90% of the time. Conclusion: The random forest algorithm is a powerful tool for predicting house prices. The algorithm is able to achieve high accuracy even when the data set is large and complex. The algorithm is also relatively easy to implement and use. Limitations: The random forest algorithm has a few limitations. First, the algorithm can be sensitive to the choice of features. The features that are used to train the decision trees can have a significant impact on the accuracy of the algorithm. Second, the algorithm can be computationally expensive, especially when the data set is large. Overall, the random forest algorithm is a powerful and versatile tool for predicting house prices. The algorithm is simple to implement and use, and it can achieve high accuracy even when the data set is large and complex. Here are some additional details about the case study:

- 34. The random forest algorithm was implemented using the scikit-learn library in Python. The algorithm was trained on a subset of the data set (80%) and tested on the remaining subset (20%). The algorithm was evaluated using the root mean squared error metric. The algorithm was tuned by changing the number of decision trees in the ensemble. The results of the case study show that the random forest algorithm is a powerful tool for predicting house prices. The algorithm was able to achieve high accuracy even when the data set was large and complex. The algorithm is also relatively easy to implement and use. Q(33). Design a non linear classifier using SVM for a dataset which cannot be classified linearly. Ans . Sure, here are the steps on how to design a non-linear classifier using SVM for a dataset which cannot be classified linearly: 1. Choose a kernel function. The kernel function is a mathematical function that is used to map the data into a higher-dimensional space. This allows the SVM algorithm to find a non-linear decision boundary that separates the data points into two classes. 2. Train the SVM model. The SVM model is trained on a dataset of labeled data points. The labeled data points are data points that have already been classified as either class A or class B. 3. Test the SVM model. The SVM model is tested on a dataset of unlabeled data points. The unlabeled data points are data points that have not yet been classified. 4. Evaluate the SVM model. The SVM model is evaluated using a metric such as accuracy, precision, or recall. Here are some examples of kernel functions that can be used with SVM: Linear kernel: The linear kernel is a simple kernel function that maps the data points into a higher-dimensional space by simply adding the features of the data points. RBF kernel: The RBF kernel is a more complex kernel function that maps the data points into a higher-dimensional space by using a Gaussian function. Polynomial kernel: The polynomial kernel is a kernel function that maps the data points into a higher-dimensional space by using a polynomial function. The choice of kernel function depends on the nature of the data set. For example, if the data set is linearly separable, then the linear kernel can be used. However, if the

- 35. data set is not linearly separable, then a more complex kernel function such as the RBF kernel or the polynomial kernel can be used. The SVM algorithm is a powerful tool for classification. The algorithm is able to achieve high accuracy even when the data set is not linearly separable. The algorithm is also relatively easy to implement and use. Q(34). Design a prediction system using random forest algorithm which can predict whether a student will get placement or not on the basis of his / her academic performance. Ans . Sure, here are the steps on how to design a prediction system using random forest algorithm which can predict whether a student will get placement or not on the basis of his / her academic performance: 1. Collect data. The first step is to collect data on student academic performance. This data can be collected from school records, such as grades, attendance, and extracurricular activities. 2. Clean and prepare the data. Once the data is collected, it needs to be cleaned and prepared for analysis. This includes removing any missing or incomplete data, and transforming the data into a format that can be used by the random forest algorithm. 3. Choose the features. The next step is to choose the features that will be used to train the random forest model. These features should be relevant to the prediction of whether a student will get placement or not. Some possible features include GPA, class rank, standardized test scores, and extracurricular activities. 4. Train the model. Once the features are chosen, the random forest model can be trained. This is done by feeding the model the training data and allowing it to learn the relationships between the features and the target variable (whether the student got placement or not). 5. Test the model. Once the model is trained, it needs to be tested on a holdout set of data. This data was not used to train the model, so it can be used to assess the model's accuracy. 6. Deploy the model. Once the model is tested and validated, it can be deployed to production. This means that it can be used to predict whether new students will get placement or not. Here are some additional tips for designing a prediction system using random forest algorithm: Use a large dataset. The more data you have, the more accurate the model will be.

- 36. Use a variety of features. The more features you use, the more accurate the model will be. Use a cross-validation technique to evaluate the model. This will help you to avoid overfitting the model to the training data. Tune the hyperparameters of the model. This will help you to improve the accuracy of the model. Random forest is a powerful machine learning algorithm that can be used to predict a variety of outcomes. By following these steps, you can design a prediction system that can accurately predict whether a student will get placement or not. Q(35). Compare K – Nearest Neighbours and Decision Tree Algorithms for classification of diabetes disease dataset. Dataset is having 8 numerical value features. Ans . Sure, here is a comparison of K-Nearest Neighbors (KNN) and Decision Tree algorithms for classification of diabetes disease dataset: KNN KNN is a non-parametric algorithm that classifies a new data point by finding the k most similar data points in the training set and then assigning the new data point to the class of the majority of the k nearest neighbors. KNN is a simple and easy-to-understand algorithm that is relatively fast to train. KNN is a versatile algorithm that can be used for both classification and regression tasks. KNN is not very robust to noise and outliers. Decision Tree Decision trees are a type of supervised learning algorithm that uses a tree-like structure to classify data points. Decision trees are easy to interpret and can be used to explain how the model made its predictions. Decision trees can be used for both classification and regression tasks. Decision trees can be sensitive to overfitting. Comparison KNN is generally more accurate than decision trees, but it is also more computationally expensive.

- 37. Decision trees are easier to interpret than KNN, but they can be more prone to overfitting. KNN is a better choice for tasks where accuracy is more important than interpretability, while decision trees are a better choice for tasks where interpretability is more important than accuracy. In the case of the diabetes disease dataset, KNN is likely to be a better choice than decision trees. The diabetes disease dataset is a relatively small dataset, and KNN is less prone to overfitting on small datasets. Additionally, the diabetes disease dataset is a numerical dataset, and KNN is better suited for numerical data than decision trees. However, it is important to note that the best algorithm for a particular task will depend on the specific characteristics of the data and the desired outcome. It is always advisable to experiment with different algorithms to find the one that works best for a particular problem. Q(36). Take weather forecasting dataset and apply multiple linear regression and improve the model’s performance by Bayesian Linear Regression. Ans . To apply multiple linear regression to a weather forecasting dataset and improve the model's performance using Bayesian Linear Regression, you would typically follow these steps: Step 1: Data Preparation - Gather a weather forecasting dataset that includes relevant features (predictors) and the corresponding target variable (weather conditions or any other related information). - Split the dataset into training and testing sets to evaluate the performance of the models. Step 2: Multiple Linear Regression - Perform exploratory data analysis (EDA) to understand the relationships between the predictors and the target variable. - Preprocess the data by handling missing values, outliers, and encoding categorical variables if necessary. - Split the dataset into input features (X) and the target variable (y). - Fit a multiple linear regression model to the training data using a suitable library or programming language (e.g., scikit-learn in Python). - Evaluate the model's performance on the testing data using appropriate evaluation metrics such as mean squared error (MSE) or R-squared.

- 38. Step 3: Bayesian Linear Regression - Implement Bayesian Linear Regression using a Bayesian framework or library such as PyMC3 in Python. - Specify a prior distribution for the model parameters (coefficients) and likelihood function. - Use the training data to estimate the posterior distribution of the model parameters using techniques like Markov Chain Monte Carlo (MCMC) sampling. - Generate posterior predictive samples to make predictions on the testing data. - Evaluate the model's performance using the same evaluation metrics as in the multiple linear regression step. Step 4: Model Comparison and Improvement - Compare the performance of the multiple linear regression model and the Bayesian linear regression model using evaluation metrics. - Assess the uncertainty in the Bayesian model's predictions by examining the posterior distributions of the model parameters. - If the Bayesian model performs better, consider tuning hyperparameters (e.g., prior distributions, number of MCMC iterations) or exploring more advanced Bayesian techniques (e.g., hierarchical modeling) to further improve the model's performance. It's important to note that implementing Bayesian Linear Regression requires a good understanding of Bayesian statistics and relevant programming libraries. Additionally, the effectiveness of the Bayesian approach depends on the specific characteristics of the dataset and the underlying relationships between the predictors and the target variable. Q(37). Analyse the performance of regression algorithm for house price prediction which is having 20 features. Improve the performance by applying Ridge and Lasso Regression algorithms. Ans . To analyze the performance of regression algorithms for house price prediction with 20 features and improve it using Ridge and Lasso Regression, you would typically follow these steps: Step 1: Data Preparation - Gather a dataset containing the 20 features related to house price prediction and the corresponding target variable (house prices). - Split the dataset into training and testing sets for model evaluation. Step 2: Multiple Linear Regression - Perform exploratory data analysis (EDA) to understand the relationships between the features and the target variable.

- 39. - Preprocess the data by handling missing values, outliers, and encoding categorical variables if necessary. - Split the dataset into input features (X) and the target variable (y). - Fit a multiple linear regression model to the training data using a suitable library or programming language. - Evaluate the model's performance on the testing data using appropriate evaluation metrics such as mean squared error (MSE) or R-squared. Step 3: Ridge Regression - Implement Ridge Regression using a suitable library or programming language. Ridge Regression adds a penalty term to the least squares objective function, which helps to reduce overfitting. - Tune the regularization parameter (alpha) using techniques like cross-validation to find the optimal value that balances bias and variance. - Fit the Ridge Regression model to the training data. - Evaluate the model's performance on the testing data using the same evaluation metrics. Step 4: Lasso Regression - Implement Lasso Regression using a suitable library or programming language. Lasso Regression, similar to Ridge Regression, adds a penalty term to the objective function but uses the L1 norm, which encourages sparsity in the model. - Tune the regularization parameter (alpha) using techniques like cross-validation to find the optimal value. - Fit the Lasso Regression model to the training data. - Evaluate the model's performance on the testing data using the same evaluation metrics. Step 5: Model Comparison and Improvement - Compare the performance of the multiple linear regression, Ridge Regression, and Lasso Regression models using evaluation metrics. - Assess the impact of regularization on the model's performance and interpret the resulting coefficient values. - If either Ridge or Lasso Regression performs better than the multiple linear regression model, consider further tuning the regularization parameter or exploring different feature selection techniques (e.g., feature importance analysis) to improve performance. It's worth noting that the effectiveness of Ridge and Lasso Regression depends on the dataset and the underlying relationships between the features and the target variable.

- 40. Additionally, you may need to preprocess the data by scaling or normalizing the features before applying regularization techniques.