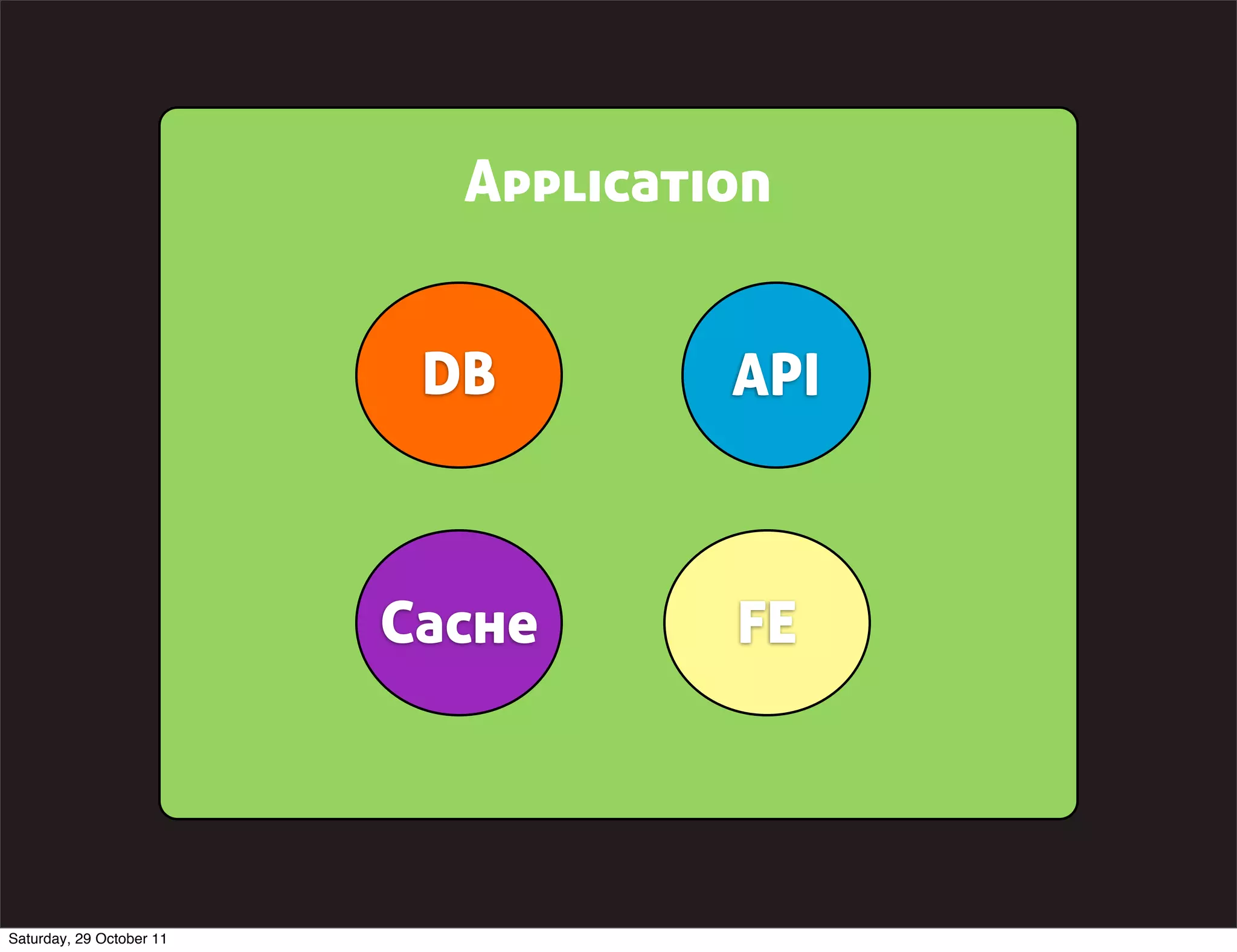

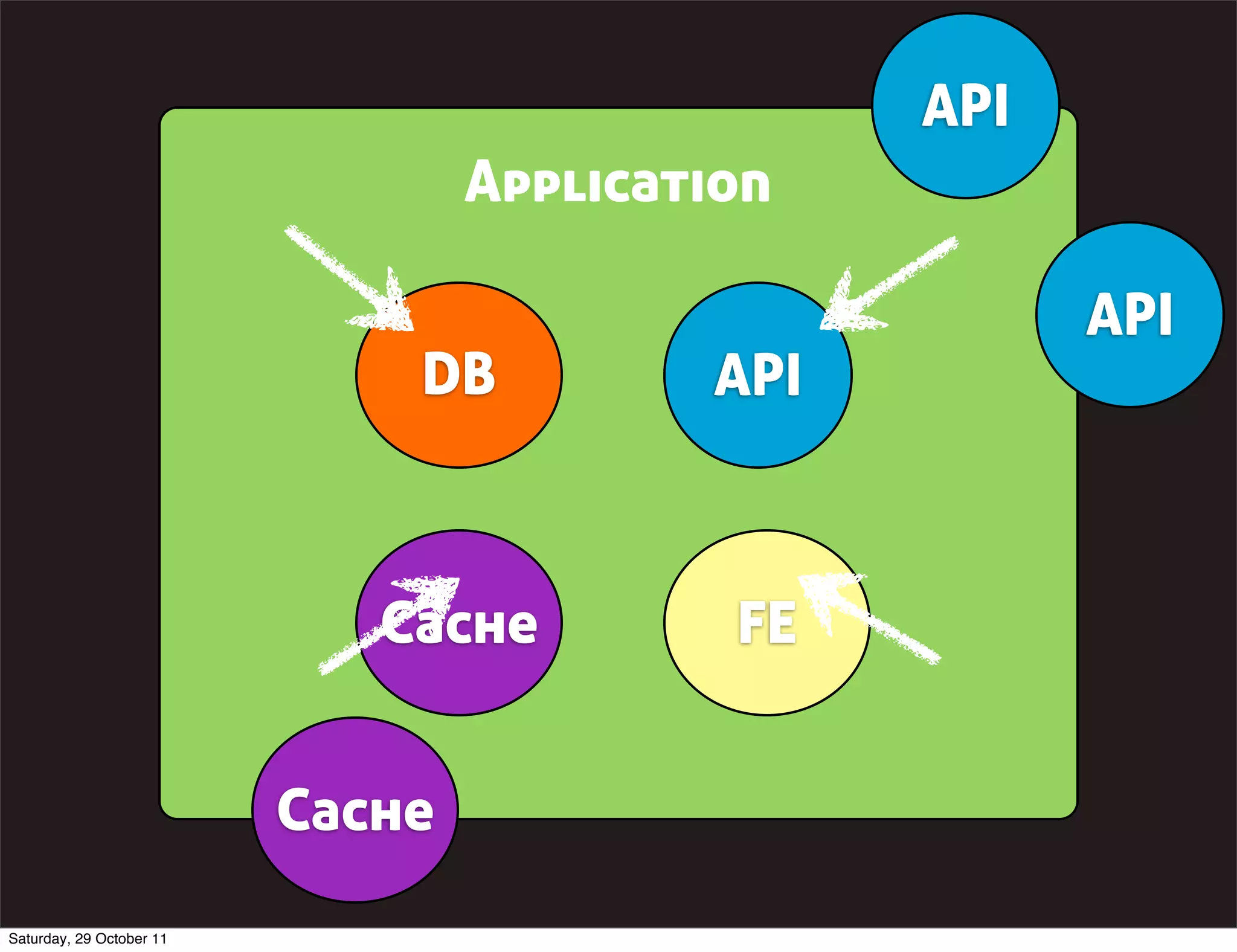

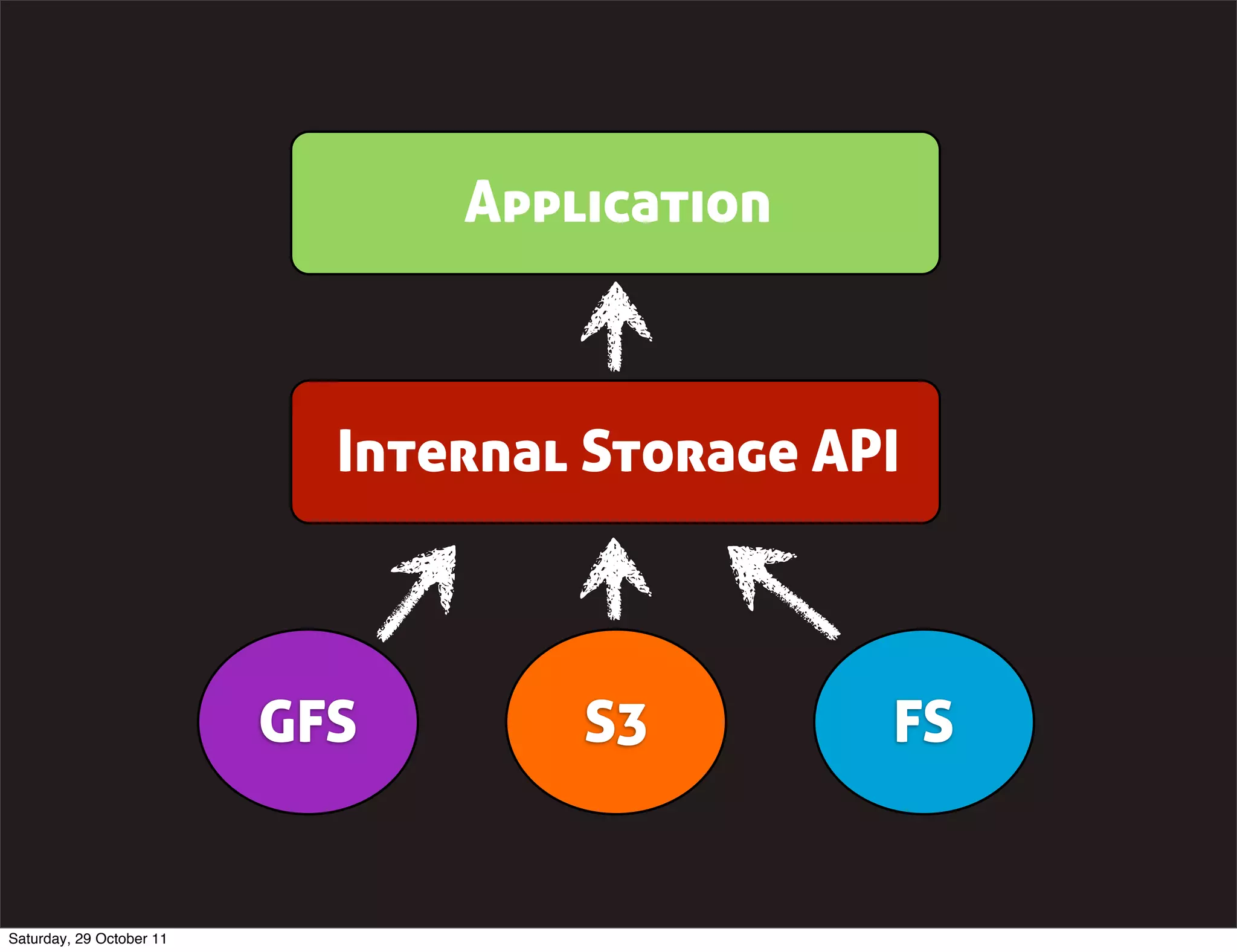

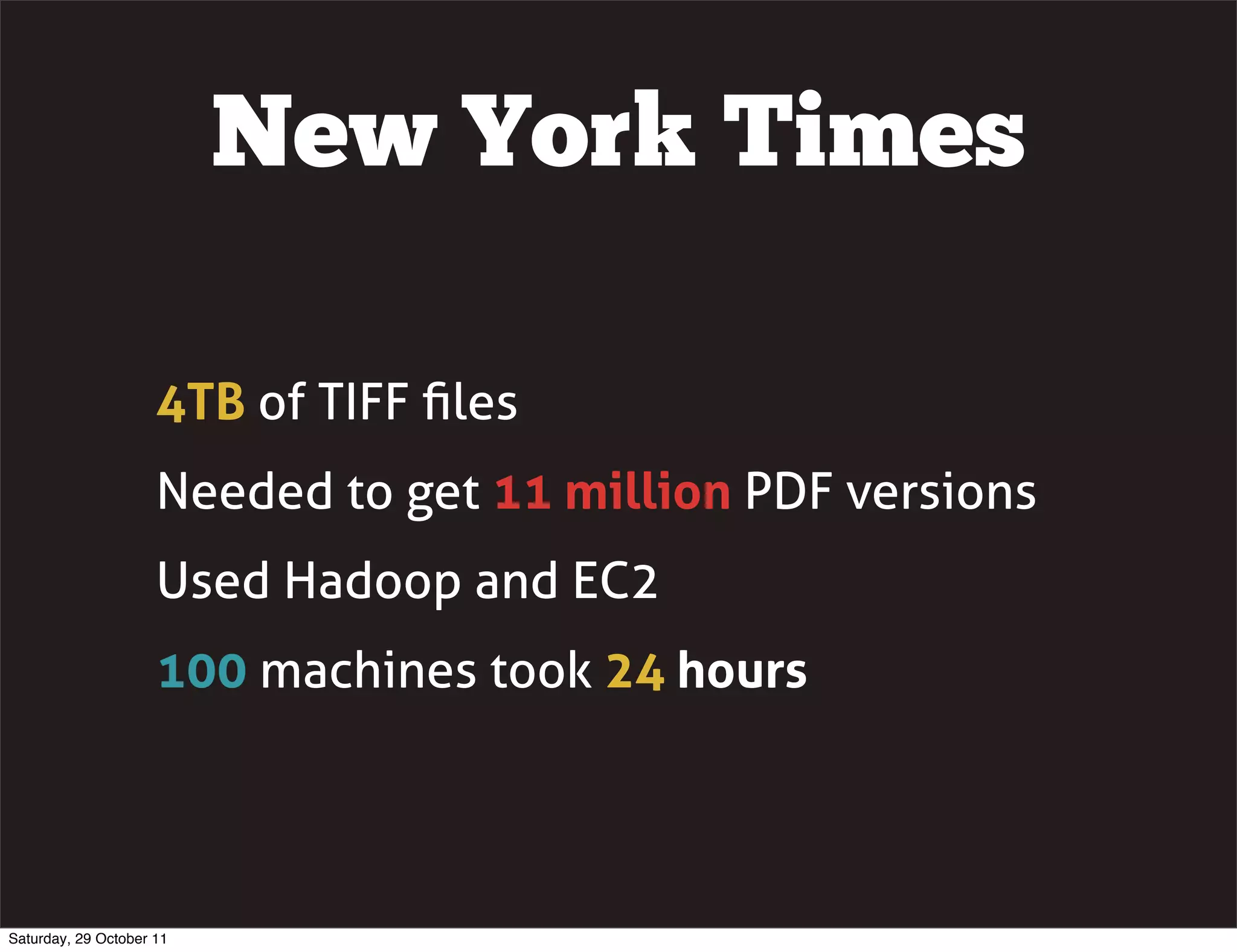

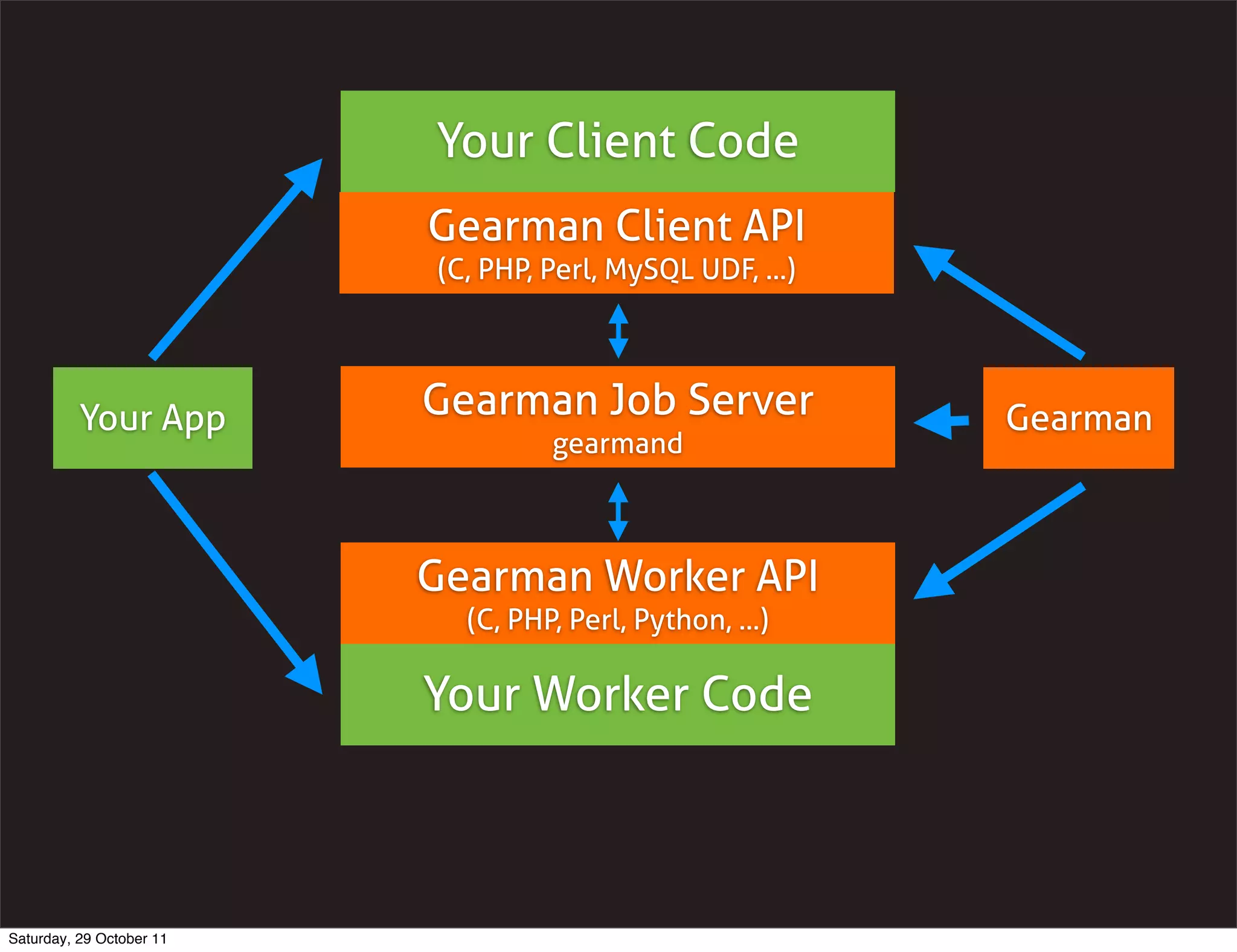

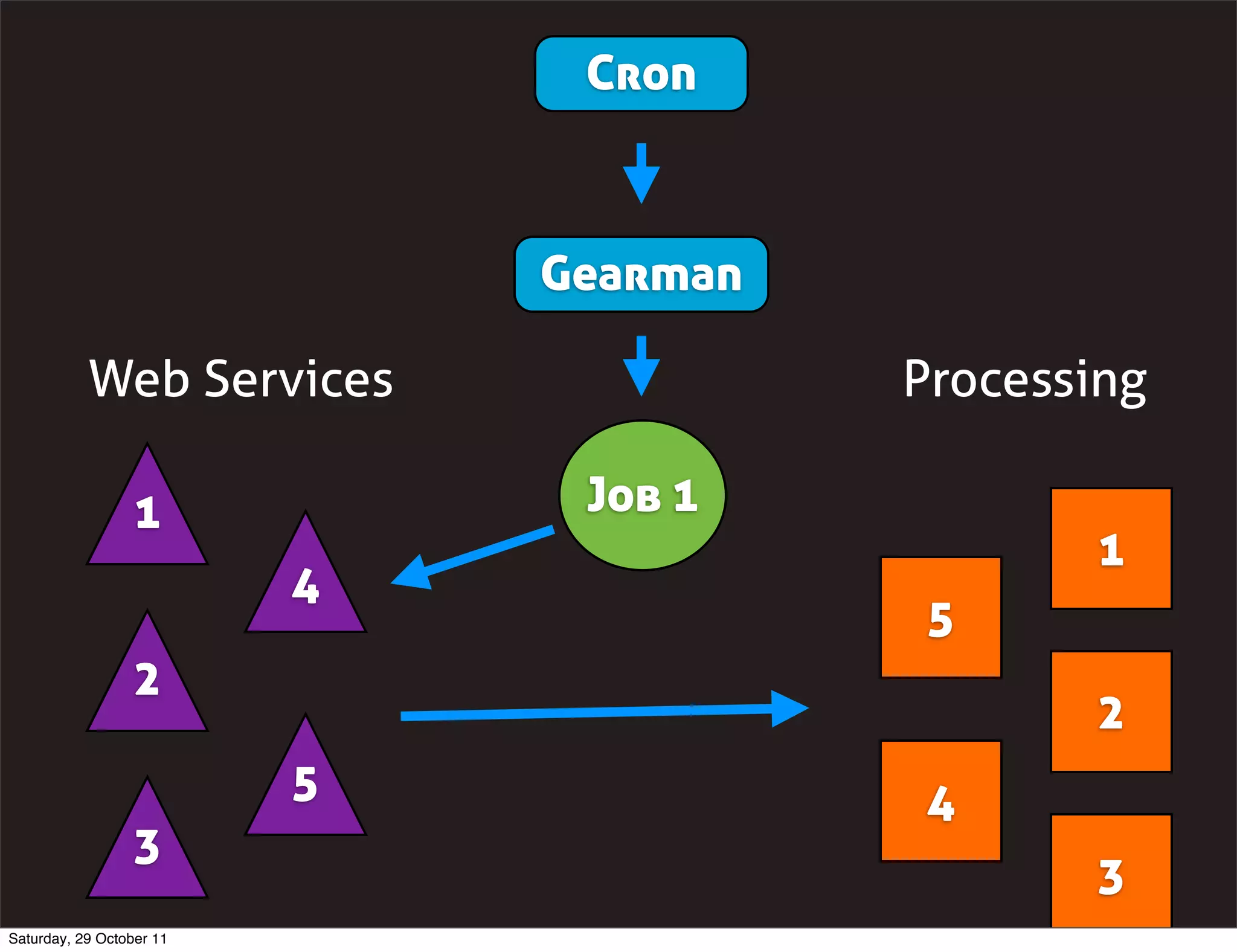

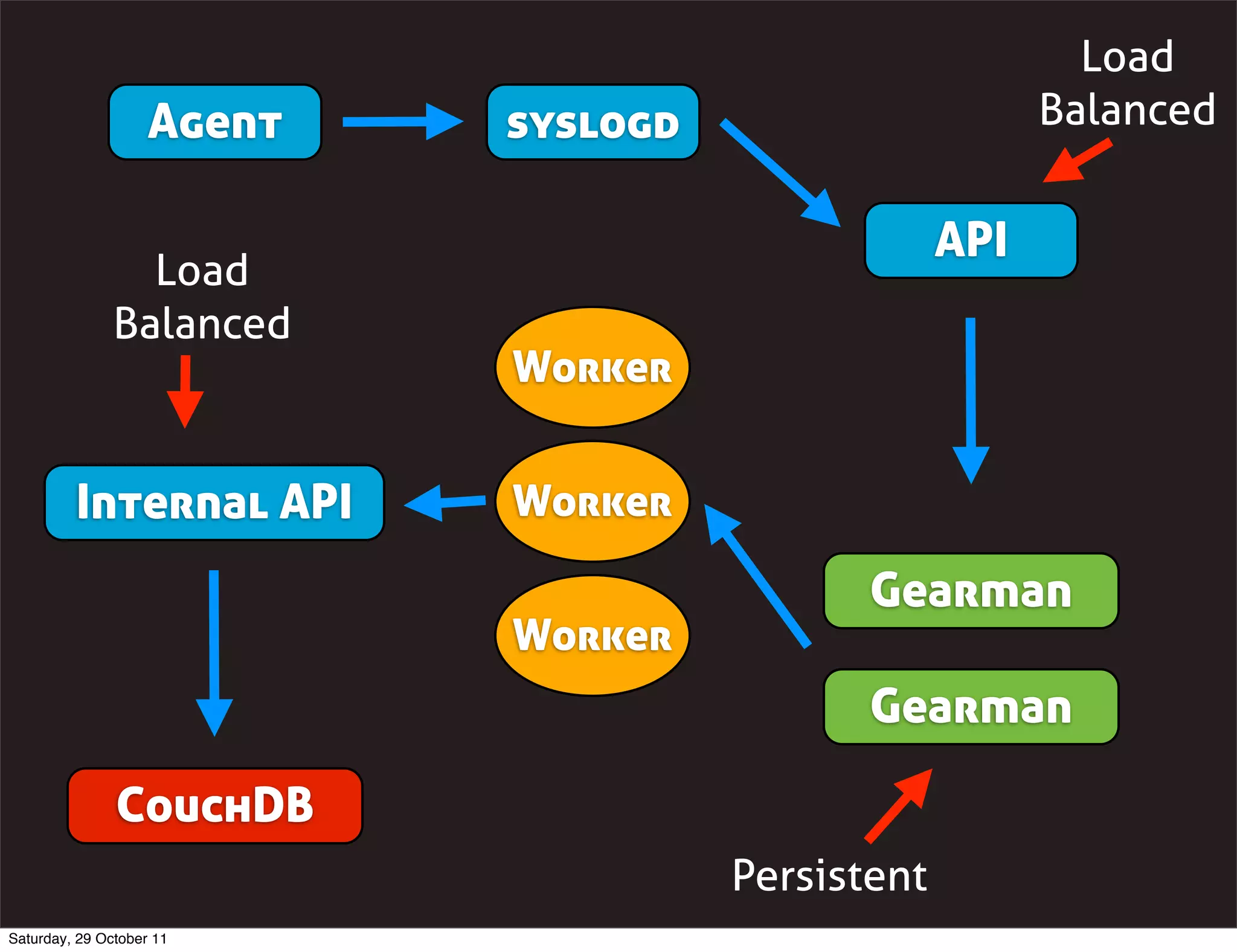

The document discusses why distributing workloads matters: it increases efficiency, helps manage budget, and improves the user experience. It covers characteristics of distributed systems such as decoupling, elasticity, and high availability, and reviews tools and strategies for implementing them, including load balancing, queue systems, and map/reduce. The author shares practical examples of distributed computing from real-world scenarios, specifically cloud analytics and financial software management.
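As a rough illustration of the map/reduce strategy mentioned above, the following is a minimal sketch rather than anything taken from the slides. It assumes a word-count task, and the names `map_chunk`, `merge_counts`, and `word_count` are hypothetical. A local thread pool stands in for what would be separate worker machines in a real distributed system.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def map_chunk(lines):
    """Map step: count the words in one independent chunk of lines."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def merge_counts(left, right):
    """Reduce step: merge two partial counts into one."""
    return left + right


def word_count(lines, workers=4, chunk_size=2):
    # Split the input into chunks that share no state, so any worker
    # can process any chunk (the decoupling property).
    chunks = [lines[i:i + chunk_size] for i in range(0, len(lines), chunk_size)]
    # Assumption: threads stand in for remote workers in this sketch.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(map_chunk, chunks))
    # Combine the partial results into the final answer.
    return reduce(merge_counts, partials, Counter())


if __name__ == "__main__":
    sample = ["a b a", "b c", "a"]
    print(word_count(sample))
```

Because each chunk is processed without reference to the others, the number of workers can be raised or lowered without changing the result, which is the kind of elasticity the summary refers to.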