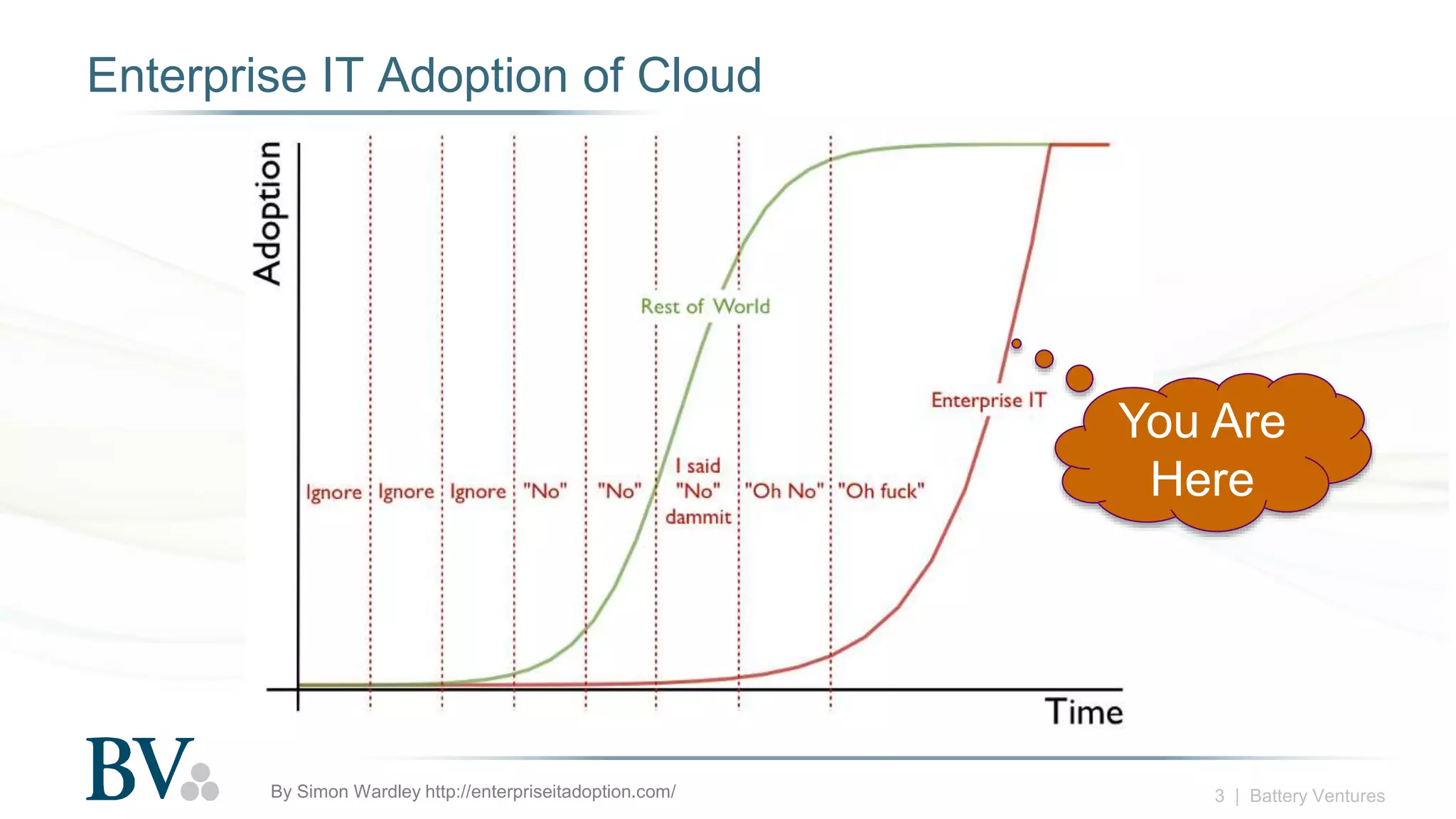

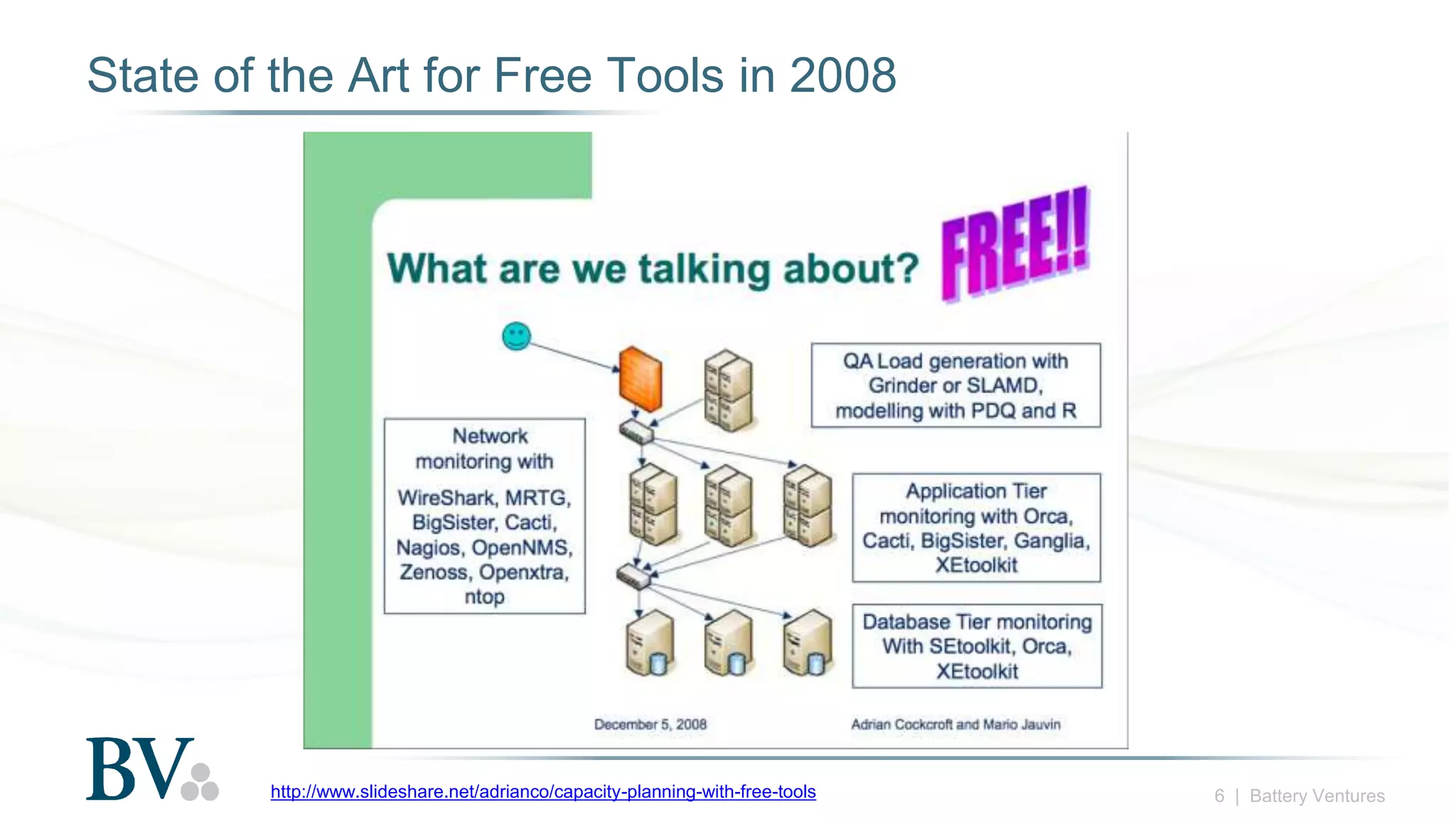

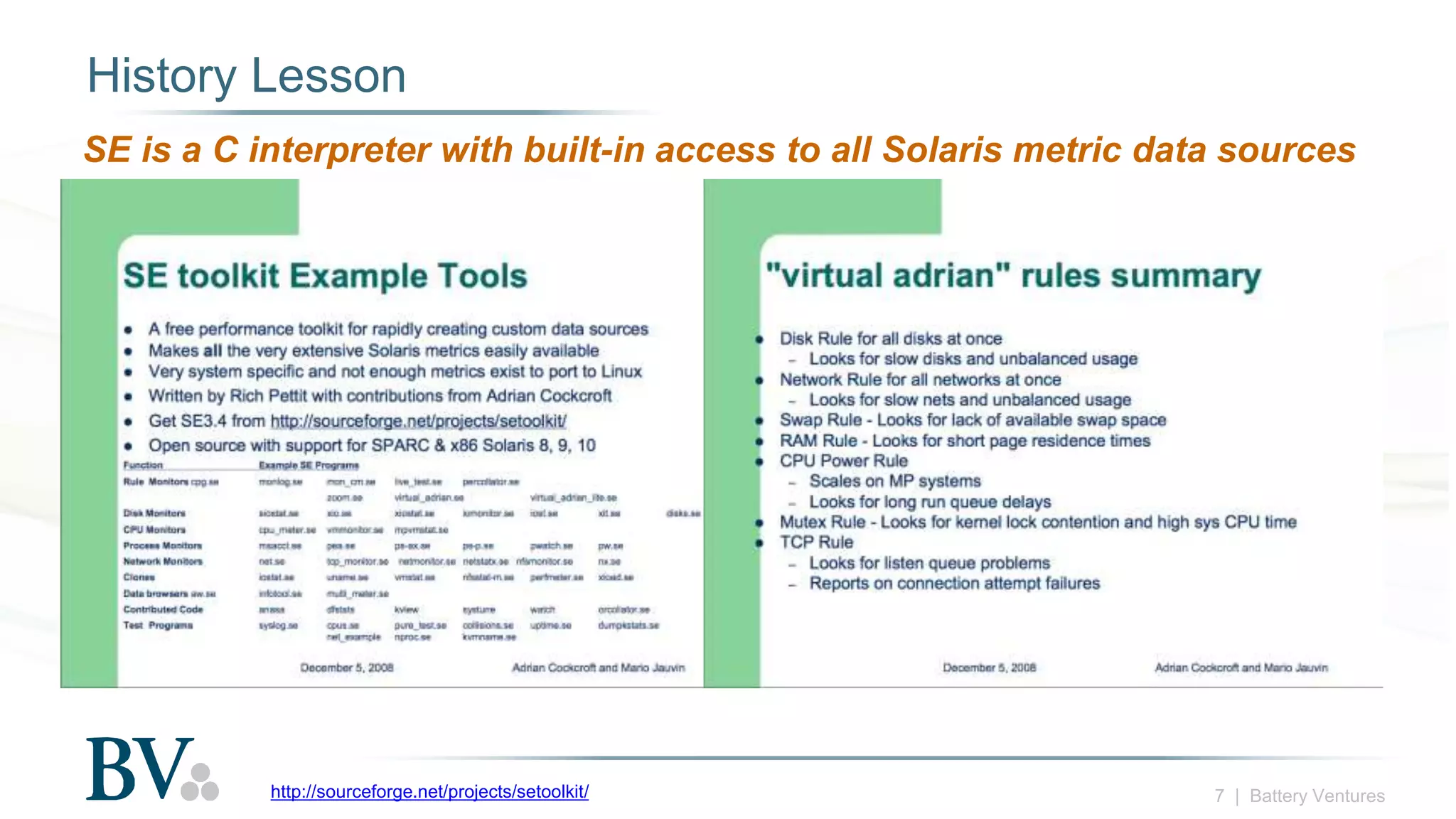

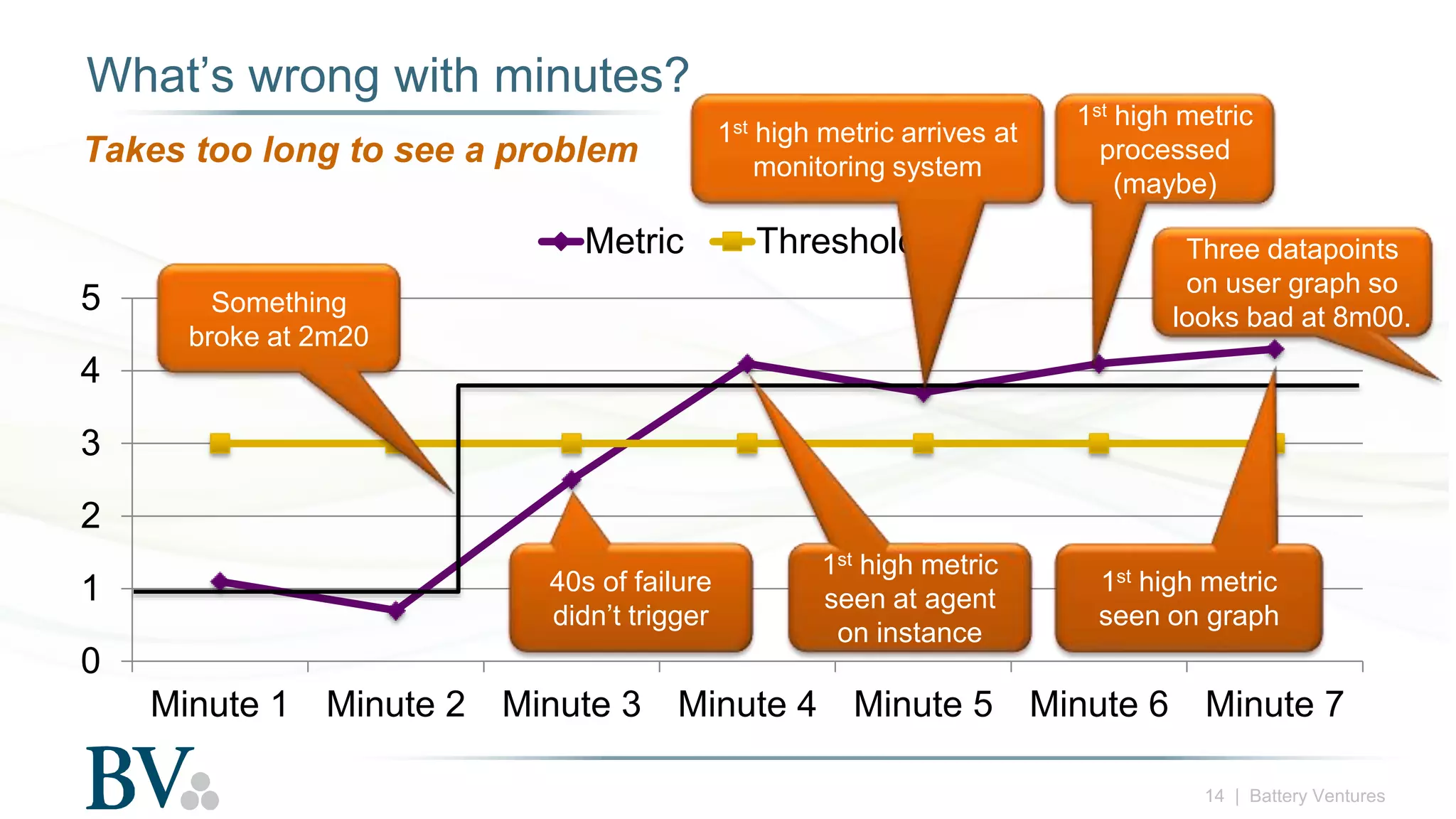

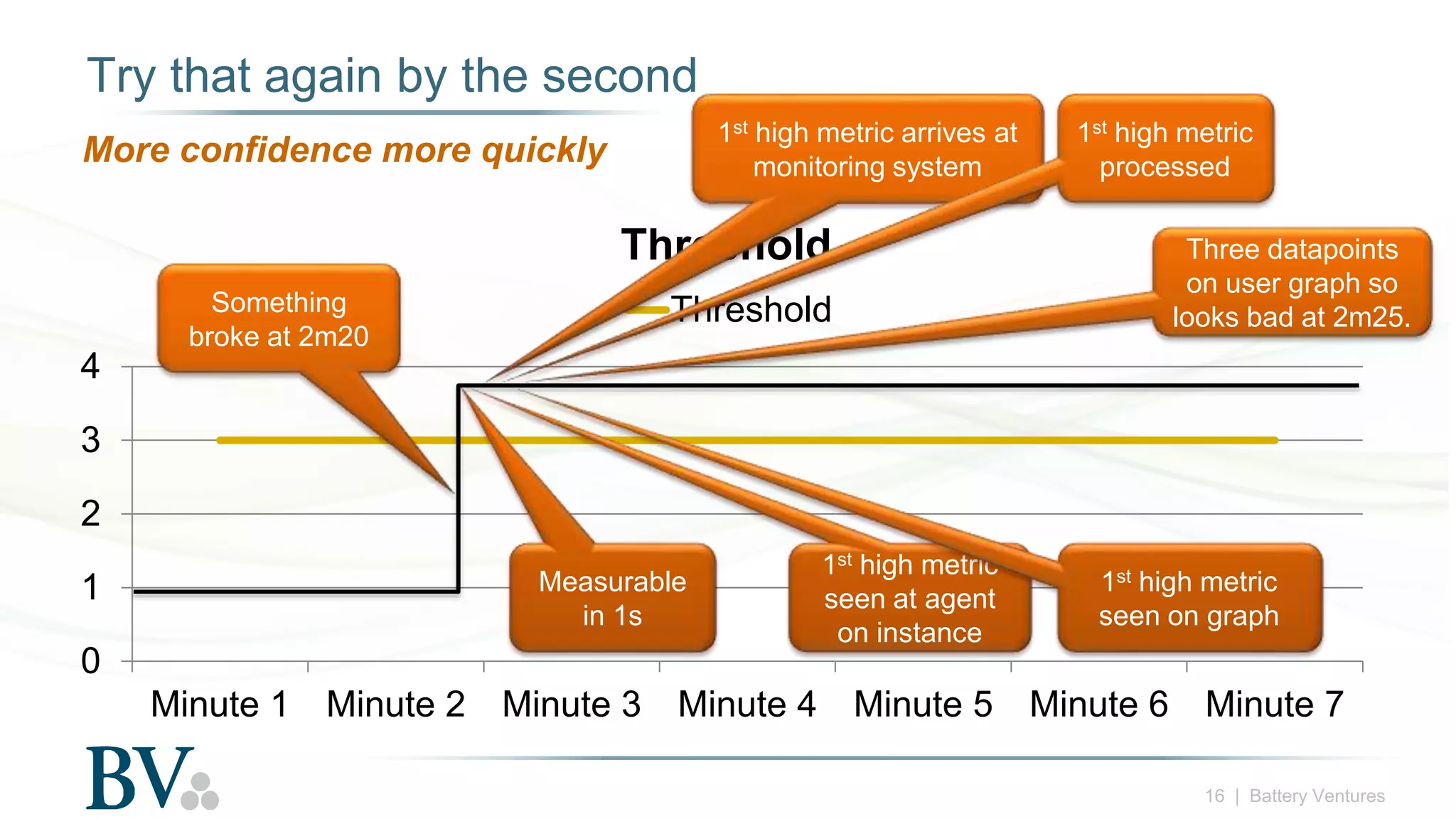

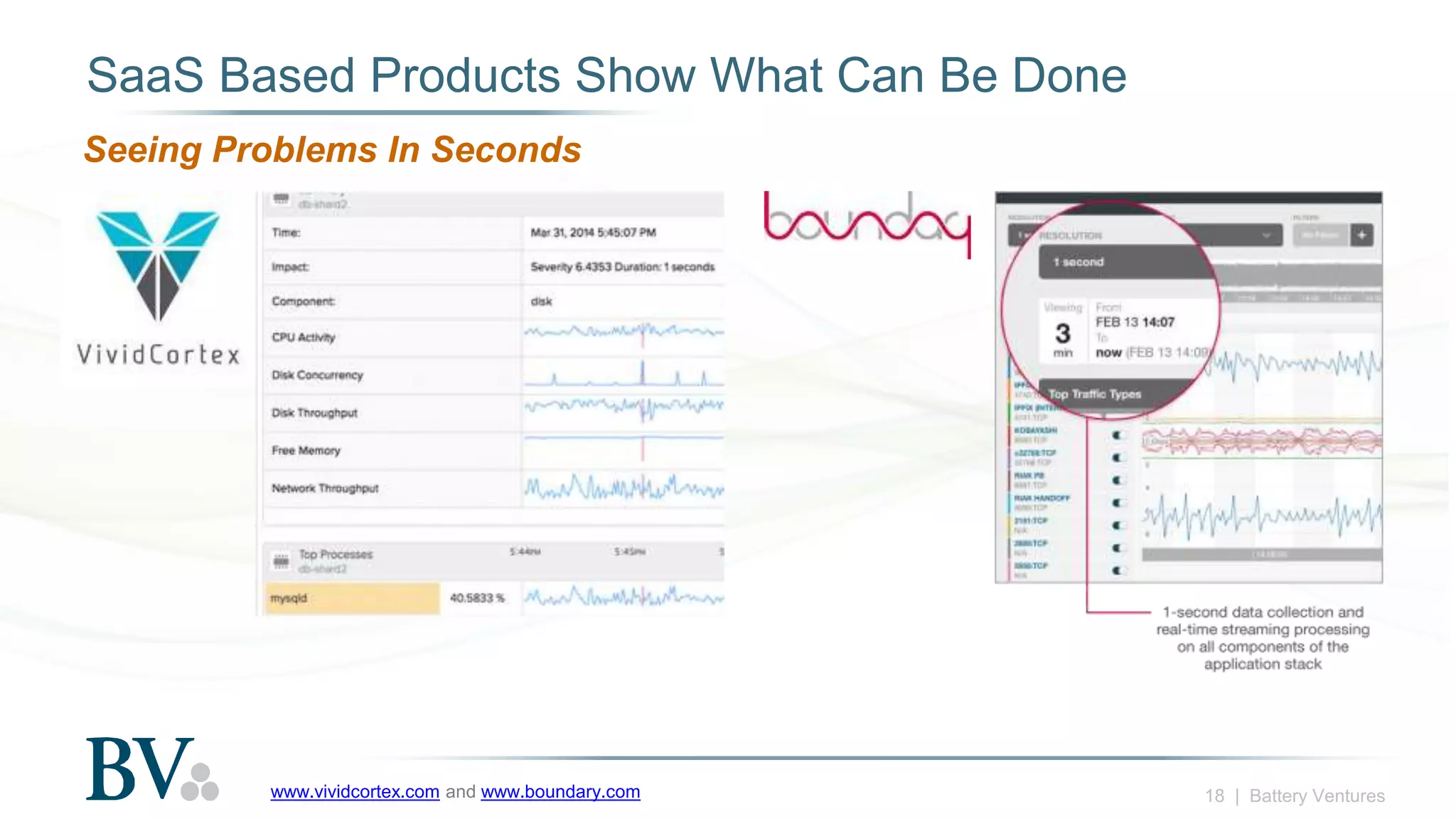

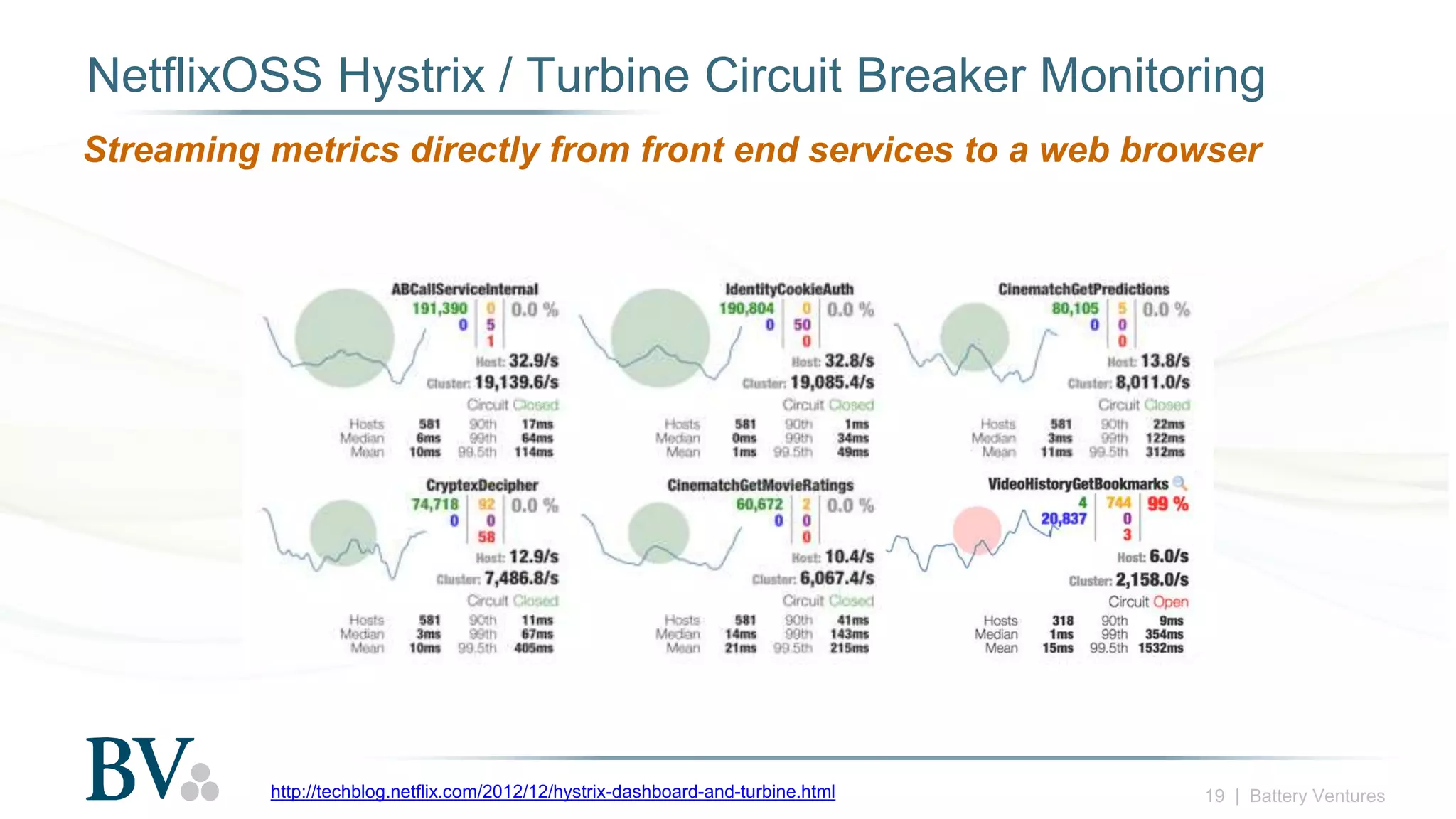

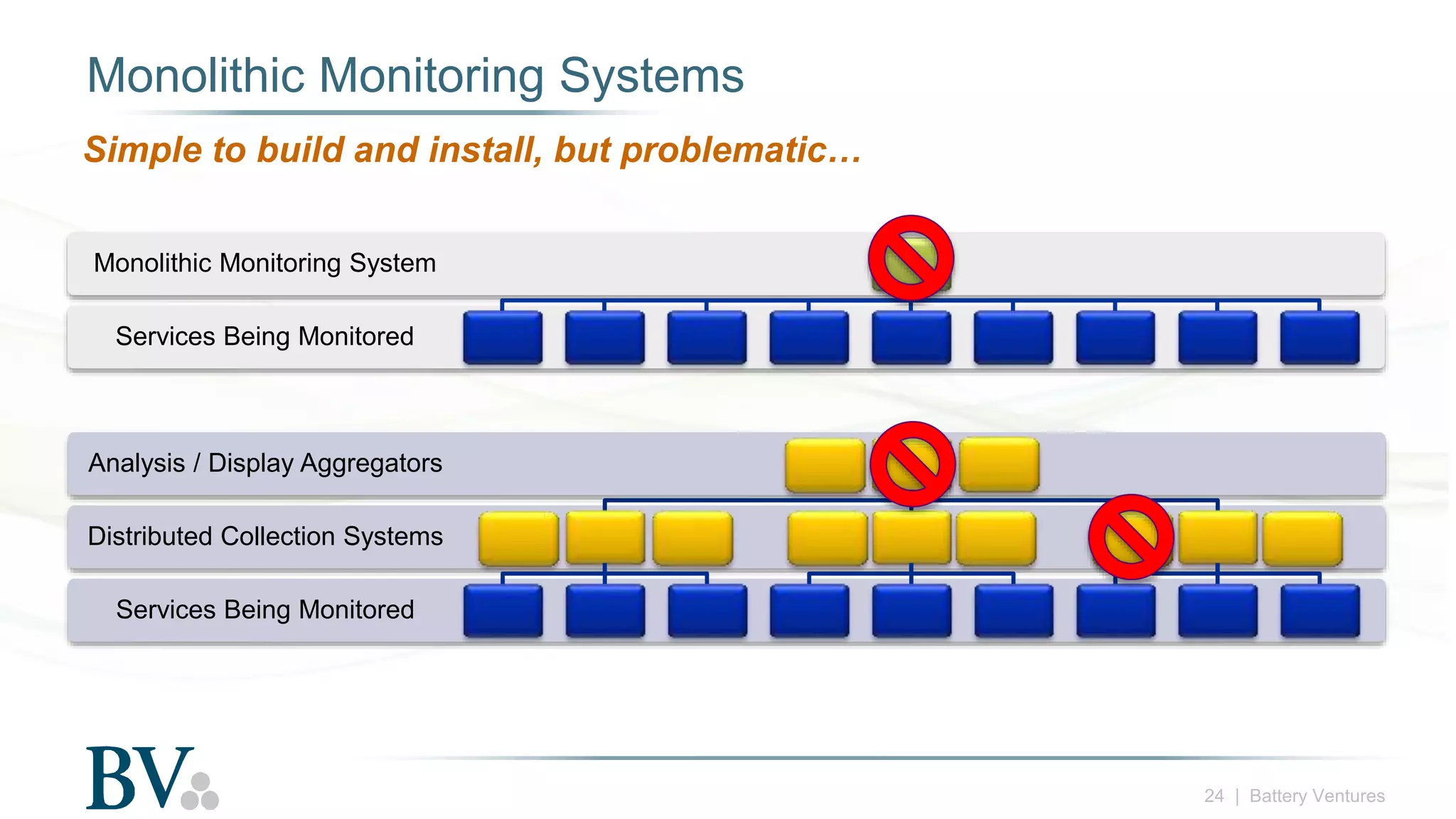

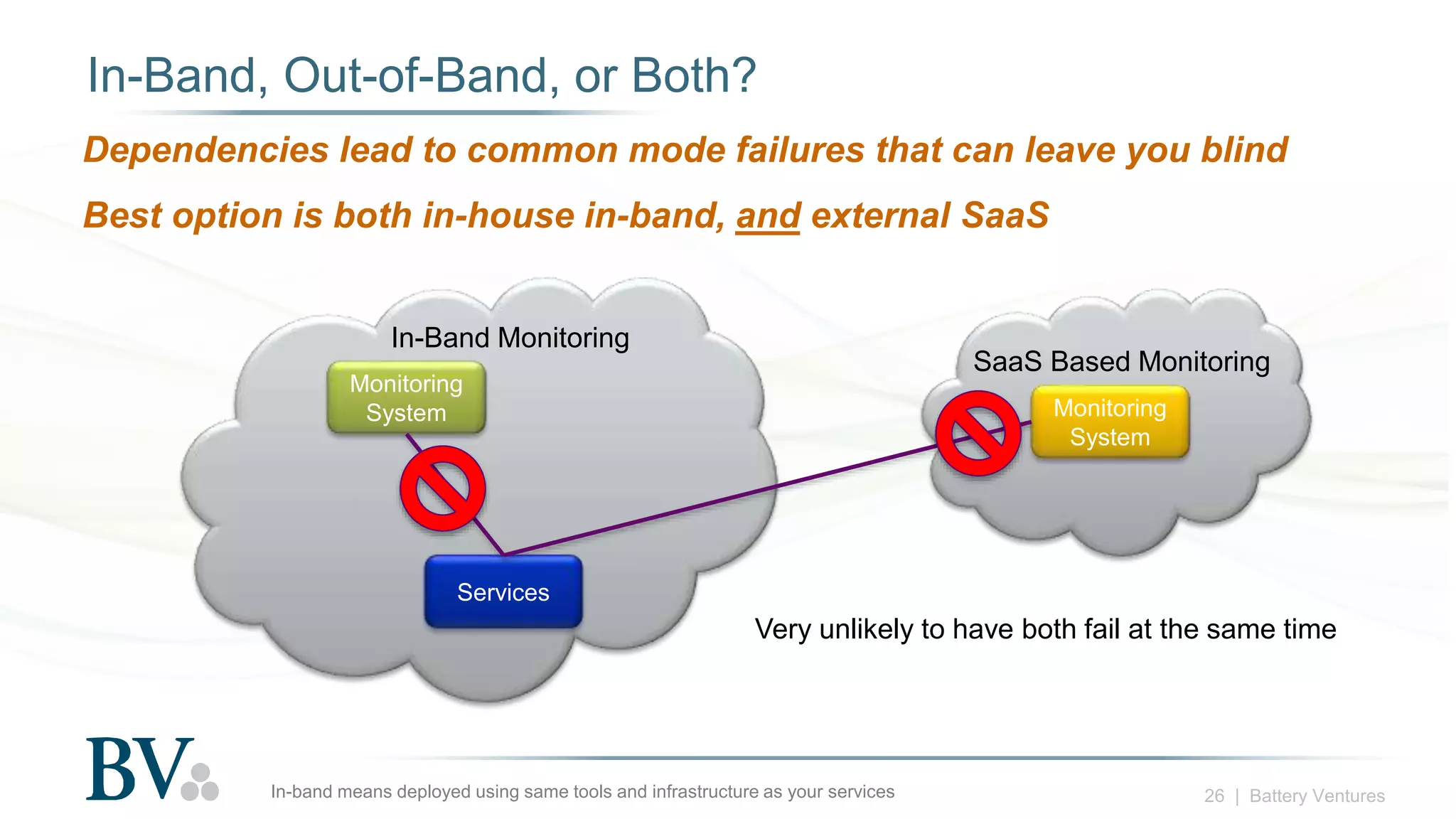

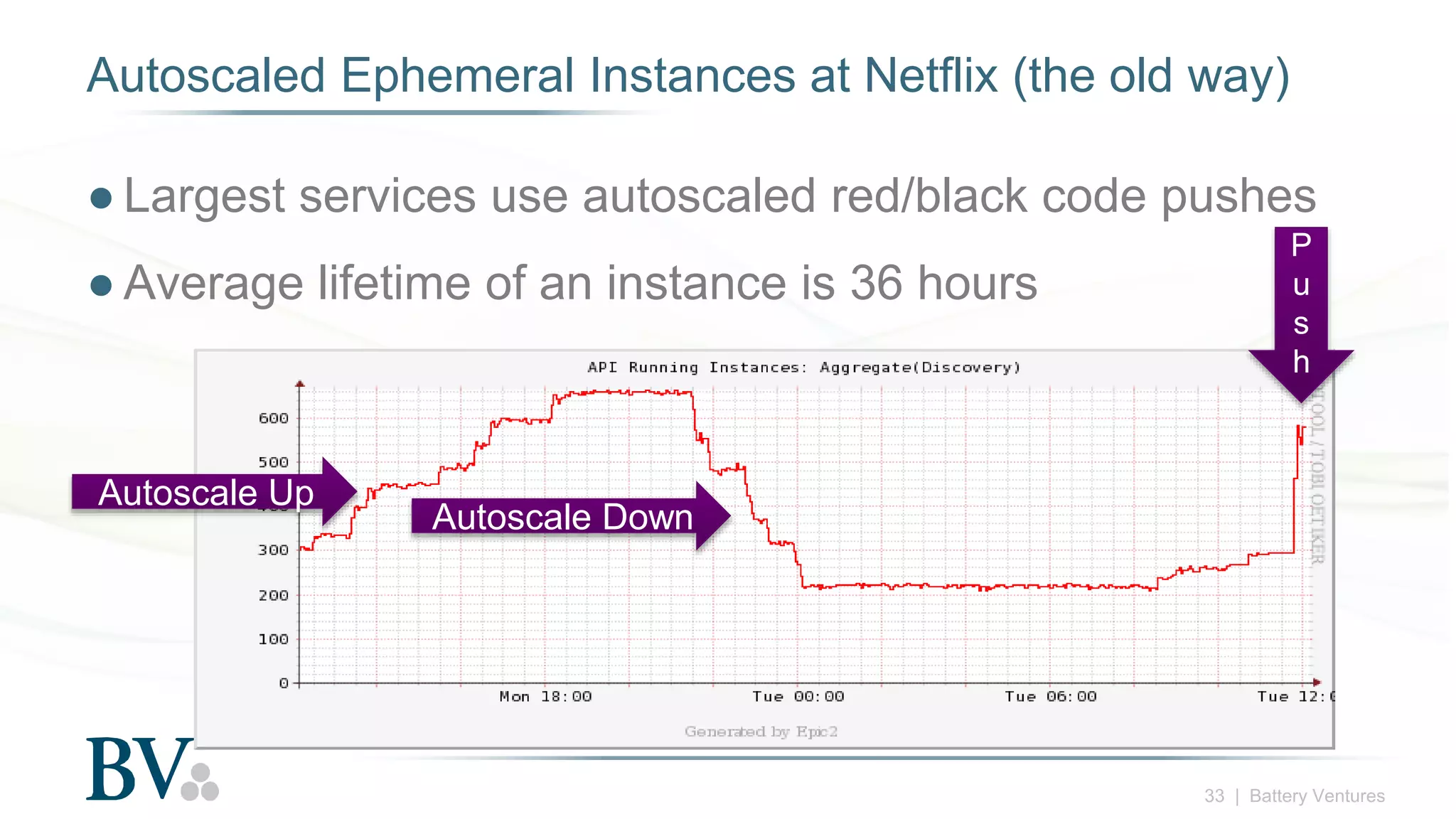

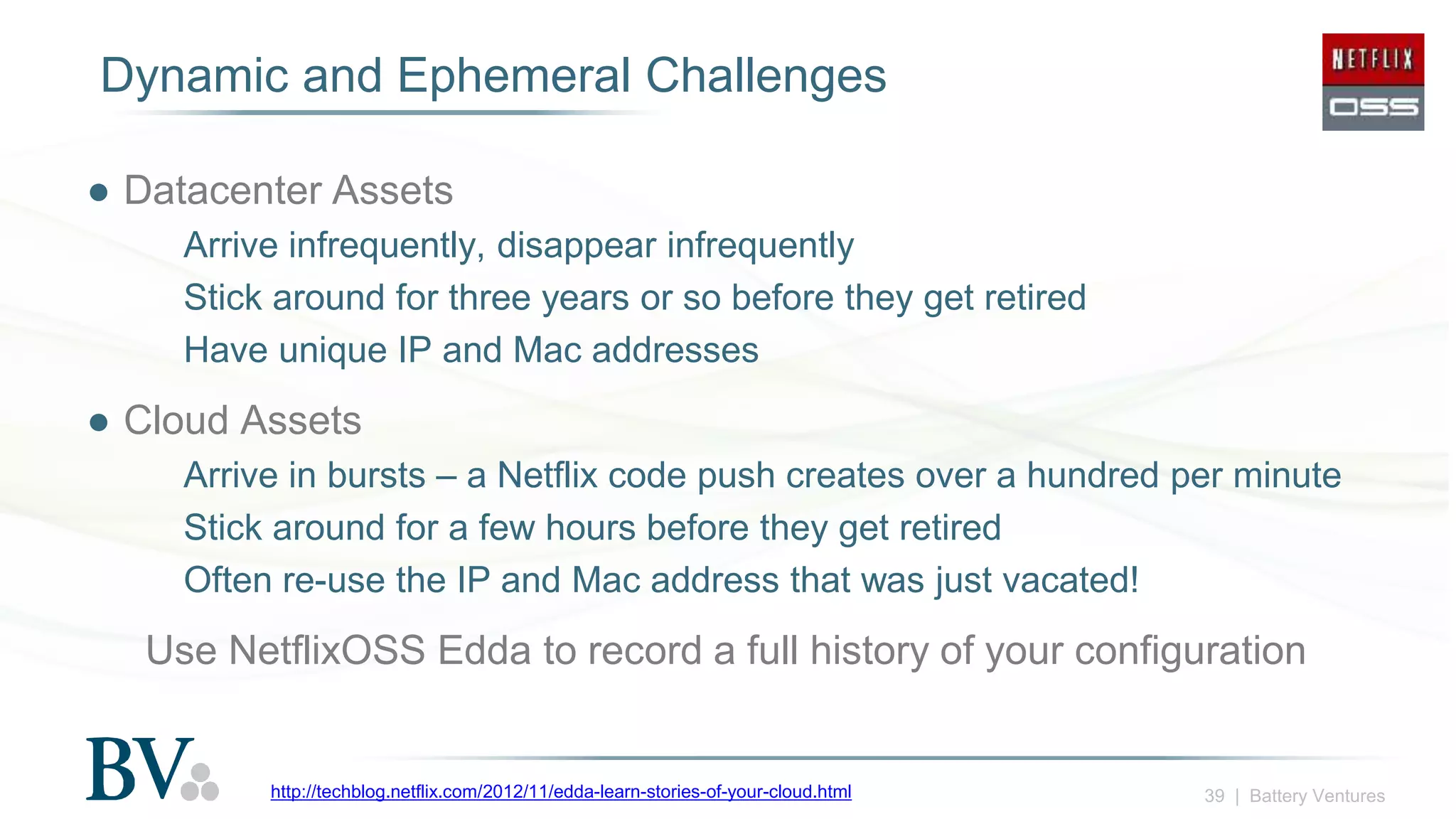

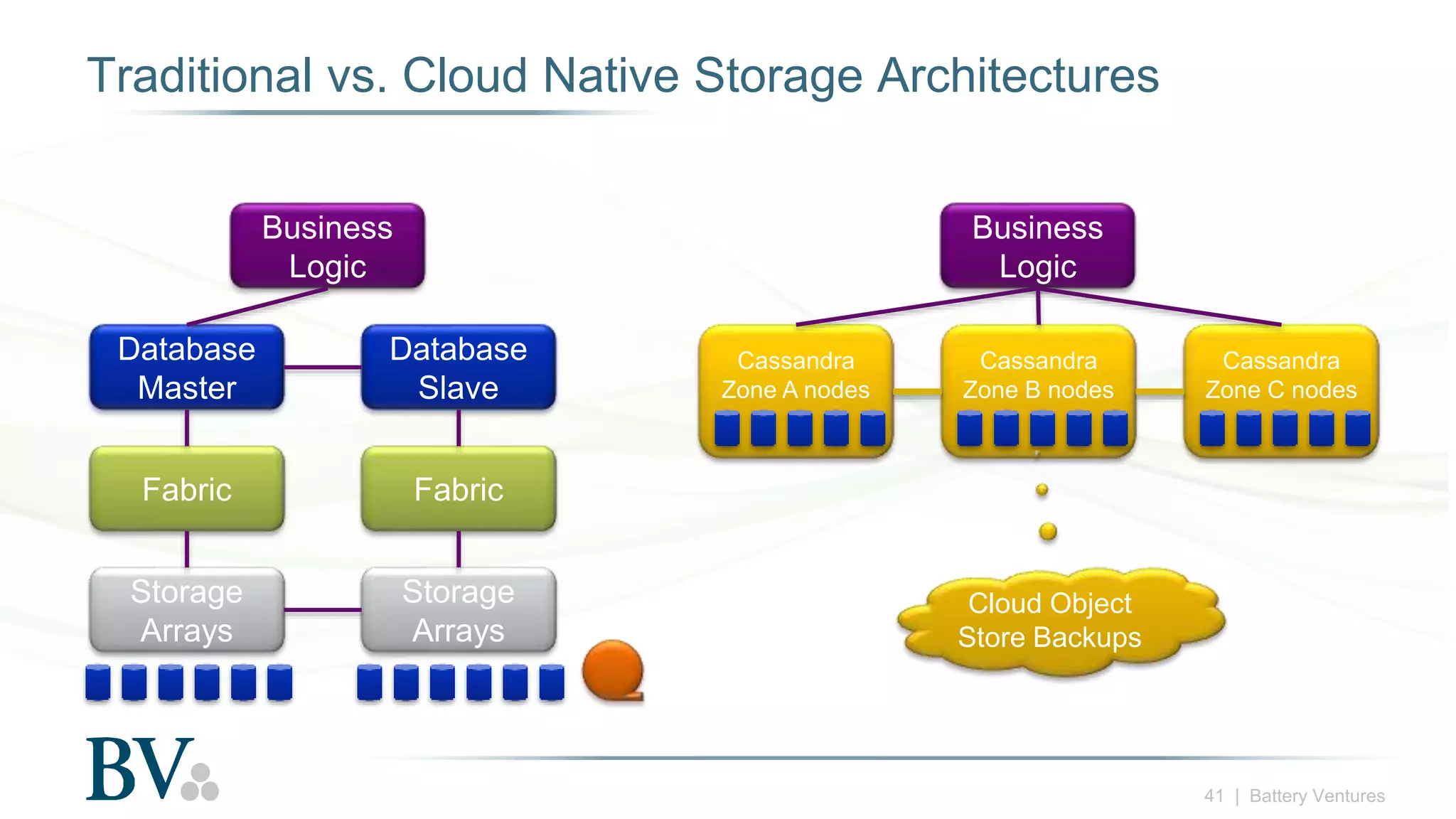

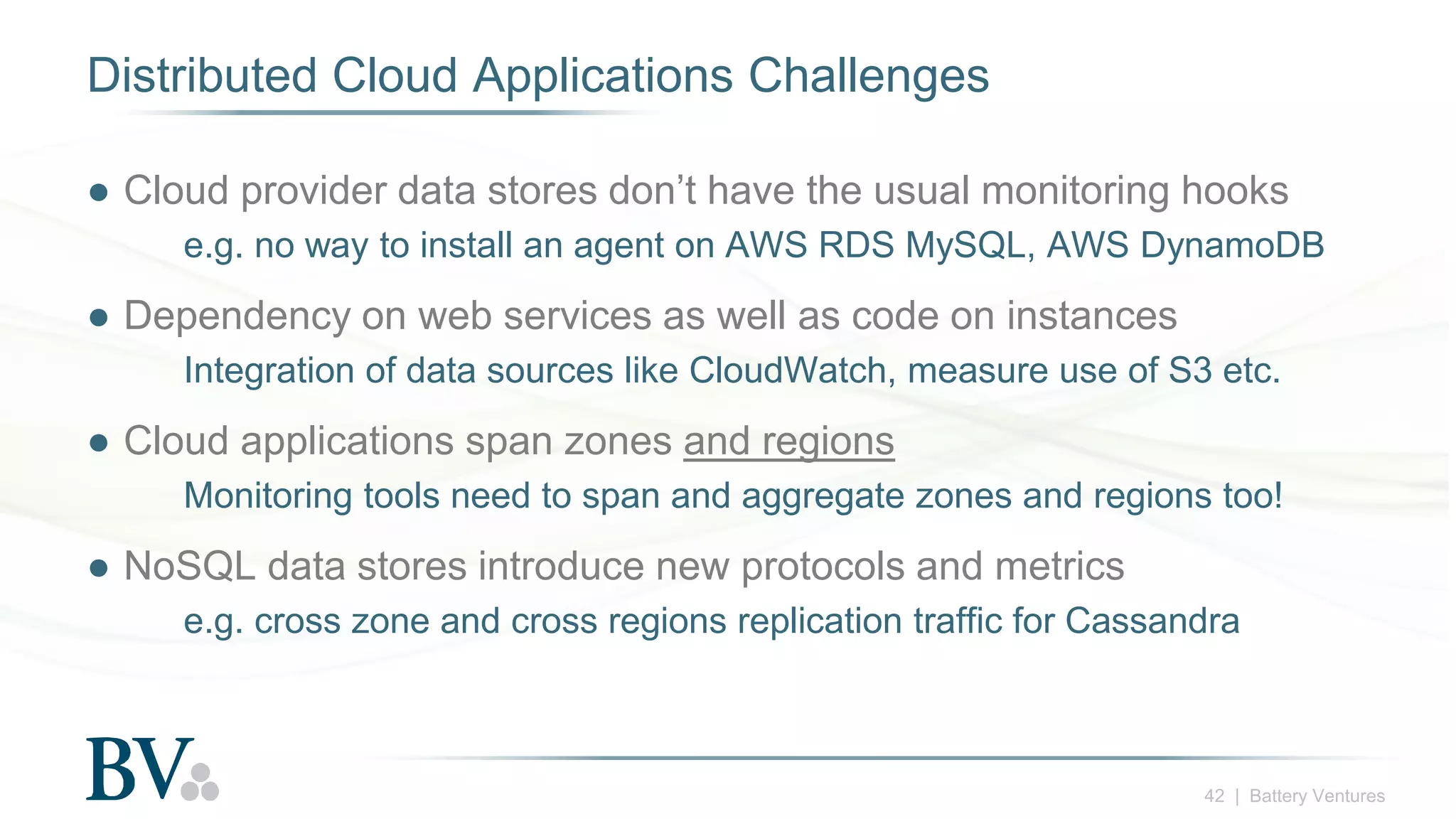

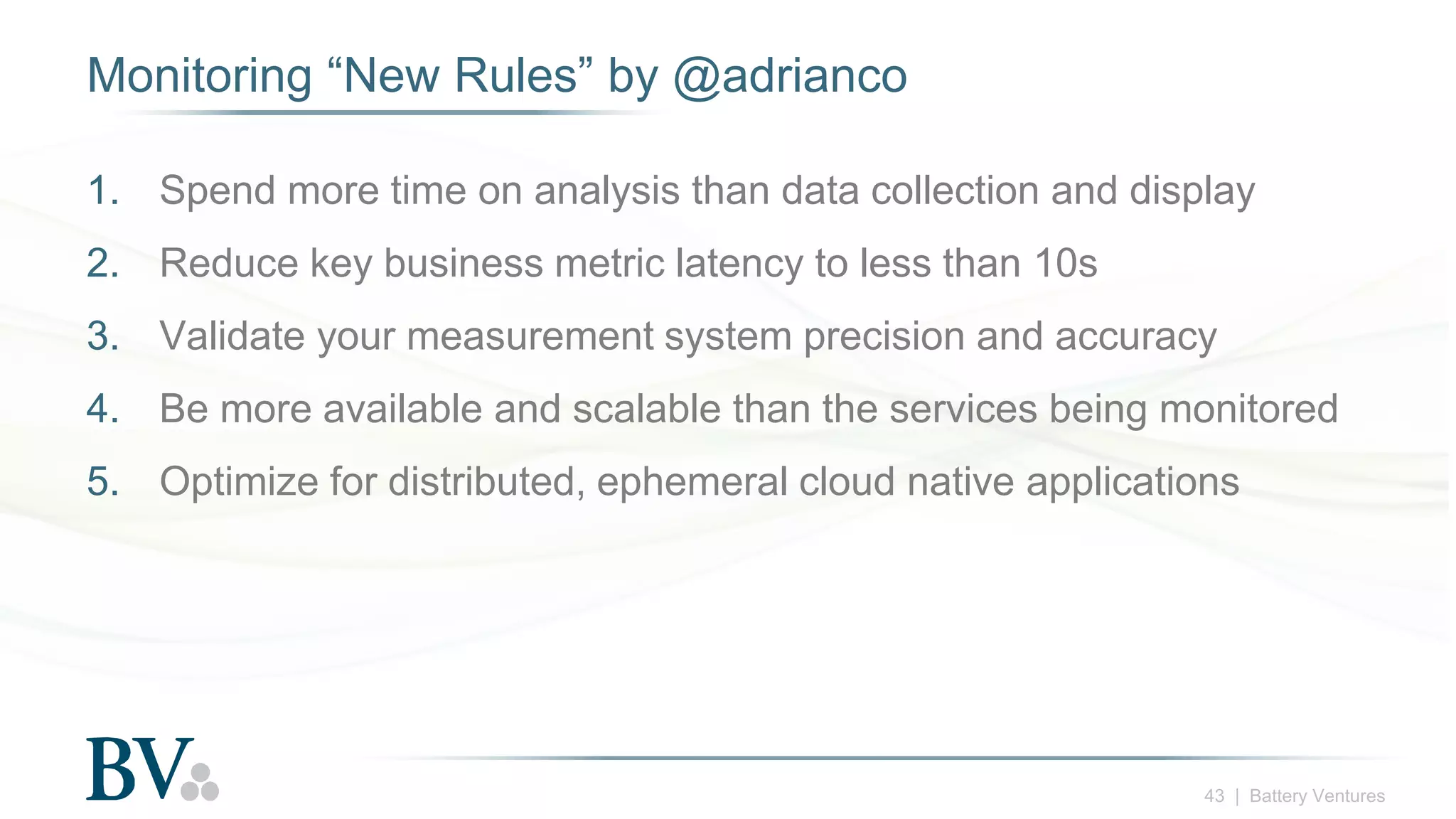

The document discusses the evolution and challenges of monitoring tools in the context of continuous delivery and microservices. It critiques traditional monitoring methods and argues for analyzing metrics rather than merely collecting and displaying them. The author emphasizes immediate problem detection and the scalability of monitoring systems, particularly for dynamic cloud applications.