Transport Layer Description By Varun Tiwari

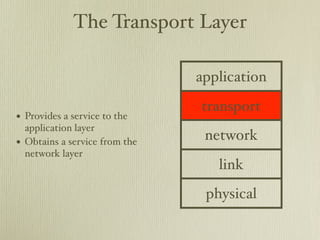

- 1. The Transport Layer • Provides a service to the application layer • Obtains a service from the network layer • [Figure: protocol stack: application, transport, network, link, physical]

- 2. The Transport Layer • Principles behind transport layer services • multiplexing/demultiplexing • reliable data transfer • flow control • congestion control • Transport layer protocols used in the Internet • UDP: connectionless • TCP: connection-oriented • TCP congestion control

- 3. Transport services and protocols • Provide logical communication between application processes running on different hosts • Transport protocols run on end systems • send side: break app messages into segments, pass to network layer • recv side: reassemble segments into messages, pass to app layer • End-to-end transport between sockets • network layer provides end-to-end delivery between hosts

- 4. Internet transport-layer protocols • TCP • connection-oriented, reliable in-order stream of bytes • congestion control, flow control, connection setup • users see stream of bytes - TCP breaks into segments • UDP • unreliable, unordered • users supply chunks to UDP, which wraps each chunk into a segment / datagram • Both TCP and UDP use IP, which is best-effort - no delay or bandwidth guarantees

- 5. Multiplexing • Goal: put several transport-layer ‘connections’ over one network-layer ‘connection’ • Multiplexing at send host: gathering data from multiple sockets, enveloping data with header (used for demultiplexing) • Demultiplexing at rcv host: delivering received segments to correct socket

- 6. Demultiplexing • Host receives IP datagrams • each datagram has source IP address, destination IP address • each datagram carries one transport-layer segment • each segment has source, destination port numbers • Host uses IP addresses and port numbers to direct segment to the appropriate socket • TCP/UDP segment format (32 bits wide): source port #, dest port #; other header fields; application data (message)

- 7. Connectionless (UDP) demultiplexing • Create sockets with port numbers (valid ports are 0 to 65535) DatagramSocket mySocket1 = new DatagramSocket(9111); DatagramSocket mySocket2 = new DatagramSocket(9222); • UDP socket identified by two-tuple: (destination IP address, destination port number) • When host receives UDP segment • checks destination port number in segment • directs UDP segment to socket with that port number • IP datagrams with different source IP addresses and/or source port numbers, but same dest address/port, are directed to the same socket

- 8. Connection-oriented (TCP) demultiplexing • TCP socket identified by four-tuple: (source IP address, source port number, destination IP address, destination port number) • Receiving host uses all four values to direct segment to the correct socket • Server host may support many simultaneous TCP sockets • each socket identified by own 4-tuple • Web servers have different sockets for each connecting client • non-persistent HTTP has different socket for each request
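
The difference between the two demultiplexing rules can be sketched as two lookup tables. This is an illustration only, not how an operating system stores sockets: the class `DemuxSketch`, its method names, and the socket labels are hypothetical, and the UDP table is keyed by port alone on the assumption that the host has a single IP address.

```java
import java.util.HashMap;
import java.util.Map;

final class DemuxSketch {
    // UDP: one socket per destination port (destination IP assumed to be this host).
    private final Map<Integer, String> udpSockets = new HashMap<>();
    // TCP: one socket per (source IP, source port, destination IP, destination port).
    private final Map<String, String> tcpSockets = new HashMap<>();

    private static String fourTuple(String srcIp, int srcPort, String dstIp, int dstPort) {
        return srcIp + ":" + srcPort + "->" + dstIp + ":" + dstPort;
    }

    void bindUdp(int dstPort, String socketName) {
        udpSockets.put(dstPort, socketName);
    }

    void openTcp(String srcIp, int srcPort, String dstIp, int dstPort, String socketName) {
        tcpSockets.put(fourTuple(srcIp, srcPort, dstIp, dstPort), socketName);
    }

    // Source address and port are ignored: every sender reaches the same UDP socket.
    String demuxUdp(String srcIp, int srcPort, int dstPort) {
        return udpSockets.get(dstPort);
    }

    // All four values take part in the lookup, so each client gets its own socket.
    String demuxTcp(String srcIp, int srcPort, String dstIp, int dstPort) {
        return tcpSockets.get(fourTuple(srcIp, srcPort, dstIp, dstPort));
    }
}
```

Two UDP senders with different source addresses land on the same socket, while two TCP clients connecting to the same server port are kept apart.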

- 10. User Datagram Protocol (UDP) • RFC 768 • The ‘no frills’ Internet transport protocol • ‘best effort’: UDP segments may be: • lost • delivered out of order • connectionless • no handshaking between sender and receiver • each segment handled independently of others • Why have UDP? • no connection establishment (means lower delay) • simple; no connection state at sender and receiver • small segment header • no congestion control: UDP can blast away as fast as desired • no retransmits: useful for some applications (lower delay)

- 11. UDP • Often used for streaming multimedia, games • loss-tolerant • rate or delay-sensitive • Also used for DNS, SNMP • If you need reliable transfer over UDP, can add reliability at application-layer • application-specific error recovery • but think about what you are doing... • UDP segment format (32 bits wide): source port #, dest port #; length (in bytes of UDP segment, including header), checksum; application data (message)

- 12. UDP checksum • Purpose: to detect errors (e.g., flipped bits) in a transmitted segment • Sender • treat segment contents as a sequence of 16-bit integers • checksum: addition (1’s complement sum) of segment contents • sender puts checksum value into UDP checksum field • Receiver • compute checksum of received segment • check if computed checksum equals checksum field value • NO = error detected • YES = no error detected (but maybe errors anyway?)

- 13. UDP checksum example • e.g., add two 16-bit integers • NB, when adding numbers, carry from MSB is added to result • 1110011001100110 + 1101010101010101 = 1 1011101110111011 (17 bits, leading 1 is the carry) • wraparound: add the carry back in • sum = 1011101110111100 • checksum (bitwise complement of sum) = 0100010001000011
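
The same arithmetic can be sketched in a few lines of Java. This is a minimal illustration of the one's-complement sum over 16-bit words, not a full UDP checksum (which also covers a pseudo-header); the class and method names are hypothetical.

```java
final class OnesComplementChecksum {
    /** Returns the 16-bit one's-complement checksum of the given 16-bit words. */
    static int checksum(int[] words) {
        int sum = 0;
        for (int w : words) {
            sum += w & 0xFFFF;
            // Wraparound: fold any carry out of bit 15 back into the low 16 bits.
            sum = (sum & 0xFFFF) + (sum >>> 16);
        }
        // The checksum is the bitwise complement of the folded sum.
        return ~sum & 0xFFFF;
    }

    public static void main(String[] args) {
        int c = checksum(new int[] {0xE666, 0xD555}); // the two words from the slide
        System.out.println(Integer.toBinaryString(c)); // leading zero is not printed
    }
}
```

With the slide's two words (0xE666 and 0xD555) the folded sum is 0xBBBC and the checksum is 0x4443, matching the binary values above.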

- 14. Reliable data transfer • Principles are important in app, transport, link layers • Complexity of the reliable data transfer (rdt) protocol determined by characteristics of unreliable channel

- 15. Send side • rdt_send(): called from above (e.g., by app). Passed data to deliver to receiver’s upper layer • udt_send(): called by rdt to transfer packet over unreliable channel to receiver • Receive side • rdt_recv(): called when packet arrives on rcv side of channel • deliver_data(): called by rdt to deliver data to upper layer

- 16. Developing an rdt protocol • Develop sender and receiver sides of rdt protocol • Consider only unidirectional data transfer • although control information will flow in both directions • Use finite state machines (FSM) to specify sender and receiver • state: when in this state, next state is uniquely determined by next event • each transition is labelled with the event causing the state transition and the actions taken on the state transition • [Figure: FSM notation with initial state, state 1 and state 2]

- 17. rdt1.0 • No bit errors, no packet loss, no packet reordering • [Figure: sender and receiver FSMs]

- 18. rdt2.0 • What if channel has bit errors (flipped bits)? • Use a checksum to detect bit errors • How to recover? • acknowledgements (ACKs): receiver explicitly tells sender that packet was received (“OK”) • negative acknowledgements (NAKs): receiver explicitly tells sender that packet had errors (“Pardon?”) • sender retransmits packet on receipt of a NAK • ARQ (Automatic Repeat reQuest) • New mechanisms needed in rdt2.0 (vs. rdt1.0) • error detection • receiver feedback: control messages (ACK, NAK) • retransmission

- 19. rdt2.0 • [Figure: sender and receiver FSMs]

- 20. But rdt2.0 doesn’t always work... • If ACK/NAK corrupted • sender doesn’t know what happened at receiver • shouldn’t just retransmit: possible duplicate • Solution: • sender adds sequence number to each packet • sender retransmits current packet if ACK/NAK garbled • receiver discards duplicates • Stop and wait • Sender sends one packet, then waits for receiver response • [Figure: sender/receiver timeline for data1, data2 and data3 with a corrupted data packet and a corrupted ACK/NAK]

- 21. rdt2.1 sender

- 22. rdt2.1 receiver

- 23. rdt2.1 • Sender • seq # added • two seq #’s (0,1) sufficient • must check if received ACK/NAK is corrupted • 2x state • state must “remember” whether “current” packet has 0 or 1 seq # • Receiver • must check if received packet is duplicate • state indicates whether 0 or 1 is expected seq # • receiver can not know if its last ACK/NAK was received OK at sender

- 24. rdt2.1 works! • [Figure: sender/receiver timeline: data1/0 is delivered and ACKed; data2/1 is corrupted and NAKed, then resent, delivered and ACKed; data3/0 is delivered and ACKed; a corrupted reply causes data3/0 to be resent and ACKed again]

- 25. Do we need NAKs? rdt2.2 • Instead of NAK, receiver sends ACK for last packet received OK • receiver explicitly includes seq # of packet being ACKed

- 26. rdt2.2 sender • duplicate ACK at sender results in the same action as a NAK: retransmit current packet

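- 27. rdt2.2 works! • [Figure: sender/receiver timeline: data1/0 is delivered, ACK0; data2/1 is corrupted, receiver repeats ACK0; data2/1 is resent and delivered, ACK1; data3/0 is delivered, but its ACK0 is garbled in transit; data3/0 is resent and ACK0 is sent again]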
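
A NAK-free receiver of this kind can be sketched as follows. It is a simplified model under stated assumptions: corruption is passed in as a boolean rather than detected with a checksum, and the class name `Rdt22Receiver` and its methods are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

final class Rdt22Receiver {
    private int expectedSeq = 0;                        // alternates between 0 and 1
    private final List<String> delivered = new ArrayList<>();

    /** Handles one arriving packet and returns the sequence number to put in the ACK. */
    int onPacket(int seq, String data, boolean corrupt) {
        if (!corrupt && seq == expectedSeq) {
            delivered.add(data);                        // deliver_data() to the upper layer
            expectedSeq = 1 - expectedSeq;
            return seq;                                 // ACK the packet just accepted
        }
        // Corrupt or duplicate packet: re-ACK the last packet received OK.
        return 1 - expectedSeq;
    }

    List<String> delivered() {
        return delivered;
    }
}
```

Feeding it data1/0, a corrupted data2/1, a clean data2/1, data3/0 and a duplicate data3/0 yields ACK0, ACK0, ACK1, ACK0, ACK0, with three packets delivered exactly once.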

- 28. What about loss? rdt3.0 • Assume: • Underlying channel can also lose packets (both data and ACKs) • checksum, seq #, ACKs, retransmissions will help, but not enough • Approach: • sender waits “reasonable” amount of time for ACK • retransmits if no ACK received in this time • if pkt (or ACK) just delayed (not lost) • retransmission is duplicate, but seq # handles this • receiver must specify seq # of packet being ACKed • requires countdown timer

- 29. rdt3.0 sender

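- 30. rdt3.0 in action • [Figure: sender/receiver timeline: data1/0 is delivered, ACK0; data2/1 is lost, the sender times out and resends it, data2 is delivered, ACK1; data3/0 is delivered but ACK0 is lost, the sender times out and resends data3/0, the receiver sends ACK0 again]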

- 31. rdt3.0 works, but not very well... • e.g., 1Gbps link, 15 ms propagation delay, 1KB packet: • $T_{transmit} = \frac{L}{R} = \frac{8\ \text{kb/pkt}}{10^{9}\ \text{b/sec}} = 8\ \mu\text{s}$ • $U_{sender} = \frac{L/R}{RTT + L/R} = \frac{0.008}{30.008} = 0.00027$ • L = packet length in bits, R = transmission rate in bps • $U_{sender}$ = utilisation - the fraction of time sender is busy sending • 1 KB packet every 30 ms → 33 kB/s throughput over a 1Gbps link • good value for money upgrading to Gigabit Ethernet! • network protocol limits the use of the physical resources! • because rdt3.0 is stop-and-wait

- 32. Pipelining • Pipelined protocols • send multiple packets without waiting • number of outstanding packets > 1, but still limited • range of sequence numbers needs to be increased • buffering at sender and/or receiver • Two generic forms • go-back-N and selective repeat
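
As a rough worked example of what pipelining buys, assume the sender may keep $N$ packets in flight and reuse the stop-and-wait figures from slide 31 ($L/R = 0.008$ ms, $RTT = 30$ ms). The choice $N = 3$ is purely illustrative, and the formula holds only while the result stays below 1.

$$
U_{sender} = \frac{N \cdot L/R}{RTT + L/R}
\qquad\Rightarrow\qquad
U_{sender}\Big|_{N=3} = \frac{3 \times 0.008}{30.008} \approx 0.0008
$$

Three outstanding packets roughly triple the utilisation compared with stop-and-wait (0.00027).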

- 33. Go-back-N • Sender • k-bit sequence number in packet header • “window” of up to N consecutive unACKed packets allowed • ACK(n): ACKs all packets up to, including sequence number n = “cumulative ACK” • (sender may receive duplicate ACKs) • timer for each packet in flight • timeout(n): retransmit packet n and all higher sequence # packets in window

- 34. Go-back-N sender

- 35. Go-back-N receiver • ACK-only: always send ACK for correctly-received packet with highest in-order sequence number • may generate duplicate ACKs • only need to remember expectedseqnum • out-of-order packet: • discard (don’t buffer), i.e., no receiver buffering • reACK packet with highest in-order sequence number

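- 36. go-back-N in action • [Figure: sender/receiver timeline: data1 and data2 arrive and are ACKed (ACK1, ACK2); data3 is lost; data4 and data5 arrive out of order and are discarded, each answered with ACK2; the data3 timer expires and the sender resends data3, data4, ...; the receiver answers ACK3, ACK4]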
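
The sender-side bookkeeping can be sketched like this. It is a simplified model, not the FSM from the slides: sequence numbers are unbounded integers starting at 1 (no k-bit wraparound), timers are left to the caller, and the names `GoBackNSender`, `send`, `onAck` and `onTimeout` are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

final class GoBackNSender {
    private final int windowSize;  // N
    private int base = 1;          // oldest unACKed sequence number
    private int nextSeqNum = 1;    // next sequence number to use

    GoBackNSender(int windowSize) {
        this.windowSize = windowSize;
    }

    /** Returns the sequence number assigned to a new packet, or -1 if the window is full. */
    int send() {
        if (nextSeqNum >= base + windowSize) {
            return -1;
        }
        return nextSeqNum++;
    }

    /** Cumulative ACK: everything up to and including n is acknowledged. */
    void onAck(int n) {
        if (n >= base) {
            base = n + 1;          // duplicate ACKs (n < base) change nothing
        }
    }

    /** timeout(n): resend packet n and all higher-numbered packets already sent. */
    List<Integer> onTimeout(int n) {
        List<Integer> resend = new ArrayList<>();
        for (int s = Math.max(n, base); s < nextSeqNum; s++) {
            resend.add(s);
        }
        return resend;
    }

    int base() {
        return base;
    }
}
```

With N = 4, sending four packets fills the window; ACK1 frees one slot for data5, and a timeout on data3 after two ACK2s returns packets 3, 4 and 5 for retransmission.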

- 37. Selective Repeat • If we lose one packet in go-back-N • must send all N packets again • Selective Repeat (SR) • only retransmit packets that didn’t make it • Receiver individually acknowledges all correctly-received packets • buffers packets as needed for eventual in-order delivery to upper layer • Sender only resends pkts for which ACK not received • sender timer for each unACKed packet • Sender window • N consecutive sequence numbers • as in go-back-N, limits seq numbers of sent, unACKed pkts

- 39. Selective Repeat • Sender • if next available seq # is in window, send packet • timeout(n): resend pkt n, restart timer • ACK(n) in [sendbase, sendbase+N-1]: • mark packet n as received • if n is smallest unACKed packet, advance window base to next unACKed seq # • Receiver • pkt n in [rcvbase, rcvbase+N-1]: • send ACK(n) • if out of order, buffer • if in order: deliver (also deliver any buffered, in-order pkts), advance window to next not-yet-received pkt • pkt n in [rcvbase-N, rcvbase-1]: • send ACK(n) • even though already ACKed • otherwise • ignore • Need ≥ 2N sequence numbers • or reuse may confuse receiver

- 40. SR in action • [Figure: sender/receiver timeline over sequence numbers 1 to 10: data1 and data2 are sent and ACKed; data3 is lost; data4 is sent (window full); once ACK1 is received the sender sends data5 and then data6, which are buffered and ACKed (ACK4, ACK5, ...); the data3 timer expires and data3 is resent; data3 received, so data3-6 delivered up, ACK3 sent]
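
The receiver's buffering rule can be sketched as below. This is an illustration under simplifying assumptions: sequence numbers are unbounded integers starting at 1 rather than a wrapped 2N-sized space, and the class `SelectiveRepeatReceiver` and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class SelectiveRepeatReceiver {
    private final int windowSize;                       // N
    private int rcvBase = 1;                            // lowest not-yet-received seq #
    private final Map<Integer, String> buffer = new HashMap<>();
    private final List<String> delivered = new ArrayList<>();

    SelectiveRepeatReceiver(int windowSize) {
        this.windowSize = windowSize;
    }

    /** Handles packet n; returns true if ACK(n) should be sent, false if it is ignored. */
    boolean onPacket(int n, String data) {
        if (n >= rcvBase && n < rcvBase + windowSize) {
            buffer.putIfAbsent(n, data);                // buffer (possibly out of order)
            while (buffer.containsKey(rcvBase)) {       // deliver any in-order run
                delivered.add(buffer.remove(rcvBase));
                rcvBase++;                              // advance the window
            }
            return true;
        }
        // Already delivered, within the previous window: ACK again, deliver nothing.
        return n >= rcvBase - windowSize && n < rcvBase;
    }

    List<String> delivered() {
        return delivered;
    }
}
```

With N = 4, packets 1 and 2 are delivered at once, packets 4, 5 and 6 wait in the buffer, and the late arrival of packet 3 releases 3 through 6 in order.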

- 41. TCP • point-to-point • one sender, one receiver • reliable, in-order byte stream • no “message boundaries” • pipelined • TCP congestion control and flow control set window size • send and receive buffers • full-duplex data • bi-directional data flow in same connection • MSS: maximum segment size • connection-oriented • handshaking initialises sender, receiver state before data exchange • flow-controlled • sender will not overwhelm receiver

- 42. TCP segment structure • Segment format (32 bits wide): source port #, dest port #; sequence number; acknowledgement number; head len, not used, flags U A P R S F, receive window; checksum, urgent data pointer; options (variable length); application data (message) • sequence and acknowledgement numbers are counted in bytes (not segments) • U = urgent data (not often used) • A = ACK # valid • P = push data (not often used) • R, S, F = RST, SYN, FIN = connection setup/teardown commands • receive window = # bytes receiver willing to accept • checksum = Internet checksum (like UDP)

- 43. TCP sequence numbers and ACKs • Sequence numbers • byte-stream # of first byte in segment’s data • ACKs • seq # of next byte expected from other side • cumulative ACK • How does receiver handle out-of-order segments? • Spec doesn’t say; up to implementor • Most buffer and wait for missing to be retransmitted

- 44. TCP RTT and timeout • How to set TCP timeout? • longer than RTT • but RTT can vary • too short → premature timeout • unnecessary retransmissions • too long → slow reaction to loss • How to estimate RTT? • sampleRTT: measured time from segment transmission until ACK receipt • ignore retransmissions • sampleRTT will vary, but we want “smooth” estimated RTT • average several recent measurements, not just current • So estimate RTT: $\text{EstimatedRTT} = (1 - \alpha) \cdot \text{EstimatedRTT} + \alpha \cdot \text{SampleRTT}$ • Exponentially-weighted moving average • Influence of past samples decreases exponentially fast • typical α = 0.125

- 46. TCP timeout • Timeout = EstimatedRTT + “safety margin” • if timeouts too short, too many retransmissions • if margin is too large, timeouts take too long • the larger the variation in EstimatedRTT, the larger the margin • first estimate of deviation: $\text{DevRTT} = (1 - \beta) \cdot \text{DevRTT} + \beta \cdot |\text{SampleRTT} - \text{EstimatedRTT}|$ (typical β = 0.25) • Then set timeout interval: $\text{TimeoutInterval} = \text{EstimatedRTT} + 4 \cdot \text{DevRTT}$
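
The three formulas can be combined into a small estimator. This is a sketch, not TCP's actual code: the seed values (an initial RTT supplied by the caller, a deviation of zero) are assumptions, the class name `RttEstimator` is hypothetical, and the deviation is updated before the mean so that it uses the previous EstimatedRTT, which is the order RFC 6298 prescribes.

```java
final class RttEstimator {
    private static final double ALPHA = 0.125;  // typical α from the slides
    private static final double BETA = 0.25;    // typical β from the slides

    private double estimatedRtt;                // milliseconds
    private double devRtt;                      // milliseconds

    RttEstimator(double initialRttMs) {
        this.estimatedRtt = initialRttMs;       // assumed seed value
        this.devRtt = 0.0;                      // assumed seed value
    }

    /** Folds one SampleRTT measurement into both moving averages. */
    void onSample(double sampleRttMs) {
        devRtt = (1 - BETA) * devRtt + BETA * Math.abs(sampleRttMs - estimatedRtt);
        estimatedRtt = (1 - ALPHA) * estimatedRtt + ALPHA * sampleRttMs;
    }

    double getEstimatedRtt() {
        return estimatedRtt;
    }

    double getDevRtt() {
        return devRtt;
    }

    /** TimeoutInterval = EstimatedRTT + 4 * DevRTT. */
    double getTimeoutInterval() {
        return estimatedRtt + 4 * devRtt;
    }
}
```

Starting from 100 ms, a single 200 ms sample moves EstimatedRTT to 112.5 ms and DevRTT to 25 ms, giving a timeout interval of 212.5 ms.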

- 47. RDT in TCP • TCP provides RDT on top of unreliable IP • Pipelined segments • Cumulative ACKs • Single retransmission timer • Retransmissions triggered by : • timeout events • duplicate ACKs

- 48. TCP sender events • Data received from app: • Create segment with seq # • seq # is byte-stream number of first data byte in segment • start timer if not already running (timer for oldest unACKed segment) • expiration interval = TimeOutInterval • Timeout: • retransmit segment that caused timeout • restart timer • ACK received: • If ACK acknowledges previously-unACKed segments • update what is known to be ACKed • start timer if there are outstanding segments

- 49. TCP sender (simplified) • SendBase-1 = last cumulatively-ACKed byte • e.g., SendBase-1 = 71; y = 73, so receiver wants 73+ • y > SendBase, so the new data is ACKed

      NextSeqNum = InitialSeqNum
      SendBase = InitialSeqNum
      loop (forever) {
        switch(event)
          event: data received from application above
            create TCP segment with sequence number NextSeqNum
            if (timer currently not running)
              start timer
            pass segment to IP
            NextSeqNum = NextSeqNum + length(data)
          event: timer timeout
            retransmit not-yet-acknowledged segment with smallest sequence number
            start timer
          event: ACK received, with ACK field value of y
            if (y > SendBase) {
              SendBase = y
              if (there are currently not-yet-acknowledged segments)
                start timer
            }
      } /* end of loop forever */

- 50. TCP retransmissions - lost ACK • [Figure: Host A sends SEQ=92, 8 bytes data; Host B replies ACK=100, which is lost; A times out and resends SEQ=92, 8 bytes data; B replies ACK=100 again; SendBase = 100]

- 51. TCP retransmissions - premature timeout • [Figure: Host A sends SEQ=92, 8 bytes data, then SEQ=100, 20 bytes data; Host B replies ACK=100 and ACK=120; the SEQ=92 timer expires before ACK=100 arrives, so A resends SEQ=92, 8 bytes data; SendBase moves to 100, then 120; B answers the duplicate with ACK=120]

- 52. TCP retransmissions - saving retransmits • [Figure: Host A sends SEQ=92, 8 bytes data, then SEQ=100, 20 bytes data; ACK=100 is lost, but the cumulative ACK=120 arrives before the timeout; SendBase = 120 and nothing is retransmitted]

- 53. TCP ACK generation (event at receiver → TCP receiver action) • Arrival of in-order segment with expected seq #. All data up to expected seq # already ACKed. → Delayed ACK. Wait up to 500ms for next segment. If no next segment, send ACK. • Arrival of in-order segment with expected seq #. One other segment has ACK pending. → Immediately send single cumulative ACK, ACKing both in-order segments. • Arrival of out-of-order segment higher than expected seq #. Gap detected. → Immediately send duplicate ACK, indicating seq # of next expected byte. • Arrival of segment that partially or completely fills gap. → Immediately send ACK, provided that segment starts at lower end of gap.

- 54. TCP Fast Retransmit • Timeout is often quite long • so long delay before resending lost packet • Lost segments are detected via DUP ACKs • sender often sends many segments back-to-back (pipeline) • if segment is lost, there will be many DUP ACKs • If a sender receives 3 duplicate ACKs for the same data, it assumes that the segment after the ACKed data was lost • fast retransmit: resend segment before the timer expires
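
The trigger condition can be sketched as a small counter. The sketch counts three duplicates arriving after the original ACK, which is the threshold RFC 5681 uses; the class name `DupAckCounter` is hypothetical, and the actual retransmission is left to the caller.

```java
final class DupAckCounter {
    private long lastAck = -1;   // highest ACK value seen so far (-1 = none yet)
    private int dupCount = 0;    // duplicates of lastAck seen since it first arrived

    /** Returns true exactly when the third duplicate of the current ACK arrives. */
    boolean onAck(long ackNo) {
        if (ackNo == lastAck) {
            dupCount++;
            return dupCount == 3;   // fire once: resend the segment starting at ackNo
        }
        lastAck = ackNo;            // new ACK value: start counting duplicates again
        dupCount = 0;
        return false;
    }
}
```

Four identical ACK=100 segments in a row (one original plus three duplicates) trigger the retransmission on the fourth; further duplicates do not trigger it again.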

- 55. TCP flow control Flow control: prevent sender from overwhelming receiver • Receiver side of TCP connection has a receive buffer • Application process may be slow at reading from buffer • Need ‘speed-matching’ service: match send rate to receiving application’s ‘drain’ rate

- 56. TCP flow control • Spare room in buffer = RcvWindow = RcvBuffer - [LastByteRcvd - LastByteRead] • Receiver advertises spare room by including value of RcvWindow in segments • value is dynamic • Sender limits unACKed data to RcvWindow • guarantees receive buffer will not overflow

- 57. TCP connection management • TCP sender and receiver establish ‘connection’ before exchanging data segments • Initialise TCP variables: • sequence numbers • buffers, flow control info • Client: initiates connection: Socket clientSocket = new Socket(hostname, portNumber); • Server: contacted by client: Socket connectionSocket = welcomeSocket.accept(); • Three way handshake • 1. Client host sends TCP SYN segment to server • specifies initial sequence # • no data • 2. Server receives SYN, replies with SYNACK segment • server allocates buffers • specifies server initial seq # • 3. Client receives SYNACK, replies with ACK • may contain data
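
The two socket calls on this slide can be tried out over the loopback interface. This is a minimal sketch that assumes a host where loopback TCP is available; the class `LoopbackConnect` and method `sendLine` are hypothetical names, and the handshake itself is carried out by the operating system, not by this code.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

final class LoopbackConnect {
    /** Opens a TCP connection over loopback, sends one line, and returns what the server read. */
    static String sendLine(String line) {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        // Port 0 asks the OS for any free port; a backlog of 1 queues the pending connection.
        try (ServerSocket welcomeSocket = new ServerSocket(0, 1, loopback);
             // Connecting makes the OS send the SYN and complete the handshake.
             Socket clientSocket = new Socket(loopback, welcomeSocket.getLocalPort());
             // accept() returns a new socket dedicated to this one client.
             Socket connectionSocket = welcomeSocket.accept()) {
            PrintWriter out = new PrintWriter(clientSocket.getOutputStream(), true);
            out.println(line);
            BufferedReader in = new BufferedReader(
                    new InputStreamReader(connectionSocket.getInputStream()));
            return in.readLine();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(sendLine("hello over TCP"));
    }
}
```

The welcoming socket only listens; each accepted connection gets its own `connectionSocket`, which is what lets a server keep one socket per client four-tuple.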

- 58. TCP connection management • Closing a connection • 1. Client host sends TCP FIN segment to server • specifies initial sequence # • no data • 2. Server receives FIN, replies with ACK segment, closes connection, sends FIN • 3. Client receives FIN, replies with ACK, enters ‘timed wait’ • during timed wait, will respond with ACK to FINs • 4. Server receives ACK, closes. • [Figure: client/server timeline: client close, FIN; server ACK; server close, FIN; client ACK, timed wait; both closed]

- 59. TCP connection management TCP server lifecycle TCP client lifecycle

- 60. Other TCP flags • RST = reset the connection • used e.g., to reset non-synchronised handshakes • or if host tries to connect to server on non-listening port • PSH = push • receiver should pass data to upper layer immediately • receiver pushes all data in window up • URG = urgent • sender’s upper layer has marked data in segment as urgent • location of last byte of urgent data indicated by urgent data pointer • URG and PSH are hardly ever used • except Blitzmail, which appears to use PSH for every segment • See RFC 793 for more info (also 1122, 1323, 2018, 2581)

- 61. Congestion control • What is congestion? • Too many sources sending too much data too fast for the network to handle • Not flow control! • network, not end systems • Manifestations • lost packets (buffer overflow at routers) • long delays (queueing in router buffers) • One of the most important problems in networking

- 62. Congestion control: scenario 1 • 2 senders, 2 receivers • link capacity R • 1 router, infinite buffers • no retransmissions • large delays when congested • maximum achievable throughput = R/2

- 63. Congestion control: scenario 2 • 1 router, finite buffers • sender retransmits lost packets • λin = sending rate, λ′in = offered load (inc. retransmits)

- 64. Congestion control: scenario 2 • λin = λout (goodput) • ‘perfect’ retransmission, only when loss: λ′in > λout • retransmissions of delayed (not lost) packets means λ′in greater than perfect case • So congestion causes • more work (retransmits) for given goodput • unnecessary retransmissions; link carries multiple copies

- 65. Congestion control: scenario 3 • 4 senders • A → C • B → D • finite buffers • multihop paths • timeouts/retransmits

- 66. Congestion control: scenario 3 • A → C limited by R1 → R2 link • B → D traffic saturates R2 • A → C end-to-end throughput goes to zero • may as well have used R1 for something else • So congestion causes • packet drops: any upstream transmission capacity used for that packet is wasted

- 67. Approaches to congestion control • End-to-end congestion control • no explicit feedback from network • congestion inferred from end-system observed loss and delay • this is what TCP does • Network-assisted congestion control • routers provide feedback to end systems • direct feedback, e.g. choke packet • mark single bit indicating congestion • tells sender the explicit rate at which it should send • ATM, DECbit, TCP/IP ECN

- 68. ATM ABR congestion control • ATM (Asynchronous Transfer Mode) • alternative network architecture • virtual circuits, fixed-size cells • ABR (Available Bit Rate) • elastic service • if sender’s path is underloaded, sender should use available bandwidth • if sender’s path is congested, sender is throttled to the minimum guaranteed rate • RM (Resource Management) cells • sent by sender, interspersed with data cells • bits in RM cell set by switches (i.e., network-assisted CC) • NI bit: no increase in rate (mild congestion) • CI bit: congestion indication • RM cells are returned to the sender by receiver, with bits intact

- 69. ATM ABR congestion control • 2-byte ER (Explicit Rate) field in RM cell • congested switch may lower ER value in cell • sender’s send rate is thus the minimum supportable rate on path • EFCI bit in data cell is set to 1 in a congested switch • if data cell preceding RM cell has EFCI set, sender sets CI bit in returned RM cell

- 70. TCP congestion control • end-to-end (no network assist) • sender limits transmission: $\text{LastByteSent} - \text{LastByteAcked} \le \min\{\text{CongWin}, \text{RcvWindow}\}$ • $\text{rate} = \frac{\text{CongWin}}{RTT}$ Bytes/sec • CongWin is dynamic function of perceived congestion • How does sender perceive congestion? • loss event: timeout or 3 DUP ACKs • TCP sender reduces rate (CongWin) after loss event • 3 mechanisms: AIMD, slow start, conservative after timeouts • TCP congestion control is self-clocking

- 71. TCP AIMD • Multiplicative decrease • halve CongWin after loss event • Additive increase • increase CongWin by 1 MSS every RTT in the absence of loss events (probing) • [Figure: TCP ‘sawtooth’]

- 72. TCP Slow Start • When connection begins, CongWin = 1 MSS • e.g., MSS = 500 bytes, RTT = 200 ms • initial rate = 20 kbps • But available bandwidth may be ≥ MSS/RTT • want to quickly ramp up to respectable rate • When connection begins, increase rate exponentially until the first loss event • double CongWin every RTT • increment CongWin for every ACK received • Slow start: sender starts sending at slow rate, but quickly speeds up

- 73. TCP slow start • [Figure: Host A/Host B timeline: 1 segment in the first RTT, 2 segments in the second, 4 segments in the third]

- 74. TCP - reaction to timeout events • After 3 DUP ACKs • CongWin halved • window then grows linearly • But after timeout • CongWin set to 1 MSS • window then grows exponentially • to threshold, then grows linearly (AIMD: congestion avoidance) • Why? • 3 DUP ACKs means network capable of delivering some segments, so do Fast Recovery (TCP Reno) • timeout before 3 DUP ACKs is more troubling

- 75. TCP - reaction to timeout events • When to switch from exponential to linear? • When CongWin gets to ½ of its value before timeout • Implementation: • Threshold variable • At loss event, Threshold is set to ½ of CongWin just before loss event

- 76. TCP congestion control - summary • When CongWin is below Threshold, sender in slow start phase; window grows exponentially • When CongWin is above Threshold, sender in congestion-avoidance phase; window grows linearly • When a triple duplicate ACK occurs, Threshold set to CongWin/2 and CongWin set to Threshold • When timeout occurs, Threshold set to CongWin/2 and CongWin set to 1 MSS
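
The four rules in this summary can be sketched as a tiny state machine. This is a per-RTT model with windows measured in MSS, not an implementation of TCP: real stacks adjust the window per ACK, the cap of slow-start growth at Threshold is an assumption of this sketch, and the class name `CongWinSketch` is hypothetical.

```java
final class CongWinSketch {
    private double congWin = 1.0;   // congestion window, in MSS
    private double threshold;       // slow-start threshold, in MSS

    CongWinSketch(double initialThreshold) {
        this.threshold = initialThreshold;
    }

    /** One RTT in which every segment was ACKed. */
    void onRttWithoutLoss() {
        if (congWin < threshold) {
            // Slow start: double per RTT (capped at Threshold, an assumption here).
            congWin = Math.min(congWin * 2, threshold);
        } else {
            // Congestion avoidance: additive increase of 1 MSS per RTT.
            congWin += 1;
        }
    }

    /** Triple duplicate ACK: Threshold = CongWin/2, CongWin = Threshold. */
    void onTripleDupAck() {
        threshold = congWin / 2;
        congWin = threshold;
    }

    /** Timeout: Threshold = CongWin/2, CongWin = 1 MSS. */
    void onTimeout() {
        threshold = congWin / 2;
        congWin = 1.0;
    }

    double getCongWin() {
        return congWin;
    }

    double getThreshold() {
        return threshold;
    }
}
```

Starting with a threshold of 8 MSS, the window runs 1, 2, 4, 8, 9, 10, ... per RTT; a triple duplicate ACK at 12 drops it to 6, while a timeout sends it back to 1.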

- 77. TCP throughput • W = window size when loss occurs • When window = W, throughput = W/RTT • After loss, window = W/2, throughput = W/(2·RTT) • Average throughput = 0.75W/RTT • (ignoring slow start, assume throughput increases linearly between W/2 and W)
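
The 0.75 factor follows directly from the linear-growth assumption: if throughput rises in a straight line from its value just after a loss to its value just before the next one, its time average is the midpoint of the two.

$$
\text{average throughput}
= \frac{1}{2}\left(\frac{W}{2\,RTT} + \frac{W}{RTT}\right)
= \frac{3}{4}\cdot\frac{W}{RTT}
$$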

- 78. High-speed TCP • assume: 1500 byte MSS (common for Ethernet), 100ms RTT, 10Gbps desired throughput • W = 83,333 segments • a big CongWin! What if loss? • Throughput in terms of loss: $\frac{1.22 \cdot MSS}{RTT \cdot \sqrt{L}}$ • so L (loss rate) = $2 \cdot 10^{-10}$ (1 loss every 5 billion segments) • is this realistic? • Lots of people working on modifying TCP for high-speed networks
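
Both numbers on this slide can be checked by hand. The working below uses the slide's own figures (MSS = 1500 bytes = 12,000 bits, RTT = 0.1 s, target throughput $10^{10}$ b/s) and simply rearranges the loss formula for $L$.

$$
W = \frac{\text{throughput} \cdot RTT}{MSS}
= \frac{10^{10} \times 0.1}{12{,}000} \approx 83{,}333 \text{ segments}
$$

$$
\sqrt{L} = \frac{1.22 \cdot MSS}{RTT \cdot \text{throughput}}
= \frac{1.22 \times 12{,}000}{0.1 \times 10^{10}} \approx 1.46 \times 10^{-5}
\quad\Rightarrow\quad
L \approx 2 \times 10^{-10}
$$

A loss rate of $2 \times 10^{-10}$ corresponds to roughly one lost segment in every five billion.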

- 79. Fairness • If k TCP sessions share same bottleneck link of bandwidth R, each should have an average rate of R/k

- 80. How is TCP fair? Two competing sessions • additive increase gives slope of 1 as throughput increases • multiplicative decrease decreases throughput proportionally • suppose we are at A • total < R, so both increase • B, total > R, so loss • both decrease window by a factor of 2 • C • total < R, so both increase • etc...

- 81. Fairness • multimedia apps often use UDP • do not want congestion/flow control to throttle rate • pump A/V at constant rate, tolerate packet loss • How to enforce fairness in UDP? • application-layer congestion control • long-term throughput of a UDP flow is equivalent to a TCP flow on the same link • Parallel TCP connections • nothing to stop application from opening parallel connections between two hosts (web browser, download ‘accelerator’) • e.g., link of rate R with 9 connections • new app asks for 1 TCP, gets rate R/(9+1) = R/10 • new app asks for 11 TCP, gets rate 11R/(9+11) ≈ R/2 (!)

- 82. What is ‘fair’? • Max-min fairness • Give the flow with the lowest rate the largest possible share • Proportional fairness • TCP favours short flows • Proportional fairness: flows allocated bandwidth in proportion to number of links traversed • Pareto-fairness • can’t give another flow any more bandwidth without taking bandwidth away from another flow • Per-link fairness • each flow gets a fair share of each link traversed • Utility functions/pricing • I pay/want more, I get more

- 83. Quick history of the Internet • [Figure: timeline of Internet milestones from 1957 to 2004, annotated with the number of connected hosts growing from 4 to 300 million]