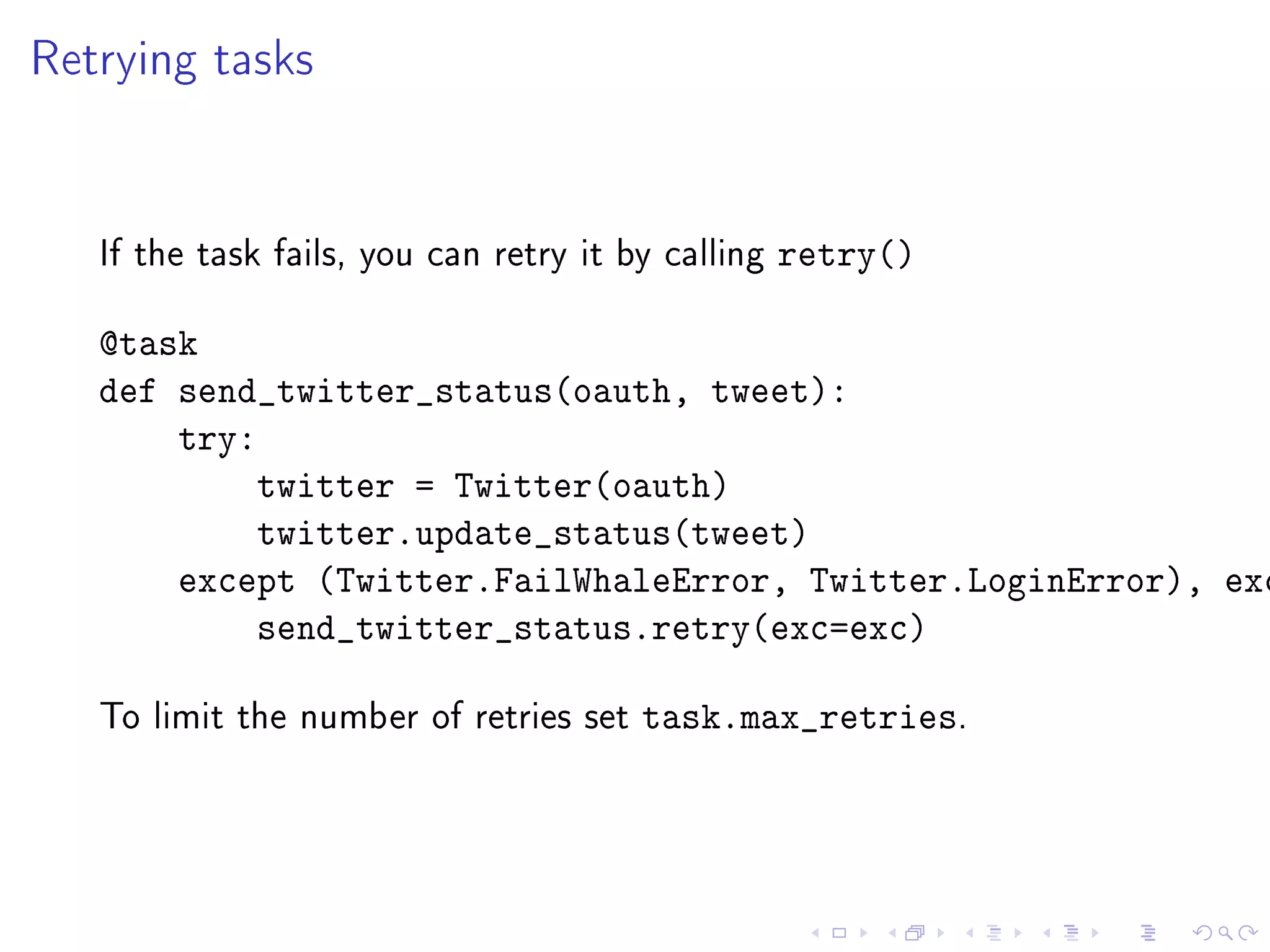

This document discusses using Celery and RabbitMQ to handle asynchronous and distributed tasks in Python. It provides an overview of Celery and RabbitMQ and how they can be used together. Examples are given of defining and running tasks, including periodic tasks, task sets, routing, retries, and interacting with results. The benefits of using Celery for slow, memory-intensive, or externally-dependent tasks are highlighted.

![self.__dict__

{'name': 'Òscar Vilaplana',

'origin': 'Catalonia',

'company': 'Paylogic',

'tags': ['developer', 'architect', 'geek'],

'email': 'dev@oscarvilaplana.cat',

}](https://image.slidesharecdn.com/talk-120410024546-phpapp02/75/Celery-with-python-4-2048.jpg)

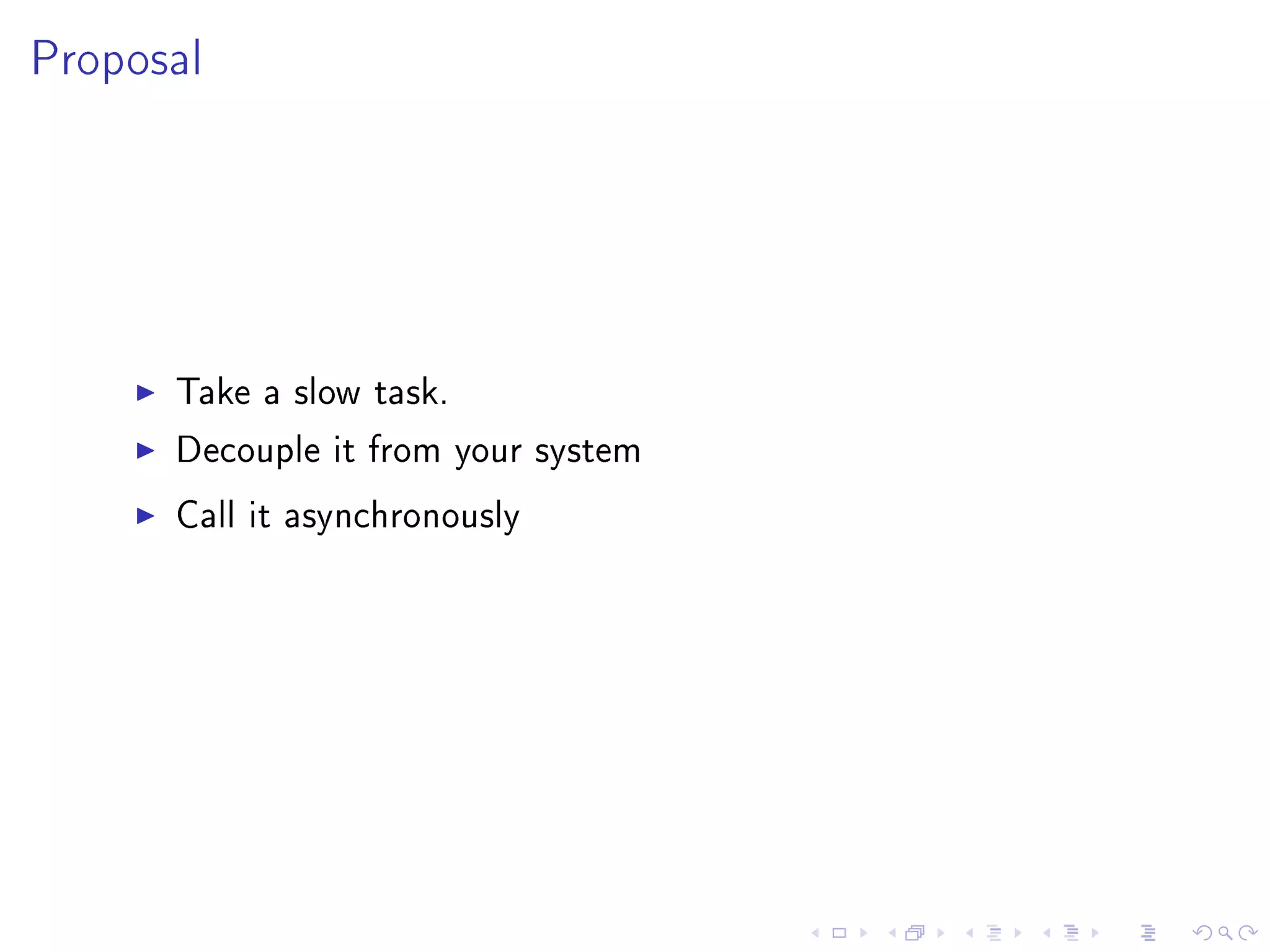

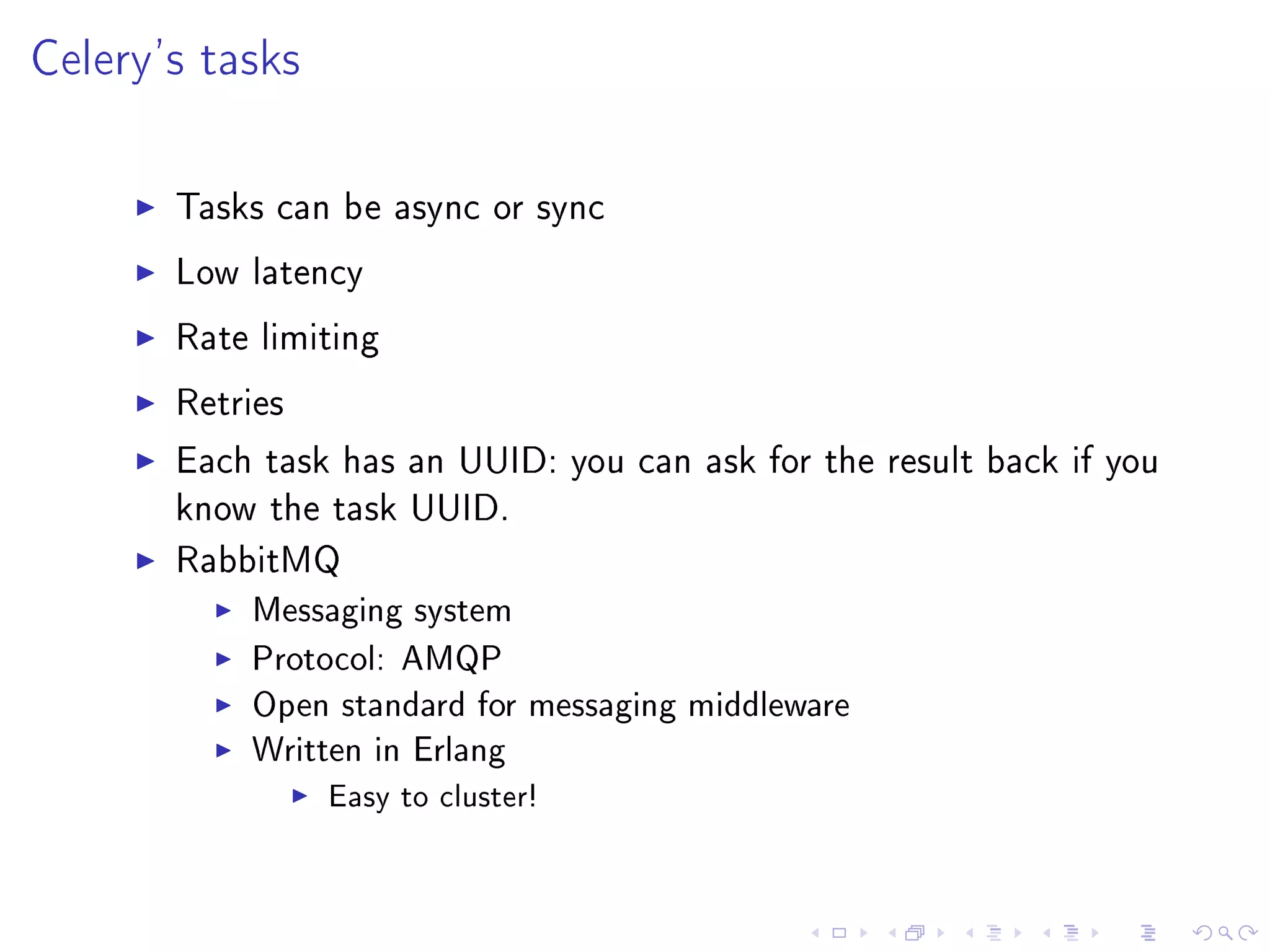

![Set up a cluster

rabbit1$ rabbitmqctl cluster_status

Cluster status of node rabbit@rabbit1 ...

[{nodes,[{disc,[rabbit@rabbit1]}]},{running_nodes,[rabbit@ra

...done.

rabbit2$ rabbitmqctl stop_app

Stopping node rabbit@rabbit2 ...done.

rabbit2$ rabbitmqctl reset

Resetting node rabbit@rabbit2 ...done.

rabbit2$ rabbitmqctl cluster rabbit@rabbit1

Clustering node rabbit@rabbit2 with [rabbit@rabbit1] ...done

rabbit2$ rabbitmqctl start_app

Starting node rabbit@rabbit2 ...done.](https://image.slidesharecdn.com/talk-120410024546-phpapp02/75/Celery-with-python-15-2048.jpg)

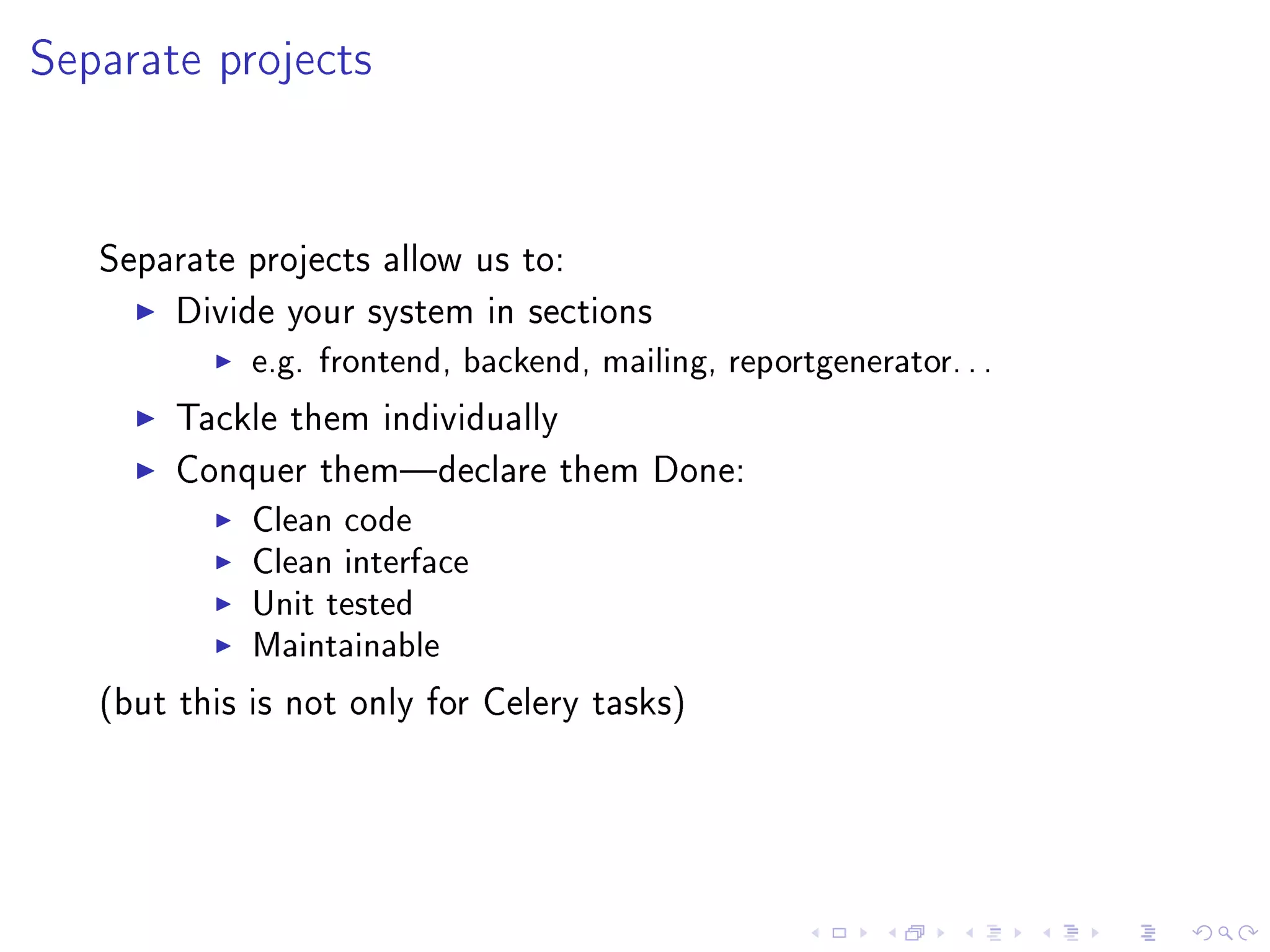

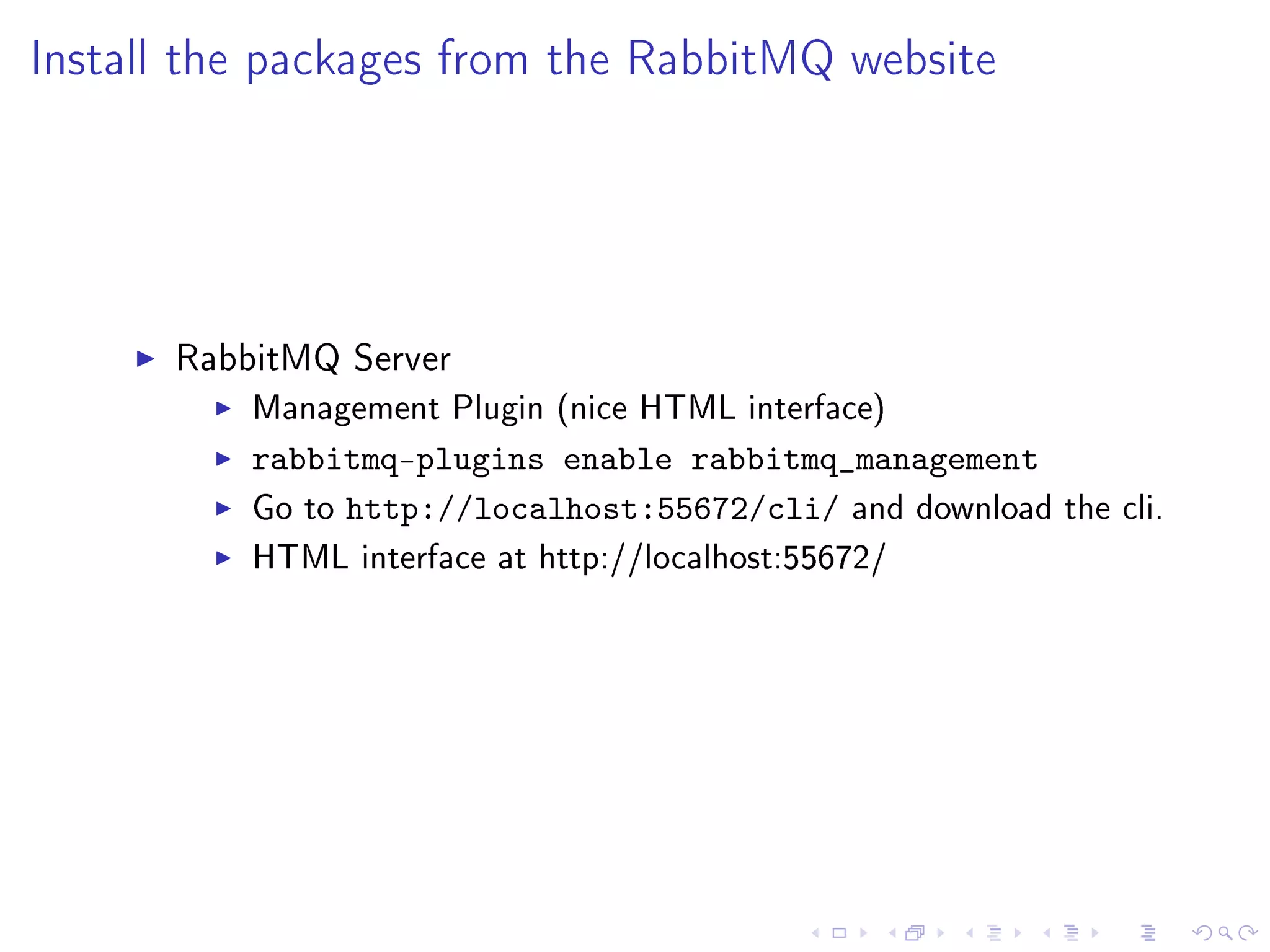

![TaskSet

Run several tasks at once. The result keeps the order.

from celery.task.sets import TaskSet

from tasks import add

job = TaskSet(tasks=[

add.subtask((4, 4)),

add.subtask((8, 8)),

add.subtask((16, 16)),

add.subtask((32, 32)),

])

result = job.apply_async()

result.ready() # True -- all subtasks completed

result.successful() # True -- all subtasks successful

values = result.join() # [4, 8, 16, 32, 64]

print values](https://image.slidesharecdn.com/talk-120410024546-phpapp02/75/Celery-with-python-26-2048.jpg)

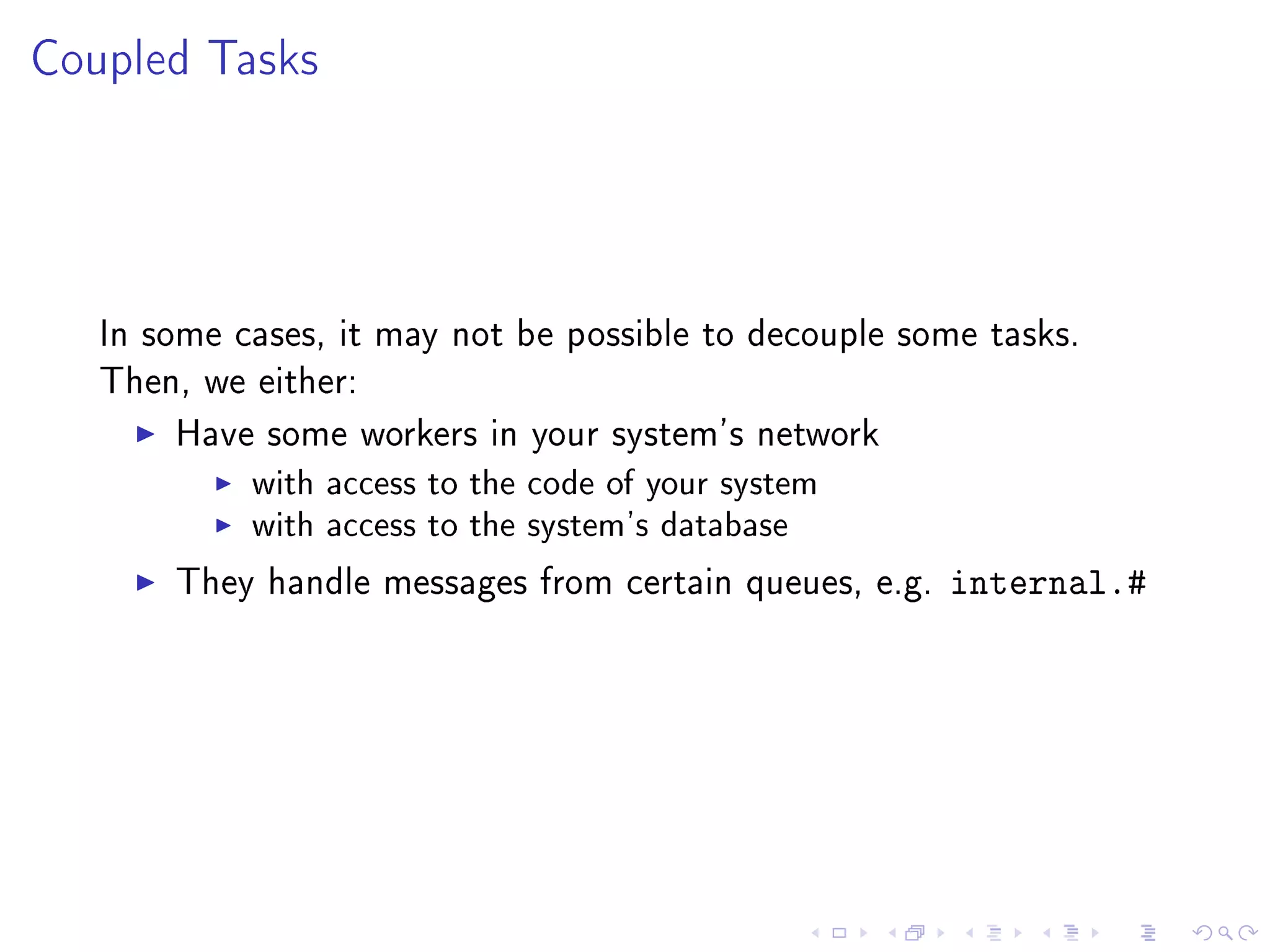

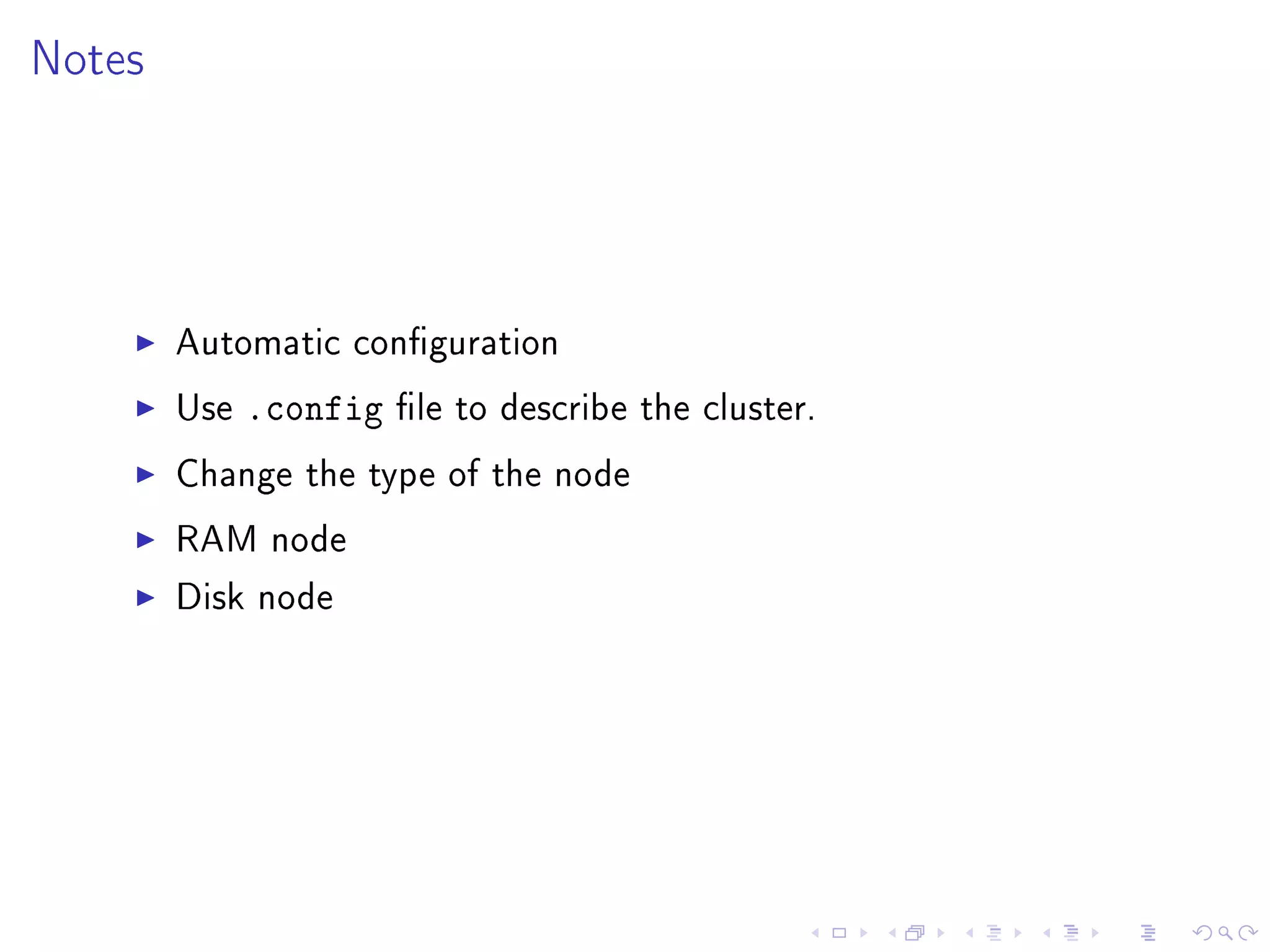

![Routing

apply_async accepts the parameter routing to create some

RabbitMQ queues

pdf: ticket.#

import_files: import.#

Schedule the task to the appropriate queue

import_vouchers.apply_async(args=[filename],

routing_key=import.vouchers)

generate_ticket.apply_async(args=barcodes,

routing_key=ticket.generate)](https://image.slidesharecdn.com/talk-120410024546-phpapp02/75/Celery-with-python-29-2048.jpg)