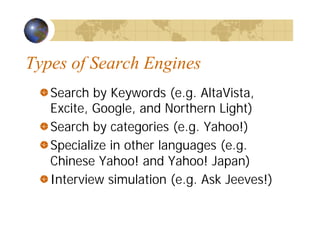

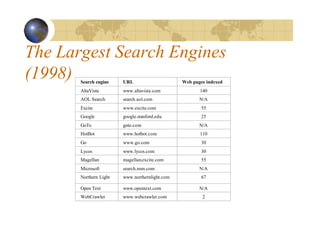

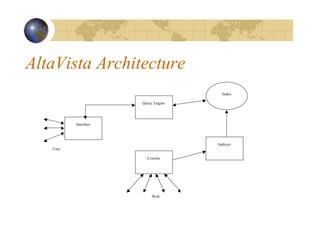

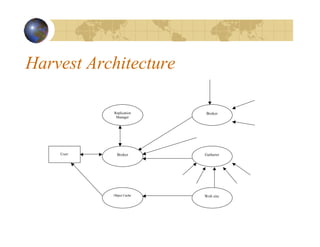

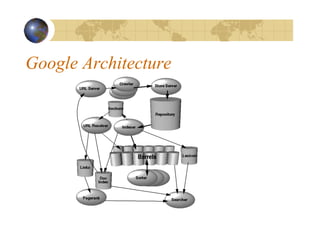

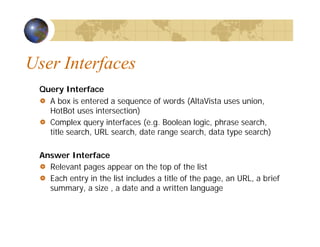

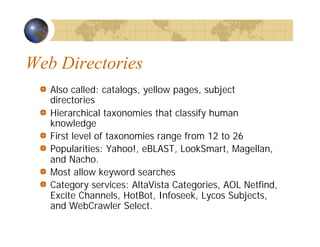

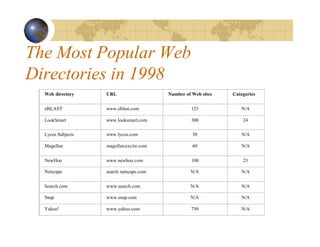

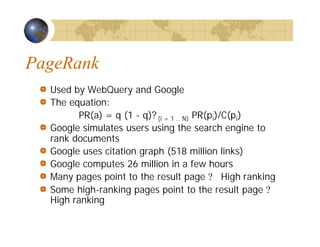

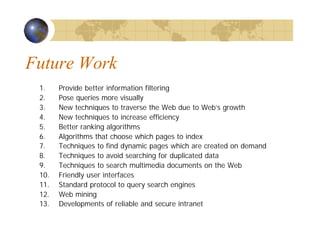

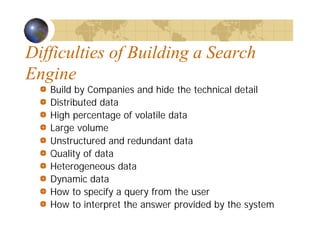

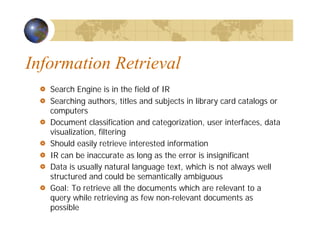

The document provides an overview of web search engines. It discusses the difficulties in building search engines due to the distributed, unstructured, and dynamic nature of web data. It describes the major components of search engines including crawling, indexing, ranking algorithms, and interfaces. The document also discusses the largest search engines in 1998, different types of search engines, directories, ranking algorithms like PageRank, and future work in improving search technology.

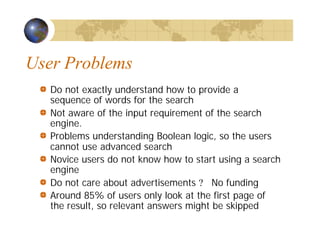

![Searching Guidelines

Specify the words clearly (+, -)

Use Advanced Search when necessary

Provide as many particular terms as possible

If looking for a company, institution, or organization, try:

www.name [.com | .edu | .org | .gov | country code]

Some searching engine specialize in some areas

If the user use broad queries, try to use Web directories as

starting points

The user should notice that anyone can publish data on the

Web, so information that they get from search engines might

not be accurate.](https://image.slidesharecdn.com/sunny-slides-221108034706-6a158349/85/sunny-slides-8-320.jpg)