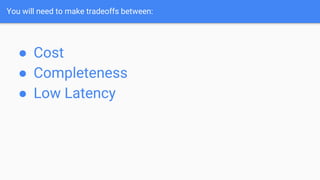

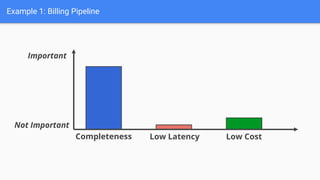

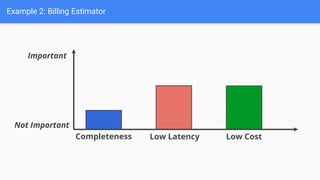

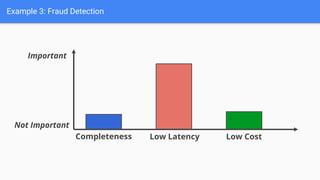

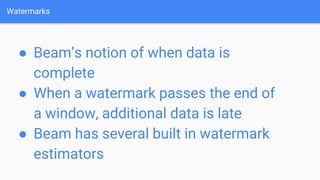

The document presents an overview of Apache Beam, a unified model for processing both batch and streaming data. It covers key components such as PCollections, transforms, pipelines, and methods for handling trade-offs between cost, completeness, and latency in data processing. Additionally, it discusses advanced topics like windowing, watermarks, and triggers that are essential for managing real-time data streams.

![Discarding Accumulation Mode

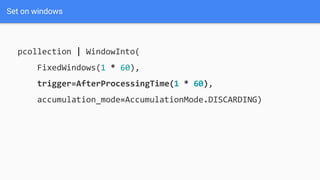

pcollection | WindowInto(

FixedWindows(1 * 60),

trigger=Repeating(AfterCount(3)),

accumulation_mode=AccumulationMode.DISCARDING)

[5, 8, 3, 1, 2, 6, 9, 7]](https://image.slidesharecdn.com/streamingdatapipelineswithapachebeamato-221115162527-5ab557f5/85/Streaming-Data-Pipelines-With-Apache-Beam-60-320.jpg)

![Discarding Accumulation Mode
pcollection | WindowInto(
FixedWindows(1 * 60),
trigger=Repeating(AfterCount(3)),
accumulation_mode=AccumulationMode.DISCARDING)
[5, 8, 3, 1, 2, 6, 9, 7] -> [5, 8, 3]](https://image.slidesharecdn.com/streamingdatapipelineswithapachebeamato-221115162527-5ab557f5/85/Streaming-Data-Pipelines-With-Apache-Beam-61-320.jpg)

![Discarding Accumulation Mode
pcollection | WindowInto(
FixedWindows(1 * 60),
trigger=Repeating(AfterCount(3)),
accumulation_mode=AccumulationMode.DISCARDING)
[5, 8, 3, 1, 2, 6, 9, 7] -> [5, 8, 3]
[1, 2, 6]](https://image.slidesharecdn.com/streamingdatapipelineswithapachebeamato-221115162527-5ab557f5/85/Streaming-Data-Pipelines-With-Apache-Beam-62-320.jpg)

![Discarding Accumulation Mode
pcollection | WindowInto(
FixedWindows(1 * 60),
trigger=Repeating(AfterCount(3)),
accumulation_mode=AccumulationMode.DISCARDING)
[5, 8, 3, 1, 2, 6, 9, 7] -> [5, 8, 3]
[1, 2, 6]
[9, 7]](https://image.slidesharecdn.com/streamingdatapipelineswithapachebeamato-221115162527-5ab557f5/85/Streaming-Data-Pipelines-With-Apache-Beam-63-320.jpg)
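The firing pattern in the discarding-mode slides above can be reproduced without Beam. What follows is a minimal plain-Python sketch, not Beam API: `discarding_panes` is a hypothetical helper that assumes all eight elements land in a single fixed window, that the trigger fires after every third element, and that any leftover elements are emitted in one final pane when the window closes.

```python
from typing import Iterable, List


def discarding_panes(elements: Iterable[int], count: int = 3) -> List[List[int]]:
    """Simulate Repeating(AfterCount(count)) with DISCARDING accumulation.

    Hypothetical helper for illustration only; it is not part of Apache Beam.
    """
    panes: List[List[int]] = []
    buffer: List[int] = []
    for element in elements:
        buffer.append(element)
        if len(buffer) == count:
            panes.append(buffer)  # trigger fires: emit the buffered elements
            buffer = []           # discarding mode: forget what was emitted
    if buffer:
        # Assumption: leftovers are emitted once when the window closes.
        panes.append(buffer)
    return panes


panes = discarding_panes([5, 8, 3, 1, 2, 6, 9, 7])
print(panes)                    # [[5, 8, 3], [1, 2, 6], [9, 7]]
print([sum(p) for p in panes])  # [16, 9, 16]
```

Because every element appears in exactly one pane, the per-pane sums (16, 9, 16) can be added together downstream to get the window total of 41 without double counting.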

![Accumulating Accumulation Mode
pcollection | WindowInto(
FixedWindows(1 * 60),
trigger=Repeating(AfterCount(3)),
accumulation_mode=AccumulationMode.ACCUMULATING)
[5, 8, 3, 1, 2, 6, 9, 7]](https://image.slidesharecdn.com/streamingdatapipelineswithapachebeamato-221115162527-5ab557f5/85/Streaming-Data-Pipelines-With-Apache-Beam-64-320.jpg)

![Accumulating Accumulation Mode
pcollection | WindowInto(
FixedWindows(1 * 60),
trigger=Repeating(AfterCount(3)),
accumulation_mode=AccumulationMode.ACCUMULATING)
[5, 8, 3, 1, 2, 6, 9, 7] -> [5, 8, 3]](https://image.slidesharecdn.com/streamingdatapipelineswithapachebeamato-221115162527-5ab557f5/85/Streaming-Data-Pipelines-With-Apache-Beam-65-320.jpg)

![Accumulating Accumulation Mode
pcollection | WindowInto(
FixedWindows(1 * 60),
trigger=Repeating(AfterCount(3)),
accumulation_mode=AccumulationMode.ACCUMULATING)
[5, 8, 3, 1, 2, 6, 9, 7] -> [5, 8, 3]
[5, 8, 3, 1, 2, 6]](https://image.slidesharecdn.com/streamingdatapipelineswithapachebeamato-221115162527-5ab557f5/85/Streaming-Data-Pipelines-With-Apache-Beam-66-320.jpg)

![Accumulating Accumulation Mode
pcollection | WindowInto(
FixedWindows(1 * 60),
trigger=Repeating(AfterCount(3)),
accumulation_mode=AccumulationMode.ACCUMULATING)
[5, 8, 3, 1, 2, 6, 9, 7] -> [5, 8, 3]
[5, 8, 3, 1, 2, 6]
[5, 8, 3, 1, 2, 6, 9, 7]](https://image.slidesharecdn.com/streamingdatapipelineswithapachebeamato-221115162527-5ab557f5/85/Streaming-Data-Pipelines-With-Apache-Beam-67-320.jpg)
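The accumulating behaviour in the slides above can be simulated the same way. This is a plain-Python sketch under stated assumptions rather than Beam code: `accumulating_panes` is a hypothetical helper, all elements are assumed to share one fixed window, the trigger is assumed to fire after every third new element, and a final pane is assumed to fire when the window closes with elements still pending.

```python
from typing import Iterable, List


def accumulating_panes(elements: Iterable[int], count: int = 3) -> List[List[int]]:
    """Simulate Repeating(AfterCount(count)) with ACCUMULATING accumulation.

    Hypothetical helper for illustration only; it is not part of Apache Beam.
    """
    panes: List[List[int]] = []
    seen: List[int] = []       # accumulating mode: window state is never cleared
    new_since_fire = 0
    for element in elements:
        seen.append(element)
        new_since_fire += 1
        if new_since_fire == count:
            panes.append(list(seen))  # emit everything seen so far in the window
            new_since_fire = 0
    if new_since_fire:
        # Assumption: a final pane fires once when the window closes.
        panes.append(list(seen))
    return panes


panes = accumulating_panes([5, 8, 3, 1, 2, 6, 9, 7])
print(panes)                    # [[5, 8, 3], [5, 8, 3, 1, 2, 6], [5, 8, 3, 1, 2, 6, 9, 7]]
print([sum(p) for p in panes])  # [16, 25, 41]
```

Each pane here is a refinement of the previous one, so the last pane alone carries the window total of 41. Adding the pane sums together (16 + 25 + 41 = 82) would count earlier elements more than once, which is why downstream consumers of accumulating panes typically overwrite rather than add.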