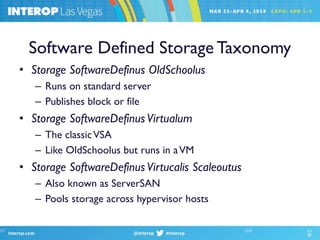

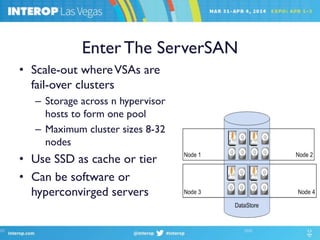

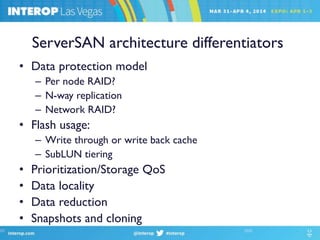

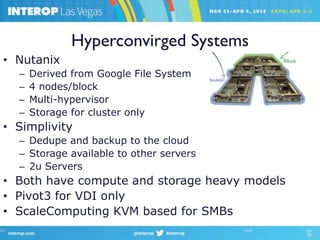

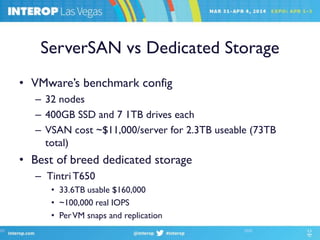

This document discusses software-defined storage (SDS) and evaluates whether it is a real technology or just hype. It defines SDS as storage software that runs on standard x86 server hardware and can be sold either as software alone or as an appliance. The document examines several deployment models: SDS running on a single server, running inside a virtual machine, or spanning multiple hypervisor hosts in a scale-out cluster. It also compares the benefits and challenges of converged infrastructure built on SDS with those of dedicated storage arrays.