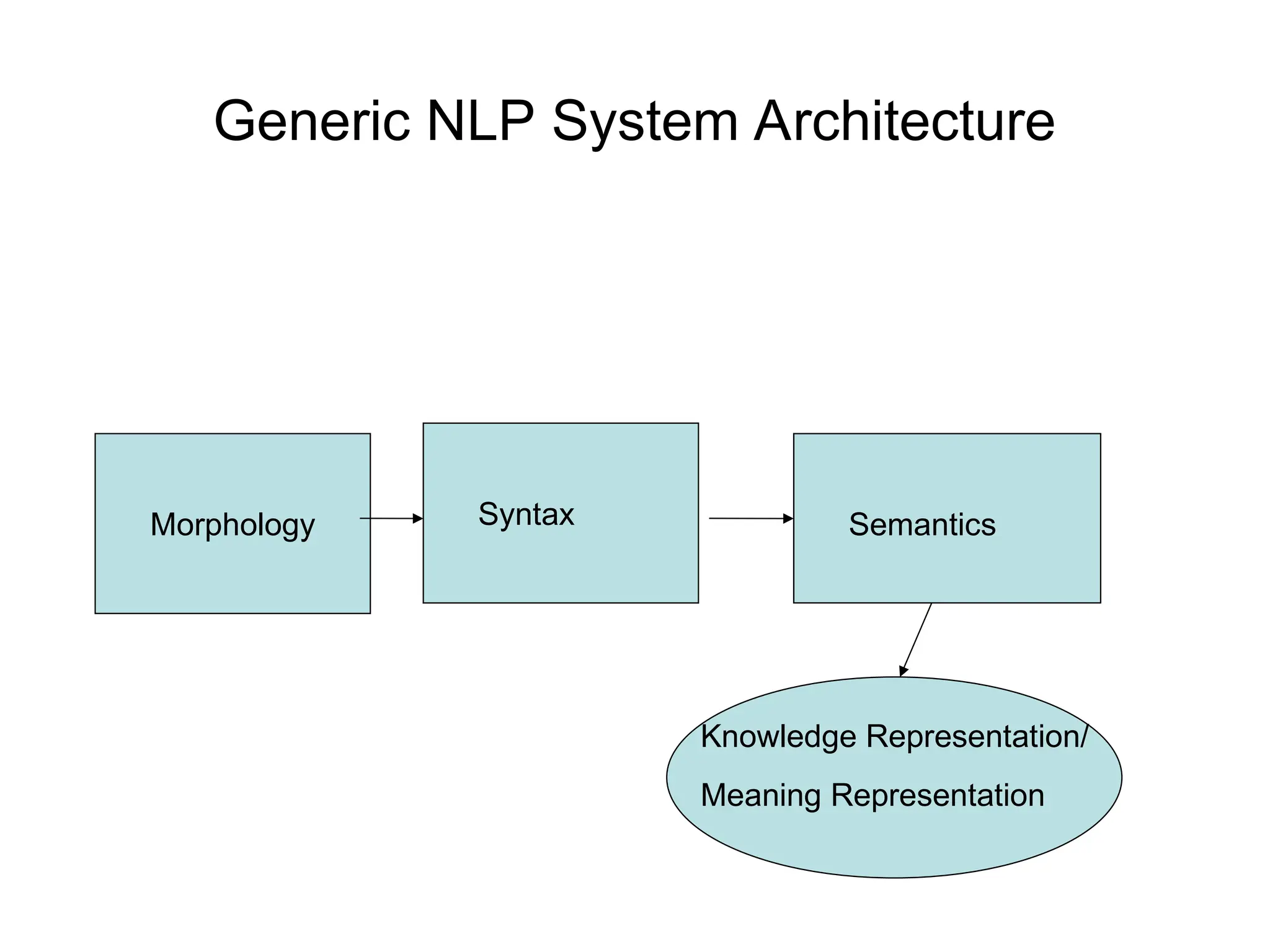

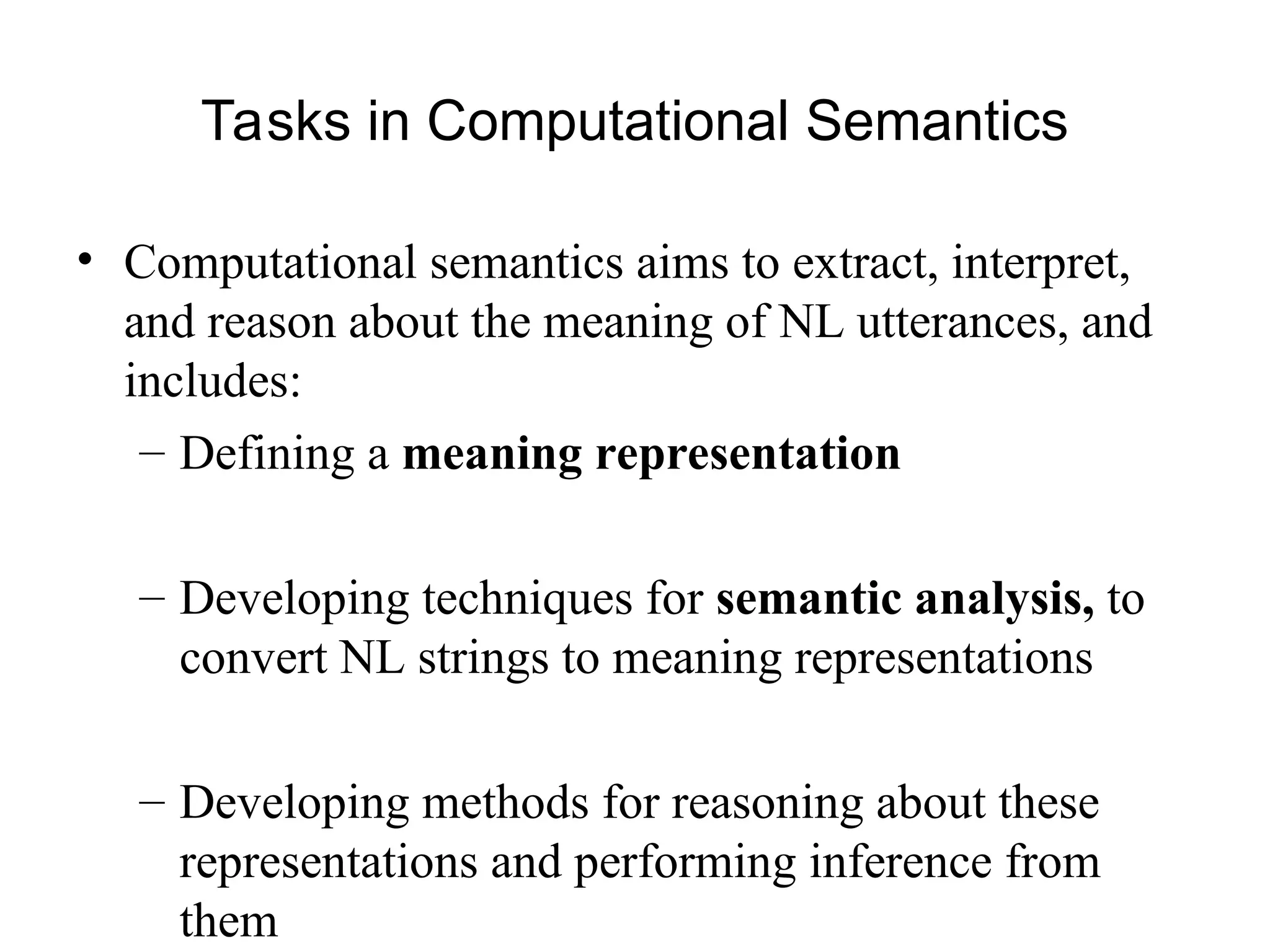

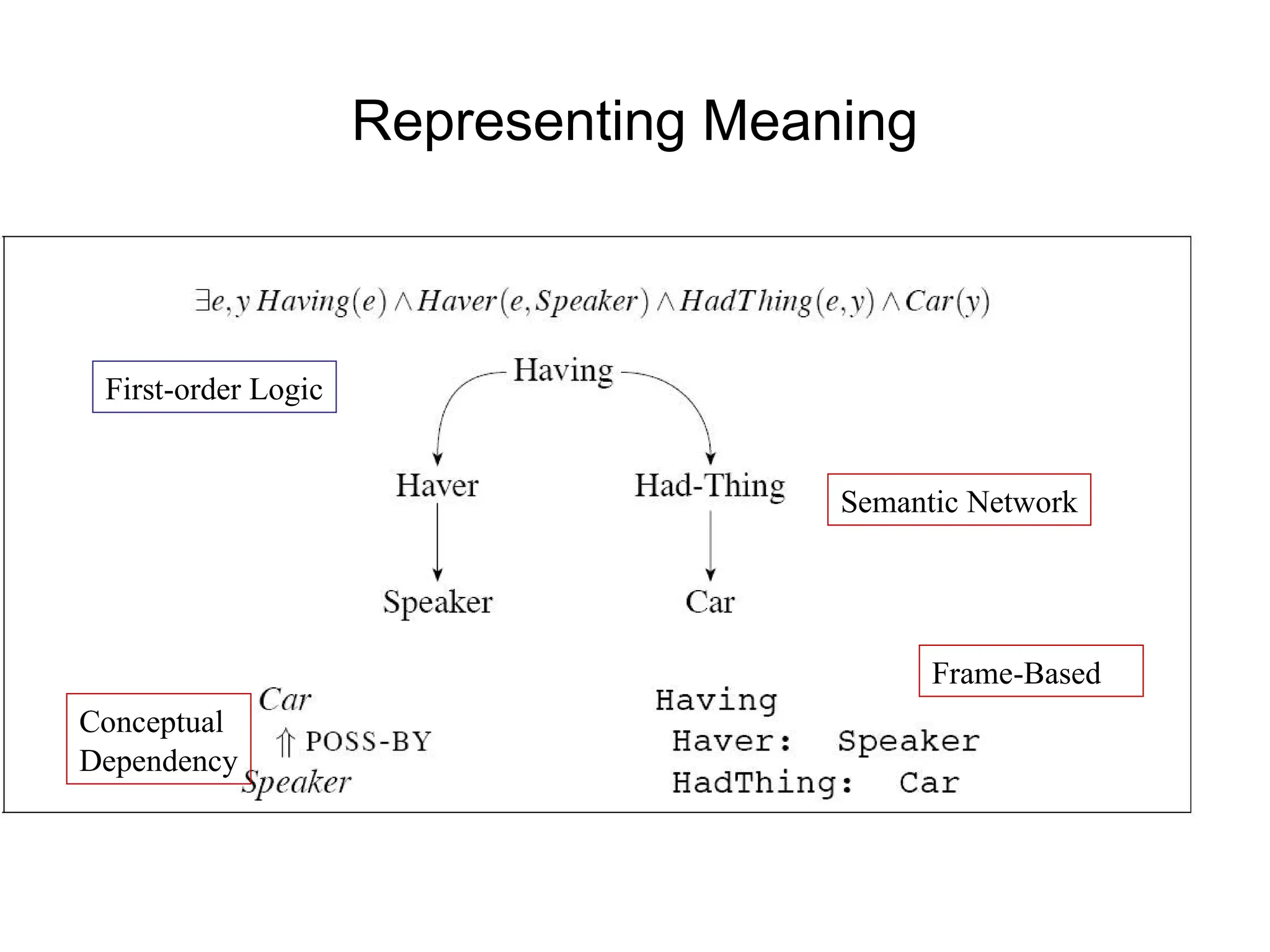

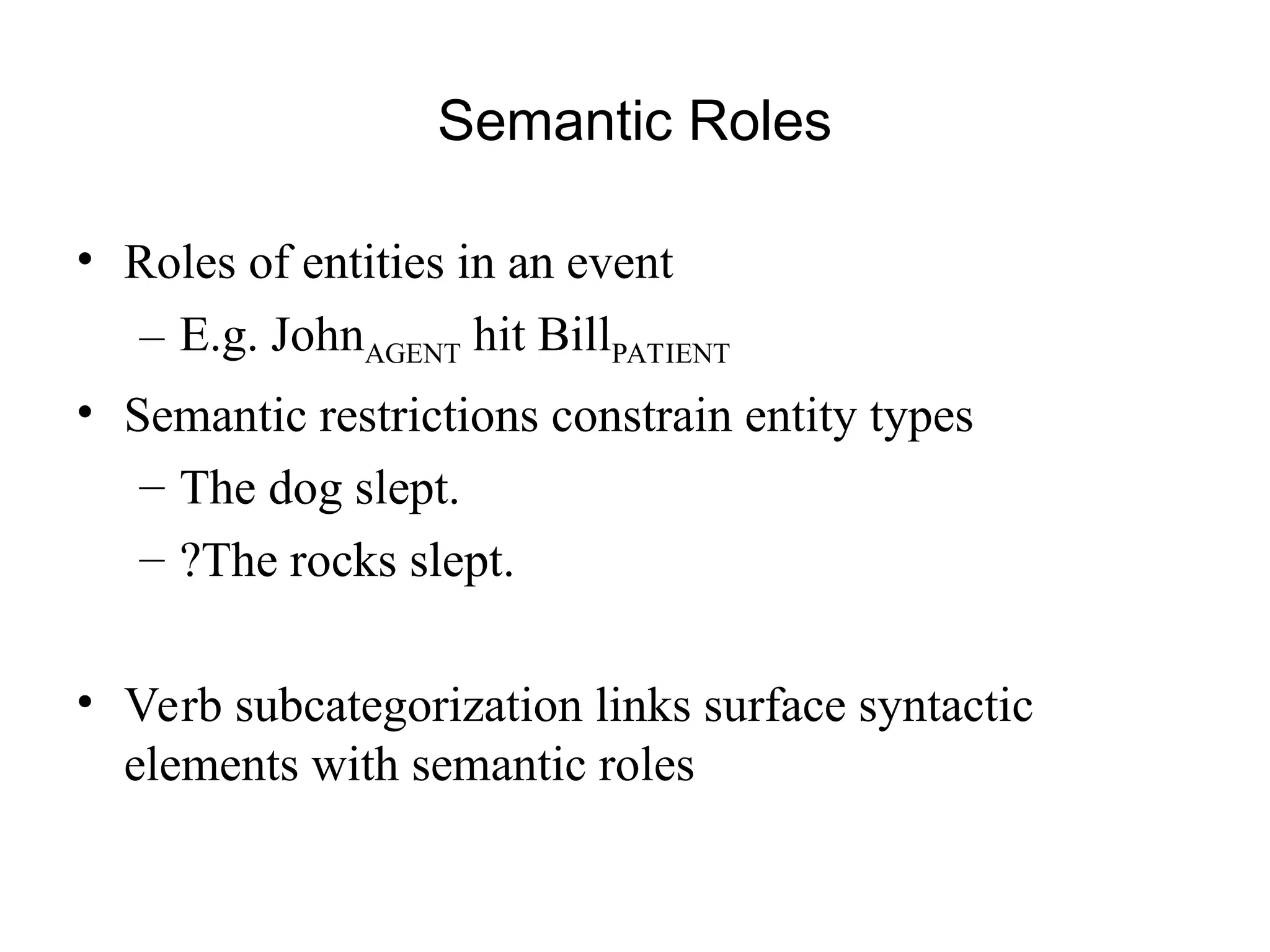

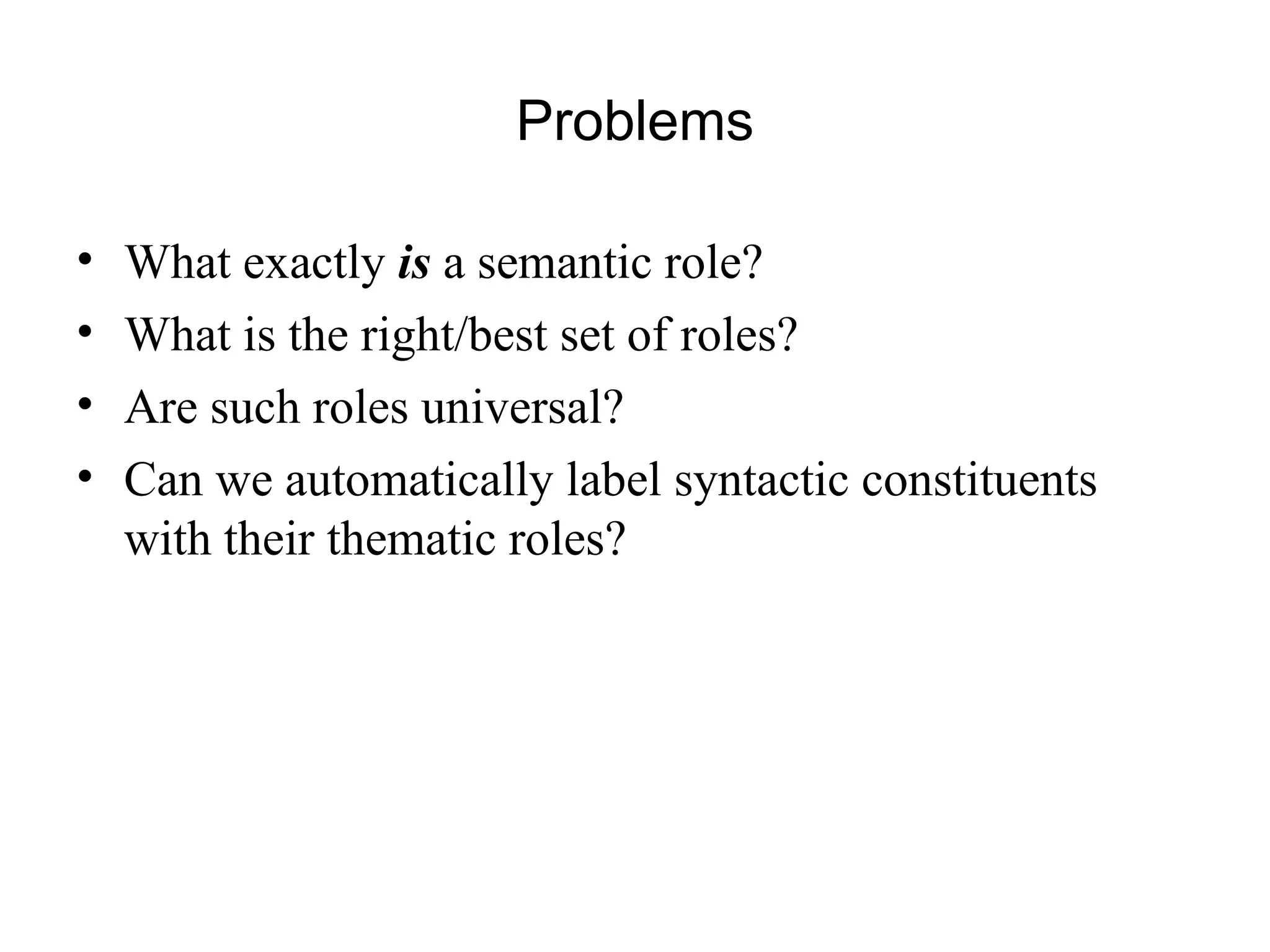

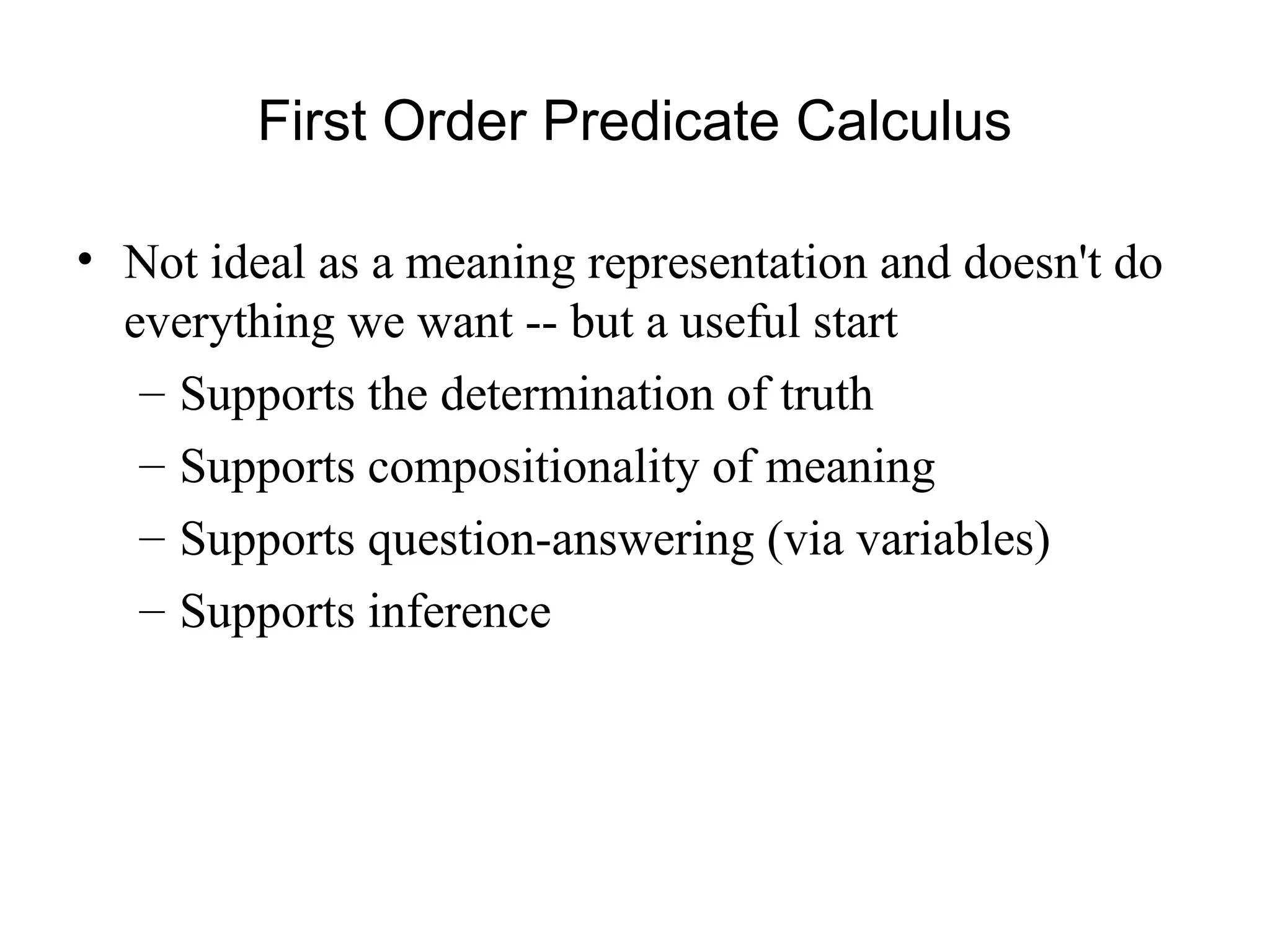

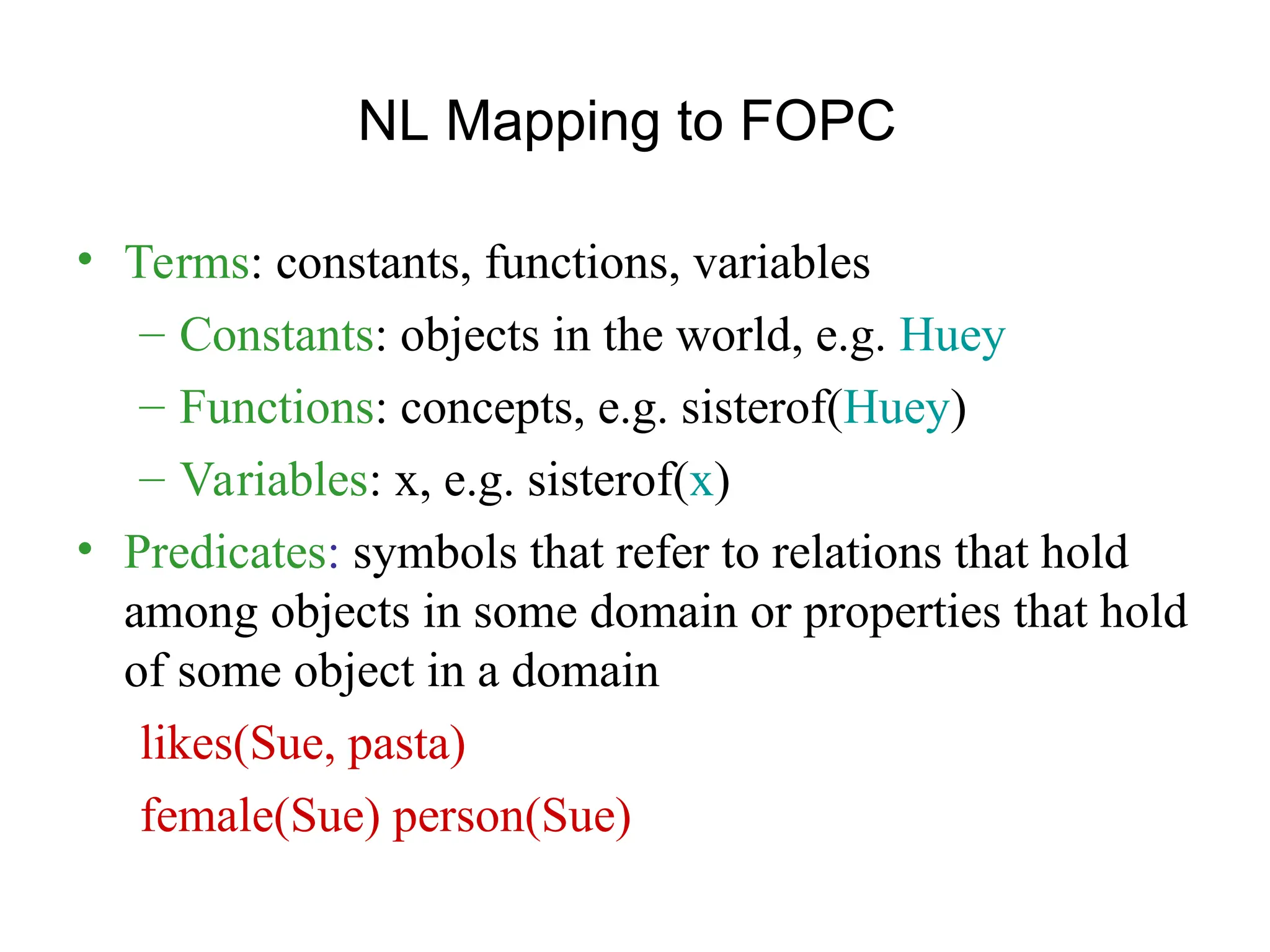

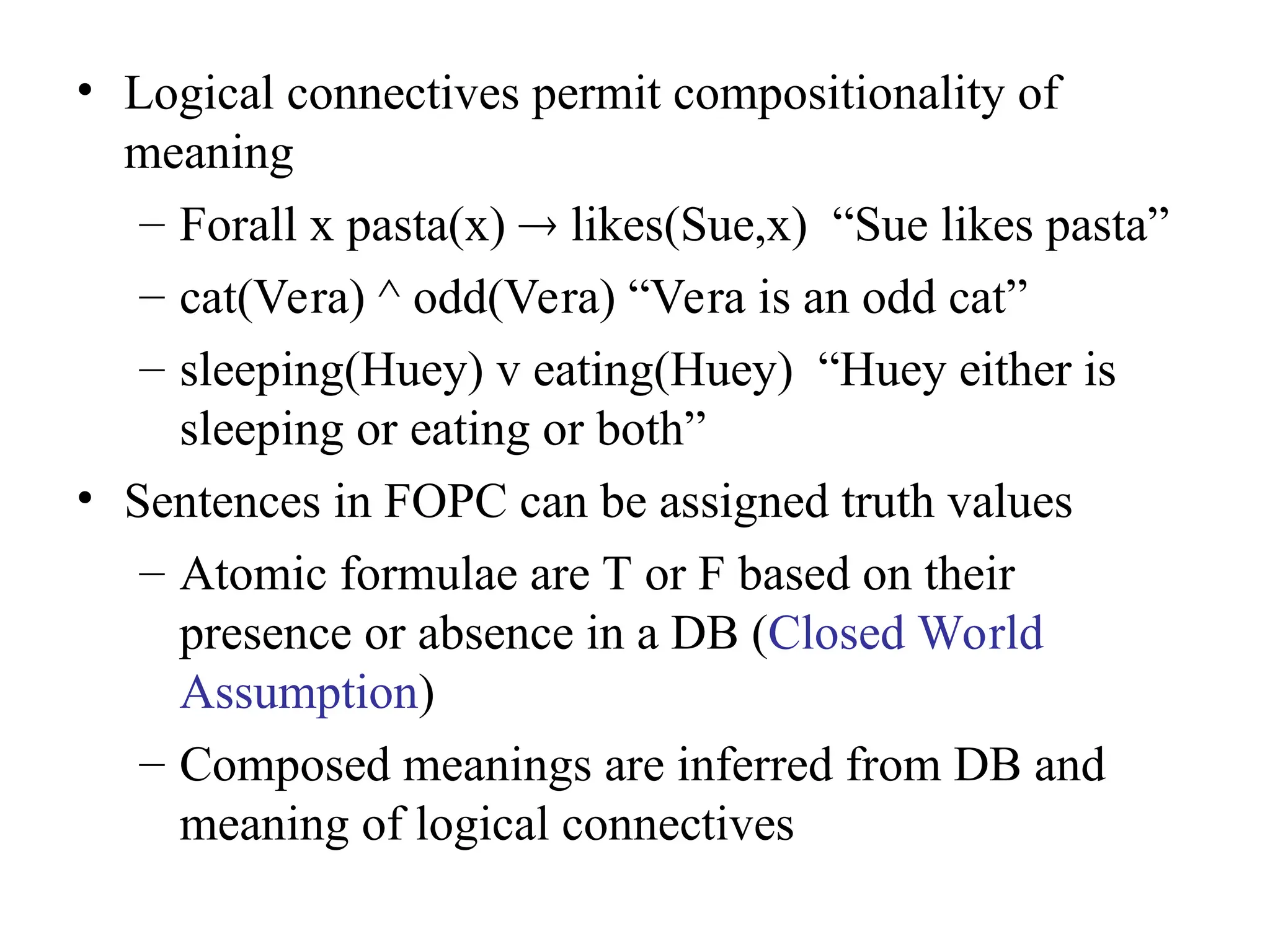

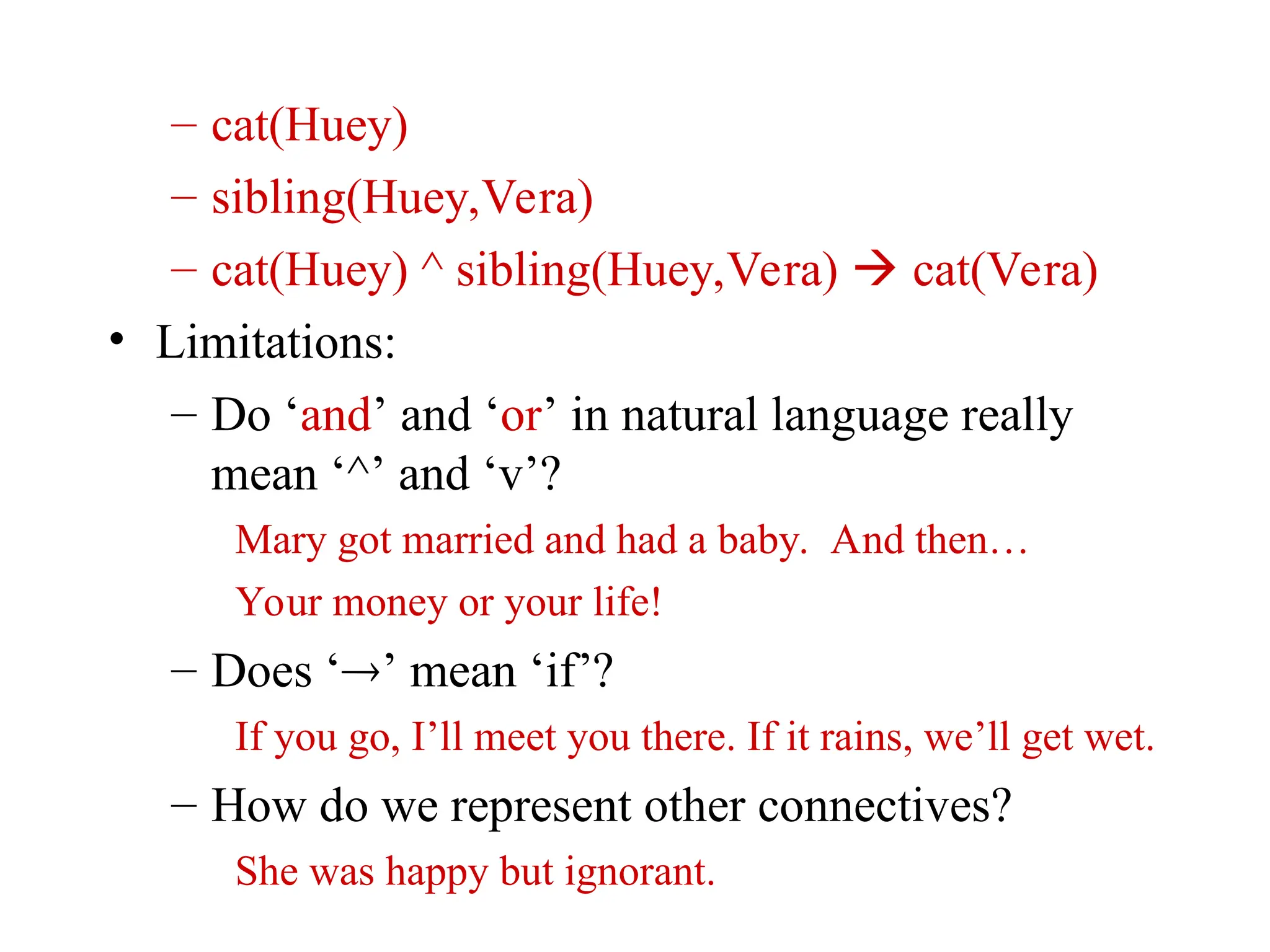

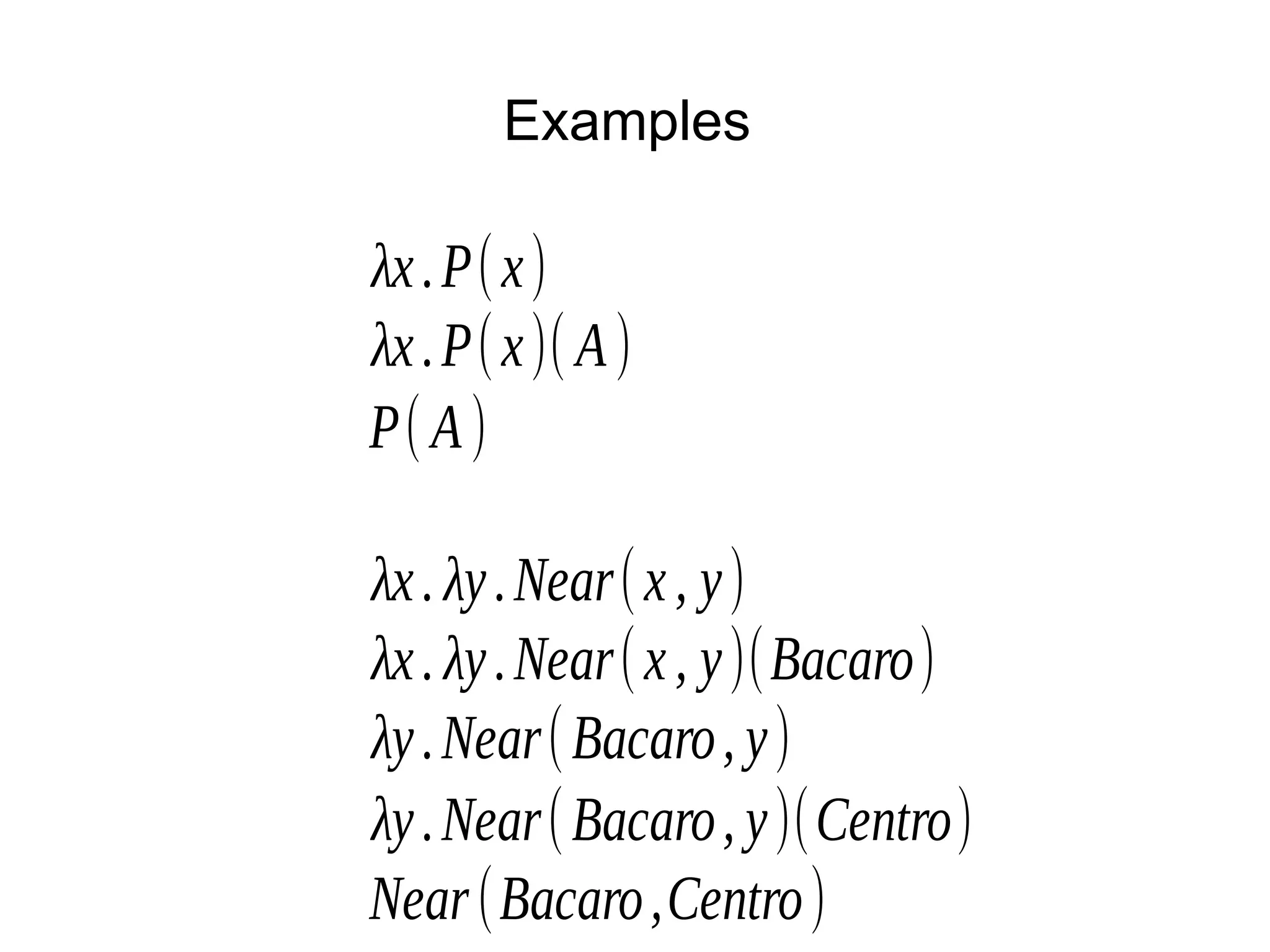

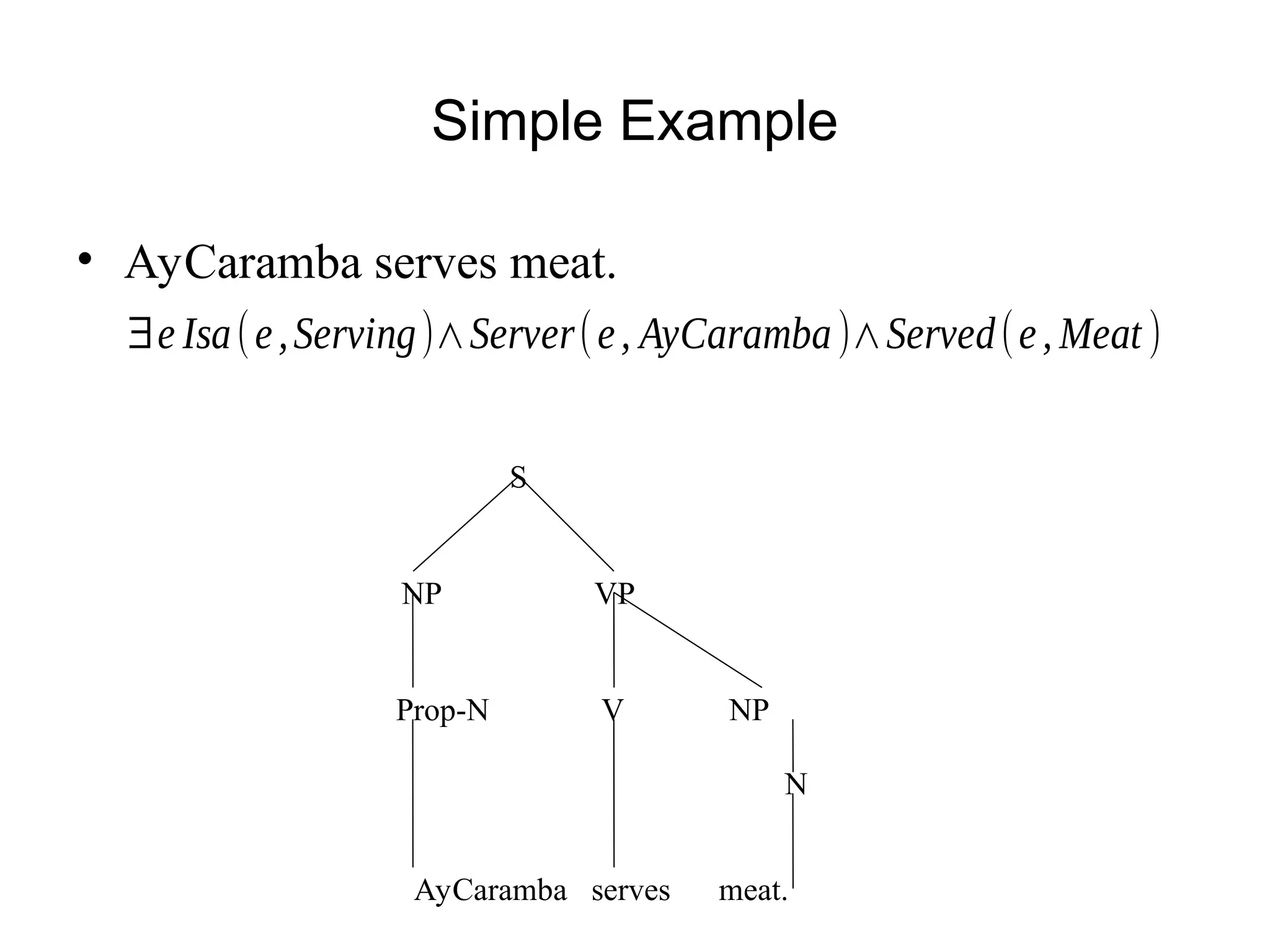

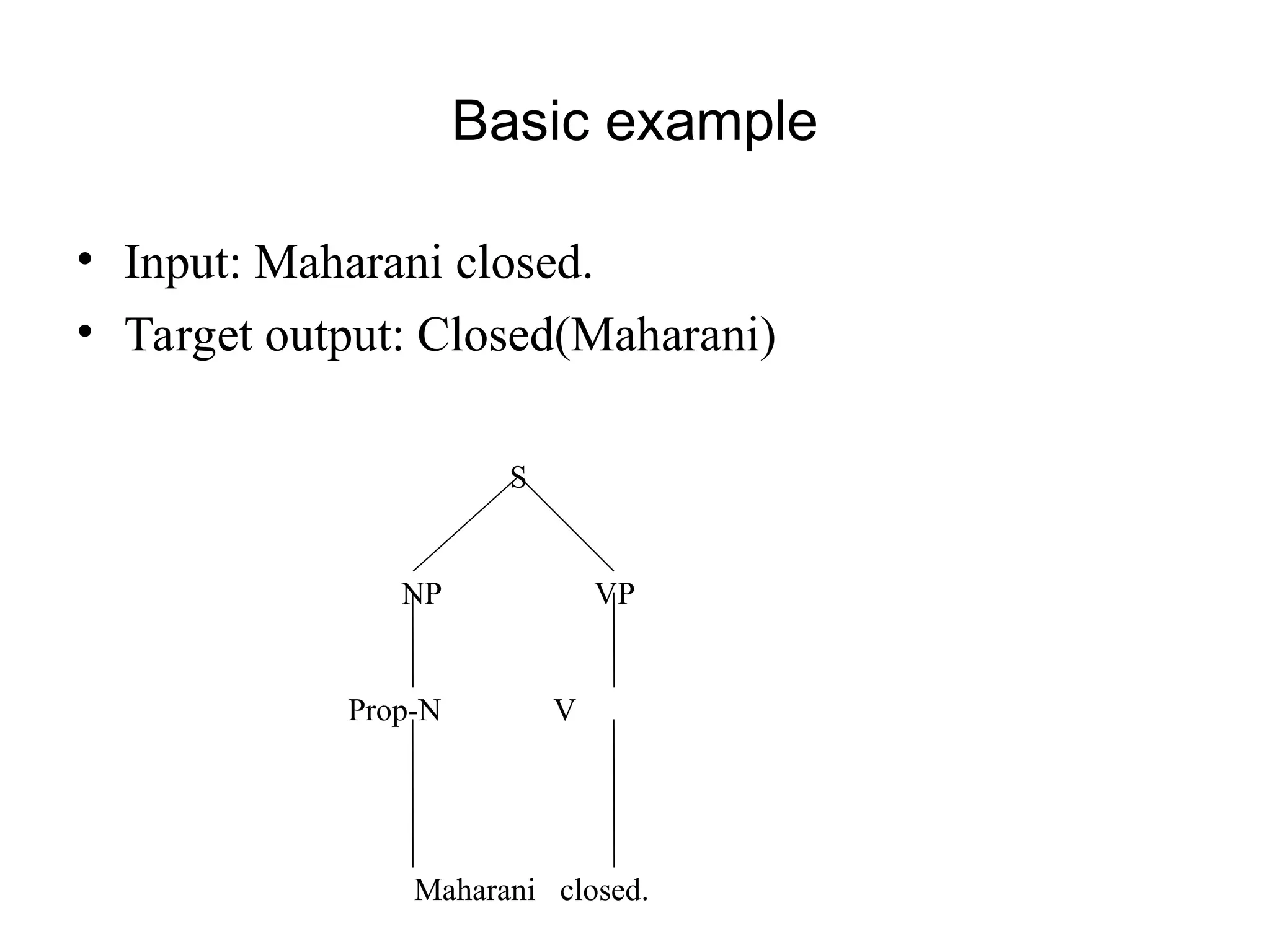

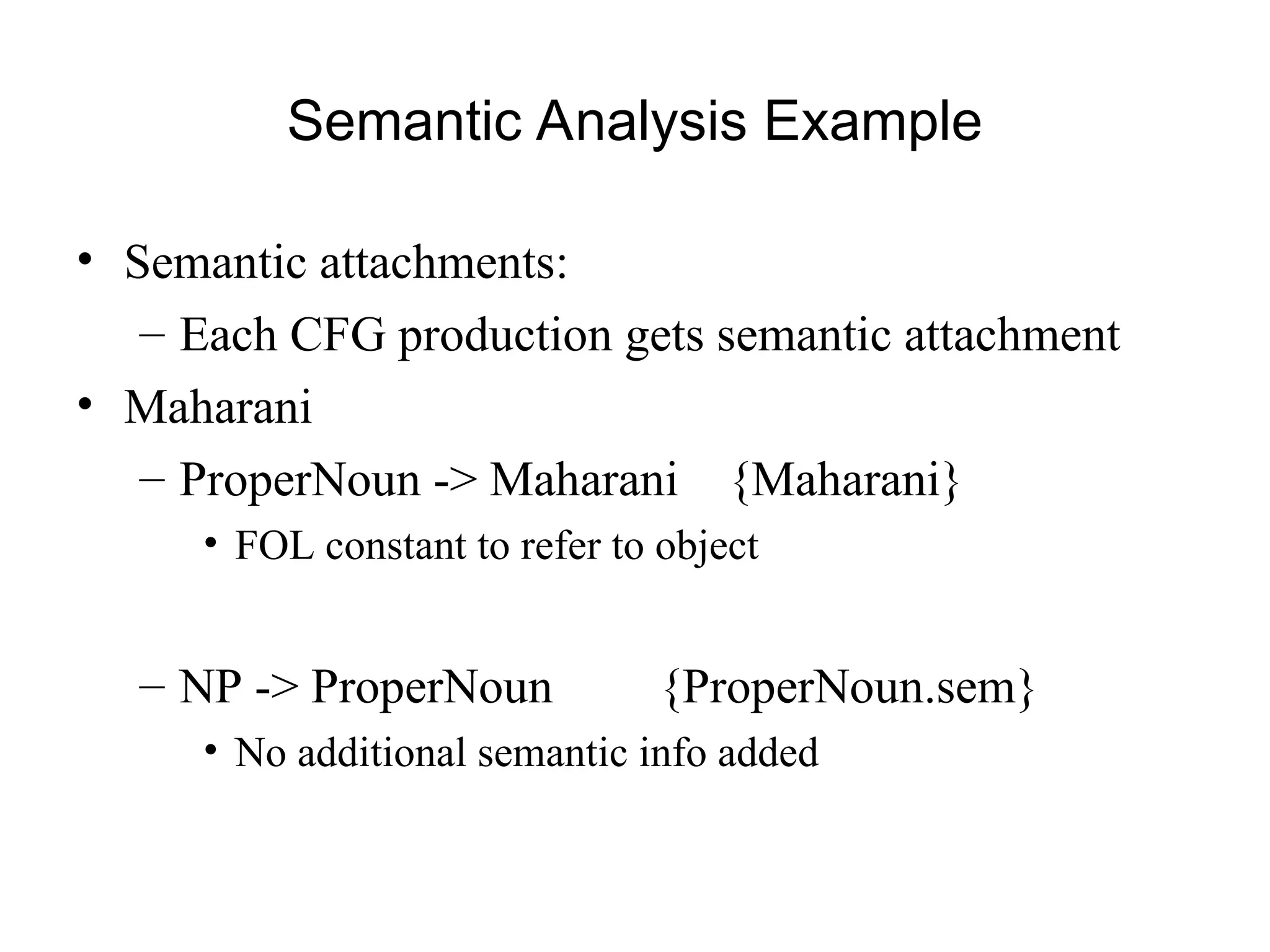

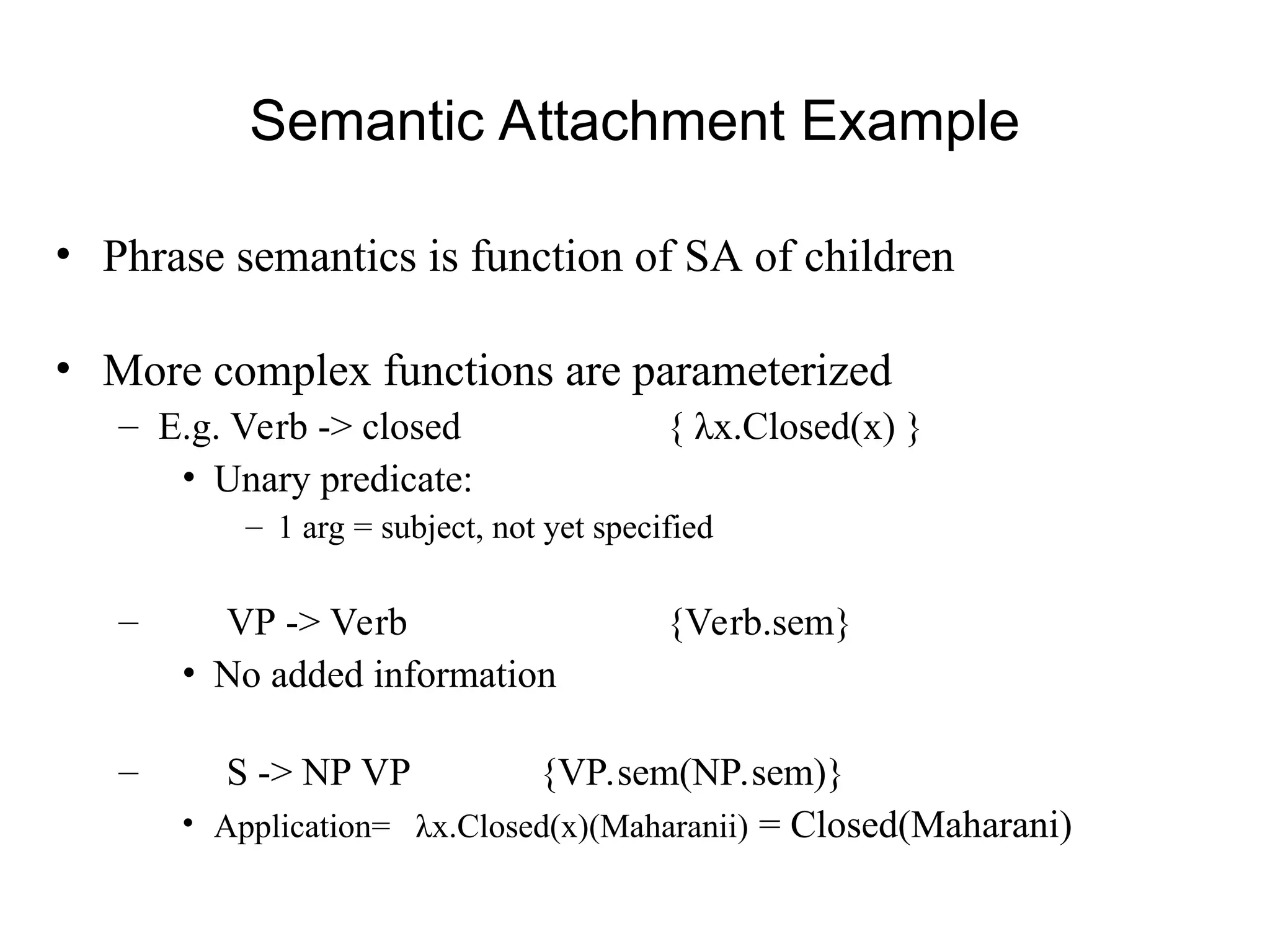

The document outlines the principles of computational semantics: representing the meaning of natural language (NL) utterances, interpreting them, and drawing inferences from them. It discusses what makes this difficult, including the need for knowledge of both language and the world, the resolution of ambiguity, and the requirement that meaning representations be verifiable and unambiguous. It also emphasizes a structured approach that uses first-order predicate calculus and lambda calculus to analyze and represent meaning in language.
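As a rough illustration of how lambda calculus and first-order predicate calculus can work together, the sketch below uses Python closures as stand-ins for lambda terms and plain strings as stand-ins for first-order formulas. The lexicon entries (`john`, `mary`, `loves`, `sleeps`, `student`, `every`) and the helper `pred` are hypothetical names chosen for this example; they are not taken from the document.

```python
# A minimal sketch: Python closures stand in for lambda-calculus terms,
# and strings stand in for first-order predicate calculus formulas.
# All names below are illustrative assumptions, not from the source.

def pred(name, *args):
    """Render an atomic formula such as loves(john, mary)."""
    return f"{name}({', '.join(args)})"

# Constants denote individuals.
john = "john"
mary = "mary"

# Intransitive verb and noun: lambda x. P(x)
sleeps = lambda x: pred("sleeps", x)
student = lambda x: pred("student", x)

# Transitive verb: lambda obj. lambda subj. loves(subj, obj)
loves = lambda obj: lambda subj: pred("loves", subj, obj)

# Determiner "every": lambda P. lambda Q. forall x.(P(x) -> Q(x))
def every(restrictor):
    return lambda scope: f"forall x.({restrictor('x')} -> {scope('x')})"

# "John loves Mary": apply the verb to its object, then to its subject.
verb_phrase = loves(mary)
john_loves_mary = verb_phrase(john)

# "Every student sleeps": apply the determiner to the noun, then to the verb.
every_student_sleeps = every(student)(sleeps)

print(john_loves_mary)       # loves(john, mary)
print(every_student_sleeps)  # forall x.(student(x) -> sleeps(x))
```

Each function application here corresponds to one beta-reduction step, so the meaning of the sentence is assembled from the meanings of its parts. A real system would use structured terms rather than strings and would handle variable naming for nested quantifiers.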