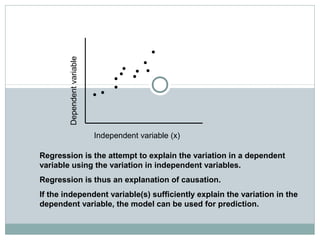

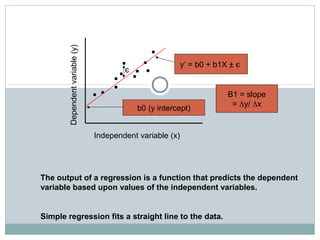

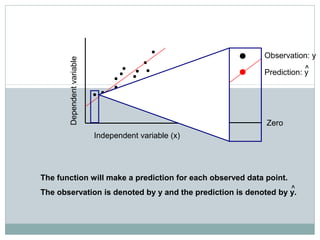

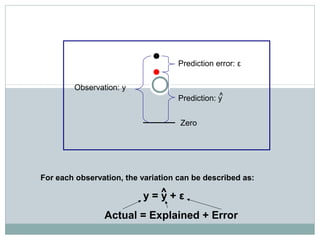

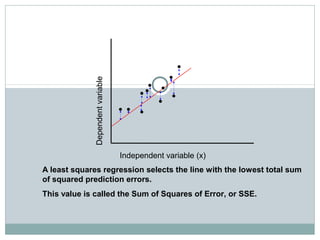

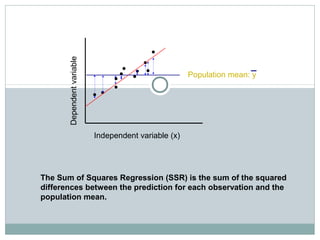

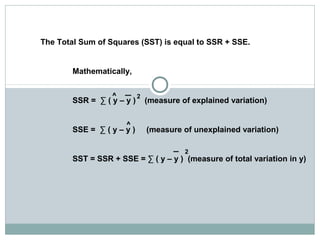

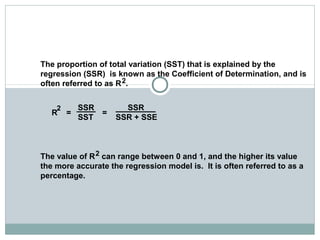

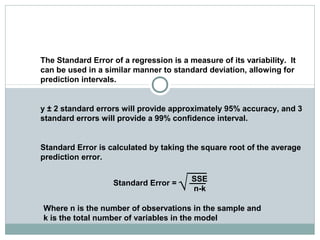

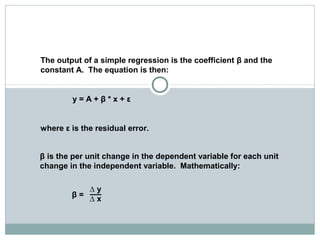

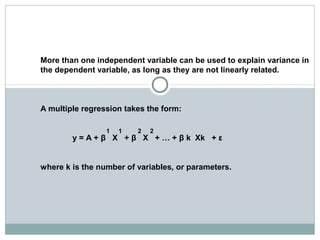

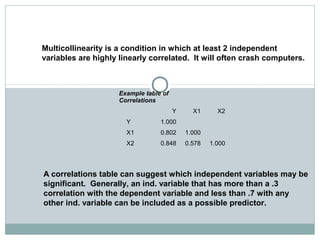

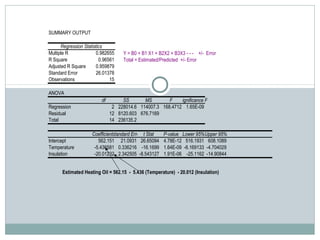

Regression analysis identifies relationships between variables and supports prediction. Simple linear regression fits a straight line to data, using one independent variable to predict a dependent variable. Multiple linear regression uses two or more independent variables to explain variance in the dependent variable. The goal is to select independent variables that explain enough of the variation in the dependent variable to allow accurate prediction. Key outputs of a regression include the coefficients, R-squared, the standard error, and significance values.
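
To make the outputs listed above concrete, here is a minimal sketch of an ordinary least squares fit using NumPy. The function name `fit_ols`, the dictionary keys, and the sample data are hypothetical and chosen only for illustration; they do not come from the slides. The same function covers simple regression (one independent variable) and multiple regression (several columns). It reports t-statistics rather than p-values, since turning a t-statistic into a significance value requires the t-distribution, for example from a statistics library.

```python
import numpy as np

def fit_ols(X, y):
    """Fit y = b0 + b1*x1 + ... + bk*xk by ordinary least squares.

    Returns a dict with the coefficients (intercept first), R-squared,
    the standard error of the regression, the standard error of each
    coefficient, and the t-statistic of each coefficient.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    if X.ndim == 1:
        # Simple linear regression: a single independent variable.
        X = X.reshape(-1, 1)
    n, k = X.shape

    # Design matrix with a leading column of ones for the intercept.
    A = np.column_stack([np.ones(n), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)

    residuals = y - A @ beta
    ss_res = float(residuals @ residuals)         # unexplained variation
    ss_tot = float(((y - y.mean()) ** 2).sum())   # total variation
    r_squared = 1.0 - ss_res / ss_tot

    # Residual variance uses n - k - 1 degrees of freedom.
    dof = n - k - 1
    sigma2 = ss_res / dof
    coef_std_err = np.sqrt(np.diag(sigma2 * np.linalg.inv(A.T @ A)))

    return {
        "coefficients": beta,
        "r_squared": r_squared,
        "standard_error": float(np.sqrt(sigma2)),
        "coef_std_err": coef_std_err,
        "t_stats": beta / coef_std_err,
    }

# Hypothetical data for a simple linear regression.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
simple = fit_ols(x, y)
print("intercept, slope:", simple["coefficients"])
print("R-squared:", simple["r_squared"])

# Hypothetical data for a multiple linear regression with two predictors.
X2 = [[1, 2], [2, 1], [3, 4], [4, 3], [5, 6], [6, 5]]
y2 = [9.1, 7.9, 19.05, 17.95, 29.02, 27.98]
multiple = fit_ols(X2, y2)
print("intercept, b1, b2:", multiple["coefficients"])
```

A coefficient whose t-statistic is large in absolute value relative to its degrees of freedom is the kind usually reported as significant, and an R-squared close to 1 indicates that the chosen variables explain most of the variation in the dependent variable.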