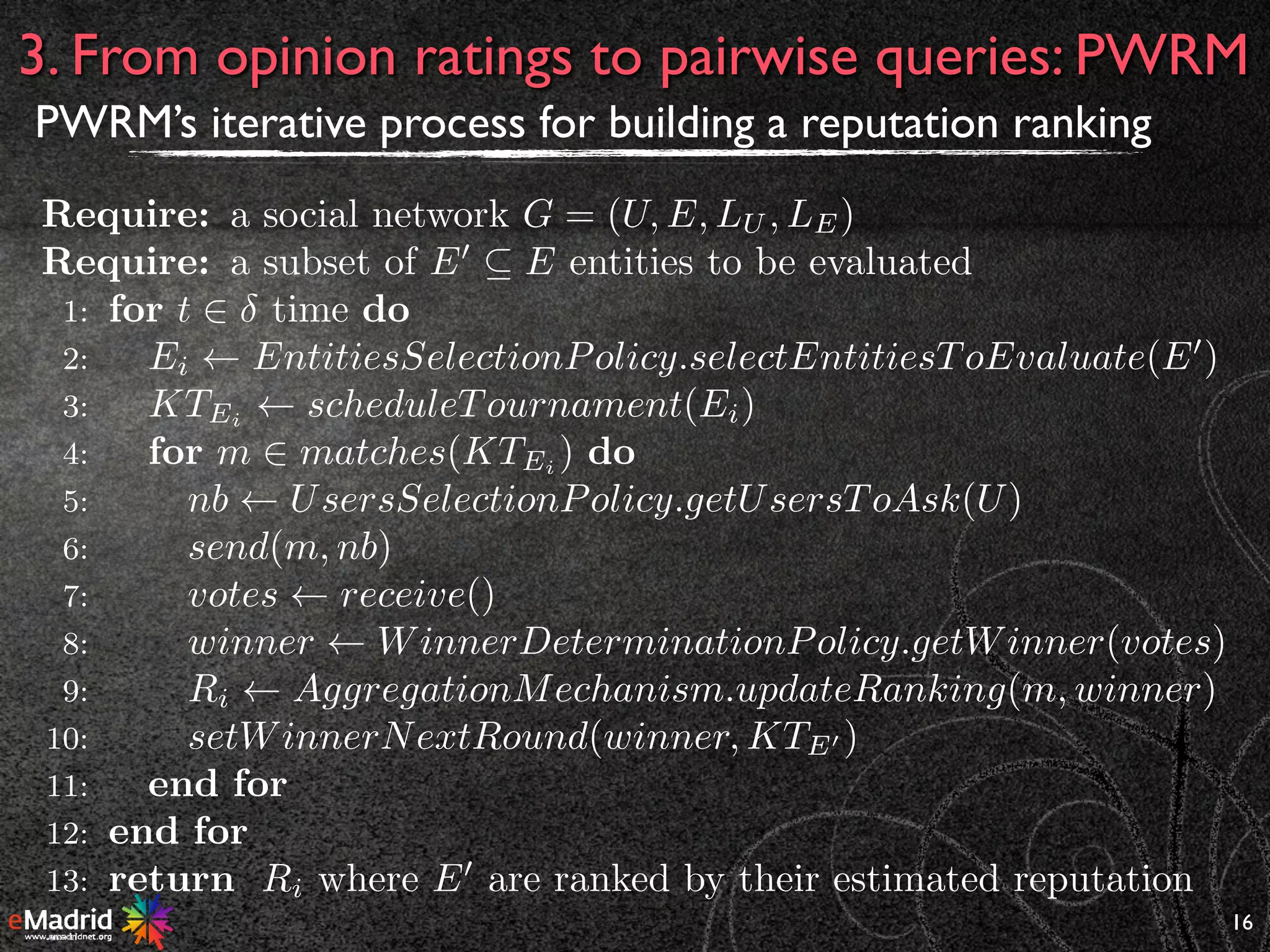

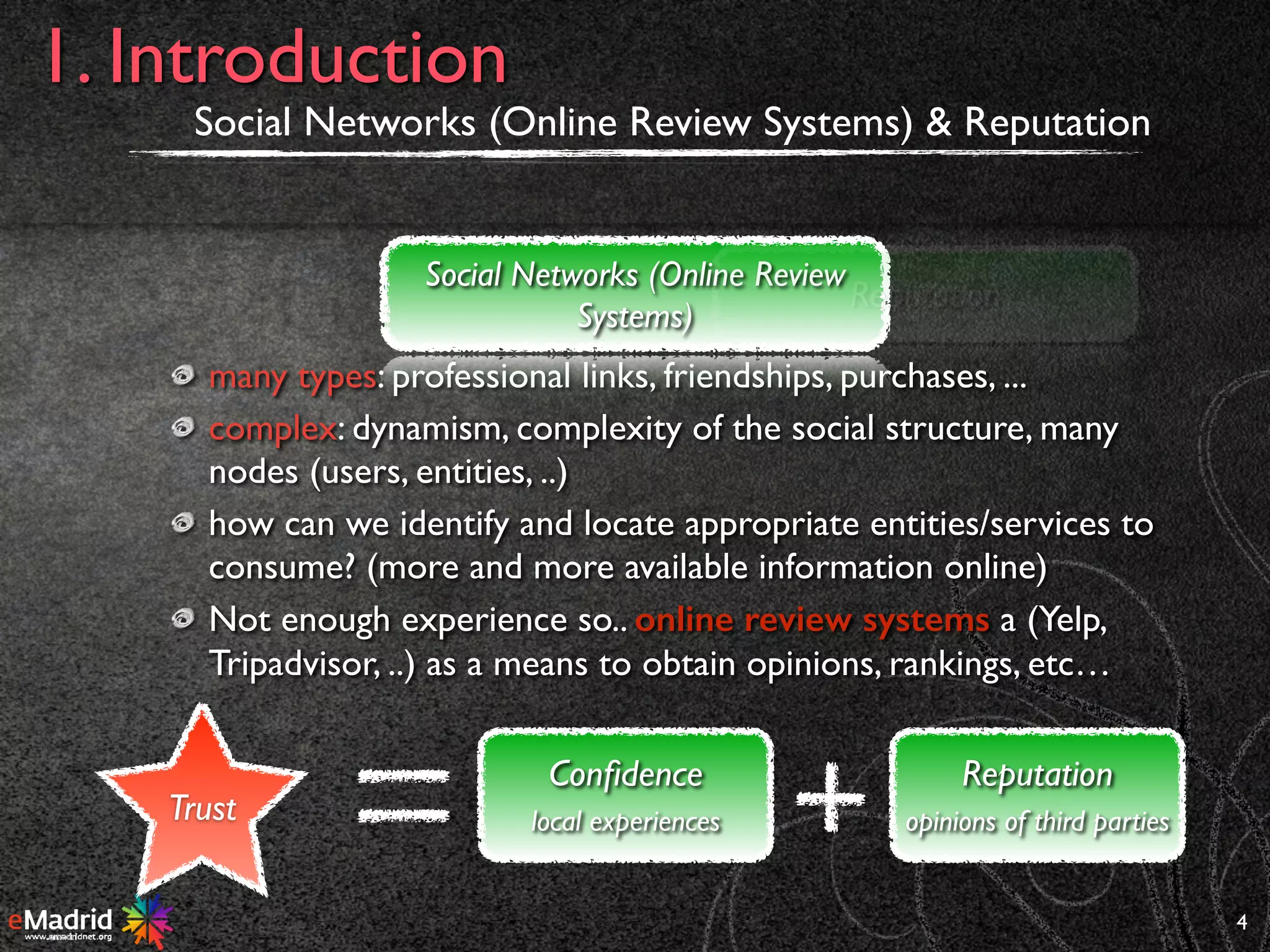

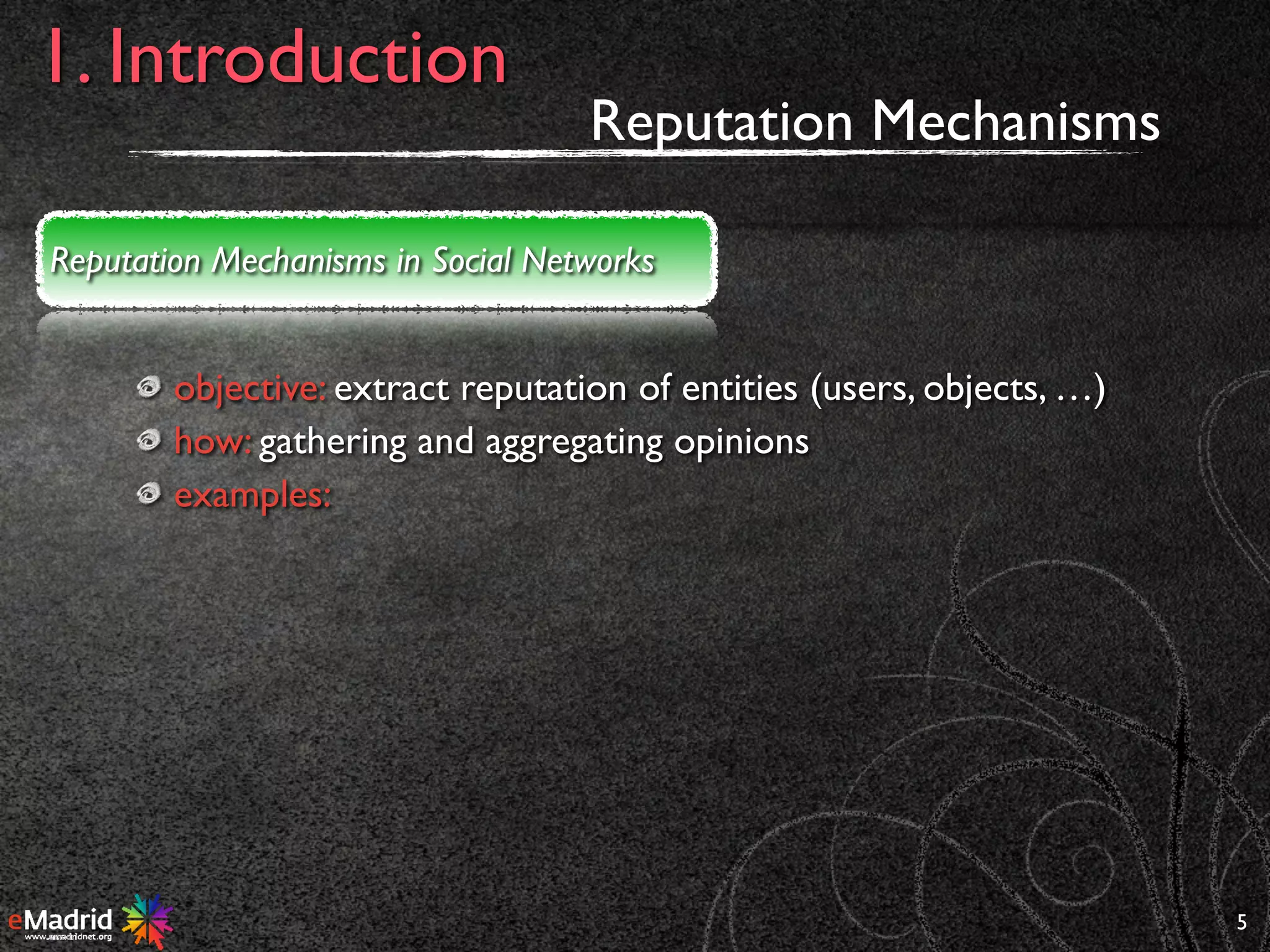

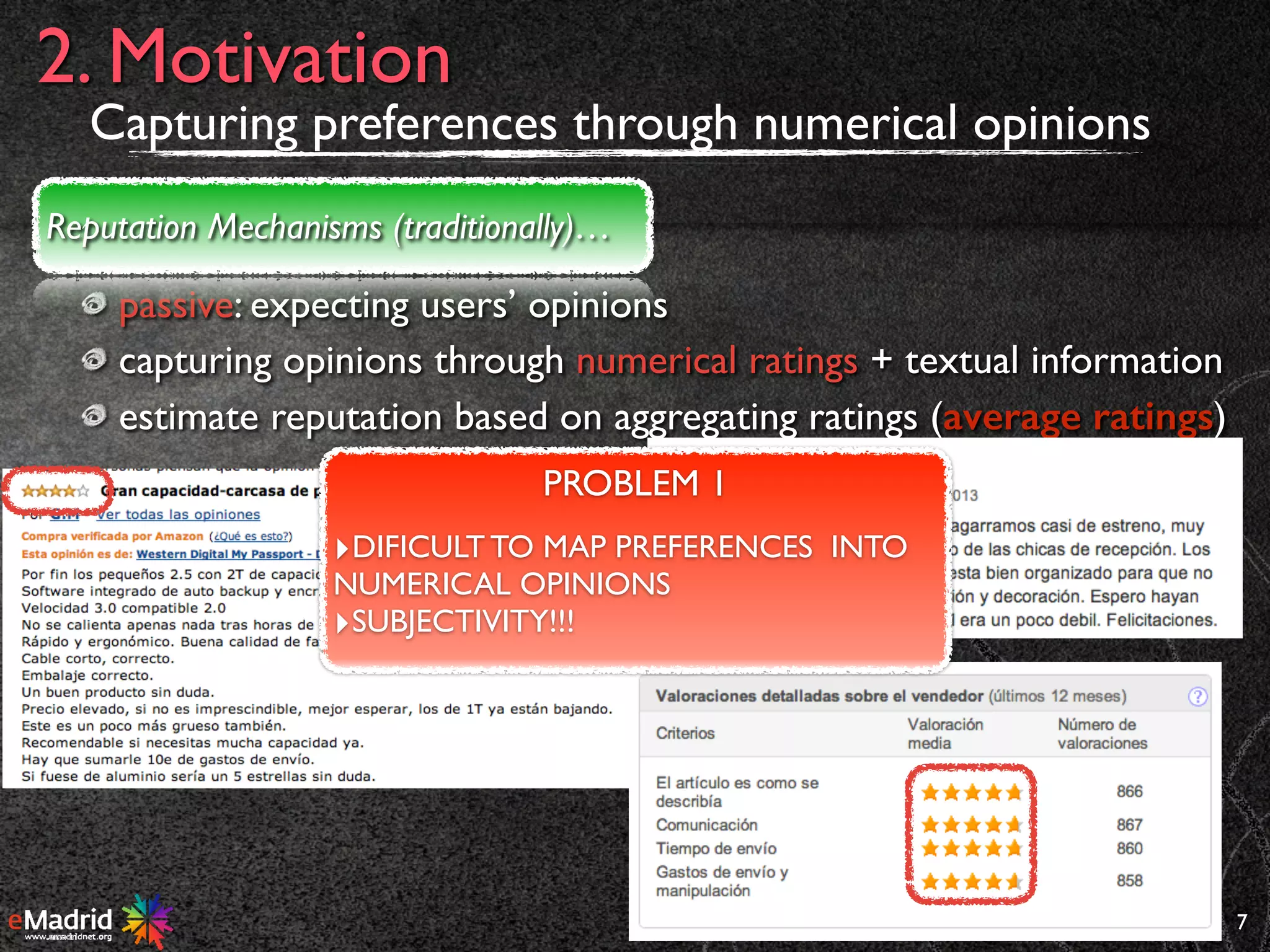

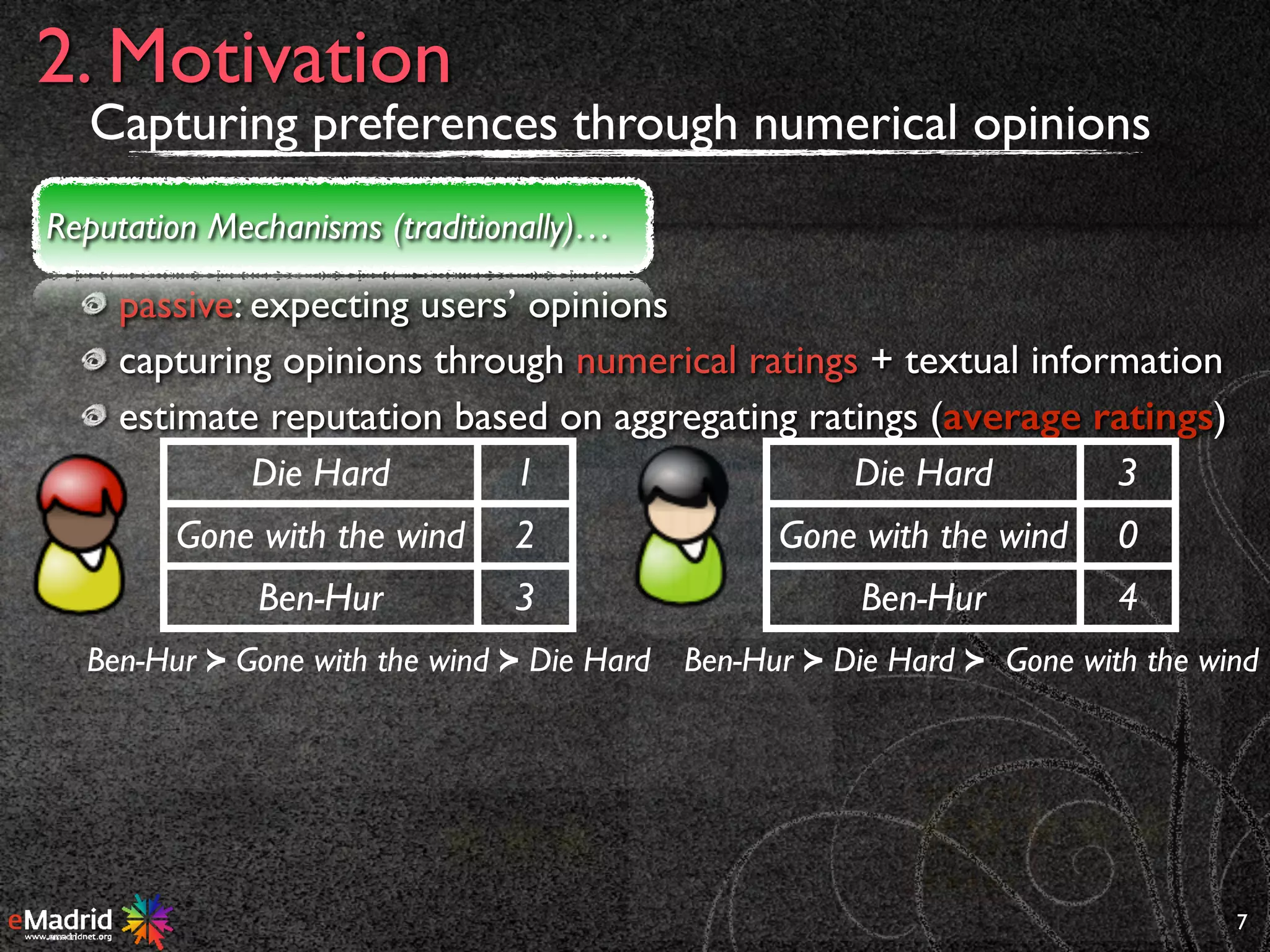

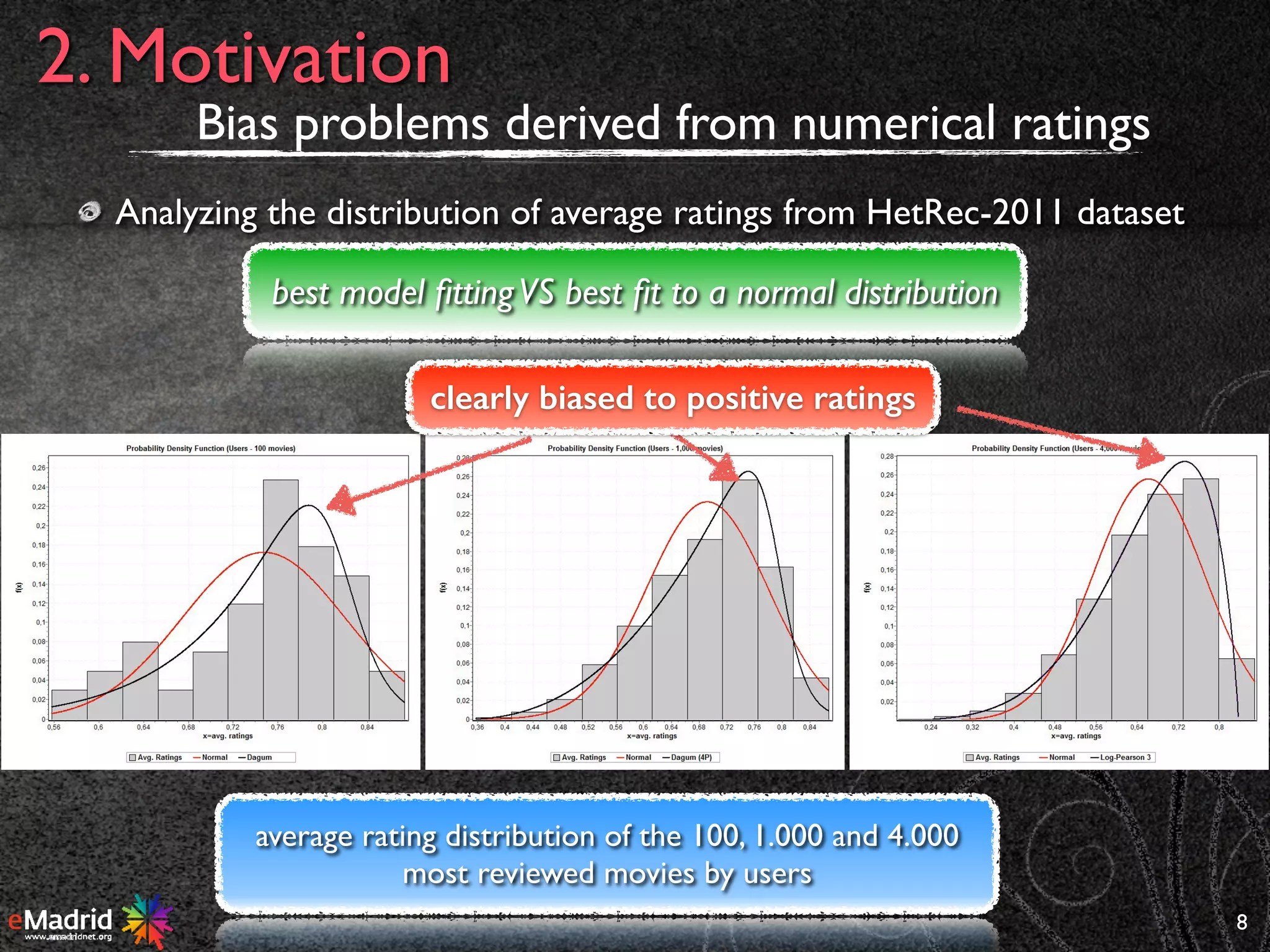

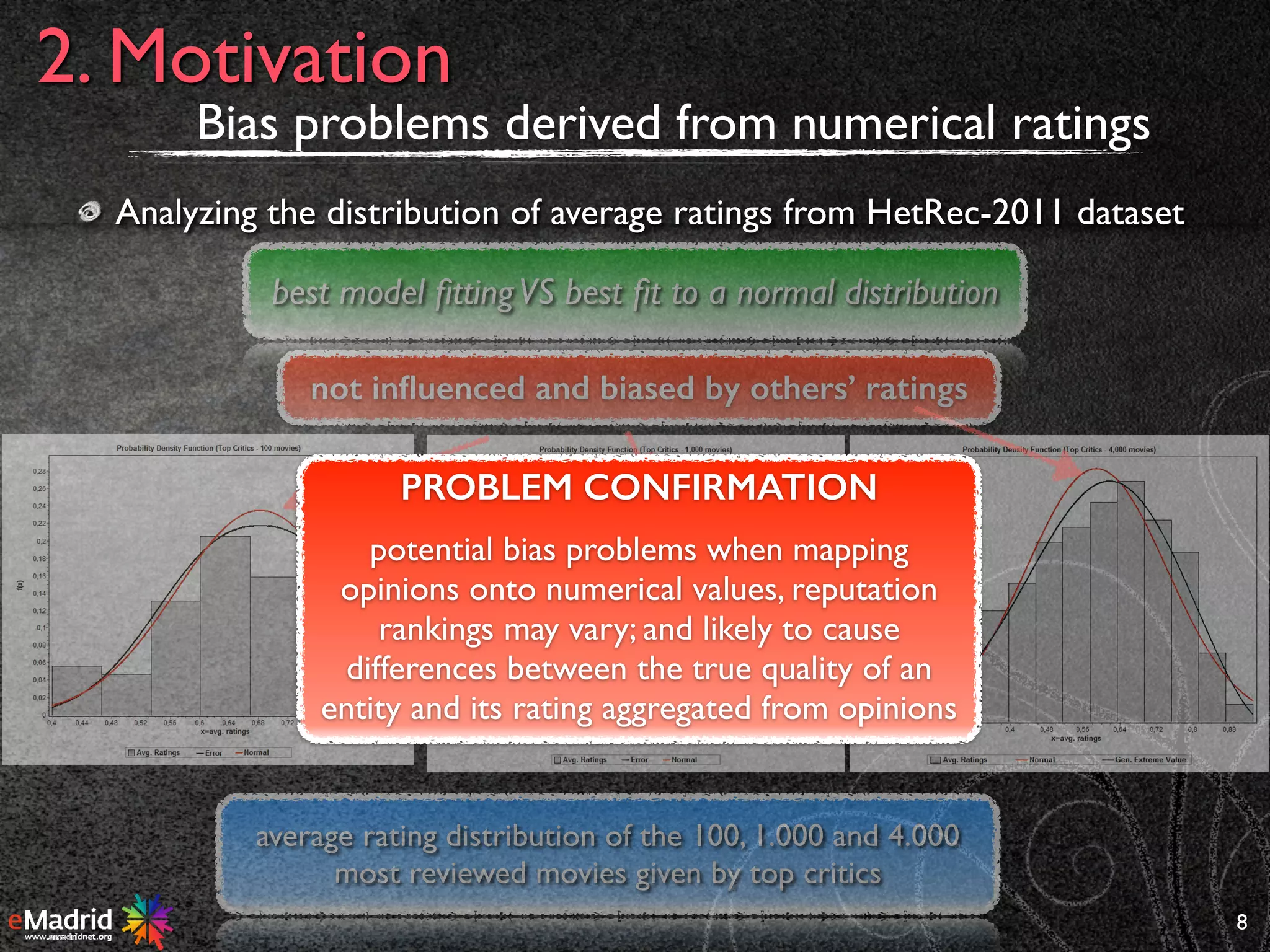

The document presents the Pairwise Preference Ranking Mechanism (PWRM), a reputation mechanism for MOOCs that replaces numerical ratings with pairwise queries for preference elicitation. Numerical ratings are criticized as subjective and prone to bias. PWRM instead proactively asks users to compare items and aggregates these comparative opinions in an iterative process. By modeling MOOCs as social networks, PWRM aims to rank educational resources dynamically according to user preferences.
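The summary mentions an iterative aggregation of comparative opinions but does not give PWRM's aggregation rule. The sketch below illustrates the general idea with a different, well-known model: Bradley-Terry, fitted with the standard iterative minorization-maximization (MM) update. It is not PWRM's algorithm.

- Each answer to a pairwise query is recorded as a (winner, loser) pair.
- The loop repeatedly re-estimates a strength score for each item.
- The function name `bradley_terry`, the `iterations` parameter and the example answers (including the third title, Casablanca) are hypothetical.

```python
from collections import defaultdict


def bradley_terry(comparisons, iterations=100):
    """Estimate a strength score per item from (winner, loser) pairs.

    Bradley-Terry model: P(i preferred to j) = p_i / (p_i + p_j).
    Fitted with the iterative MM update
        p_i <- W_i / sum_j n_ij / (p_i + p_j),
    where W_i is i's number of wins and n_ij the number of i-vs-j queries.
    Assumes the win/loss graph is strongly connected.
    """
    items = sorted({item for pair in comparisons for item in pair})
    wins = defaultdict(int)   # W_i
    n = defaultdict(int)      # n_ij, keyed by the unordered pair {i, j}
    for winner, loser in comparisons:
        wins[winner] += 1
        n[frozenset((winner, loser))] += 1

    p = {i: 1.0 for i in items}  # start from equal strengths
    for _ in range(iterations):
        new_p = {}
        for i in items:
            denom = sum(
                n[frozenset((i, j))] / (p[i] + p[j]) for j in items if j != i
            )
            new_p[i] = wins[i] / denom
        total = sum(new_p.values())
        p = {i: v / total for i, v in new_p.items()}  # rescale to sum to 1
    return p


# Hypothetical answers to "Which movie do you prefer, X or Y?"
answers = [
    ("Ben-Hur", "Gone with the Wind"),
    ("Ben-Hur", "Gone with the Wind"),
    ("Gone with the Wind", "Ben-Hur"),
    ("Ben-Hur", "Casablanca"),
    ("Ben-Hur", "Casablanca"),
    ("Casablanca", "Ben-Hur"),
    ("Gone with the Wind", "Casablanca"),
    ("Gone with the Wind", "Casablanca"),
    ("Casablanca", "Gone with the Wind"),
]
scores = bradley_terry(answers)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

The fitted scores only have a finite, positive solution when the win/loss graph is strongly connected, meaning no item or group of items has won or lost every one of its comparisons. The example data satisfies this. When every pair is compared equally often, as here, the fitted order matches plain win counts. A model like this pays off when some pairs are queried much more often than others.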

![11

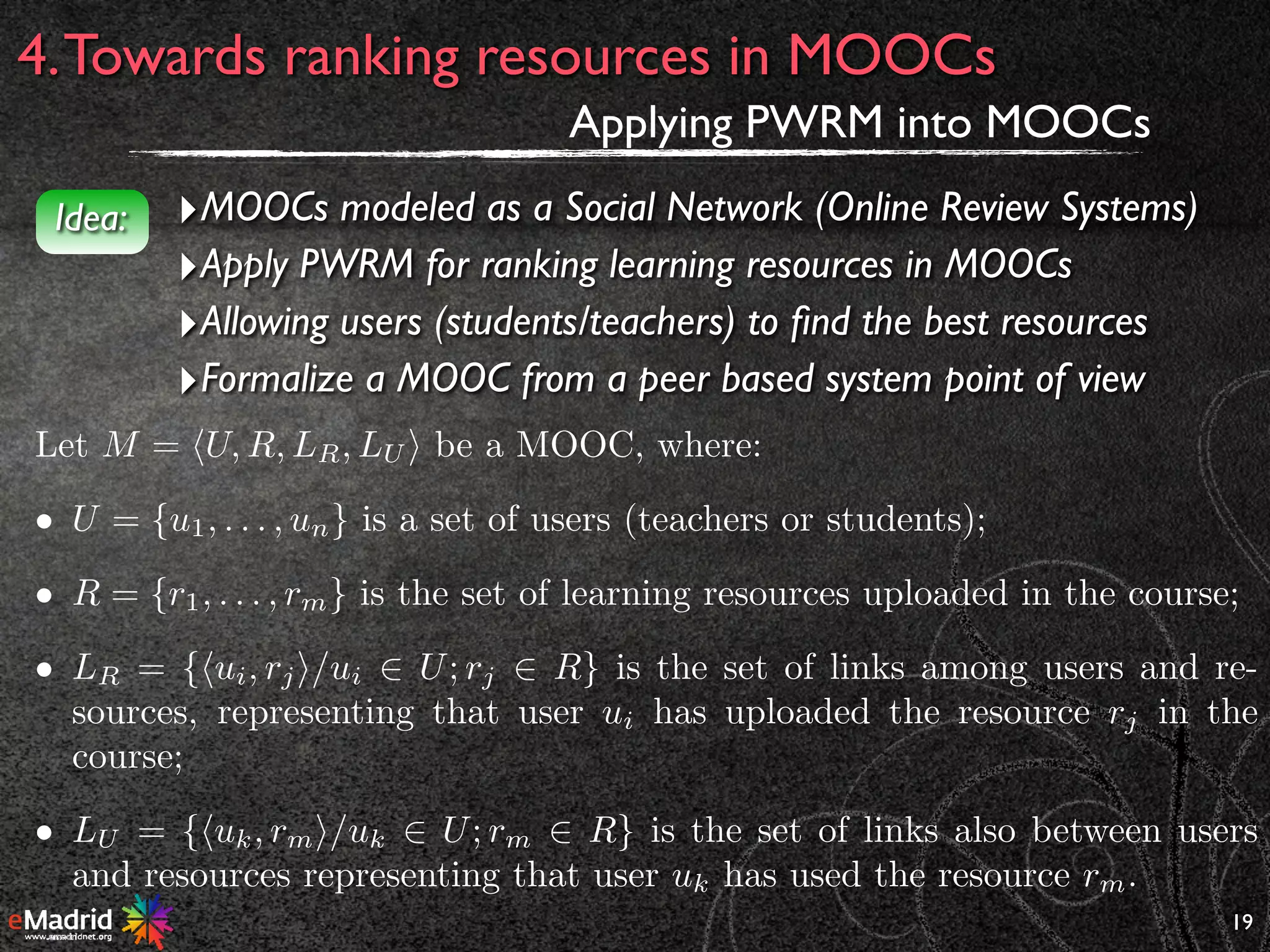

3. From opinion ratings to pairwise queries: PWRM

Comparative opinions: Pairwise preference elicitation

Based on pairwise queries:

FROM

Ben-Hur

[0..1]

[Awful, fairly bad, It’s OK,

Will enjoy, Must see]

Gone with

the wind

:

15. Ben-Hur 4.3

:

23. Gone with the wind 4.1

:](https://image.slidesharecdn.com/rcenteno-emadrid-jul-2015-150702154651-lva1-app6891/75/V-Jornadas-eMadrid-sobre-Educacion-Digital-Roberto-Centeno-Universidad-Nacional-de-Educacion-a-Distancia-Mecanismos-de-reputacion-en-MOOCs-34-2048.jpg)

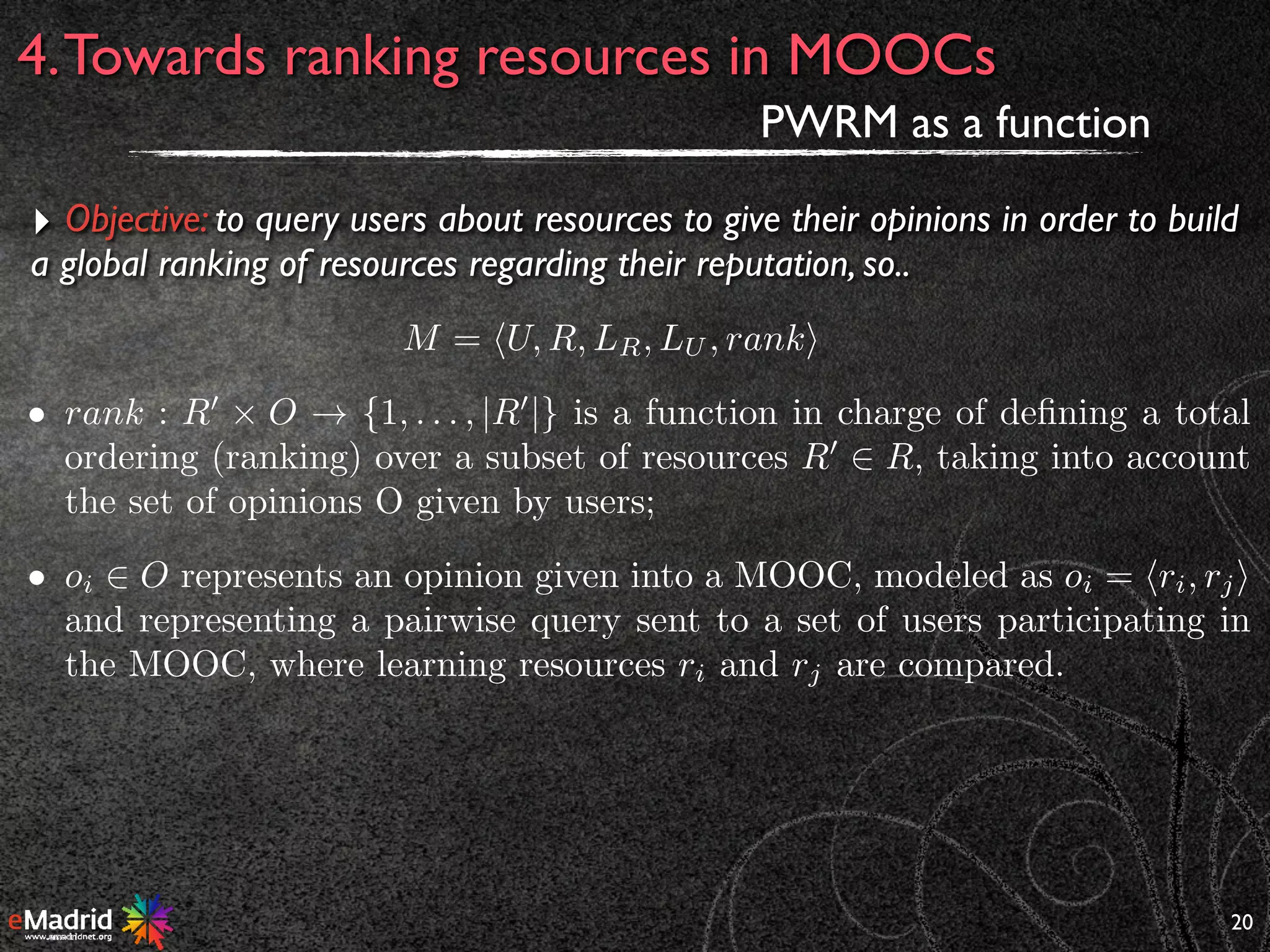

![From opinion ratings to pairwise queries: PWRM](https://image.slidesharecdn.com/rcenteno-emadrid-jul-2015-150702154651-lva1-app6891/75/V-Jornadas-eMadrid-sobre-Educacion-Digital-Roberto-Centeno-Universidad-Nacional-de-Educacion-a-Distancia-Mecanismos-de-reputacion-en-MOOCs-35-2048.jpg)

3. From opinion ratings to pairwise queries: PWRM

Comparative opinions: pairwise preference elicitation, based on pairwise queries.

FROM absolute ratings: each movie (e.g. Ben-Hur, Gone with the Wind) is rated on a numeric scale such as [0..1], or on a verbal scale [Awful, Fairly bad, It's OK, Will enjoy, Must see]. The resulting ranking lists each movie with its score: … 15. Ben-Hur 4.3 … 23. Gone with the Wind 4.1 …

TO pairwise queries: "Which movie do you prefer, Ben-Hur or Gone with the Wind?" The resulting ranking lists the movies without scores: … 15. Ben-Hur … 23. Gone with the Wind …

It is easier for users to state opinions when the queries compare objects in a pairwise fashion: "… between these two objects, which one do you prefer?"
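The FROM/TO contrast on this slide can be made concrete with a small Python sketch that implements both styles side by side. Several details are hypothetical and are not specified on the slide:

- the mapping of the verbal labels onto 1..5;
- the helper names `rank_by_ratings`, `pairwise_queries` and `rank_by_wins`;
- the ratings and answers used in the example.

Counting wins is used only as the simplest possible aggregation of pairwise answers, not as PWRM's actual rule.

```python
from itertools import combinations
from statistics import mean

# Hypothetical mapping of the slide's verbal scale onto 1..5; the slide does
# not say how labels are turned into scores such as 4.3.
SCALE = ["Awful", "Fairly bad", "It's OK", "Will enjoy", "Must see"]
LABEL_VALUE = {label: value for value, label in enumerate(SCALE, start=1)}


def rank_by_ratings(ratings):
    """FROM: rank items by the mean of their absolute ratings."""
    means = {item: mean(LABEL_VALUE[r] for r in rs) for item, rs in ratings.items()}
    return sorted(means, key=means.get, reverse=True), means


def pairwise_queries(items):
    """TO: one comparative question per unordered pair of items."""
    return [
        (a, b, f"Which movie do you prefer, {a} or {b}?")
        for a, b in combinations(items, 2)
    ]


def rank_by_wins(answers):
    """Rank items by the number of pairwise queries they won.

    A deliberately simple aggregation for illustration, not PWRM's rule.
    """
    wins = {}
    for winner, loser in answers:
        wins[winner] = wins.get(winner, 0) + 1
        wins.setdefault(loser, 0)
    return sorted(wins, key=wins.get, reverse=True)


# FROM: absolute ratings per movie (hypothetical data).
ratings = {
    "Ben-Hur": ["Must see", "Will enjoy", "Must see"],
    "Gone with the Wind": ["Will enjoy", "Will enjoy", "Must see"],
}
order, means = rank_by_ratings(ratings)

# TO: ask the comparative question instead, and count who wins.
queries = pairwise_queries(["Ben-Hur", "Gone with the Wind"])
answers = [
    ("Ben-Hur", "Gone with the Wind"),
    ("Ben-Hur", "Gone with the Wind"),
    ("Gone with the Wind", "Ben-Hur"),
]
print(order, means)
print(queries[0][2])
print(rank_by_wins(answers))
```

For n items, `combinations` yields n(n-1)/2 queries, so the number of questions grows quickly. This slide does not say how PWRM selects which pairs to ask.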