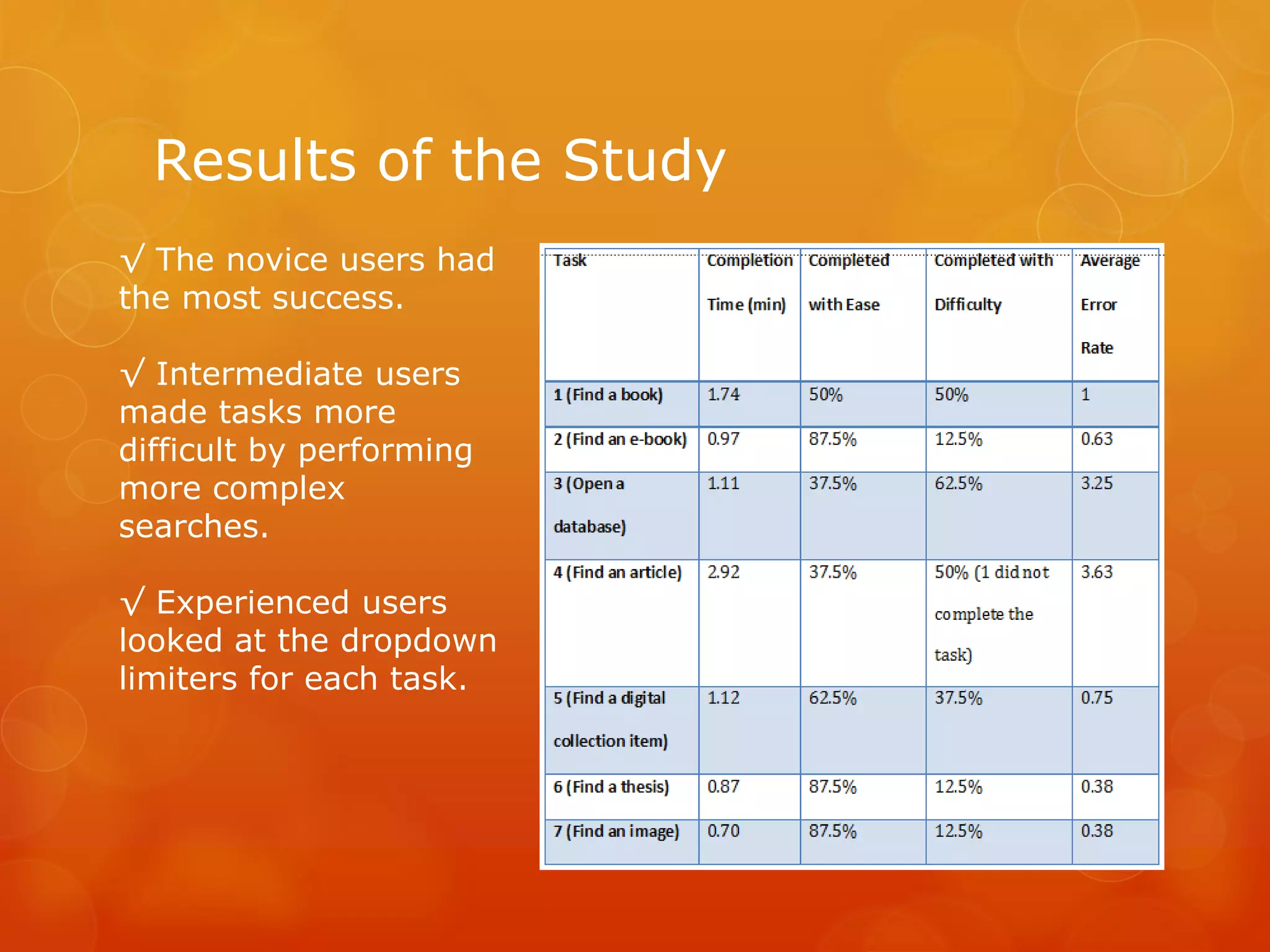

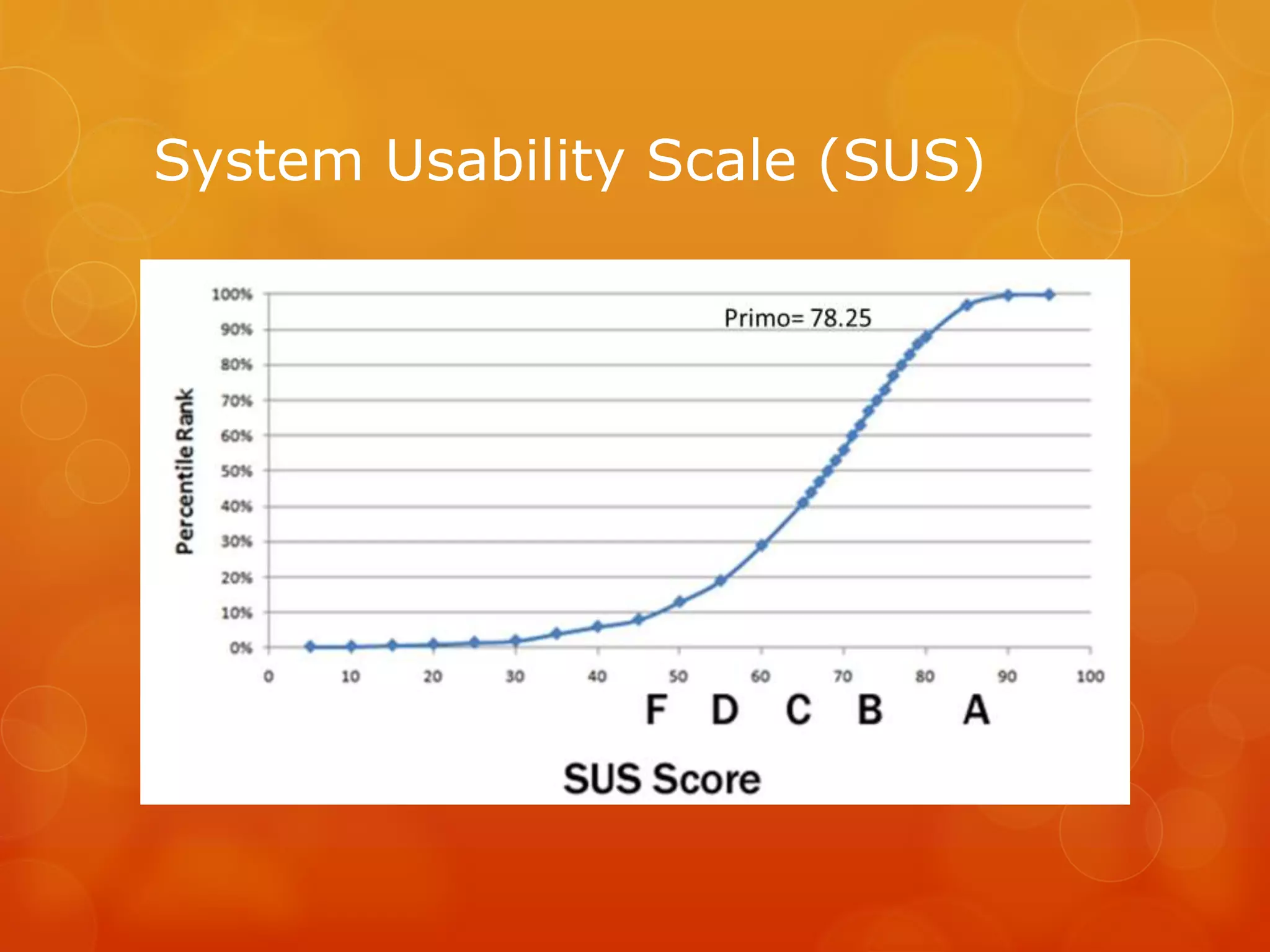

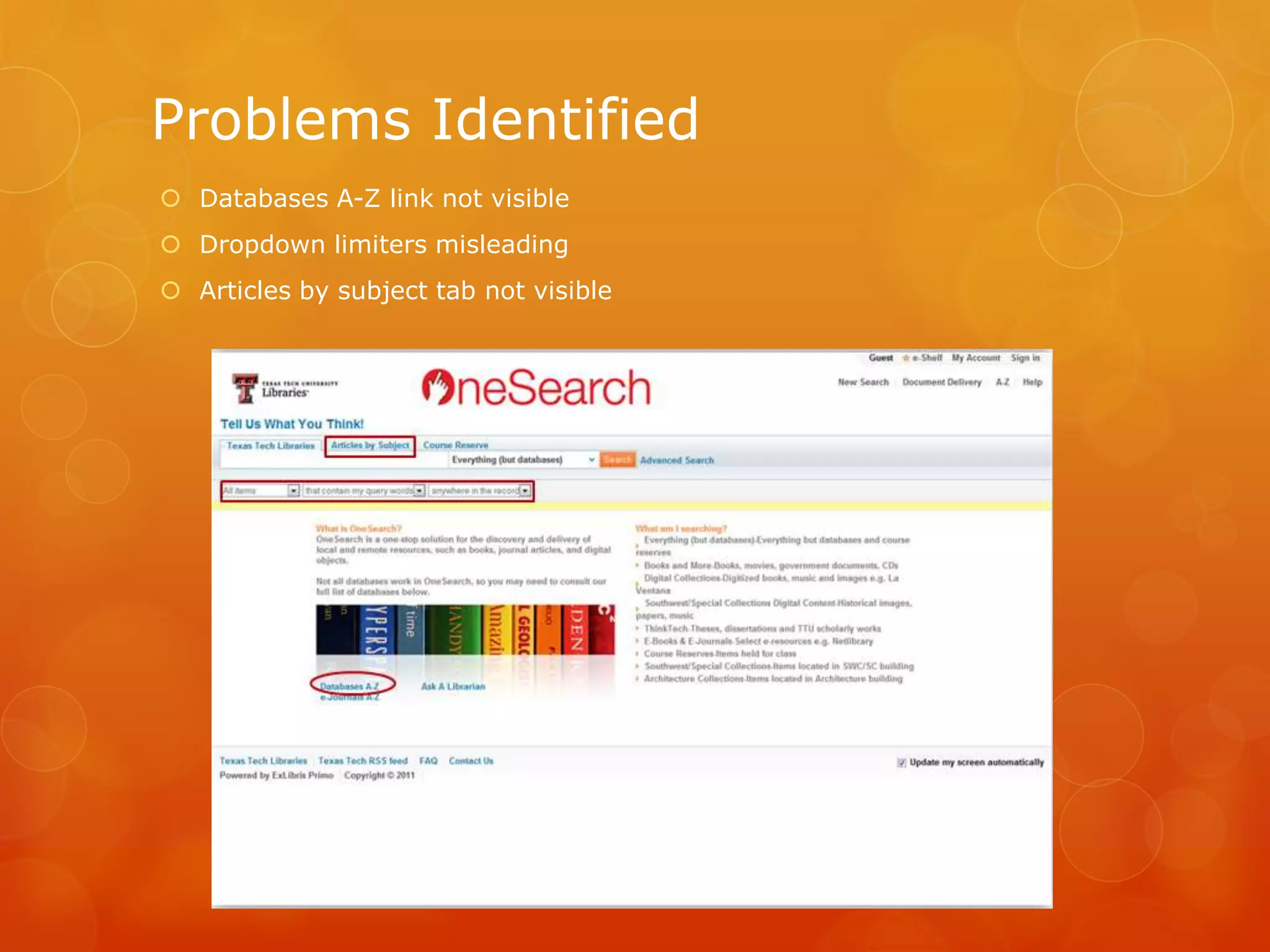

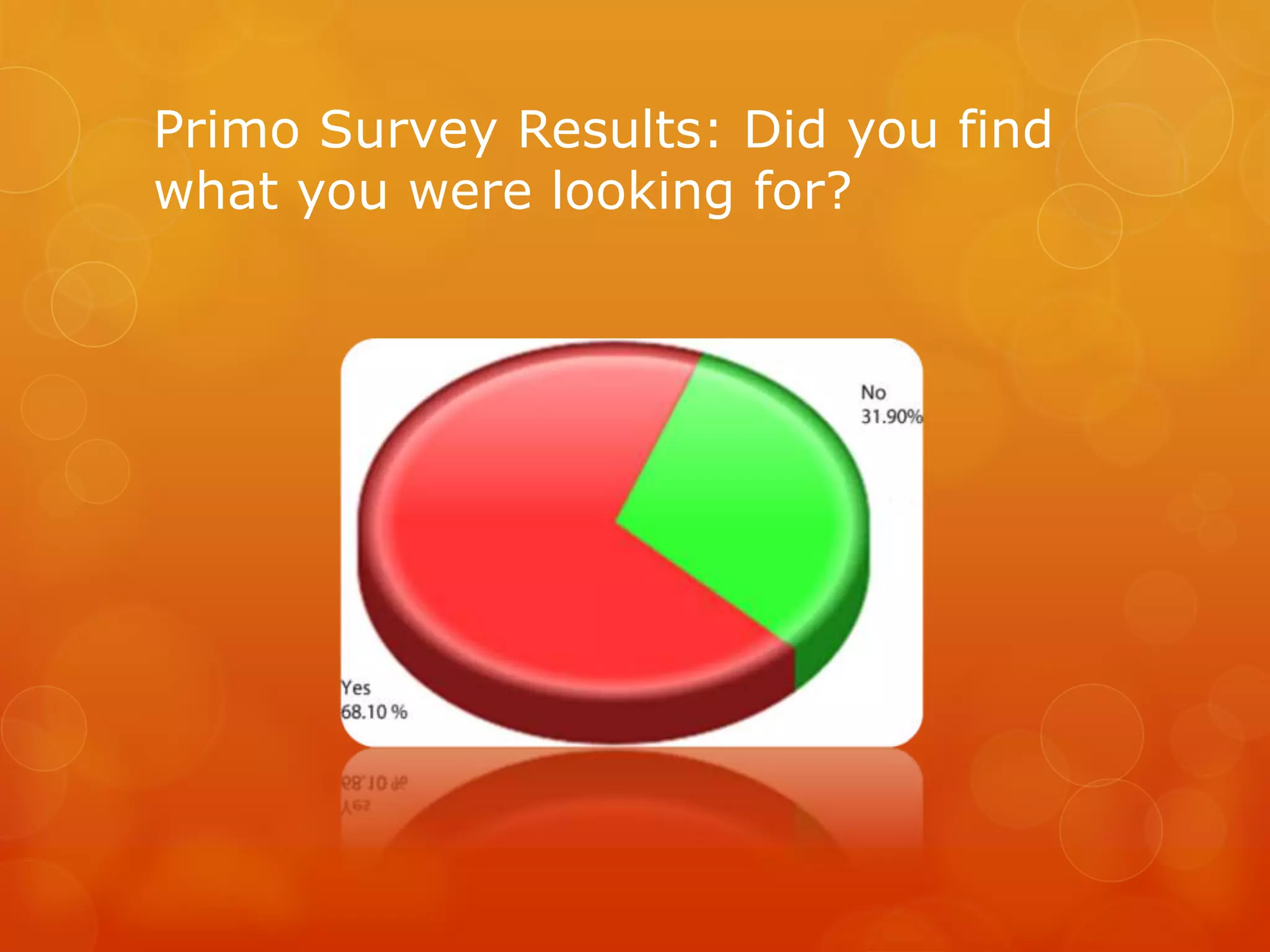

The document outlines a usability study that Texas Tech University Libraries conducted to evaluate how well the Primo-based OneSearch interface serves library patrons. The study combined several tasks and usability methods. It found that novice users were the most successful, and it identified several areas for improvement, most of them cosmetic. Overall feedback was positive, and many of the problems identified could be fixed easily.

![References](https://image.slidesharecdn.com/ttulibrariesprimousabilitystudy2012-160818194204/75/Primo-Usability-What-Texas-Tech-Discovered-When-Implementing-Primo-20-2048.jpg)

References

Clark, Melanie, Esther De Leon, Lynne Edgar, and Joy Perrin (2011). Primo “OneSearch” usability report. Lubbock, TX: Texas Tech University.

Clark, Melanie, Esther De Leon, Lynne Edgar, and Joy Perrin (2012). Library usability: tools for usability testing in the library. Lubbock, TX: Texas Tech University.

Clark, Melanie, Esther De Leon, Lynne Edgar, and Joy Perrin (2012). Library usability: tools for usability testing in the library [presentation]. Texas Library Association Conference, Houston, TX, April 19, 2012.

Krug, Steve (2006). Don’t make me think! A common sense approach to web usability, second edition. Berkeley, CA: New Riders.

Sauro, Jeff (February 2, 2011). Measuring usability with the System Usability Scale (SUS). Retrieved February 22, 2012, from http://www.measuringusability.com/sus.php

Schmidt, Aaron, and Amanda Etches-Johnson (January 25, 2012). 10 steps to a user-friendly library website. Retrieved January 25, 2012, from https://alapublishing.webex.com

Still, Brian (2010). A study guide for the certified user experience professional (CUEP) workshop [study guide]. Lubbock, TX: Texas Tech University.