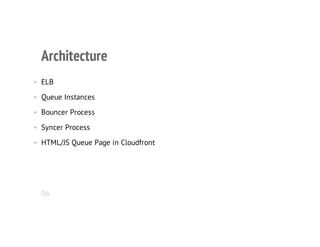

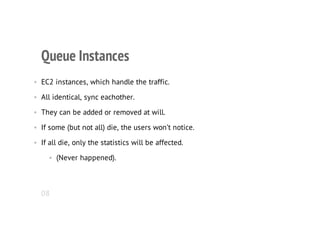

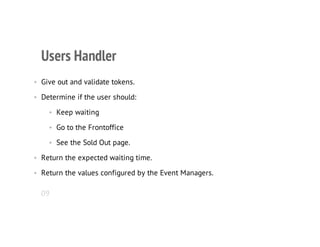

The document discusses strategies for handling high traffic (more than 10,000 hits per second) with Python, focusing on queue management and server scalability. It outlines an architecture built from auto-scaling load balancers, queue instances, and data synchronization, and it stresses the importance of communicating with users and of system resilience. Key takeaways are that distributed applications are hard to debug and that auto-scaling technologies have limits.
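
To make the queue-management idea concrete, here is a minimal single-process sketch of a waiting room that caps the number of active users and tells everyone else their position in line. It is an illustration under stated assumptions, not the architecture from the slides: the `WaitingRoom` class, its `capacity` parameter, and the `arrive`/`leave` methods are hypothetical names, and a real deployment would keep this state in shared storage synchronized across the queue instances.

```python
from collections import deque


class WaitingRoom:
    """Hypothetical virtual queue: at most `capacity` users are active at once."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.active = set()      # users currently allowed through
        self.waiting = deque()   # users waiting, oldest first

    def arrive(self, user_id):
        """Admit the user if there is room, otherwise enqueue them.

        Returns 0 if the user is admitted, else their 1-based queue position,
        which can be shown to the user while they wait.
        """
        if user_id in self.active:
            return 0
        if user_id in self.waiting:
            return self.waiting.index(user_id) + 1
        # Only skip the queue when nobody is already waiting (keeps FIFO order).
        if len(self.active) < self.capacity and not self.waiting:
            self.active.add(user_id)
            return 0
        self.waiting.append(user_id)
        return len(self.waiting)

    def leave(self, user_id):
        """Free the user's slot and promote the longest-waiting users."""
        self.active.discard(user_id)
        while self.waiting and len(self.active) < self.capacity:
            self.active.add(self.waiting.popleft())


room = WaitingRoom(capacity=2)
positions = [room.arrive(u) for u in ("a", "b", "c", "d")]
room.leave("a")  # frees one slot, so "c" is promoted and "d" moves up
```

Returning the queue position on every call is what lets the front end keep users informed while they wait. The linear `index` lookup is acceptable for a sketch but would need a faster structure at the traffic levels discussed here.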