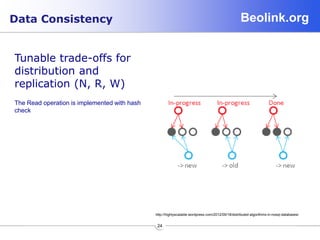

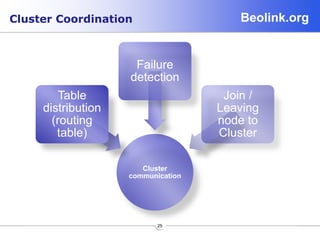

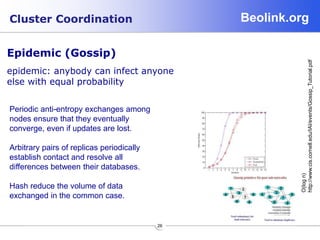

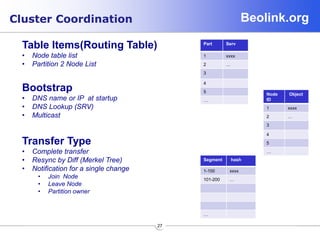

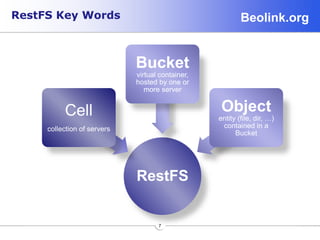

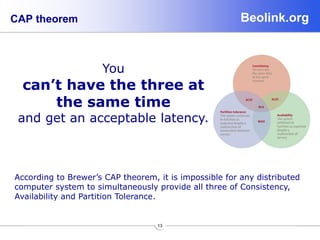

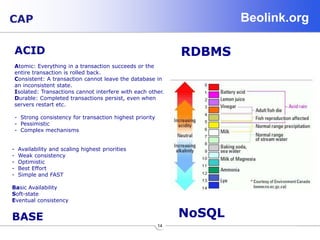

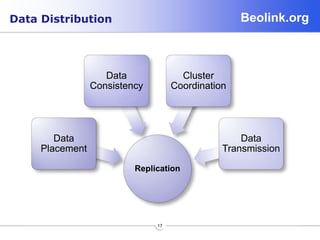

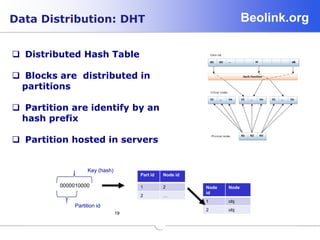

The document describes the Pisa framework for block data distribution and replication, detailing its architecture, implementation, and scalability features. Key concepts include data consistency challenges, the CAP theorem, and decentralized cluster coordination mechanisms. The framework aims to provide a low-cost, high-reliability storage solution by utilizing techniques like distributed hash tables and epidemic communication protocols.

![Pisa, slide 3: Block Storage Devices](https://image.slidesharecdn.com/pisa2-140527013013-phpapp01/85/Pisa-3-320.jpg)

Beolink.org

Block Storage Devices

Pisa is a simple block data distribution and replication framework for a wide range of nodes.

Diagram labels: Data, Block, Key, [Hash], Node, New Node, Transfer.

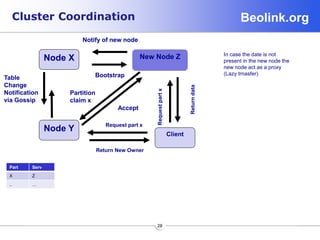

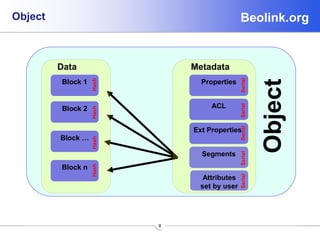

![Pisa, slide 20: Data Distribution](https://image.slidesharecdn.com/pisa2-140527013013-phpapp01/85/Pisa-20-320.jpg)

Data Distribution

Zero Hop Hash (Consistent Hash)

- Partition location with 0 hops
- Adding 1% capacity moves about 1% of the data

Node

- Zone
- Weight

Partition, array list (FIXED):

- Position = key prefix
- Value = node id

Shuffle: avoid sequential allocation

    part_list = array('H')
    part_key_shift = 32 - part_exp
    part_count = 2 ** part_exp
    sha(data).digest())[0] >> self.partition_shift
    shuffle(part_list)

Example node:

    Ip = 10.1.0.1
    zone = 1
    weight = 3.0
    class = 1
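The zero-hop lookup on this slide can be sketched in a few lines of Python 3. This is a minimal illustration rather than Pisa's actual code: the class name `PartitionRing`, the use of `hashlib.sha1` for the slide's `sha`, and `struct.unpack_from('>I', ...)` to read the 32-bit key prefix are all assumptions, and node weights and zones are ignored (every node gets an equal share of partitions).

```python
import hashlib
import random
from array import array
from struct import unpack_from


class PartitionRing:
    """Fixed-size partition table: position = key prefix, value = node id.

    Hypothetical sketch; equal node weights, no zone awareness.
    """

    def __init__(self, node_count, part_exp=8, seed=0):
        # Number of low bits dropped from the 32-bit key prefix.
        self.partition_shift = 32 - part_exp
        part_count = 2 ** part_exp
        # 'H' stores unsigned 16-bit integers, one node id per partition.
        self.part_list = array('H', (p % node_count for p in range(part_count)))
        # Shuffle so that neighbouring partitions are not allocated sequentially.
        random.Random(seed).shuffle(self.part_list)

    def partition_of(self, data: bytes) -> int:
        # Assumption: the first 4 bytes of the SHA-1 digest form the key prefix.
        digest = hashlib.sha1(data).digest()
        return unpack_from('>I', digest)[0] >> self.partition_shift

    def node_of(self, data: bytes) -> int:
        # Zero hops: one hash plus one array lookup, no routing between nodes.
        return self.part_list[self.partition_of(data)]


ring = PartitionRing(node_count=4, part_exp=8)
print(ring.node_of(b"block-42"))
```

Because the partition count is fixed, adding capacity only reassigns entries in `part_list`; the key-to-partition mapping itself never changes.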

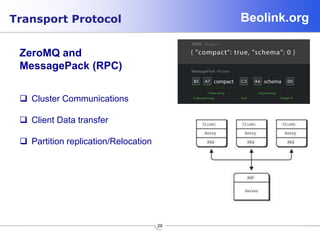

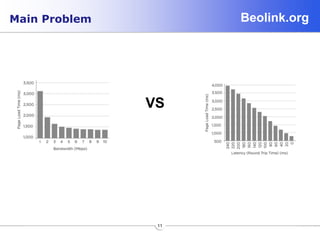

![Pisa, slide 22: Data Distribution, proximity based](https://image.slidesharecdn.com/pisa2-140527013013-phpapp01/85/Pisa-22-320.jpg)

Data Distribution

Proximity based

http://highlyscalable.wordpress.com/2012/09/18/distributed-algorithms-in-nosql-databases/

    node_ids = [master_node]
    zones = [self.nodes[node_ids[0]]]
    for replica in xrange(1, replicas):
        # Walk forward, wrapping around, until a node not yet chosen is found.
        while self.part_list[part_id] in node_ids:
            part_id += 1
            if part_id >= len(self.part_list):
                part_id = 0
        node_ids.append(self.part_list[part_id])
    return [self.nodes[n] for n in node_ids]

| Part | Serv  |
|------|-------|
| 1    | xxxx  |
| 2    | yyyyy |
| 3    | zzzzz |
| 4    |       |
| 5    |       |
| …    |       |

Partition 1 is also stored on nodes 2 and 3; the master node is always the first in the list.
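The replica selection on this slide can be tried out as a standalone Python 3 function. This is a hedged sketch under a few assumptions: the function name `replica_nodes` is hypothetical, the master node is taken to be the owner of the starting partition, and zones are not considered (the slide's `zones` list is declared but not used in the visible code). The guard against requesting more replicas than there are distinct nodes is an addition; without it the inner loop would never terminate.

```python
def replica_nodes(part_list, part_id, replicas):
    """Return the master node id followed by (replicas - 1) distinct successors."""
    if replicas > len(set(part_list)):
        # Added guard: the walk below cannot finish without enough distinct nodes.
        raise ValueError("not enough distinct nodes for the requested replicas")
    node_ids = [part_list[part_id]]  # the master node is always first
    for _ in range(1, replicas):
        # Walk forward, wrapping around, until a node not yet chosen appears.
        while part_list[part_id] in node_ids:
            part_id = (part_id + 1) % len(part_list)
        node_ids.append(part_list[part_id])
    return node_ids


part_list = [0, 1, 2, 1, 0, 2]           # partition index -> node id
print(replica_nodes(part_list, 0, 3))    # [0, 1, 2]
print(replica_nodes(part_list, 4, 3))    # [0, 2, 1]
```

Starting from partition 4, the walk wraps past the end of the table, which is why the second call returns the nodes in a different order while still listing the master first.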