This dissertation by Amrit Ranjan presents a face recognition method for managing photograph databases, utilizing principal component analysis (PCA) to extract and classify facial features for efficient image searching and identification. The proposed system addresses limitations of existing facial recognition systems, enhancing recognition accuracy and allowing for feature-based searches to accommodate variations in facial images. Through neural network training and eigenface representation, the study showcases the system's potential applications in security and criminal identification.

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 5

DEPARTMENT OF COMPUTER SCIENCE, BITS PILANI

2. LITERATURE SURVEY

2.1 BACKGROUND

Biometrics-based human-computer interfaces (HCI) are becoming the most popular systems for security and access control. A face recognition system is one such biometric HCI system. It distinguishes among various human faces and identifies a face from a digital image or a video frame from a video source. This is normally done by comparing selected facial features of the image with those in a facial database that contains multiple images of people and their information. Humans recognize faces remarkably efficiently, irrespective of changes in the visual input due to lighting conditions, background, and aging [1]. A system that can match humans in this process is highly desirable yet very difficult to build. A basic face recognition system may follow a face detection system, whose function is to identify a face in a given image and ignore all the other background details. After the face image is extracted, it is given as input to the face recognition system, which first extracts basic features that distinguish one face from another; classifiers are then used to match images with those stored in the database to identify a person. A basic face recognition system therefore contains the following sub-modules [2]:

Face Detection

Feature Extraction

Feature Matching/Classification

In ‘Face Recognition: A Literature Survey’ [3], R. Chellappa, P. J. Phillips, and A. Rosenfeld

note that face recognition has recently received

significant attention, especially during the past several years, as one of the most

successful applications of image analysis and understanding. This paper provides an up-

to-date critical survey of still and video-based face recognition research. There are two

underlying motivations for them to write this survey paper: the first is to provide an up-

to-date review of the existing literature, and the second is to offer some insights into the

studies of machine recognition of faces. To provide a comprehensive survey, they not

only categorize existing recognition techniques but also present detailed descriptions of

representative methods within each category. In addition, relevant topics such as](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-15-2048.jpg)

![

psychophysical studies, system evaluation, and issues of illumination and pose variation

are covered. In ‘Eigenfaces for

Recognition’ [4], Matthew Turk and Alex Pentland describe a near real-time computer system that can locate

and track a person’s head and then recognize it by comparing the features of this face

with those of known individuals. They treat the face recognition problem as an

intrinsically two dimensional recognition problem. The system functions by projecting

face images onto a feature space that spans the significant variations among known face

images. The significant features are known as ‘eigenfaces’ because they are the eigenvectors

of the set of faces; they do not necessarily correspond to features such as eyes,

ears and noses. The projection operation characterizes an individual face by a weighted

sum of the eigenface features, and so to recognize a face it is necessary only to compare

these weights to those of known individuals. Some particular advantages of the proposed

approach are that it provides for the ability to learn and later recognize new faces in an

unsupervised manner, and that it is easy to implement using a neural network approach.
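The projection-and-compare idea described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the system from [4]: the function names `train_eigenfaces` and `recognize`, the synthetic 8 × 8 "faces", and the use of SVD with Euclidean nearest-neighbour matching are all assumptions made for the example.

```python
import numpy as np

def train_eigenfaces(faces, num_components):
    """faces: (num_images, num_pixels) array, one flattened image per row."""
    mean_face = faces.mean(axis=0)
    centered = faces - mean_face
    # SVD of the centered data: the rows of vt are the eigenvectors of the
    # covariance matrix (the eigenfaces), ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    eigenfaces = vt[:num_components]
    weights = centered @ eigenfaces.T        # one weight vector per known face
    return mean_face, eigenfaces, weights

def recognize(face, mean_face, eigenfaces, known_weights):
    """Return the index of the known face with the closest weight vector."""
    w = (face - mean_face) @ eigenfaces.T
    distances = np.linalg.norm(known_weights - w, axis=1)
    return int(np.argmin(distances)), float(distances.min())

# Synthetic data (hypothetical): six random 8x8 images stand in for a gallery.
rng = np.random.default_rng(0)
gallery = rng.random((6, 64))
mean_face, eigenfaces, weights = train_eigenfaces(gallery, num_components=4)

# A slightly noisy copy of gallery image 2 should match index 2.
probe = gallery[2] + 0.01 * rng.standard_normal(64)
best_index, distance = recognize(probe, mean_face, eigenfaces, weights)
```

Each face is reduced to four weights here, so matching compares 4-element vectors instead of 64-pixel images.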

In ‘Face Recognition using Principal

Component Analysis’ [5], Kyungnam Kim explains that Principal Component Analysis (PCA) is one

of the most successful techniques that have been used in image recognition and

compression. The purpose of PCA is to reduce the large dimensionality of the dataspace

to the smaller intrinsic dimensionality of feature space (independent variables), which are

needed to describe the data economically. The main idea of using PCA for face

recognition is to express the large 1-D vector of pixels constructed from a 2-D facial image

in terms of the compact principal components of the feature space. This is called eigenspace

projection. The eigenspace is calculated by identifying the eigenvectors of the covariance

matrix derived from a set of facial images (vectors). The paper describes the

mathematical formulation of PCA and a detailed method of face recognition by PCA. In ‘Enhanced Fisherface for Face Recognition’, Journal of Information & Computational Science 2:3 (2005) 591-595 [6], Baocai Yin, Xiaoming Bai, Qin Shi, and Yanfeng Sun enhance Fisherface for face recognition from one example image per person. Fisherface requires several training images for each face and can hardly be applied to applications where only one example image per person is available for training. They enhance Fisherface by utilizing a morphable model to derive multiple](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-16-2048.jpg)

![

images of a face from one single image. Region filling and hidden-surface removal methods are used to generate virtual example images. Experimental results on the ORL and UMIST face databases show that their method yields an impressive performance improvement over conventional Eigenface methods. In “Face Recognition using Neural Networks”, Signal Processing: An International Journal (SPIJ) Volume (3) : Issue (5) [7], P. Latha, Dr. L. Ganesan, and Dr. S. Annadurai explain that face recognition is a biometric method that identifies a given face image using the main features of the face. The paper presents a neural-network-based algorithm to detect frontal views of faces. The dimensionality of the face image is reduced by principal component analysis (PCA), and recognition is done by a back propagation neural network (BPNN). They used 200 face images from the Yale database and calculated performance metrics such as acceptance ratio and execution time. The approach is reported to be robust, with an acceptance ratio of more than 90%. In “Face Recognition Using Eigen Faces and Artificial Neural Network”, International Journal of Computer Theory and Engineering, Vol. 2, No. 4, August 2010 [8], Mayank Agarwal, Nikunj Jain, Mr. Manish Kumar, and Himanshu Agrawal describe the face as a complex multidimensional visual model for which developing a computational recognition model is difficult. The paper presents a methodology for face recognition based on an information theory approach of coding and decoding the face image. The proposed methodology combines two stages: feature extraction using principal component analysis, and recognition using a feed-forward back propagation neural network. The algorithm was tested on 400 images (40 classes) from the Olivetti and Oracle Research Laboratory (ORL) face database. A recognition score for the test lot is calculated by considering almost all the variants of feature extraction. The test results are said to give a recognition rate of

97.018%.](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-17-2048.jpg)

![

In the geometric feature-based approach the primary step is to localize and track a dense

set of facial points. Most geometric feature-based approaches use the active appearance

model (AAM) or its variations, to track a dense set of facial points. The locations of these

facial landmarks are then used in different ways to extract the shape of facial features,

and movement of facial features, as the expression evolves. [9] use AAM with second

order minimization, and a multilayer perceptron, for the recognition of facial expressions.

A recent example of an AAM-based technique for facial expression recognition is

presented in [10], in which different AAM fitting algorithms are compared and evaluated.

Another example of a system that uses geometric features to detect facial expressions is

that by Kotsia et al. [11]. A Kanade-Lucas-Tomasi (KLT) tracker was used, after

manually locating a number of facial points. The geometric displacement of certain

selected Candide nodes, defined as the differences of the node coordinates between the first

and the greatest facial expression intensity frames, was used as an input to a novel

multiclass SVM classifier. Sebe et al. [12] also use geometric features to detect

emotions. In this method, the facial feature points were manually located, and piecewise

Bezier volume deformation tracking was used to track those manually placed facial

landmarks. They experimented with a large number of machine learning techniques,

and the best result was obtained with a simple k-nearest neighbor technique. Sung and

Kim [13] introduce Stereo AAM (STAAM), which improves the fitting and tracking of

standard AAMs, by using multiple cameras to model the 3-D shape and rigid motion

parameters. A layered generalized discriminant analysis classifier is then used to combine

3-D shape and registered 2-D appearance, for the recognition of facial expressions. In

[14], a geometric features-based approach for modeling, tracking, and recognizing facial

expressions on a low-dimensional expression manifold was presented. Sandbach et

al. [15] recently presented a method that exploits 3D motion-based features between

frames of 3D facial geometry sequences, for dynamic facial expression recognition.

Onset and offset segment features of the expression are extracted, by using feature

selection methods. These features are then used to train the GentleBoost classifiers, and

build a Hidden Markov Model (HMM), in order to model the full temporal dynamic of

the expressions. Rudovic and Pantic [16] introduce a method for head-pose invariant

facial expression recognition that is based on a set of characteristic facial points,](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-19-2048.jpg)

![

extracted using AAMs. A coupled scale Gaussian process regression (CSGPR) model is

used for head-pose normalization.

Researchers have also developed systems for facial expression recognition, by utilizing

the advantages of both geometric-based and appearance-based features. Lyons and

Akamatsu [17] introduced a system for coding facial expressions with Gabor wavelets.

The facial expression images were coded using a multi-orientation, multi-resolution set of

Gabor filters at some fixed geometric positions of the facial landmarks. The similarity

space derived from this representation was compared with one derived from semantic

ratings of the images by human observers. In [18], a comparison between geometric-

based and Gabor-wavelets-based facial expression recognition using a multi-layer

perceptron was presented. Huang et al. [19] presented a boosted component-based facial

expression recognition method by utilizing the spatiotemporal features extracted from

dynamic image sequences, where the spatiotemporal features were extracted from facial

areas centered at 38 detected fiducial interest points.

2.3 PHOTOMETRIC (VIEW BASED)

Photometric stereo is a technique in computer vision for estimating the surface normals of objects by observing them under different lighting conditions. It is based on the fact that the amount of light reflected by a surface depends on the orientation of the surface in relation to the light source and the observer. By measuring the amount of light reflected into a camera, the space of possible surface orientations is limited. Given enough light sources from different angles, the surface orientation may be constrained to a single orientation or even overconstrained.
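A small numerical sketch of this idea for a single pixel is given below. It assumes a Lambertian surface (intensity = albedo × light direction · normal), distant light sources with known unit directions, and no shadows; the light directions, the albedo, and the helper name `estimate_normal` are hypothetical values chosen for illustration.

```python
import numpy as np

def estimate_normal(light_dirs, intensities):
    """Least-squares surface normal and albedo for one pixel.

    light_dirs: (k, 3) unit vectors pointing toward each light source.
    intensities: (k,) observed brightness under each light.
    """
    # Solve light_dirs @ g = intensities, where g = albedo * normal.
    g, *_ = np.linalg.lstsq(light_dirs, intensities, rcond=None)
    albedo = np.linalg.norm(g)
    return g / albedo, albedo

# Four hypothetical light directions (each already unit length).
lights = np.array([[0.0, 0.0, 1.0],
                   [0.6, 0.0, 0.8],
                   [0.0, 0.6, 0.8],
                   [-0.6, 0.0, 0.8]])
true_normal = np.array([0.0, 0.6, 0.8])
true_albedo = 0.5

# Simulated Lambertian observations: I = albedo * (L . n).
observed = true_albedo * (lights @ true_normal)
normal, albedo = estimate_normal(lights, observed)
```

With three non-coplanar lights the orientation is determined exactly; the fourth light makes the system overconstrained, which is why a least-squares solve is used.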

The technique was originally introduced by Woodham in 1980. The special case where

the data is a single image is known as shape from shading, and was analyzed by B. K. P.

Horn in 1989. Photometric stereo has since been generalized to many other situations,

including extended light sources and non-Lambertian surface finishes. Current research

aims to make the method work in the presence of projected shadows, highlights, and non-

uniform lighting. Surface normals define the local metric; using this observation, Bronstein

et al. [20] defined a 3D face recognition system based on the reconstructed metric](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-20-2048.jpg)

![

without integrating the surface. The metric of the facial surface is known to be robust to

expressions.

Figure 2.3 (a) Facial Surface[20]

The input of a face recognition system is always an image or video stream. The output is

an identification or verification of the subject or subjects that appear in the image or

video.

A face recognition system can be viewed as a three-step process:

Figure 2.3 (b) Basic Face Recognition](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-21-2048.jpg)

![

Pedestrian Detection

Kinect projects

3D reconstruction

2.5 PCA

PCA stands for Principal Component Analysis, a statistical tool used to explore, sort, and group data. PCA takes a large number of correlated (interrelated) variables and transforms them into a smaller number of uncorrelated variables (principal components) while retaining the maximal amount of variation, thus making the data easier to work with and to use for prediction. As Smith (2002) puts it, “PCA is a way of identifying patterns in data, and expressing the data in such a way as to highlight their similarities and differences [13]. Since patterns in data can be hard to find in data of high dimension, where the luxury of graphical representation is not available, PCA is a powerful tool for analyzing data.”

History of PCA

According to Jolliffe (2002) it is generally accepted that PCA was first described by Karl

Pearson in 1901. In his article “On lines and planes of closest fit to systems of points in

space,” Pearson (1901) discusses the graphical representation of data and lines that best

represent the data. He concludes that “The best-fitting straight line to a system of points

coincides in direction with the maximum axis of the correlation ellipsoid”. He also states

that the analysis used in his paper can be applied to multiple variables. However, PCA

was not widely used until the development of computers. It is not really feasible to do

PCA by hand when the number of variables is greater than four, but it is exactly for larger

numbers of variables that PCA is really useful, so the full potential of PCA could not be

realized until computers became widespread (Jolliffe, 2002). According to Jolliffe (2002),

significant contributions to the development of PCA were made by Hotelling (1933) and

Girshick (1936; 1939) before interest in PCA expanded. In the 1960s, as the

interest in PCA rose, important contributors were Anderson (1963) with a theoretical

discussion, Rao (1964) with numerous new ideas concerning uses, interpretations and](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-24-2048.jpg)

![

of variables optimizing a certain algebraic criterion. Shlens (2009) gives an overview of how

to perform principal component analysis:

Organize the data as an m×n matrix, where m is the number of measurement types and n is

the number of samples.

Subtract off the mean for each measurement type.

Calculate the covariance matrix.

Calculate the eigenvectors and eigenvalues of the covariance matrix.
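The four steps above can be sketched directly in NumPy. This is an illustrative sketch, not code from Shlens (2009): the function name `pca`, the 1/n normalization of the covariance, and the synthetic two-variable data set are assumptions made for the example.

```python
import numpy as np

def pca(data):
    """data: m x n array with m measurement types (rows) and n samples (columns)."""
    # Step 2: subtract the mean of each measurement type (each row).
    centered = data - data.mean(axis=1, keepdims=True)
    # Step 3: m x m covariance matrix (1/n normalization assumed here).
    n = data.shape[1]
    covariance = centered @ centered.T / n
    # Step 4: eigen-decomposition; eigh is used because the matrix is symmetric.
    eigenvalues, eigenvectors = np.linalg.eigh(covariance)
    order = np.argsort(eigenvalues)[::-1]          # largest variance first
    return eigenvalues[order], eigenvectors[:, order]

# Step 1: organize the data as an m x n matrix (2 measurement types, 500 samples).
rng = np.random.default_rng(1)
t = rng.standard_normal(500)
data = np.vstack([t, 2.0 * t + 0.1 * rng.standard_normal(500)])

eigenvalues, components = pca(data)
first_direction = components[:, 0]    # close to (1, 2) / sqrt(5), up to sign
```

Because the second variable is roughly twice the first, almost all of the variance lies along the first principal direction.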

DERIVING PRINCIPAL COMPONENTS

Following is a standard derivation of principal components presented by Jolliffe (2002)

To derive the form of the PCs, consider first $\alpha_1' x$; the vector $\alpha_1$ maximizes

$\mathrm{var}[\alpha_1' x] = \alpha_1' \Sigma \alpha_1$.

It is clear that, as it stands, the maximum will not be achieved for finite $\alpha_1$, so a normalization constraint must be imposed. The constraint used in the derivation is $\alpha_1' \alpha_1 = 1$, that is, the sum of squares of elements of $\alpha_1$ equals 1. Other constraints may be more useful in other circumstances, and can easily be substituted later on. However, the use of constraints other than $\alpha_1' \alpha_1 = \text{constant}$ in the derivation leads to a more difficult optimization problem, and it will produce a set of derived variables different from the principal components.

To maximize $\alpha_1' \Sigma \alpha_1$ subject to $\alpha_1' \alpha_1 = 1$, the standard approach is to use the technique of Lagrange multipliers. Maximize

$\alpha_1' \Sigma \alpha_1 - \lambda (\alpha_1' \alpha_1 - 1)$,

where $\lambda$ is a Lagrange multiplier. Differentiation with respect to $\alpha_1$ gives

$\Sigma \alpha_1 - \lambda \alpha_1 = 0$, or $(\Sigma - \lambda I_p) \alpha_1 = 0$,

where $I_p$ is the $(p \times p)$ identity matrix. Thus, $\lambda$ is an eigenvalue of $\Sigma$ and $\alpha_1$ is the corresponding eigenvector. To decide which of the $p$ eigenvectors gives $\alpha_1' x$ with maximum variance, note that the quantity to be maximized is

$\alpha_1' \Sigma \alpha_1 = \alpha_1' \lambda \alpha_1 = \lambda$,

so $\lambda$ must be as large as possible. Thus, $\alpha_1$ is the eigenvector corresponding to the largest eigenvalue, and $\mathrm{var}[\alpha_1' x] = \alpha_1' \Sigma \alpha_1 = \lambda_1$, the](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-26-2048.jpg)

![

largest eigenvalue. In general, the $k$th PC of $x$ is $\alpha_k' x$ and

$\mathrm{var}[\alpha_k' x] = \lambda_k$ for $k = 1, 2, \ldots, p$,

where $\lambda_k$ is the $k$th largest eigenvalue of $\Sigma$ and $\alpha_k$ is the corresponding eigenvector.
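The result of this derivation can be checked numerically. The sketch below builds an arbitrary symmetric positive semi-definite matrix to play the role of the covariance matrix (the matrix, the random trial vectors, and the helper `projected_variance` are assumptions for illustration) and confirms that no unit vector gives a larger projected variance than the eigenvector of the largest eigenvalue.

```python
import numpy as np

rng = np.random.default_rng(2)
a = rng.standard_normal((4, 4))
sigma = a @ a.T                      # symmetric positive semi-definite stand-in for Sigma

eigenvalues, eigenvectors = np.linalg.eigh(sigma)   # returned in ascending order
alpha_1 = eigenvectors[:, -1]        # unit eigenvector of the largest eigenvalue
lambda_1 = eigenvalues[-1]

def projected_variance(alpha):
    """var[alpha' x] = alpha' Sigma alpha for a unit vector alpha."""
    return float(alpha @ sigma @ alpha)

# Compare against 1000 random unit vectors: none should exceed lambda_1.
trials = rng.standard_normal((1000, 4))
trials /= np.linalg.norm(trials, axis=1, keepdims=True)
best_random = max(projected_variance(v) for v in trials)
```

The normalization of the trial vectors corresponds to the unit-length constraint on the coefficient vector used in the derivation.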

Shlens (2009) derives an algebraic solution to PCA based on an important property of eigenvector decomposition. Once again, the data set is $X$, an $m \times n$ matrix, where $m$ is the number of measurement types and $n$ is the number of samples. The goal is summarized as follows: find some orthonormal matrix $P$ in $Y = PX$ such that $C_Y = \frac{1}{n} Y Y^T$ is a diagonal matrix. The rows of $P$ are the principal components of $X$. The derivation begins by rewriting $C_Y$ in terms of the unknown variable.](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-27-2048.jpg)
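The page that follows in the original is not reproduced here, so the steps below are a sketch of the standard argument rather than a quotation. It uses the symbols defined above, plus two introduced for this sketch: $C_X = \frac{1}{n} X X^T$ for the covariance of the data, and $C_X = E D E^T$ for its eigendecomposition, with the eigenvectors as the columns of $E$ and $D$ diagonal.

$$
\begin{aligned}
C_Y &= \frac{1}{n} Y Y^T = \frac{1}{n} (PX)(PX)^T = P \left( \frac{1}{n} X X^T \right) P^T = P\, C_X\, P^T \\
    &= P \left( E D E^T \right) P^T .
\end{aligned}
$$

Choosing $P = E^T$, and using $E^T E = I$ because the eigenvectors of a symmetric matrix can be taken orthonormal, gives

$$
C_Y = (E^T E)\, D\, (E^T E) = D ,
$$

so $C_Y$ is diagonal when the rows of $P$ are the eigenvectors of $C_X$.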

![

2.6 ADVANTAGES AND DISADVANTAGES OF PCA

IMPORTANCE OF PCA

Principal component analysis (PCA) is a standard tool in modern data analysis - in

diverse fields from neuroscience to computer graphics - because it is a simple, non-

parametric method for extracting relevant information from confusing data sets. With

minimal effort PCA provides a roadmap for how to reduce a complex data set to a lower

dimension to reveal the sometimes hidden, simplified structures that often underlie it.

(Shlens, 2009). The importance of PCA is manifested by its use in so many different fields of

science and life. PCA is widely used in neuroscience, for example. Other fields of

use are pattern recognition and image compression; PCA is therefore suited for use in

facial recognition software, for example, as well as for recognition and storage of other

biometric data. Many IT-related fields also use PCA, including artificial intelligence research.

According to Jolliffe (2002) PCA is also used in research of agriculture, biology,

chemistry, climatology, demography, ecology, food research, genetics, geology,

meteorology, oceanography, psychology, quality control, etc. But in this paper we are

going to focus more on uses in finance and economy. PCA has been used in economics

and finance to study changes in stock markets, commodity markets, economic growth,

exchange rates, etc. Earlier studies were done in economics, but stock markets were also

under research as early as the 1960s. Lessard (1973) claims that “principal component or

factor analysis have been used in several recent empirical studies (Farrar [1962], King

[1967], and Feeney and Hester [1967]) concerned with the existence of general

movements in the returns from common stocks.” PCA has mostly been used to compare

different stock markets in search for diversification opportunities, especially in earlier

studies like the ones by Makridakis (1974) and by Phillipatos et al. (1983).

BENEFITS OF PCA

PCA is a special case of Factor Analysis that is highly useful in the analysis of many time

series and the search for patterns of movement common to several series (true factor

analysis makes different assumptions about the underlying structure and solves](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-28-2048.jpg)

![

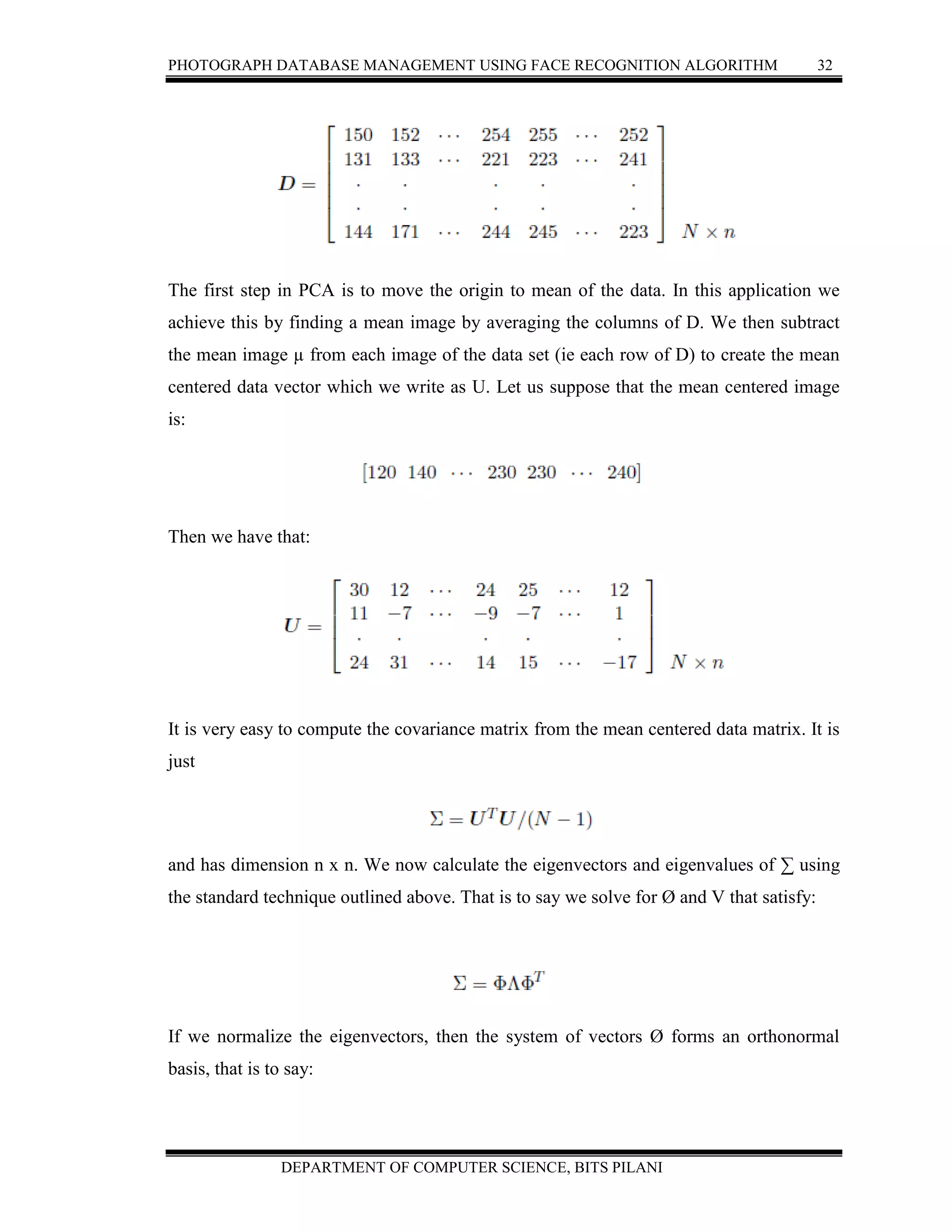

2.8 PCA VERSUS 2DPCA

In the PCA-based face-recognition technique, the 2D face-image matrices must be

previously transformed into 1D image vectors. The resulting image vectors usually lead

to a high dimensional image vector space in which it’s difficult to evaluate the covariance

matrix accurately due to its large size and relatively few training samples. Fortunately,

we can calculate the eigenvectors (eigenfaces) efficiently using singular value

decomposition (SVD) techniques, which avoid the process of generating the covariance

matrix. Unlike conventional PCA, 2DPCA is based on 2D matrices rather than 1D

vectors. That is, the image matrix doesn’t need to be previously transformed into a

vector. Instead, an image covariance matrix can be constructed directly using the original

image matrices. In contrast to PCA’s covariance matrix, the image covariance matrix’s

size using 2DPCA is much smaller. As a result, 2DPCA has two important advantages

over PCA. First, it’s easier to evaluate the covariance matrix accurately. Second, less

time is required to determine the corresponding eigenvectors. We extract the features

from the 2DPCA matrix using the optimal projection vector axes. The feature matrix's size is

given by the image's height and the number of projection axes d. If the image size is 112 × 92,

for example, then the number of coefficients is 112 × d. Researchers have demonstrated

experimentally that d should be set to no less than 5 to achieve satisfactory accuracy. This leads to a

large number of coefficients (at least 112 × 5 = 560 for this image size).
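To make the size comparison concrete, here is a minimal 2DPCA sketch. It assumes the usual formulation in which the image covariance matrix is the average of AᵀA over mean-centered image matrices A and features are obtained as A times the top d eigenvectors; the function name `two_d_pca` and the random stand-in images are hypothetical.

```python
import numpy as np

def two_d_pca(images, d):
    """images: (count, height, width) array. Returns (width, d) projection axes."""
    mean_image = images.mean(axis=0)
    centered = images - mean_image
    # Image covariance matrix: average of A^T A over the centered images.
    # Its size is width x width, independent of the image height.
    g = np.einsum('khi,khj->ij', centered, centered) / len(images)
    eigenvalues, eigenvectors = np.linalg.eigh(g)      # ascending eigenvalues
    axes = eigenvectors[:, ::-1][:, :d]                # top-d eigenvectors
    return axes, g

# Ten random 112 x 92 arrays stand in for face images (synthetic data).
rng = np.random.default_rng(3)
images = rng.random((10, 112, 92))

axes, g = two_d_pca(images, d=5)
features = images[0] @ axes        # 112 x 5 feature matrix for one image
```

Here the matrix to decompose is 92 × 92, whereas vectorizing the same images for conventional PCA would give a covariance matrix of size 10304 × 10304 (112 × 92 = 10304).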

Figure 2.8 Examples from the faces dataset[12]](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-32-2048.jpg)

![

2.10 HAAR FEATURE ALGORITHMS

Detecting human facial features, such as the mouth, eyes, and nose, requires that Haar

classifier cascades first be trained. In order to train the classifiers, the Gentle AdaBoost

algorithm and Haar feature algorithms must be implemented. Fortunately, Intel developed

an open source library devoted to easing the implementation of computer vision related

programs called Open Computer Vision Library (OpenCV). The OpenCV library is

designed to be used in conjunction with applications that pertain to the field of HCI,

robotics, biometrics, image processing, and other areas where visualization is important

and includes an implementation of Haar classifier detection and training [8]. To train the

classifiers, two sets of images are needed. One set contains images or scenes that do not

contain the object, in this case a facial feature, which is going to be detected. This set of

images is referred to as the negative images. The other set of images, the positive images,

contain one or more instances of the object. The location of the objects within the

positive images is specified by: image name, the upper left pixel and the height, and

width of the object [21]. To train the facial feature classifiers, 5,000 negative images with at least

mega-pixel resolution were used. These images consisted of everyday

objects, like paperclips, and of natural scenery, like photographs of forests and

mountains. In order to produce the most robust facial feature detection possible, the

original positive set of images needs to be representative of the variance between

different people, including race, gender, and age. A good source for these images is the

National Institute of Standards and Technology’s (NIST) Facial Recognition Technology

(FERET) database. This database contains over 10,000 images of over 1,000 people

under different lighting conditions, poses, and angles. In training each facial feature,

1,500 images were used. These images were taken at angles ranging from zero to forty

five degrees from a frontal view. This provides the needed variance required to allow

detection if the head is turned slightly [22]. Three separate classifiers were trained, one

for the eyes, one for the nose, and one for the mouth. Once the classifiers were trained,

they were used to detect the facial features within another set of images from the FERET

database.](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-34-2048.jpg)

![

The accuracy of the classifier was then computed as shown in Table 1. With the

exception of the mouth classifier, the classifiers have a high rate of detection. However,

as implied by [23], the false positive rate is also quite high.

2.11 REGIONALIZED DETECTION

Since it is not possible to reduce the false positive rate of the classifier without reducing

the positive hit rate, a method besides modifying the classifier training attribute is needed

to increase accuracy [24]. The method proposed is to limit the region of the image that

is analyzed for the facial features. By reducing the area analyzed, accuracy will increase

since less area exists to produce false positives. It also increases efficiency since fewer

features need to be computed and the area of the integral images is smaller. In order to

regionalize the image, one must first determine the likely area where a facial feature

might exist. The simplest method is to perform facial detection on the image first. The

area containing the face will also contain facial features.

However, the facial feature cascades often detect other facial features as illustrated in

Figure 2.8. The best method to eliminate extra feature detection is to further regionalize

the area for facial feature detection. It can be assumed that the eyes will be located near

the top of the head, the nose will be located in the center area and the mouth will be

located near the bottom. The upper 5/8 of the face is analyzed for the eyes. This area

eliminates all other facial features while still allowing a wide variance in the tilt angle.

The center of the face, an area that is 5/8 by 5/8 of the face, was used for detection of

the nose. This area eliminates all but the upper lip of the mouth and lower eyelid. The

lower half of the facial image was used to detect the mouth. Since the facial detector used

sometimes eliminates the lower lip, the facial image was extended by an eighth for mouth

detection only.](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-35-2048.jpg)
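The regionalization rules above amount to simple box arithmetic on the detected face rectangle. The sketch below is one possible reading of them: the helper name `feature_regions`, the (x, y, width, height) box convention with the origin at the top-left, the centering of the nose region, and extending the mouth region downward by one eighth of the face height are all assumptions, and clipping to the image border is not handled.

```python
def feature_regions(x, y, w, h):
    """Return (x, y, w, h) search boxes inside a detected face box."""
    # Eyes: upper 5/8 of the face.
    eyes = (x, y, w, h * 5 // 8)

    # Nose: a centered area that is 5/8 by 5/8 of the face.
    nose_w, nose_h = w * 5 // 8, h * 5 // 8
    nose = (x + (w - nose_w) // 2, y + (h - nose_h) // 2, nose_w, nose_h)

    # Mouth: lower half, extended downward by one eighth of the face height
    # to recover a lower lip cut off by the face detector.
    mouth = (x, y + h // 2, w, h // 2 + h // 8)

    return {"eyes": eyes, "nose": nose, "mouth": mouth}

# Example: a 160 x 160 face box whose top-left corner is at (40, 30).
regions = feature_regions(40, 30, 160, 160)
```

Each feature classifier would then be run only on its own box, which reduces both the number of features computed and the area available for false positives.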

![

2.12 COMPUTATIONS AND EXAMPLES

Principal Component Analysis, or simply PCA, is a statistical procedure concerned with

elucidating the covariance structure of a set of variables. In particular it allows us to

identify the principal directions in which the data varies.

For example, in figure 2.12.a, suppose that the triangles represent a two variable data set

which we have measured in the X-Y coordinate system. The principal direction in which

the data varies is shown by the U axis and the second most important direction is the V

axis orthogonal to it. If we place the U V axis system at the mean of the data it gives us a

compact representation. If we transform each (X, Y) coordinate into its corresponding

(U, V) value, the data is de-correlated, meaning that the covariance between the U and

V variables is zero. For a given set of data, principal component analysis finds the axis

system defined by the principal directions of variance (i.e., the U V axis system in figure

2.12.a). The directions U and V are called the principal components.

Figure 2.12(a) PCA for Data Representation[3]](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-36-2048.jpg)

![

Figure 2.12(b) PCA for Dimension Reduction[3]

If the variation in a data set is caused by some natural property, or is caused by random

experimental error, then we may expect it to be normally distributed. In this case we

show the nominal extent of the normal distribution by a hyper-ellipse (the two

dimensional ellipse in the example). The hyper ellipse encloses data points that are

thought of as belonging to a class. It is drawn at a distance beyond which the probability

of a point belonging to the class is low, and can be thought of as a class boundary.

If the variation in the data is caused by some other relationship then PCA gives us a way

of reducing the dimensionality of a data set. Consider two variables that are nearly related

linearly as shown in figure 2.12.b. As in figure 2.12.a the principal direction in which the

data varies is shown by the U axis, and the secondary direction by the V axis. However, in

this case the V coordinates are all very close to zero. We may assume, for example,

that they are only non-zero because of experimental noise. Thus in the U V axis system

we can represent the data set by one variable U and discard V. Thus we have reduced the

dimensionality of the problem by 1.
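A short numerical sketch of this reduction follows. The data are synthetic (a hypothetical nearly linear relation y ≈ 0.5x with small noise), and the variable names are chosen for the example; the point is only that keeping U and discarding V loses very little.

```python
import numpy as np

rng = np.random.default_rng(4)
x = rng.uniform(-5.0, 5.0, 200)
y = 0.5 * x + 0.05 * rng.standard_normal(200)    # nearly linear relation plus noise
points = np.column_stack([x, y])                 # one (X, Y) point per row

mean = points.mean(axis=0)
eigenvalues, eigenvectors = np.linalg.eigh(np.cov(points - mean, rowvar=False))
u_axis = eigenvectors[:, -1]          # principal direction (largest eigenvalue)

# Keep only the U coordinate of each point; the V coordinate is discarded.
u = (points - mean) @ u_axis

# Map the one-dimensional representation back to (X, Y) to measure the loss.
reconstructed = mean + np.outer(u, u_axis)
max_error = float(np.abs(points - reconstructed).max())
```

The smaller eigenvalue is the variance along V; when it is tiny compared with the variance along U, as here, one variable describes the data almost completely.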

COMPUTING THE PRINCIPAL COMPONENTS

In computational terms the principal components are found by calculating the

eigenvectors and eigenvalues of the data covariance matrix. This process is equivalent to

finding the axis system in which the co-variance matrix is diagonal. The eigenvector with](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-37-2048.jpg)

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 30

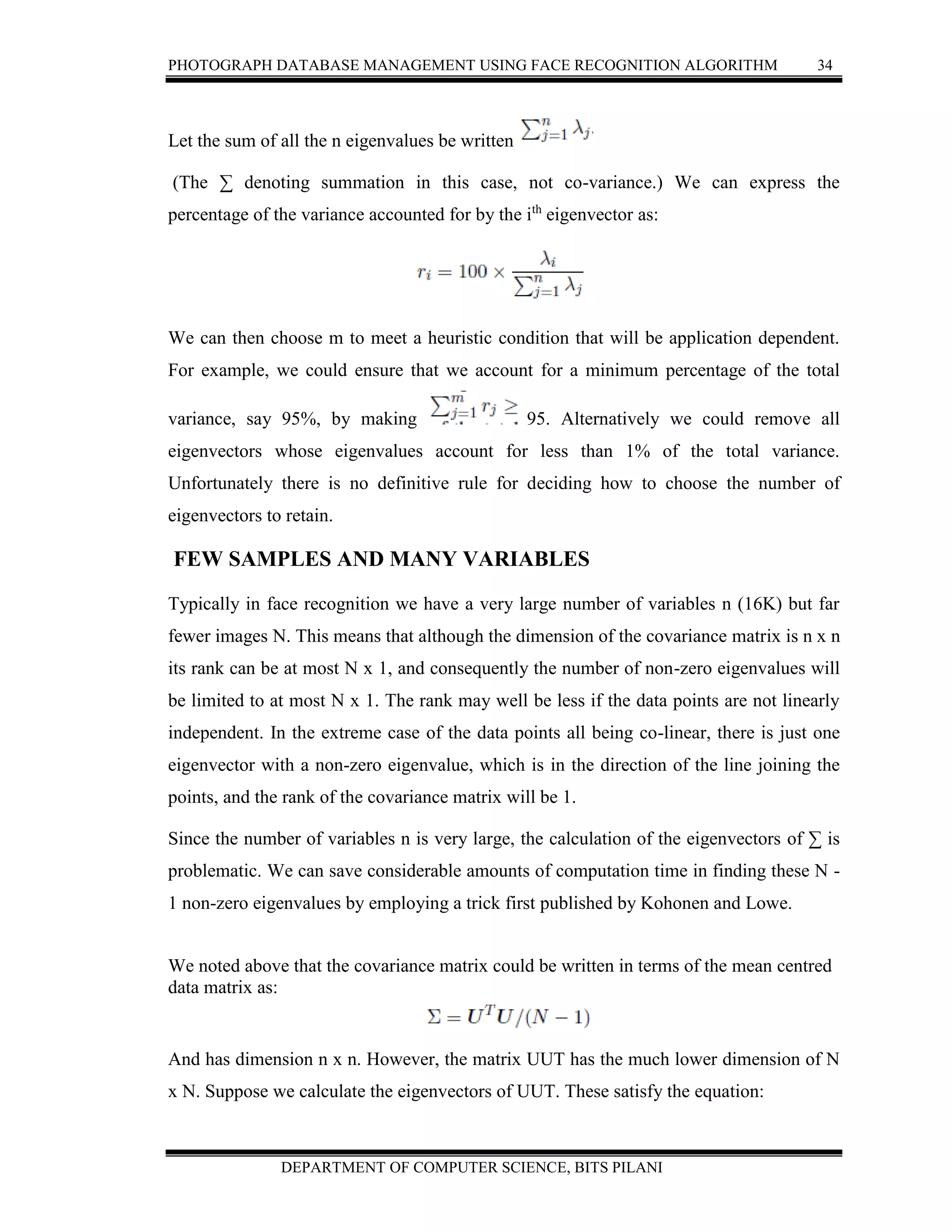

PCA IN PRACTICE

Figure 2.12 (c) The PCA Transformation[3]

Figure 2.12(c) gives a geometric illustration of the process in two dimensions. Using all the data points, we find the mean values of the variables $(\mu_{x1}, \mu_{x2})$ and the covariance matrix $\Sigma$, which is a $2 \times 2$ matrix in this case. If we calculate the eigenvectors of the covariance matrix, we get the direction vectors indicated by $\phi_1$ and $\phi_2$. Putting the two eigenvectors as columns in the matrix $\Phi = [\phi_1, \phi_2]$, we create a transformation matrix which takes our data points from the $[x_1, x_2]$ axis system to the $[\phi_1, \phi_2]$ axis system with the equation:

$$p_\phi = (p_x - \mu_x) \cdot \Phi$$

where $p_x$ is any point in the $[x_1, x_2]$ axis system, $\mu_x = (\mu_{x1}, \mu_{x2})$ is the data mean, and $p_\phi$ is the coordinate of the point in the $[\phi_1, \phi_2]$ axis system.

PCA Based Face Recognition

Face recognition is one example where principal component analysis has been

extensively used, primarily for reducing the number of variables. Let us consider the 2D](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-40-2048.jpg)

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 31

case where we have an input image and wish to compare it with a set of database images to find the best match. We assume that the images are all of the same resolution and are all equivalently framed (i.e., the faces appear at the same place and the same scale in the images). Each pixel can be considered a variable, so we have a very high-dimensional problem which can be simplified by PCA.

Formally, in image recognition an input image with n pixels can be treated as a point in an n-dimensional space called the image space. The individual ordinates of this point represent the intensity values of each pixel of the image and form a row vector $p_x = (i_1, i_2, i_3, \ldots, i_n)$. This vector is formed by concatenating each row of image pixels, so for a modest-sized image, say of 128 by 128 resolution, it will have dimension 16384. For example, the pixel grid of an image becomes the vector:

$[150, 152, 151, 131, 133, 72, 144, 171, 67]_{16K}$
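The row-by-row concatenation can be sketched in a few lines of Python. The 3 by 3 grid below is a hypothetical arrangement of the intensities quoted above, and `flatten_image` is an illustrative name.

```python
def flatten_image(image):
    """Concatenate the rows of a 2D image into a single row vector."""
    width = len(image[0])
    if any(len(row) != width for row in image):
        raise ValueError("all image rows must have the same length")
    return [pixel for row in image for pixel in row]

# Hypothetical 3x3 image built from the intensities quoted in the text.
image = [
    [150, 152, 151],
    [131, 133, 72],
    [144, 171, 67],
]
p_x = flatten_image(image)

# A 128 by 128 image yields a vector with this many entries.
n_pixels_128 = 128 * 128
```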

Clearly this number of variables is far more than is required for the problem. Most of the

image pixels will be highly correlated. For example if the background pixels are all equal

then adjacent background pixels are exactly correlated. Thus we need to consider how to

achieve a reduction in the number of the variables.

DIMENSION REDUCTION

Let us consider an application where we have N images, each with n pixels. We can write our entire data set as an $N \times n$ data matrix D. Each row of D represents one image of our data set. For example we may have:](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-41-2048.jpg)

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 33

It is in effect an axis system in which we can represent our data in a compact form. We can achieve size reduction by choosing to represent our data with fewer dimensions. Normally we choose to use the set of m (m ≤ n) eigenvectors of $\Sigma$ which have the m largest eigenvalues. Typically, for a face recognition system m will be quite small (around 20-50). We can compose these in an $n \times m$ matrix $\Phi_{pca} = [\phi_1, \phi_2, \phi_3, \ldots, \phi_m]$ which performs the PCA projection. For any given image $p_x = (i_1, i_2, i_3, \ldots, i_n)$ we can find a corresponding point in the PCA space by computing

$$p_\phi = (p_x - \mu_x) \cdot \Phi_{pca}$$

The m-dimensional vector $p_\phi$ is all we need to represent the image. We have achieved a massive reduction in data size, since typically n will be at least 16K and m as small as 20. We can store all our database images in the PCA space and can easily search the database to find the closest match to a test image. We can also reconstruct (an approximation of) any image with the inverse transform:

$$p_x = (p_\phi \cdot \Phi_{pca}^T) + \mu_x$$

It can be shown that choosing the m eigenvectors of $\Sigma$ that have the largest eigenvalues minimizes the mean square reconstruction error over all choices of m orthonormal basis vectors.

Clearly we would like m to be as small as possible compatible with accurate recognition and reconstruction, but this problem is data dependent. We can make a decision on the basis of the amount of the total variance accounted for by the m principal components that we have chosen. This can be assessed by looking at the eigenvalues.](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-43-2048.jpg)
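One way to turn the eigenvalues into a choice of m is to keep the smallest number of components whose eigenvalues add up to a target share of the total variance. The sketch below assumes this rule; the target values and the eigenvalue spectrum are hypothetical, and `components_for_variance` is an illustrative name.

```python
def components_for_variance(eigenvalues, target=0.95):
    """Smallest m whose m largest eigenvalues explain at least `target` of the variance."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    if total <= 0:
        raise ValueError("eigenvalues must have a positive sum")
    running = 0.0
    for m, value in enumerate(ordered, start=1):
        running += value
        if running / total >= target:
            return m
    return len(ordered)

# Hypothetical eigenvalue spectrum of a covariance matrix.
eigenvalues = [50.0, 25.0, 15.0, 6.0, 3.0, 1.0]
m_85 = components_for_variance(eigenvalues, target=0.85)
m_95 = components_for_variance(eigenvalues, target=0.95)
```

With this spectrum, three components cover 90% of the variance and four cover 96%, so a stricter target costs one extra dimension.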

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 35

If we multiply both sides of this equation by $U^T$ we get:

$$U^T U U^T \phi' = \lambda' U^T \phi'$$

Adding brackets to this equation:

$$(U^T U)(U^T \phi') = \lambda' (U^T \phi')$$

makes it clear that the vectors $U^T \phi'$ are eigenvectors of $U^T U$, which is what we wanted to find. Since $U U^T$ has dimension N × N and is a considerably smaller matrix than $U^T U$ (which is n × n), we have solved the much smaller eigenvector problem, and can calculate the N − 1 eigenvectors of $U^T U$ with one further matrix multiplication. $U U^T$ has at most N − 1 eigenvectors with non-zero eigenvalues, but, as noted above, all other eigenvectors of $U^T U$ have zero eigenvalues. The resulting eigenvectors are not orthonormal, and so should be normalized.

The method of PCA is sometimes also called the Karhunen-Loève transform, and occasionally the Hotelling transform. It is closely related to the technique called singular value decomposition, a more general diagonalization method in which an n × m matrix, which need not be square, is reduced to a diagonal form.
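The saving can be checked numerically with a small pure-Python sketch. Everything here is illustrative: the 3 by 6 data matrix is made up, power iteration is used only as a simple stand-in for a proper eigen-solver, and the large n × n matrix is built solely to verify the result.

```python
import math

def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

def top_eigenpair(matrix, iterations=500):
    """Power iteration: dominant eigenvalue and unit eigenvector of a symmetric matrix."""
    size = len(matrix)
    vec = [1.0 / (i + 1) for i in range(size)]  # arbitrary non-zero start vector
    for _ in range(iterations):
        nxt = [sum(matrix[i][j] * vec[j] for j in range(size)) for i in range(size)]
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    mv = [sum(matrix[i][j] * vec[j] for j in range(size)) for i in range(size)]
    return sum(v * m for v, m in zip(vec, mv)), vec

# Hypothetical data: N = 3 images with n = 6 pixels each.
D = [[2.0, 4.0, 6.0, 8.0, 1.0, 3.0],
     [1.0, 3.0, 5.0, 9.0, 2.0, 2.0],
     [3.0, 5.0, 4.0, 7.0, 0.0, 5.0]]
N, n = len(D), len(D[0])
mean = [sum(row[j] for row in D) / N for j in range(n)]
U = [[row[j] - mean[j] for j in range(n)] for row in D]  # N x n, mean-centred

small = matmul(U, transpose(U))          # U U^T is only N x N
lam, phi_small = top_eigenpair(small)

# One further multiplication by U^T gives an eigenvector of U^T U; then normalize.
phi = [sum(U[i][j] * phi_small[i] for i in range(N)) for j in range(n)]
norm = math.sqrt(sum(x * x for x in phi))
phi = [x / norm for x in phi]

# Verification only: build the large n x n matrix and check (U^T U) phi = lam * phi.
big = matmul(transpose(U), U)
residual = max(abs(sum(big[i][j] * phi[j] for j in range(n)) - lam * phi[i])
               for i in range(n))
```

In a real system with n in the thousands, only `small` would ever be formed; `big` appears here just to confirm that `residual` is negligible.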

CORRESPONDENCE IN PCA

In 2D face images the pixels are not usually in correspondence. That is to say a given

pixel [xi; yi] may be part of the cheek in one image, part of the hair in another and so on.

This causes a lot of problems in face recognition and also in reconstructing faces. This

means that any linear combination of eigenfaces does not represent a true face but a

composition of face parts. A true face is only created when the eigenfaces are added

together in exactly the right proportions to re-create one of the original face images of the

training set.

However, if we represent a face in 3D, then it is possible to establish a correspondence

between each point on the surface map. We can organize the data in such a way that each

anatomical location (for example the tip of the nose) has the same index in each different](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-45-2048.jpg)

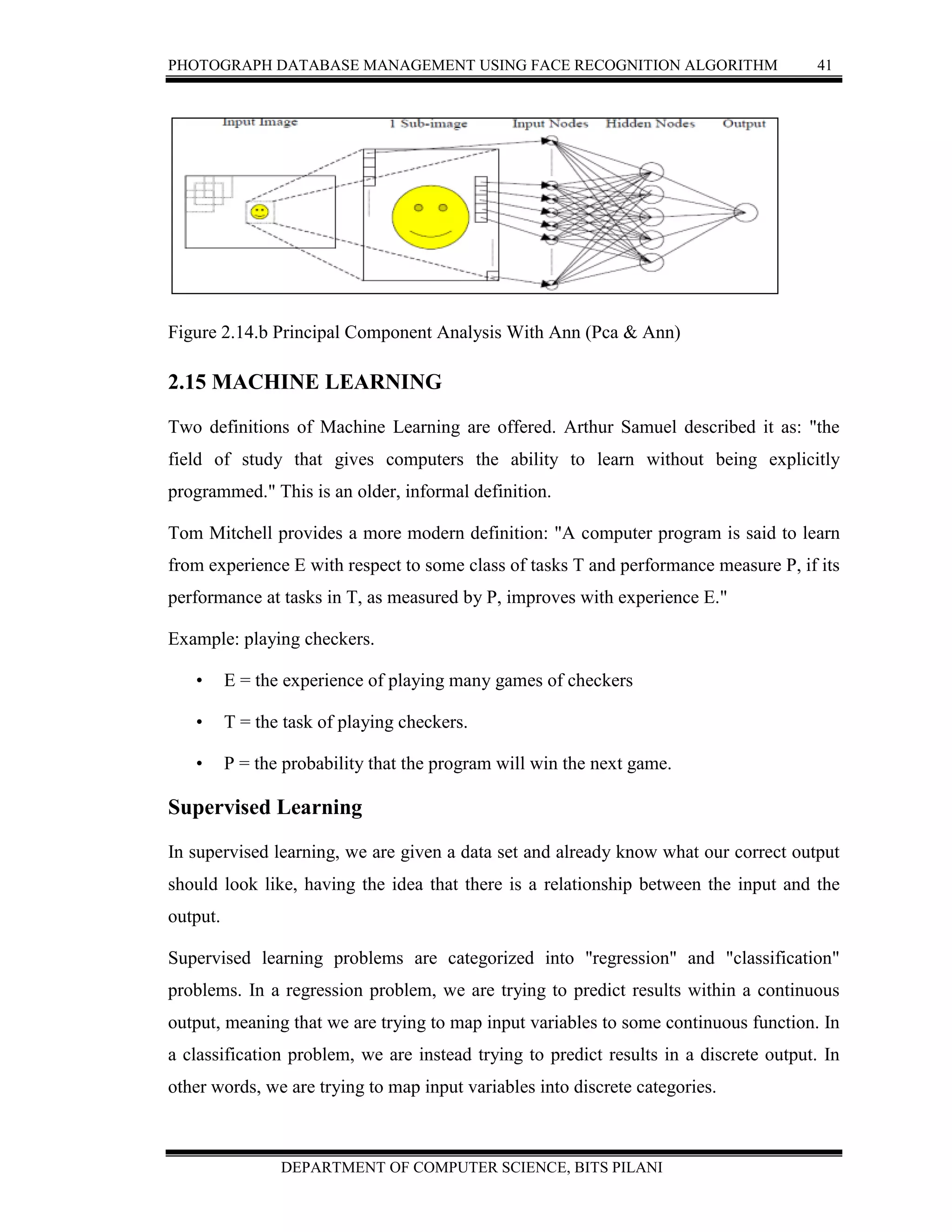

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 36

face data set. Figure 2.12.d shows two different faces whose surface points are in correspondence.

Figure 2.12.d 3D surfaces in correspondence [7]

PCA can be carried out on 3D surface maps of faces (and other anatomical structures).

The variables are the individual coordinates of the surface points. A data set is of the form:

$[x_1, y_1, z_1, x_2, y_2, z_2, \ldots, x_N, y_N, z_N]$

The face maps in Figure 2.12.d have about 5,000 surface points, hence the number of variables is 15,000.

If the points of each subject are in correspondence, and the different subjects are aligned as closely as possible in 3D before calculating the PCA, then any reasonable combination of the eigenvectors will represent a valid face. This means that PCA on 3D surface maps has the potential for constructing and manipulating faces that are different from any example in the training set. This has many applications in film and media. The set of eigenvectors representing surface data in this manner is called an active shape model](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-46-2048.jpg)

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 37

Texture can be mapped onto a 3D surface map to give it a realistic face appearance. The

texture values (like pixel values in 2D) can also be included as variables in PCA. Models

of this sort are called active appearance models.

2.13 RESULTS

The first step in facial feature detection is detecting the face, which requires analyzing the entire image. The second step is using the isolated face(s) to detect each feature. The result is shown in Figure 2.12.d. Since the portion of the image used to detect each feature is much smaller than the whole image, detection of all three facial features takes less time on average than detecting the face itself. Using a 1.2 GHz AMD processor to analyze a 320 by 240 image, a frame rate of 3 frames per second was achieved. Since a frame rate of 5 frames per second was achieved for face detection alone by [25] using a much faster processor, regionalization provides a tremendous increase in efficiency in facial feature detection.

Regionalization also greatly increased the accuracy of the detection. All false positives were eliminated, giving a detection rate of around 95% for the eyes and nose. Mouth detection has a lower rate because of the minimum size required for detection. Changing the height and width parameters to represent the dimensions of the mouth more accurately and retraining the classifier should raise its accuracy to that of the other features.
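The efficiency argument can be made concrete with a small Python sketch of regionalization. The region fractions (upper half for the eyes, central block for the nose, lower half for the mouth) and the face box are assumptions chosen for illustration; the text does not give the regions actually used.

```python
def feature_regions(face):
    """Split a face box (x, y, w, h) into one search region per feature.

    The fractions are illustrative assumptions, not values from the text.
    """
    x, y, w, h = face
    return {
        "eyes": (x, y, w, h // 2),                          # upper half
        "nose": (x + w // 4, y + h // 4, w // 2, h // 2),   # central block
        "mouth": (x, y + h // 2, w, h - h // 2),            # lower half
    }

def area(box):
    """Pixel area of an (x, y, w, h) box."""
    return box[2] * box[3]

frame = (0, 0, 320, 240)      # the 320 by 240 image mentioned above
face = (100, 60, 120, 120)    # hypothetical detected face
regions = feature_regions(face)
searched = sum(area(box) for box in regions.values())
fraction = searched / area(frame)
```

Under these assumptions the three feature detectors together scan less than a quarter of the pixels that the face detector has to examine.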

2.14 ARTIFICIAL NEURAL NETWORKS FOR FACE DETECTION

In recent years, different architectures and models of artificial neural networks (ANN) have been used for face detection and recognition, largely because these models can simulate the way neurons work in the human brain. ANNs have also been widely used in image processing (compression, recognition and encryption) and pattern recognition. Many studies have used different ANN architectures and models for face detection and recognition](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-47-2048.jpg)

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 38

to achieve better compression performance according to: compression ratio (CR); reconstructed image quality, such as peak signal-to-noise ratio (PSNR); and mean square error (MSE). Few literature surveys give an overview of research on face detection based on ANN. Therefore, this research includes a survey of the literature on face detection systems and approaches that are based on ANN.

RETINAL CONNECTED NEURAL NETWORK (RCNN)

Rowley, Baluja and Kanade (1996) [27] presented a face detection system based on a retinal connected neural network (RCNN) that examines small windows of an image to decide whether each window contains a face. Figure 2.14.a shows this approach. The system arbitrates between multiple networks to improve performance over a single network. As training progresses, a bootstrap algorithm adds false detections into the training set. This eliminates the difficult task of manually selecting non-face training examples, which must be chosen to span the entire space of non-face images. First, a pre-processing step, adapted from [28], is applied to a window of the image. The window is then passed through a neural network, which decides whether the window contains a face. They used three test sets of images. Test Set A, collected at CMU, consists of 42 scanned photographs, newspaper pictures, images collected from the WWW, and TV pictures (169 frontal views of faces, requiring the ANN to examine 22,053,124 20×20 pixel windows). Test Set B consists of 23 images containing 155 faces (9,678,084 windows). Test Set C is similar to Test Set A, but contains images with more complex backgrounds and without any faces to measure the false detection rate: it contains 65 images, 183 faces, and 51,368,003 windows. The detection rate of this approach equals](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-48-2048.jpg)

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 39

79.6% of faces over two large test sets, with a small number of false positives.

Figure 2.14.a Retinal Connected Neural Network (Rcnn)

ROTATION INVARIANT NEURAL NETWORK (RINN)

Rowley, Baluja and Kanade (1997) [29] presented a neural network-based face detection system. Unlike similar systems, which are limited to detecting upright, frontal faces, this system detects faces at any degree of rotation in the image plane. Figure 2.14.b shows the RINN approach. The system employs multiple networks: the first is a “router” network which processes each input window to determine its orientation and then uses this information to prepare the window for one or more detector networks. The authors present the training methods for both types of networks, perform a sensitivity analysis on the networks, and report empirical results on a large test set. Finally, they present preliminary results for detecting faces which are rotated out of the image plane, such as profiles and semi-profiles [29].](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-49-2048.jpg)

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 40

Figure 2.14.b Rotation Invariant Neural Network (Rinn)

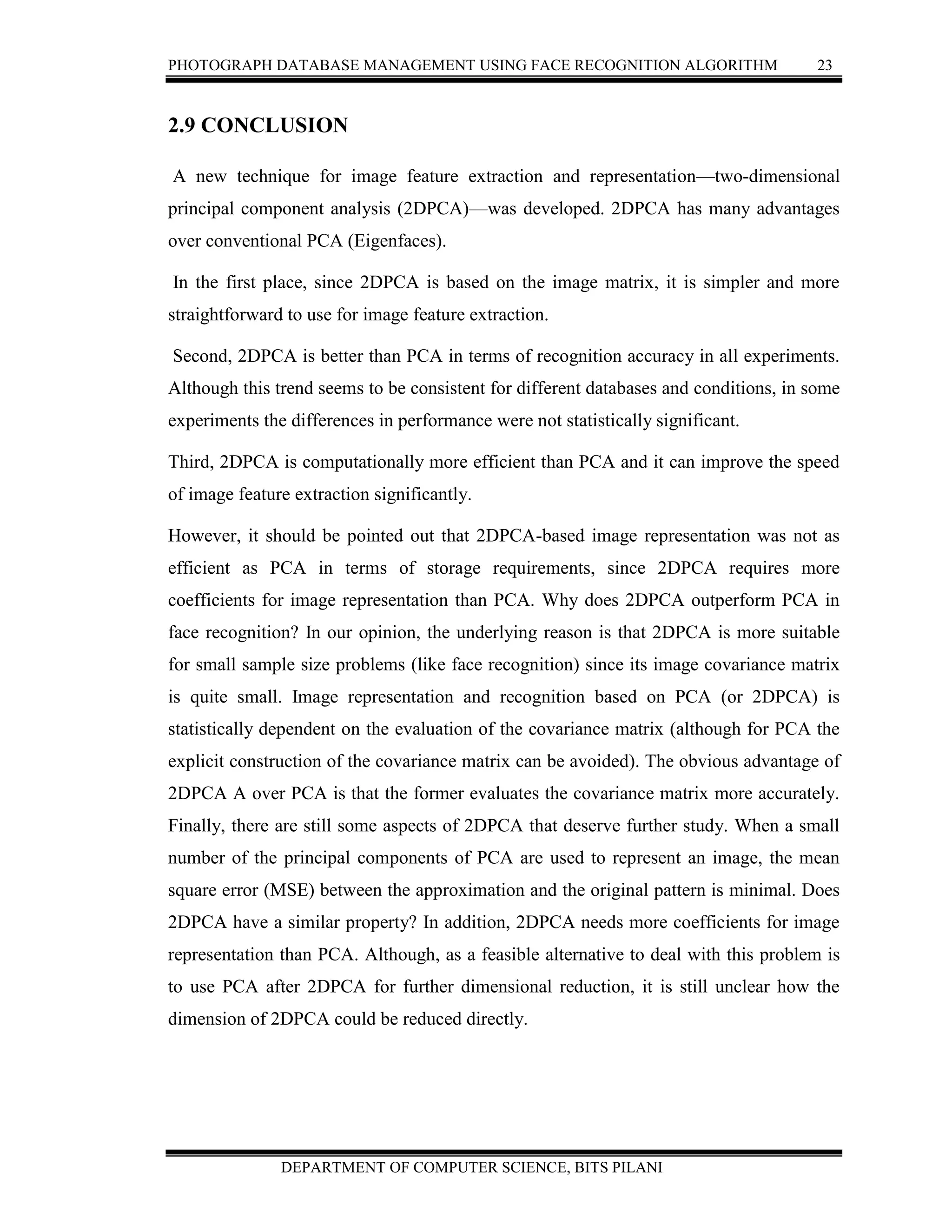

PRINCIPAL COMPONENT ANALYSIS WITH ANN (PCA & ANN)

Jeffrey Norris (1999) [30] used principal component analysis (PCA) with class-specific linear projection to detect and recognize faces in a real-time video stream. Figure 5 shows PCA & ANN for face detection. The system sends commands to an automatic sliding door, speech synthesizer, and touchscreen through a multi-client door control server. Matlab, C, and Java were used to develop the system. The system takes the following steps to search for a face in an image:

1. Select every 20×20 region of the input image.

2. Use the intensity values of its pixels as 400 inputs to the ANN.

3. Feed the values forward through the ANN.

4. If the output value is above 0.5, the region represents a face.

5. Repeat steps 1-4 several times, each time on a resized version of the original input image, to search for faces at different scales [30].](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-50-2048.jpg)
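The search steps listed above can be sketched as a sliding-window loop over an image pyramid. This is a minimal Python illustration, not Norris's implementation: the trained ANN is replaced by a placeholder function `mean_brightness`, the resize is a crude subsampling by a factor of 2, and the test image is synthetic.

```python
def sliding_windows(image, size=20):
    """Yield (row, col, pixels) for every size x size region of the image."""
    height, width = len(image), len(image[0])
    for r in range(height - size + 1):
        for c in range(width - size + 1):
            pixels = [image[r + i][c + j] for i in range(size) for j in range(size)]
            yield r, c, pixels  # 400 values when size is 20

def downscale(image, factor=2):
    """Crude resize that keeps every factor-th pixel in each direction."""
    return [row[::factor] for row in image[::factor]]

def detect_faces(image, classify, size=20, threshold=0.5):
    """Return (scale, row, col) for each window whose output exceeds the threshold."""
    hits, scale = [], 1
    while len(image) >= size and len(image[0]) >= size:
        for r, c, pixels in sliding_windows(image, size):
            if classify(pixels) > threshold:
                hits.append((scale, r, c))
        image, scale = downscale(image), scale * 2  # search again at a coarser scale
    return hits

def mean_brightness(pixels):
    """Placeholder for a trained ANN: mean intensity scaled to the range 0..1."""
    return sum(pixels) / (255.0 * len(pixels))

# Synthetic 40x40 image: dark, with a bright 20x20 patch in the top-left corner.
image = [[255 if r < 20 and c < 20 else 0 for c in range(40)] for r in range(40)]
hits = detect_faces(image, mean_brightness)
window_count = sum(1 for _ in sliding_windows(image))
```

Even this small image produces 441 windows at the original scale, which gives a sense of why the test sets described earlier require millions of window evaluations.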

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 45

faces. These approaches are more appropriate for our specific case since they make use of

a template (e.g., the shape of an object). Among the early deformable template models is

the Active Contour Model by Kass et al. [47] in which a correlation structure between

shape markers is used to constrain local changes. Cootes et al. [48] proposed a

generalized extension, namely Active Shape Models (ASM), where deformation

variability is learned using a training set. Active Appearance Models (AAM) were later

proposed in [49] and they are closely related to the simultaneous formulation of Active

Blobs [50] and Morphable Models [51]. AAM can be seen as an extension of ASM which

includes the appearance information of an object.

EMOTION RECOGNITION RESEARCH

Ekman and Friesen [52] developed the Facial Action Coding System (FACS) to code

facial expressions where movements on the face are described by a set of action units

(AUs). Each AU has some related muscular basis. This system of coding facial

expressions is done manually by following a set of prescribed rules. The inputs are still

images of facial expressions, often at the peak of the expression. This process is very

time-consuming. Ekman’s work inspired many researchers to analyze facial expressions

by means of image and video processing. By tracking facial features and measuring the

amount of facial movement, they attempt to categorize different facial expressions.

Recent work on facial expression analysis and recognition has used these “basic expressions” or a subset of them. Two recent surveys in the area [53, 54] provide an in-depth review of much of the research on automatic facial expression recognition in recent years.](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-55-2048.jpg)

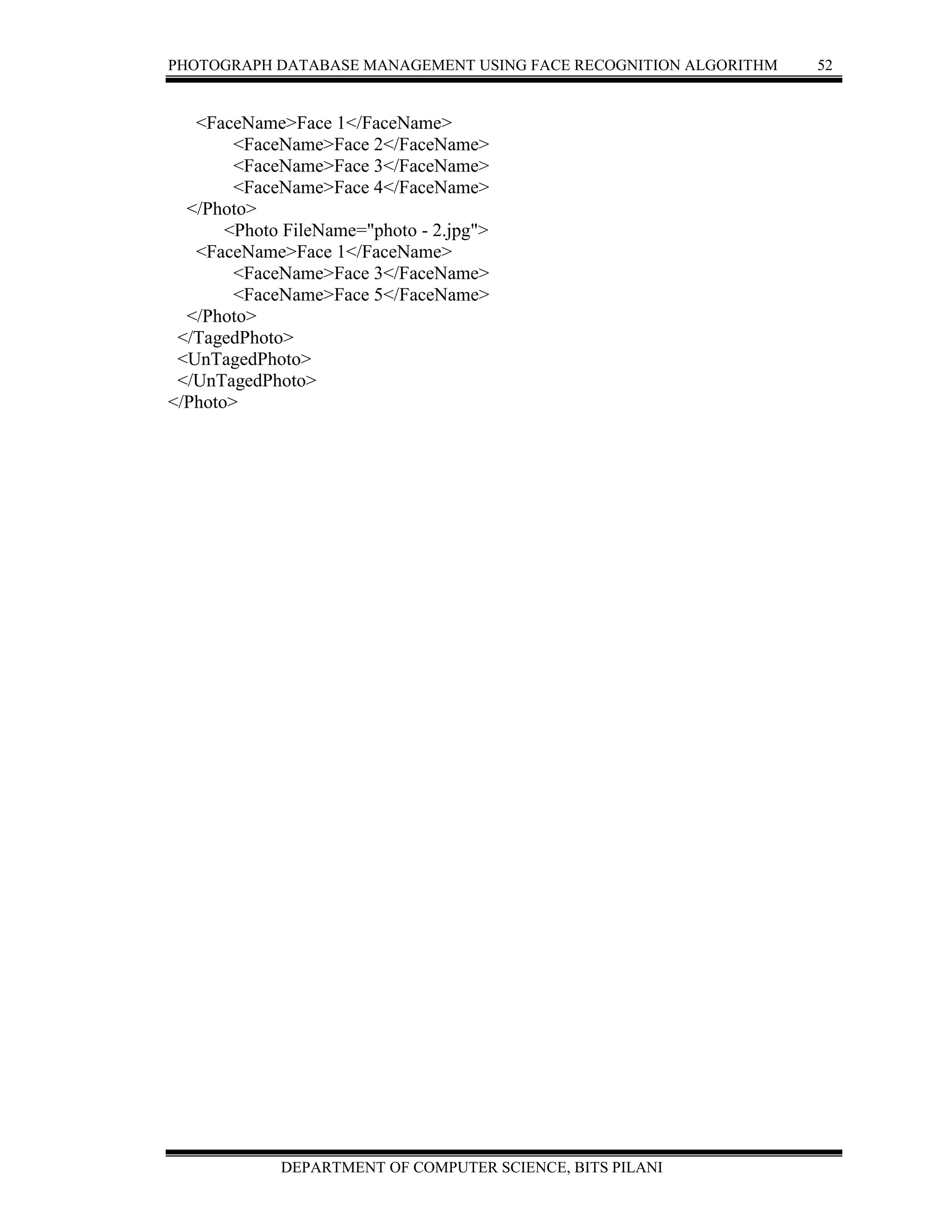

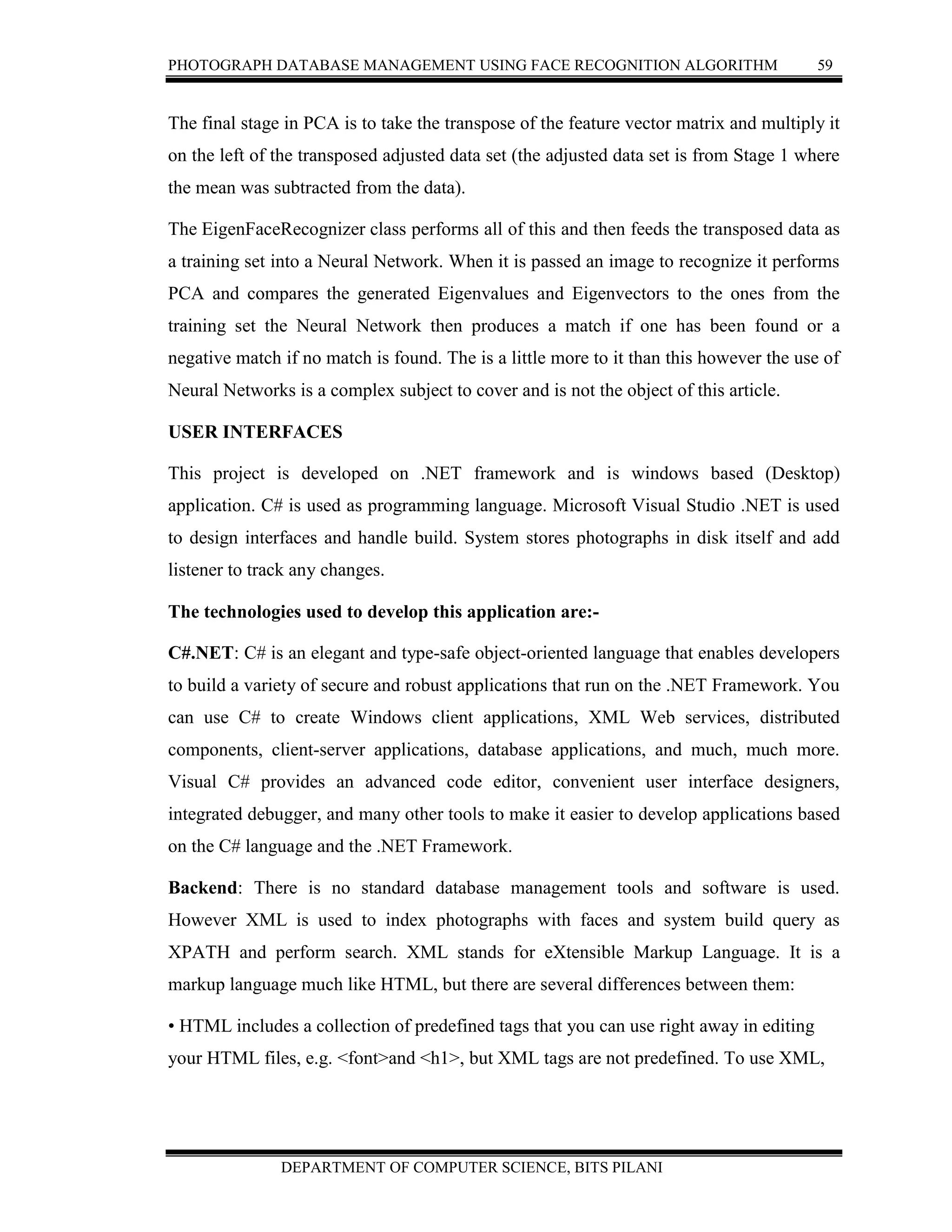

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 51

Figure 3.2.c Face Detection and Facial expression classification[12]

Once the user approves or accepts the faces with proper names, the system records the changes in an XML file. The XML file maintains the fully qualified file name (name with location) and the face value. A typical structure of the XML is shown below:

<?xml version="1.0" encoding="utf-8"?>

<Photo>

<TagedPhoto>

<Photo FileName="photo - 1.jpg">](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-61-2048.jpg)
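Writing and querying an index of this kind can be sketched with Python's standard `xml.etree.ElementTree` module. The element names `Photo` and `TagedPhoto` and the `FileName` attribute follow the fragment above; the `Face` element with a `Name` attribute is a hypothetical stand-in, because the part of the structure that stores the face value is not shown in the source.

```python
import xml.etree.ElementTree as ET

def build_index(tags):
    """Build the XML index from a mapping {file_name: [face_name, ...]}."""
    root = ET.Element("Photo")
    tagged = ET.SubElement(root, "TagedPhoto")  # spelling follows the original file
    for file_name, faces in tags.items():
        photo = ET.SubElement(tagged, "Photo", FileName=file_name)
        for face_name in faces:
            ET.SubElement(photo, "Face", Name=face_name)  # hypothetical element
    return root

def photos_with_face(root, face_name):
    """Return the file names of all photos tagged with the given face name."""
    return [photo.get("FileName")
            for photo in root.iter("Photo")
            if any(face.get("Name") == face_name for face in photo.findall("Face"))]

# Hypothetical tags for two photographs.
index = build_index({"photo - 1.jpg": ["Alice"], "photo - 2.jpg": ["Alice", "Bob"]})
xml_text = ET.tostring(index, encoding="unicode")
matches = photos_with_face(index, "Bob")
```

A lookup such as `photos_with_face` only reads the stored index, so answering a query by name does not require running face recognition again.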

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 54

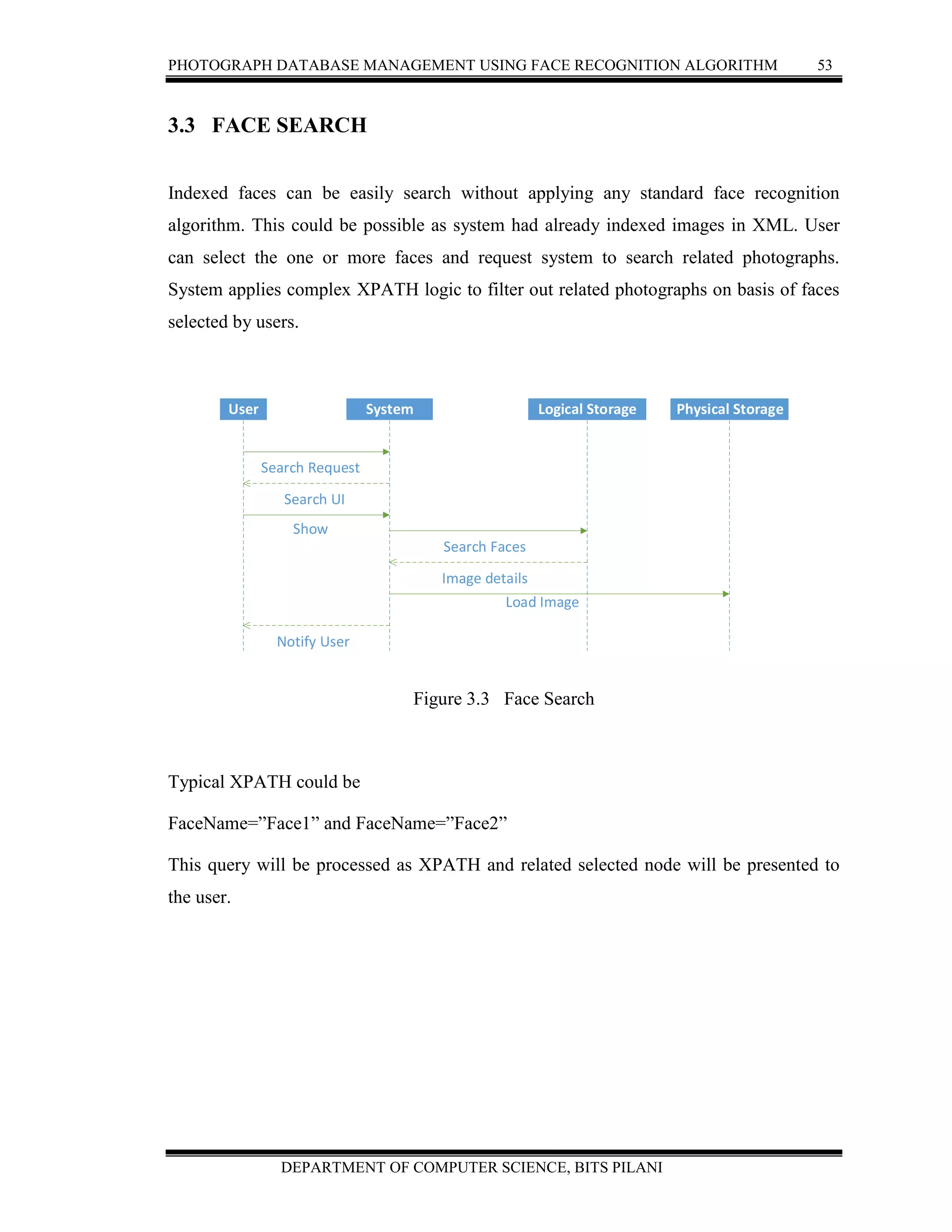

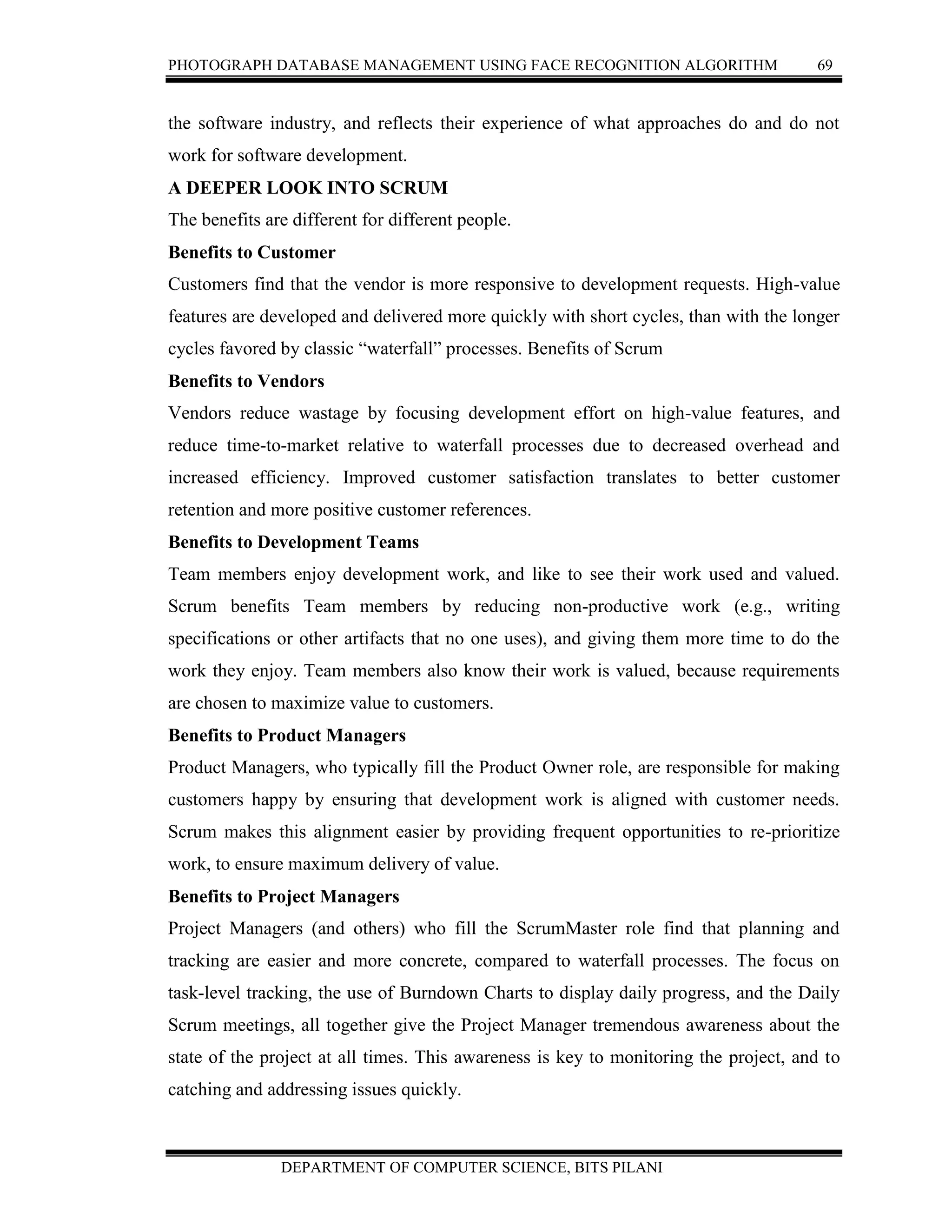

3.4 SIMULATION

INTRODUCTION

Simulation shows the eventual real behavior of the selected system model. It is used for performance optimization: a model of the system is created in order to gain insight into its functioning. Simulation results let us estimate and predict the behavior of the real system.

SIMULATOR

Photograph Database Management Using Face Recognition Algorithm is proposed to manage photographs containing faces without duplicating them. It provides a search mechanism to query photographs by the name associated with a face. The key point is that a search does not run any standard face recognition mechanism; instead it looks into the index prepared while the image was copied. This makes the system quite fast.

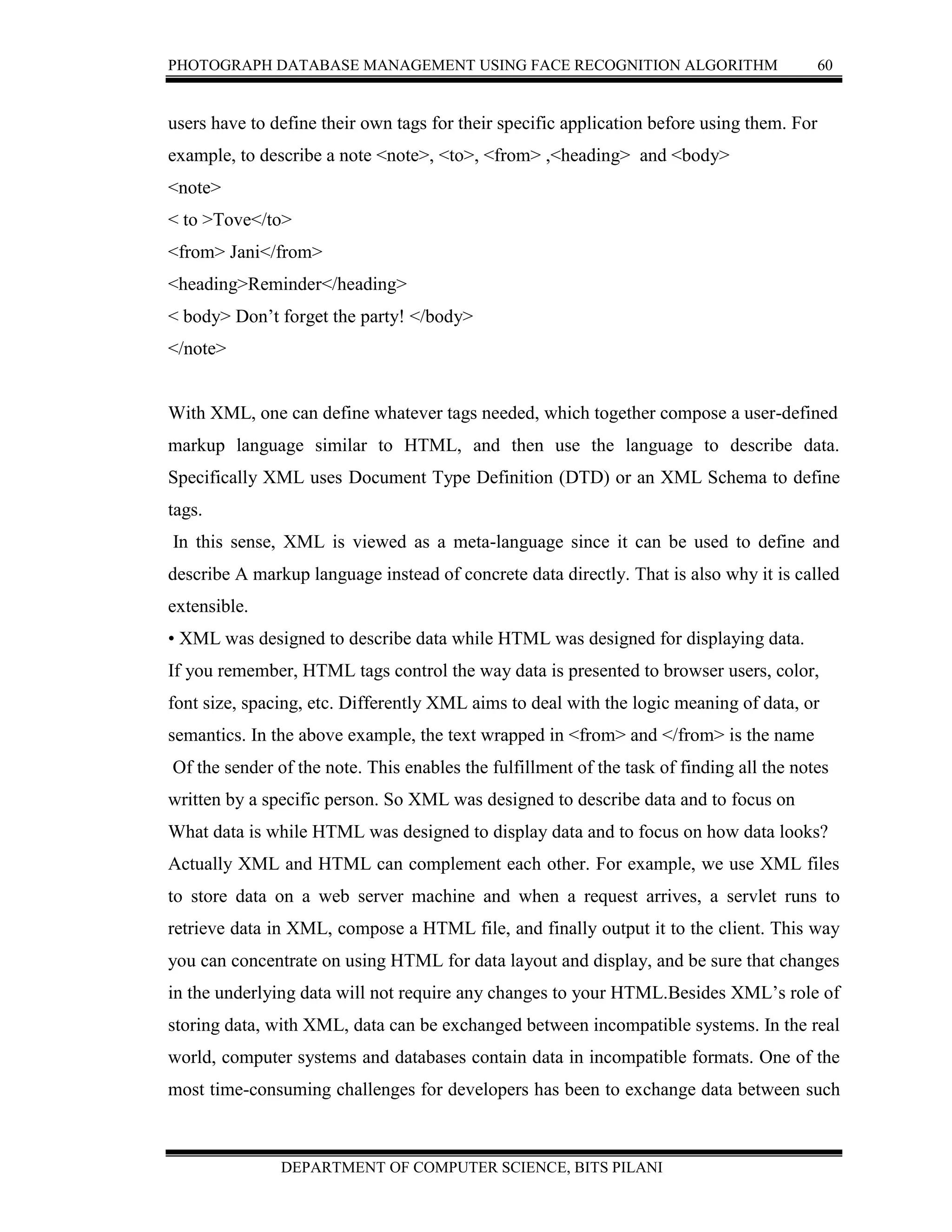

EMGU CV FACE RECOGNIZER AND EIGEN CLASSIFIER

FaceRecognizer is a common base class that allows the Eigen, Fisher, and LBPH classifiers to be used interchangeably. The class combines common method calls between the classifiers. For example, the Eigen classifier is constructed as follows:

FaceRecognizer recognizer = new EigenFaceRecognizer(num_components, threshold);

THE EIGEN CLASSIFIER

The Eigen recognizer takes two parameters. The first is the number of components kept for the Principal Component Analysis. There is no rule for how many components should be kept for good reconstruction capabilities; it depends on the input data, so experiment with the number. The OpenCV documentation suggests that keeping 80 components should almost always be sufficient. The second parameter is the prediction threshold [7].

FaceRecognizer recognizer = new EigenFaceRecognizer(80, double.PositiveInfinity);
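The role of the threshold can be illustrated with a plain Python nearest-neighbour search over PCA coefficients. This is not the Emgu CV API: the function `predict`, the `UNKNOWN` label of -1 and the gallery data are assumptions made for the sketch, on the understanding that a match farther away than the threshold is reported as unknown. An infinite threshold, as in the constructor call above, therefore always returns the closest stored face.

```python
import math

UNKNOWN = -1  # label used here when no stored face is close enough

def predict(sample, gallery, threshold=math.inf):
    """Nearest-neighbour match in PCA space.

    gallery: list of (label, coefficients); sample: coefficients of the test image.
    Returns (label, distance); the label is UNKNOWN if the distance exceeds threshold.
    """
    best_label, best_dist = UNKNOWN, math.inf
    for label, coeffs in gallery:
        dist = math.dist(sample, coeffs)  # Euclidean distance between coefficient vectors
        if dist < best_dist:
            best_label, best_dist = label, dist
    if best_dist > threshold:
        return UNKNOWN, best_dist
    return best_label, best_dist

# Hypothetical projected faces for two people (labels 0 and 1).
gallery = [(0, [1.0, 0.0, 2.0]), (1, [4.0, 4.0, 0.0])]
sample = [1.0, 1.0, 2.0]
label_open, dist_open = predict(sample, gallery)            # infinite threshold
label_strict, _ = predict(sample, gallery, threshold=0.5)   # rejects distant matches
```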

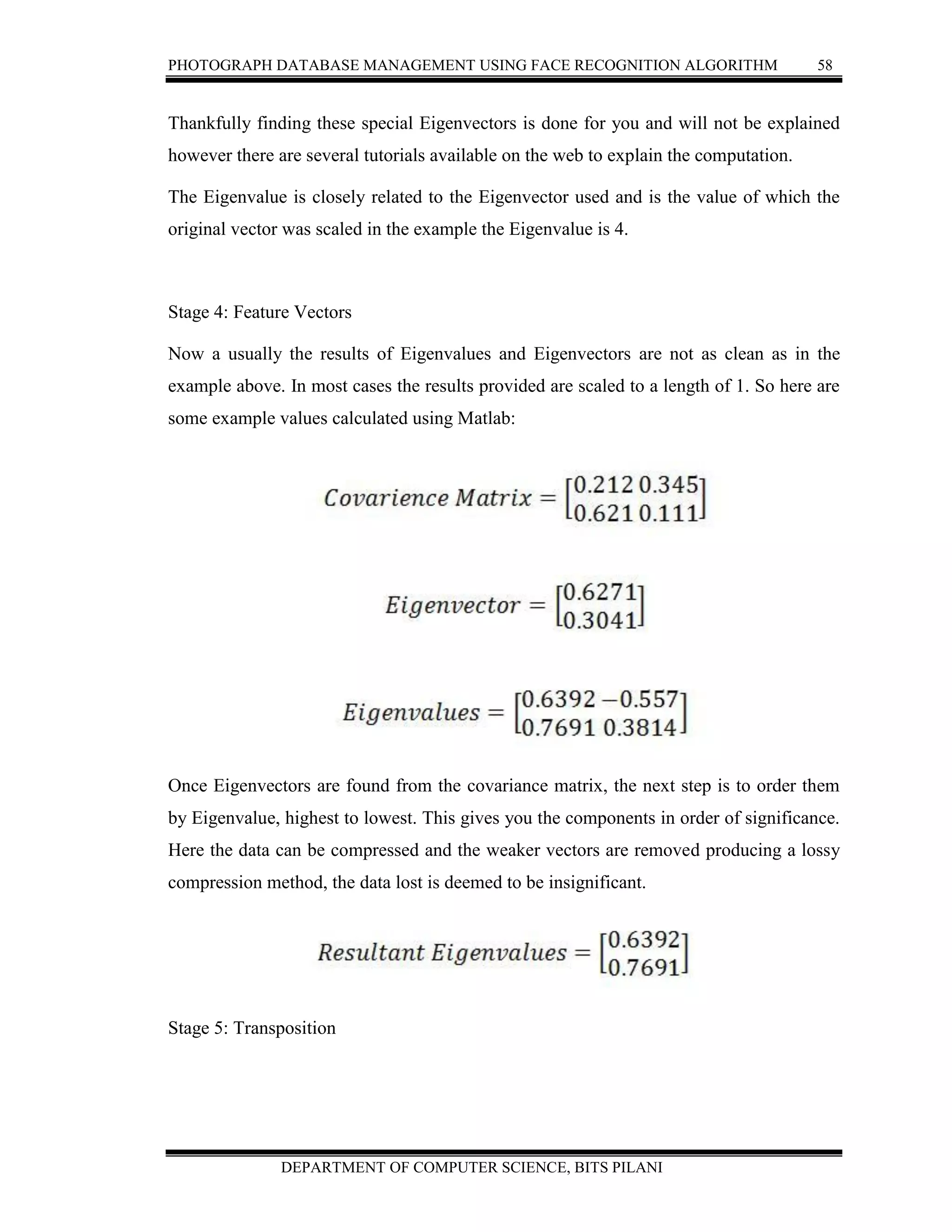

PRINCIPAL COMPONENT ANALYSIS (PCA)](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-64-2048.jpg)

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 55

The EigenFaceRecognizer applies PCA. The FisherFaceRecognizer applies Linear Discriminant Analysis derived by R.A. Fisher. The EigenFaceRecognizer class applies PCA on each image, the result of which is an array of eigenvalues that a neural network can be trained to recognize [7]. PCA is a commonly used method of object recognition because its results, when used properly, can be fairly accurate and resilient to noise. The way PCA is applied can vary at different stages, so what is demonstrated here is one clear method of applying PCA that can be followed. It is up to individuals to experiment to find the best method for producing accurate results from PCA.

To perform PCA several steps are undertaken:

Stage 1: Subtract the Mean of the data from each variable (our adjusted data)

Stage 2: Calculate and form a covariance Matrix

Stage 3: Calculate Eigenvectors and Eigenvalues from the covariance Matrix

Stage 4: Choose a Feature Vector (a fancy name for a matrix of vectors)

Stage 5: Multiply the transposed Feature Vectors by the transposed adjusted data

STAGE 1: Mean Subtraction

This step is fairly simple and makes the calculation of our covariance matrix a little simpler. It is not the subtraction of the overall mean from each of our values, since for covariance we need at least two dimensions of data. It is in fact the subtraction of the mean of each row from each element in that row.

(Alternatively, the mean of each column can be subtracted from each element in that column; however, this would change the way we calculate the covariance matrix.)](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-65-2048.jpg)
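Stages 1 and 2 can be sketched in plain Python as follows. The sketch assumes the row convention described above, where each row is one variable and each column one observation; the data values and function names are hypothetical.

```python
def subtract_row_means(data):
    """Stage 1: subtract each row's mean from every element in that row."""
    adjusted = []
    for row in data:
        mean = sum(row) / len(row)
        adjusted.append([value - mean for value in row])
    return adjusted

def covariance_matrix(adjusted):
    """Stage 2: covariance between the rows (variables) of mean-adjusted data."""
    samples = len(adjusted[0])
    return [[sum(a * b for a, b in zip(row_i, row_j)) / (samples - 1)
             for row_j in adjusted]
            for row_i in adjusted]

# Hypothetical data: 2 variables (rows) observed 4 times (columns).
data = [[1.0, 2.0, 3.0, 4.0],
        [2.0, 4.0, 6.0, 8.0]]
adjusted = subtract_row_means(data)
cov = covariance_matrix(adjusted)
```

If the column convention were used instead, the sums in `covariance_matrix` would have to run over rows rather than columns, which is the adjustment the note above refers to.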

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 81

6. FUTURE ENHANCEMENT

A new technique for image feature extraction and representation—two-dimensional

principal component analysis (2DPCA)—was developed. 2DPCA has many advantages

over conventional PCA (Eigenfaces).

In the first place, since 2DPCA is based on the image matrix, it is simpler and more

straightforward to use for image feature extraction. Second, 2DPCA is better than PCA in

terms of recognition accuracy in all experiments. Although this trend seems to be

consistent for different databases and conditions, in some experiments the differences in

performance were not statistically significant. Third, 2DPCA is computationally more

efficient than PCA and it can improve the speed of image feature extraction significantly.

However, it should be pointed out that 2DPCA-based image representation was not as

efficient as PCA in terms of storage requirements, since 2DPCA requires more

coefficients for image representation than PCA. Why does 2DPCA outperform PCA in

face recognition? In our opinion, the underlying reason is that 2DPCA is more suitable

for small sample size problems (like face recognition) since its image covariance matrix

is quite small. Image representation and recognition based on PCA (or 2DPCA) is

statistically dependent on the evaluation of the covariance matrix (although for PCA the

explicit construction of the covariance matrix can be avoided)[32]. The obvious

advantage of 2DPCA over PCA is that the former evaluates the covariance matrix more accurately. Finally, there are still some aspects of 2DPCA that deserve further study. When a small number of the principal components of PCA are used to represent an image, the mean square error (MSE) between the approximation and the original pattern is minimal. In addition, 2DPCA needs more coefficients for image representation than PCA. Although a feasible way to deal with this problem is to use PCA after 2DPCA for further dimensionality reduction, it is still unclear how the dimension of 2DPCA could be reduced directly.](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-91-2048.jpg)
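For reference, the image covariance matrix on which 2DPCA operates can be written as below. The notation is an assumption, since the text above defines no symbols for it: $A_j$ is the j-th of M training images, each treated as an $r \times c$ matrix, and $\bar{A}$ is the mean image.

```latex
G_t = \frac{1}{M} \sum_{j=1}^{M} (A_j - \bar{A})^{T} (A_j - \bar{A})
```

$G_t$ is only $c \times c$, whereas the covariance matrix of conventional PCA on the flattened images is $rc \times rc$. For 128 by 128 images that is 128 × 128 against 16384 × 16384, which is the sense in which the image covariance matrix is small.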

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 82

7. REFERENCES

[1] Shilpi Soni, Raj Kumar Sahu,” Face Recognition Based on Two Dimensional

Principal Component Analysis (2DPCA) and Result Comparison with Different

Classifiers”, International Journal of Advanced Research in Computer and

Communication Engineering Vol. 2, Issue 10, October 2013

[2] Vo Dinh Minh Nhat and Sungyoung Lee. “Improvement On PCA and 2DPCA

algorithms for face recognition “CIVR 2005, LNCS 3568, Pp: 568-577

[3] D.Q. Zhang and H. Zhou, “(2D)2PCA: Two-directional two-dimensional PCA for efficient face representation and recognition”, Neurocomputing

[4] Zhao W., Chellappa R, Phillips and Rosenfeld, “Face Recognition: A Literature

Survey”, ACM Computing Surveys, Vol. 35, No. 4, December 2003, pp. 399–458.

[5] Patil A.M., Kolhe S.R. and Patil P.M., “2D Face Recognition Techniques: A Survey”, International Journal of Machine Intelligence, ISSN: 0975–2927, Volume 2, Issue 1, 2010, pp-74-8

[6] G. H. Dunteman. “Principal Components Analysis”. Sage Publications, 1989.

[7] Turk M. And Pentland A.” Eigenfaces for recognition”, J. Cogn. Neurosci. 3, 72–86,

1991

[8] S. J. Lee, S. B. Yung, J. W. Kwon, and S. H. Hong, “Face Detection and Recognition

Using PCA”, pp. 84-87, IEEE TENCON,1999.

[9] Kohonen, T., "Self-organization and associative memory”, Berlin: Springer- Verlag,,

1989.

[10] Kohonen, T., and Lehtio, P., "Storage and processing of information in distributed

associative memory systems", 1981.

[11] Fischler, M. A.and Elschlager, R. A., "The representation and matching of pictorial

structures", IEEE Trans. on Computers, c-22.1, 1973.

[12] Yuille, A.L., Cohen, D. S., and Hallinan, P. W., "Feature extraction from faces

using deformable templates", Proc. of CVPR, 1989.

[13] Kanade, T., "Picture processing system by computer complex and recognition of

human faces", Dept. of Information Science, Kyoto University, 1973.](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-92-2048.jpg)

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 83

[14] B.Moghaddam, and A. Pentland, “Probabilistic Visual Learning for Object

Representation”, IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol.

19, No. 7, July 1997

[15] K. C. Chung, S. C. Kee, and S. R. Kim, “Face Recognition using Principal

Component Analysis of Gabor Filter Responses”, p.53- 57, IEEE, 1999.

[16] Hossein Sahoolizadeh, B. Zargham Heidari, and C. Hamid Dehghani, “A New Face

Recognition Method using PCA, LDA and Neural Network”, World Academy of Science,

Engineering and Technology 41008

[17] Kyungim Baek, Bruce A. Draper, J. Ross Beveridge, Kai , “PCA vs. ICA: A

comparison on the FERET data set”.

[18] B. Moghaddam, and A. Pentland, “An Automatic System for Model-Based Coding of

Faces”, pp. 362- 370, IEEE, 1995.

[19] Fleming, M., and Cottrell, G., "Categorization of faces using unsupervised feature

extraction", Proc. of IJCNN, Vol. 90(2), 1990.

[20] T. Yahagi and H. Takano, “Face Recognition using neural networks with multiple

combinations of categories,” International Journal of Electronics Information and

Communication Engineering., vol.J77-D-II, no.11, pp.2151-2159, 1994.

[21] Patrick J. Grother; George W. Quinn; P J. Phillips,”Report on the Evaluation of 2D

Still-Image Face Recognition Algorithms”, NIST Interagency/Internal Report (NISTIR) –

7709, June 17, 2010.

[22] Patrick J. Grother, George W. Quinn and P. Jonathon Phillips,” Report on the

Evaluation of 2D Still-Image Face Recognition Algorithms”, NIST Interagency Report

7709.

[23] Patrik Kamencay, Robert Hudec, Miroslav Benco and Martina Zachariasova, “2D-

3D Face Recognition Method Based on a Modified CCA-PCA Algorithm “, International

Journal of Advanced Robotic System, DOI: 10.5772/58251

[24] Phillip Ian Wilson, Dr. John Fernandez “Facial Feature Detection Using HAAR

Classifiers”, Texas A&M University – Corpus

[25] Matthew Turk and Alex Pentland, “Eigenfaces for Recognition”, Vision and Modeling Group, The Media Laboratory, Massachusetts Institute of Technology](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-93-2048.jpg)

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 84

[26] Paulo Menezes, José Carlos Barreto and Jorge Dias, “Face Tracking Based On Haar-Like Features And Eigenfaces”, ISR-University of Coimbra.

[27] Barreto, José, Paulo Menezes and Jorge Dias, “Human-robot interaction based on Haar-like features and eigenfaces”, International Conference on Robotics and Automation, (2004).

[28] Freund, Yoav and Robert E.Schapire (1996),”Experiments with a new boosting

algorithm.”

[29] Kalman, R.E. (1960),”A new approach to linear filtering and prediction problems”,

Journal of Basic Engineering (82series D), 35–45.

[30] Lienhart, Rainer and Jochen Maydt (2002).” An extended set of haar-like features

for rapid object detection. “In: IEEE ICIP 2002, Vol. 1, pp 900-903.

[31] Moghaddam, B. and A.P. Pentland (1995), “Probabilistic visual learning for object representation”, Technical Report 326, Media Laboratory, Massachusetts Institute of Technology.

[32] Mohan, Anuj, Constantine Papageorgiou and Tomaso Poggio (2001).,”Example-

based object detection in images by components”, IEEE Transactions on Pattern Analysis

and Machine Intelligence 23(4), 349–361.

[33] Oren, M., C.Papageorgiou, P.Sinha, E.Osuna and T.Poggio (1997),” Pedestrian

detection using wavelet templates”.

[34] Rainer Lienhart, Alexander Kuranov and Vadim Pisarevsky (2002),”Empirical

analysis of detection cascades of boosted classifiers for rapid object detection”, MRL

Technical Report, Intel Labs.

[35] Rowley, Henry A., Shumeet Baluja and Takeo Kanade (1998),”Neural network-

based face Detection”, IEEE Transactions on Pattern Analysis and Machine Intelligence

20(1), 23– 38.

[36] Schneiderman, H. and T. Kanade (2000),” A statistical method for 3D object

detection applied to faces and cars”, In International Conference on Computer Vision.

[37] Sung, K. and T. Poggio (1998),“Example-based learning for view based face

detection.” In IEEE Patt. Anal. Mach. Intell. 20(1), 39–51.

[38] Turk, M.A. and A.P. Pentland (1991),”Face recognition using eigenfaces”, In Proc.

of IEEE Conference on Computer Vision and Pattern Recognition pp. 586 – 591.](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-94-2048.jpg)

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 85

[39] Viola, Paul and Michael Jones (2001),”Rapid object detection using boosted cascade

of simple features”, IEEE Conf.Pattern Recognition 2001.

[40] Yang, Ming-Hsuan (2002), “Detecting faces in images: A survey”, IEEE Transactions on Pattern Analysis and Machine Intelligence 24(1), 34–58.

[41] Sung, K. and T. Poggio (1998).” Example-based learning for view based face

detection.”

[42] Henry Rowley, Baluja S. & Kanade T. (1999) “Neural Network-Based Face

Detection, Computer Vision and Pattern Recognition”, Neural Network-Based Face

Detection, Pitts-burgh, Carnegie Mellon University, PhD thesis.

[43] KahKay Sung & Tomaso Poggio (1994) Example Based Learning For View Based

Human Face Detection, Massachusetts Institute of Technology Artificial Intelligence

Laboratory and Center For Biological And Computational Learning, Memo 1521, CBCL

Paper 112, MIT, December.

[44] Henry A. Rowley, Shumeet Baluja &Takeo Kanade. (1997) Rotation Invariant

Neural Network-Based Face Detection, December, CMU-CS-97-201

[45] Jeffrey S. Norris (1999) Face Detection and Recognition in Office Environments,

thesis, Dept. of Electrical Eng. and CS, Master of Eng in Electrical Eng., Massachusetts

Institute of Technology.

[46] Hazem M. El-Bakry (2002), Face Detection Using Neural Networks and Image

Decomposition Lecture Notes in Computer Science Vol. 22, pp:205-215.

[47] M. Kass, A. Witkin, and D. Terzopoulos. Snakes: Active contour models. IJCV, 1(4):321–331, 1987.

[48] T. Cootes, C. Taylor, D. Cooper, and J. Graham. Active shape models - Their training and application. CVIU, 61(1):38–59, 1995.

[49] T. Cootes, G. Edwards, and C. Taylor. Active appearance models. PAMI, 23(6):681–685, 2001.

[50] S. Sclaroff and J. Isidoro. Active blobs. In ICCV, 1998.

[51] M. Jones and T. Poggio. Multidimensional morphable models. In ICCV, pages 683–688, 1998.

[52] P. Ekman and W.V. Friesen. Facial Action Coding System: Investigator’s Guide. Consulting Psychologists Press, 1978.](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-95-2048.jpg)

![PHOTOGRAPH DATABASE MANAGEMENT USING FACE RECOGNITION ALGORITHM 86

[53] B. Fasel and J. Luettin. Automatic facial expression analysis: A survey. Pattern Recognition, 36:259–275, 2003.

[54] M. Pantic and L.J.M. Rothkrantz. Automatic analysis of facial expressions: The state of the art. IEEE Trans. on Pattern Analysis and Machine Intelligence, 22(12):1424–1445, 2000.](https://image.slidesharecdn.com/2009ht12895-report-160710200025/75/Photograph-Database-management-report-96-2048.jpg)