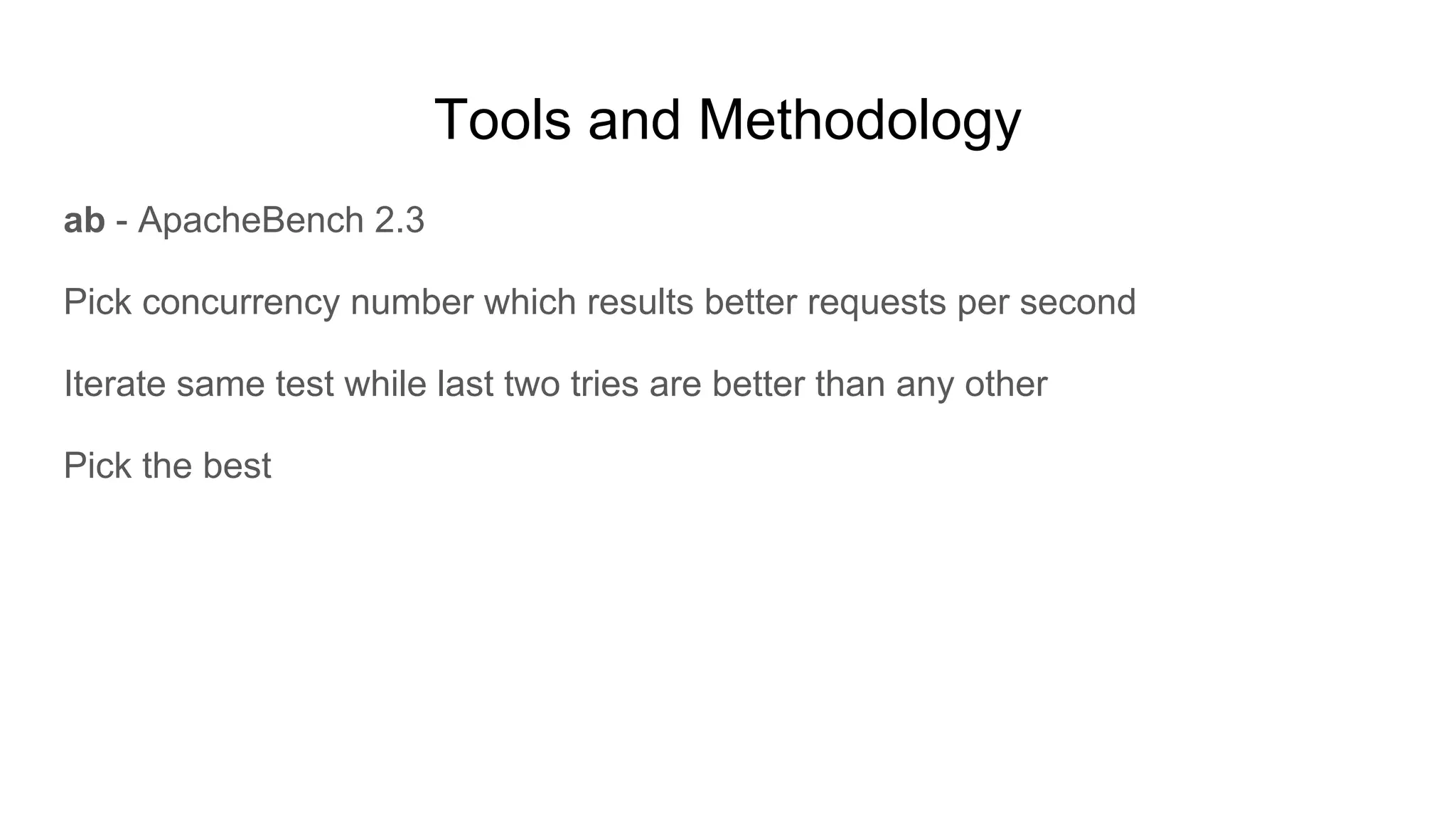

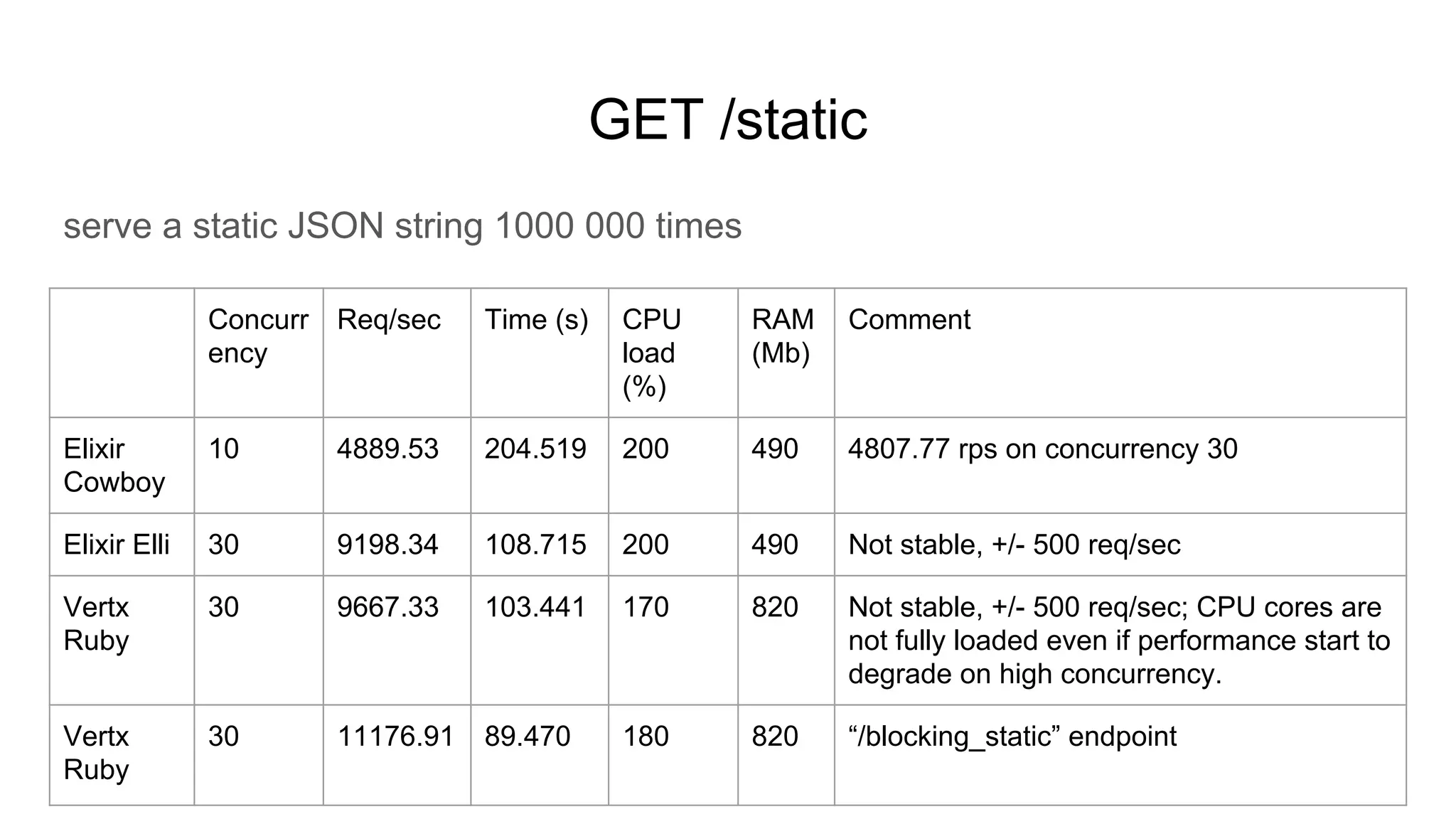

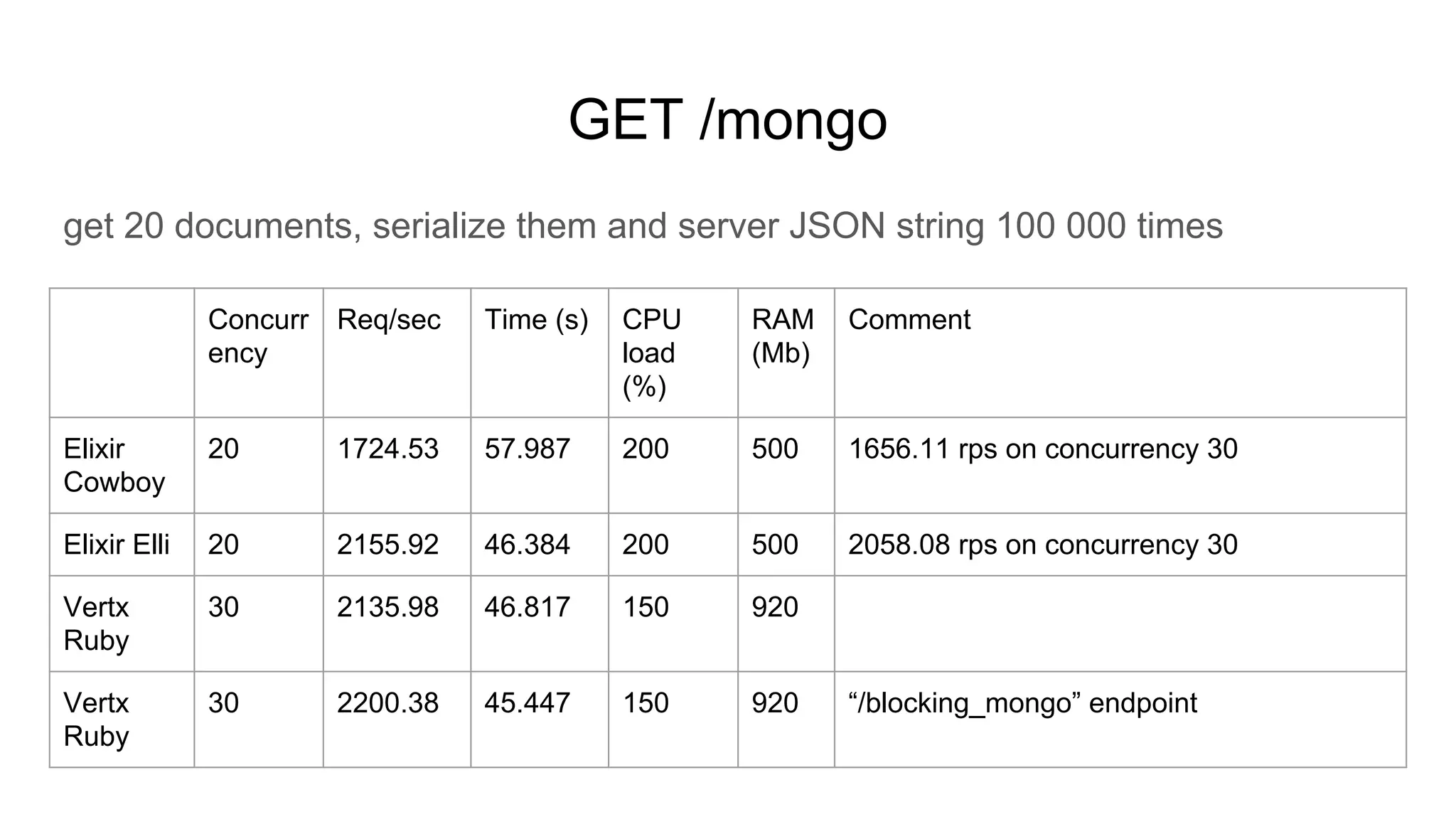

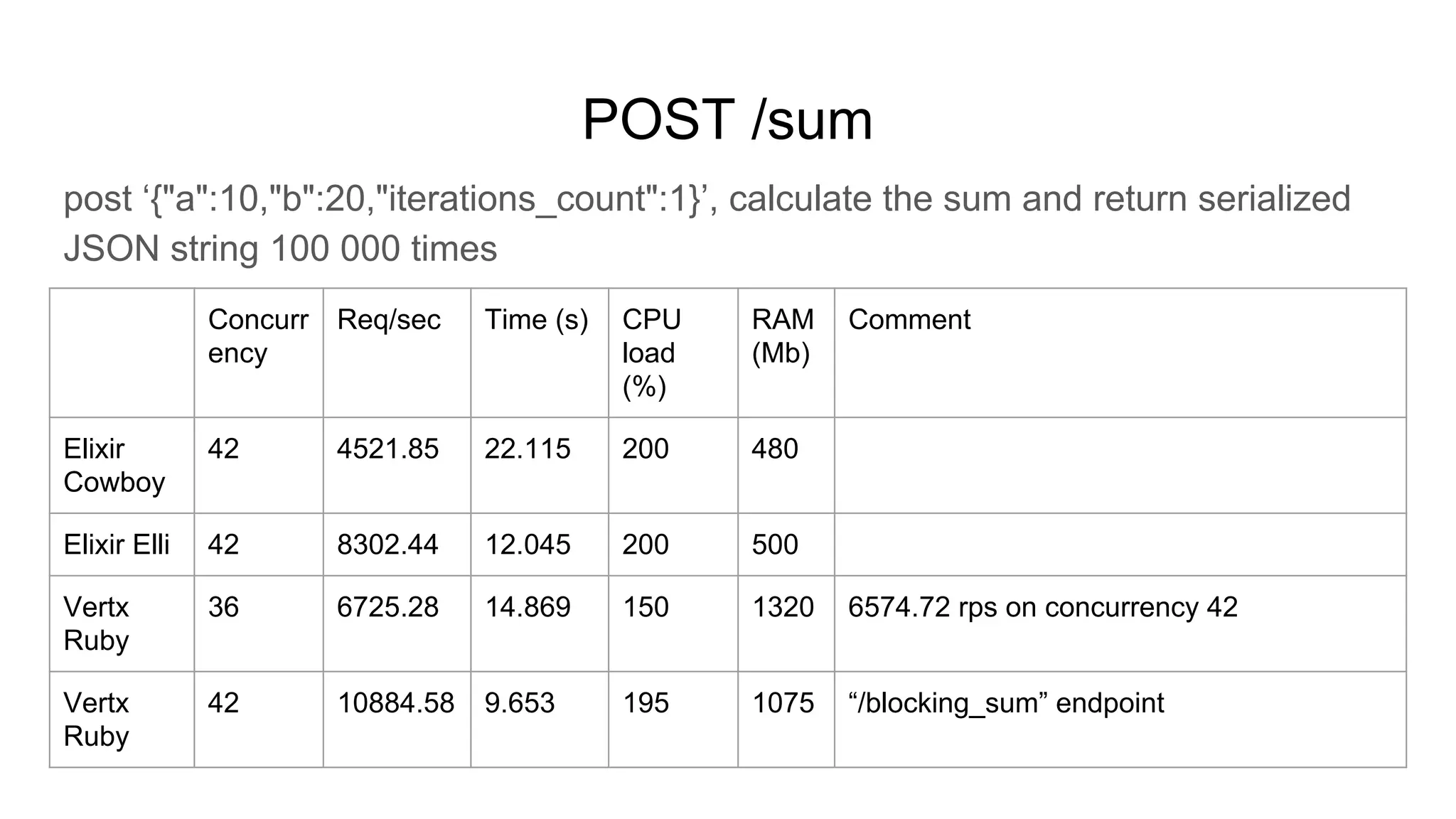

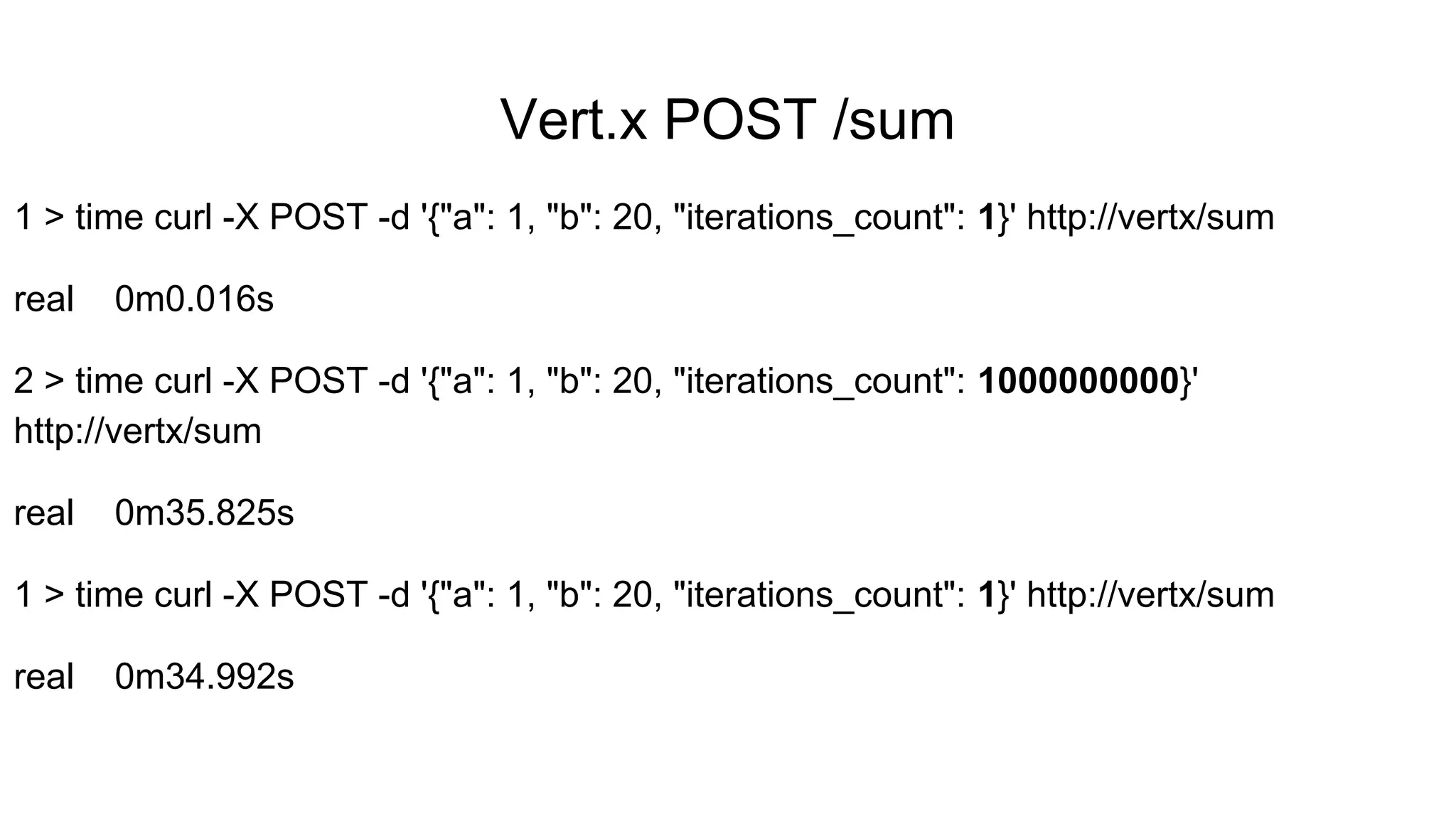

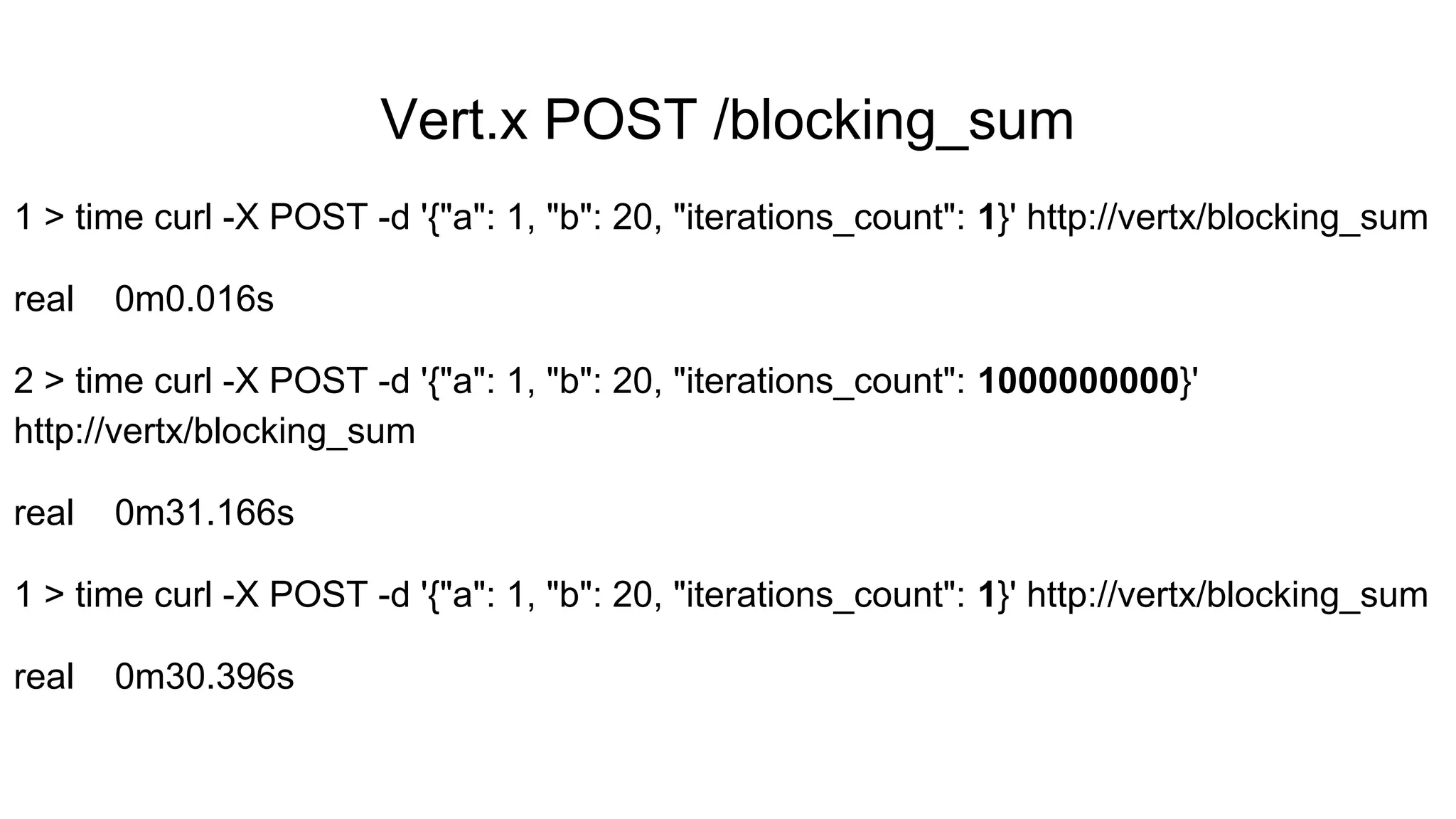

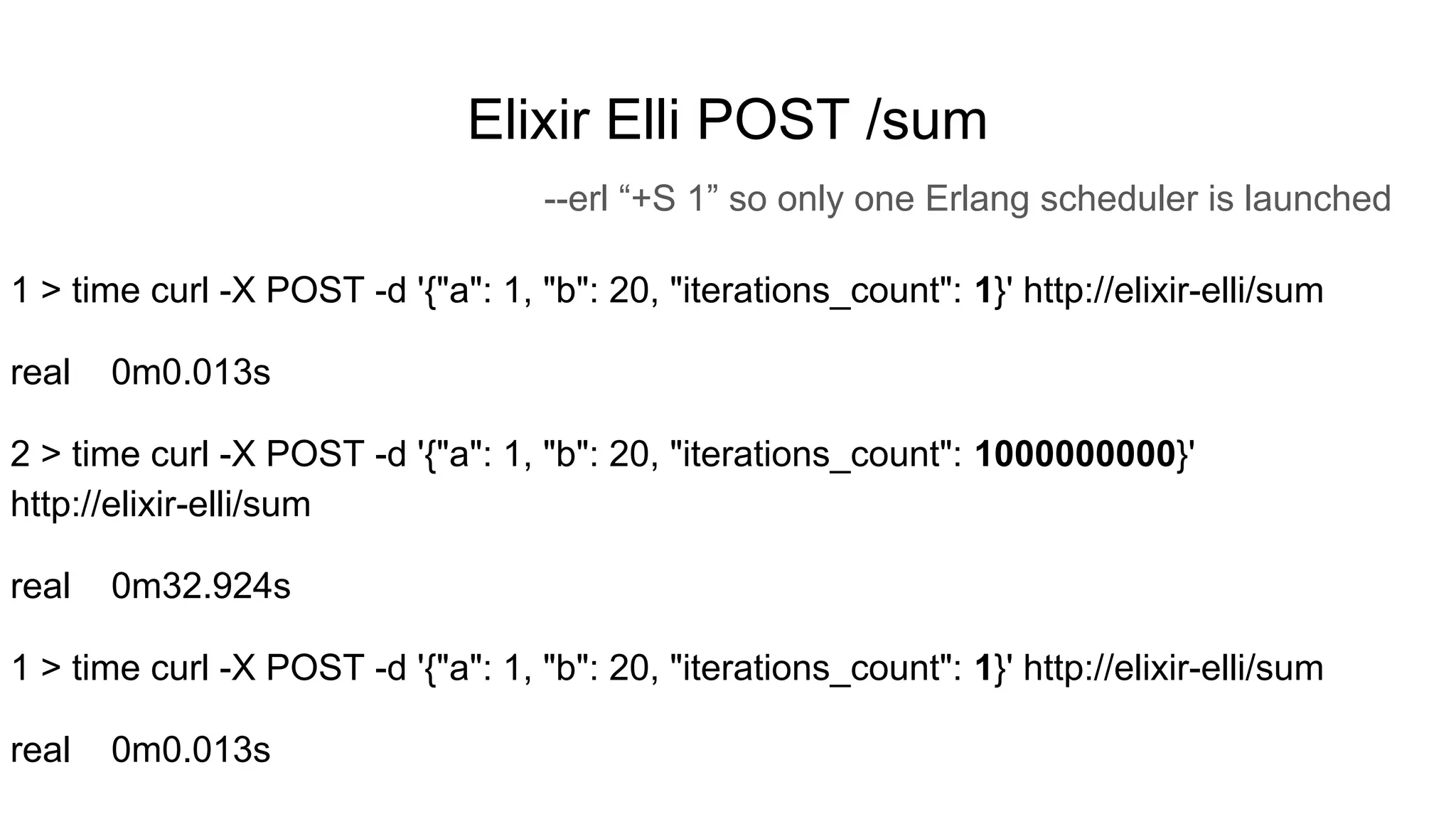

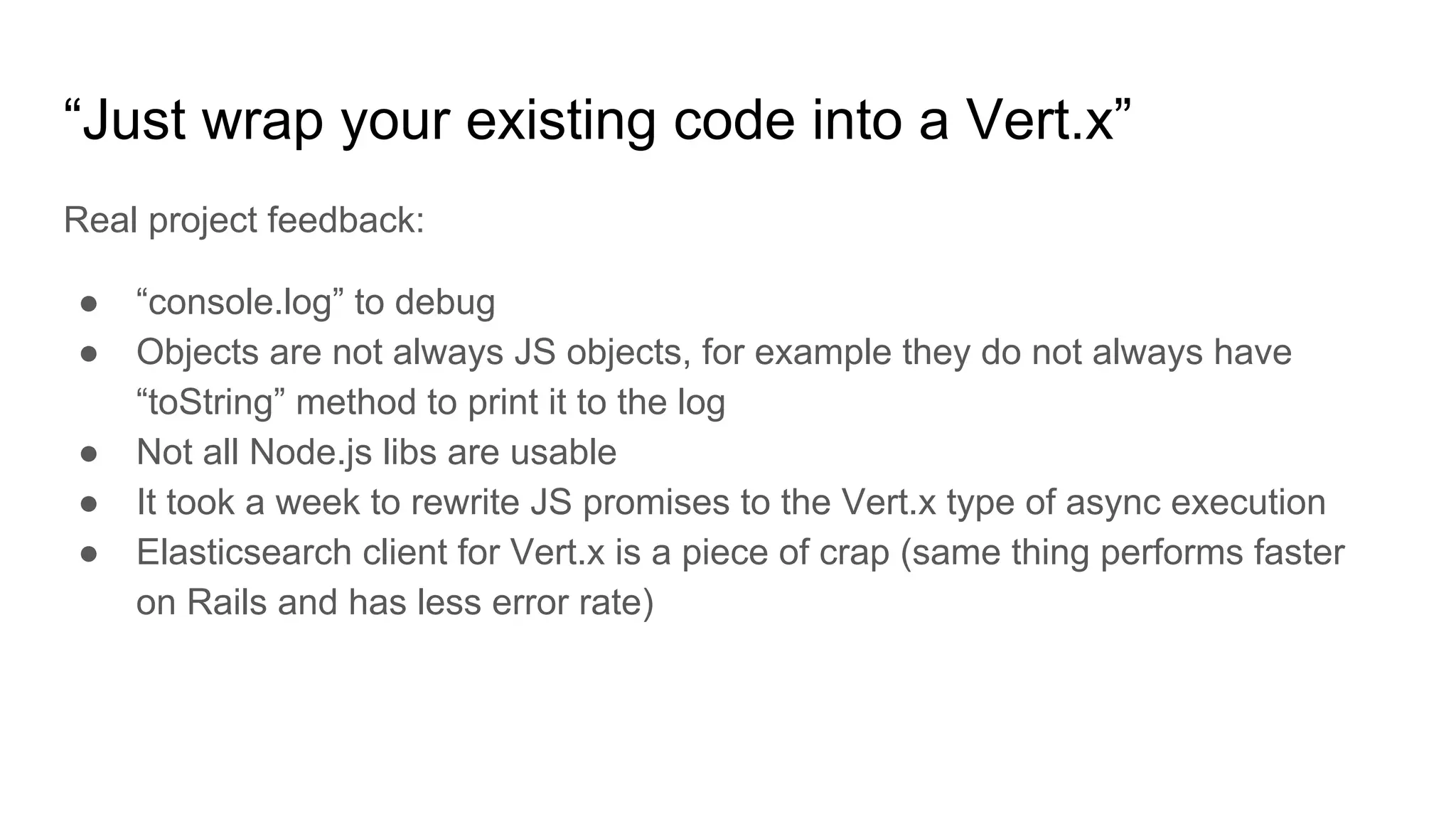

The document discusses a performance measurement methodology for comparing the Vert.x framework and Elixir for backend services, highlighting test results in terms of concurrency and requests per second. It offers insights into Vert.x's performance, particularly its inability to fully utilize CPU resources, and raises questions about its community and documentation. It also shares practical experiences and challenges encountered while integrating existing code with Vert.x.