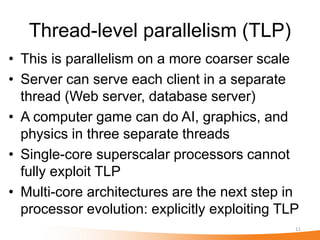

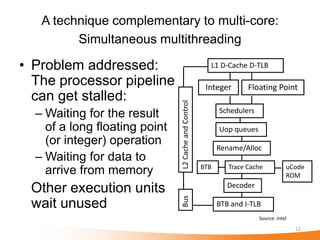

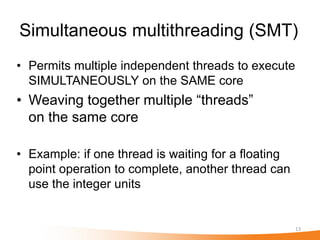

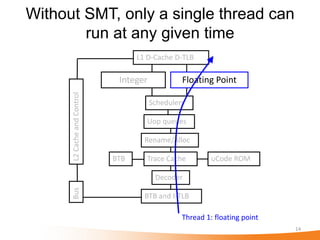

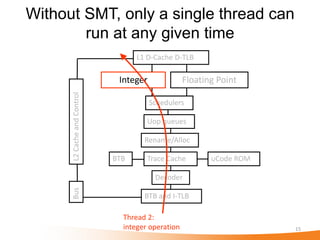

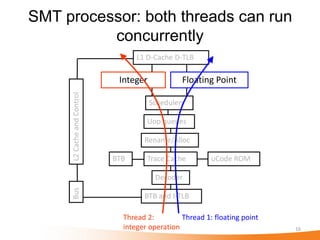

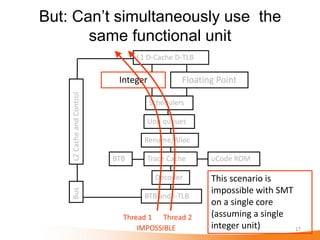

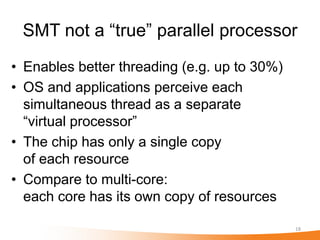

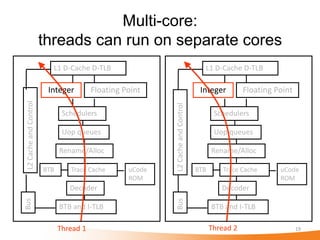

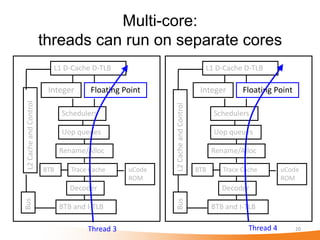

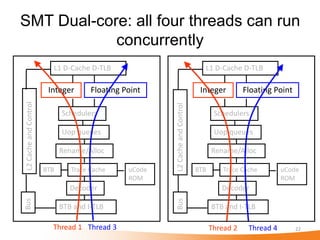

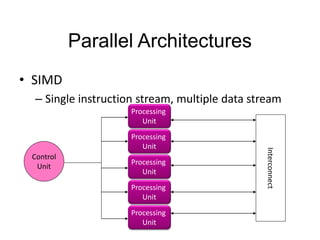

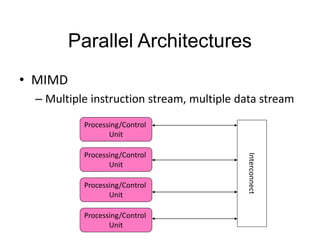

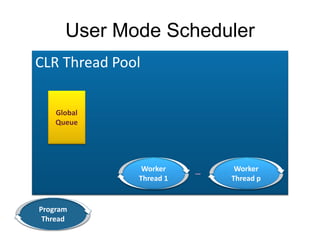

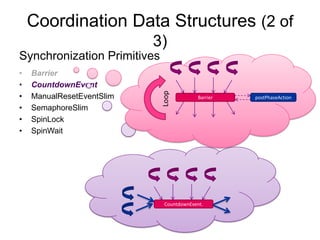

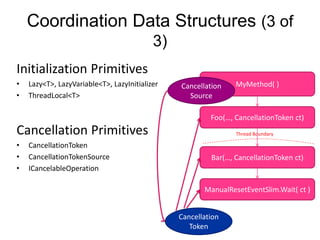

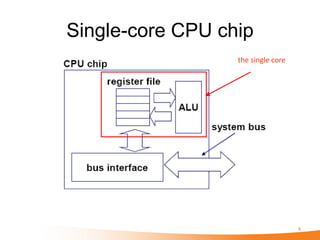

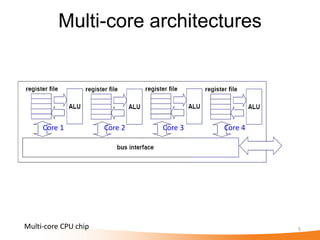

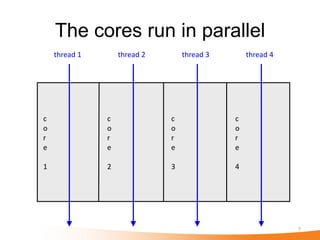

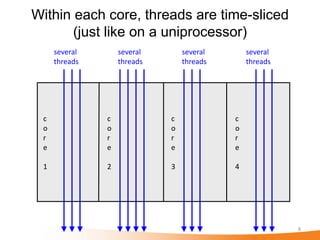

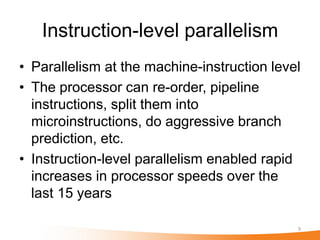

This document summarizes a talk on parallel computing. It begins by noting the challenge of exploiting hardware parallelism to solve problems more effectively. It then contrasts single-core CPUs with multi-core architectures, in which multiple cores run threads in parallel; within each core, threads are still time-sliced. Instruction-level parallelism and thread-level parallelism are described. Simultaneous multithreading (SMT) is presented as allowing multiple independent threads to execute simultaneously on the same core. However, SMT threads share a single core's execution resources, so SMT does not provide the same degree of parallelism as a multi-core architecture, in which threads run independently on separate cores, each with its own resources.
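As a rough illustration of thread-level parallelism on a multi-core machine, the sketch below splits a sum across several threads. It is not taken from the talk: the function name `parallel_sum` and its `num_threads` parameter are hypothetical, and whether the threads actually land on separate cores is up to the operating system scheduler.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// Hypothetical helper: sums `data` by giving each thread one contiguous chunk.
long long parallel_sum(const std::vector<int>& data, unsigned num_threads) {
    assert(num_threads > 0);

    // One accumulator per thread, so threads never write to the same element.
    std::vector<long long> partial(num_threads, 0);
    std::vector<std::thread> workers;

    // Chunk size rounded up so every element is covered.
    const std::size_t chunk = (data.size() + num_threads - 1) / num_threads;

    for (unsigned t = 0; t < num_threads; ++t) {
        const std::size_t begin = std::min(data.size(), t * chunk);
        const std::size_t end = std::min(data.size(), begin + chunk);
        workers.emplace_back([&data, &partial, t, begin, end] {
            partial[t] = std::accumulate(data.begin() + begin,
                                         data.begin() + end, 0LL);
        });
    }

    // Wait for every worker before combining the partial results.
    for (auto& w : workers) w.join();
    return std::accumulate(partial.begin(), partial.end(), 0LL);
}
```

Calling `parallel_sum(values, 4)` on a four-core machine lets each chunk be summed on its own core. On a single core the same code still runs correctly, but the threads are time-sliced rather than executed in parallel.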

![Instruction level parallelism](https://image.slidesharecdn.com/ot4-parallelprogramming-sundararajans-sep29-120103020019-phpapp01/85/Parallel-Programming-10-320.jpg)

Instruction level parallelism

• `for (int i = 0; i < 1000; i++) { a[0]++; a[0]++; }`

• `for (int i = 0; i < 1000; i++) { a[0]++; a[1]++; }`
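The two loops on this slide differ only in which elements they touch, and that difference is what matters for instruction-level parallelism. The sketch below wraps each loop in a function so the contrast is easier to see. The function names and the `n` and `iterations` parameters are assumptions added for illustration, not part of the talk.

```cpp
#include <cassert>
#include <cstddef>

// Both increments target a[0], so the second depends on the result of the
// first: the core has to carry them out one after the other.
void dependent_increments(int* a, std::size_t n, int iterations) {
    assert(n >= 1);
    for (int i = 0; i < iterations; i++) {
        a[0]++;
        a[0]++;
    }
}

// The increments target different elements, so neither depends on the other:
// a superscalar core is free to overlap them within the same iteration.
void independent_increments(int* a, std::size_t n, int iterations) {
    assert(n >= 2);
    for (int i = 0; i < iterations; i++) {
        a[0]++;
        a[1]++;
    }
}
```

Both functions perform the same number of increments. Any timing difference between them depends on the CPU and on compiler settings, since an optimizing compiler may collapse either loop into a single addition, so this is best read as an illustration of the data dependency rather than a benchmark.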