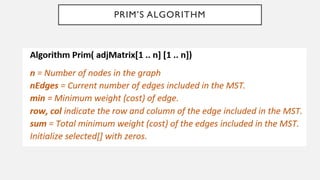

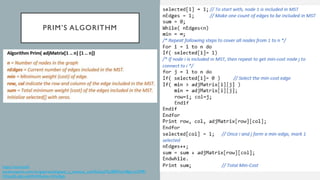

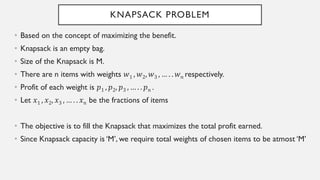

This document introduces greedy algorithms. It explains their two defining characteristics, the greedy choice property and optimal substructure, and shows how they apply to optimization problems such as the knapsack problem and shortest paths (solved by Dijkstra's algorithm). It covers both the fractional and 0/1 knapsack problems and presents Prim's and Kruskal's algorithms for finding minimum spanning trees. The key takeaway is that greedy methods are simple and efficient, but they do not always yield an optimal solution.
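To make the takeaway concrete, here is a minimal Python sketch that applies the same value-per-weight greedy rule to both knapsack variants. The function names, the `(value, weight)` item format, and the sample data are assumptions chosen for illustration; they are not taken from the slides.

```python
def fractional_knapsack(items, capacity):
    """Greedy fractional knapsack.

    items: list of (value, weight) pairs with weight > 0 (assumed format).
    Items may be split, so the ratio rule gives the optimal total value.
    """
    total = 0.0
    remaining = capacity
    # Greedy choice: consider items in decreasing value-per-weight order.
    for value, weight in sorted(items, key=lambda it: it[0] / it[1], reverse=True):
        if remaining <= 0:
            break
        take = min(weight, remaining)      # whole item, or the part that fits
        total += value * take / weight
        remaining -= take
    return total


def greedy_01_knapsack(items, capacity):
    """Same ratio rule, but items must be taken whole.

    This is a heuristic only: it is not guaranteed to be optimal.
    """
    total = 0
    remaining = capacity
    for value, weight in sorted(items, key=lambda it: it[0] / it[1], reverse=True):
        if weight <= remaining:
            total += value
            remaining -= weight
    return total


# Hypothetical sample data: (value, weight) pairs and a capacity of 50.
items = [(60, 10), (100, 20), (120, 30)]
print(fractional_knapsack(items, 50))   # greedy is optimal here
print(greedy_01_knapsack(items, 50))    # greedy misses the best 0/1 choice
```

On this sample the fractional version reaches 240, while the 0/1 greedy stops at 160 even though taking the second and third items whole would give 220. That gap is why the 0/1 variant is usually solved with dynamic programming rather than a greedy rule.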

![Dijkstra's algorithm: relaxation formula](https://image.slidesharecdn.com/module2-greedyalgorithm-240502093022-0591044a/85/Module-2-Greedy-Algorithm-Data-structures-and-algorithm-18-320.jpg)

Dijkstra's algorithm, relaxation formula: if dist[z] > dist[u] + w[u,z], then set dist[z] = dist[u] + w[u,z].
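The relaxation step on this slide can be sketched in Python as follows. This is one common formulation using a binary heap from the standard `heapq` module, not necessarily the version the slides walk through. The adjacency-list format, the function name `dijkstra`, and the sample graph are assumptions, and edge weights are assumed to be non-negative.

```python
import heapq


def dijkstra(graph, source):
    """Shortest distances from source.

    graph: dict mapping each node to a list of (neighbor, weight) pairs
    (assumed format). Every node must appear as a key, and all weights
    are assumed to be non-negative.
    """
    dist = {node: float("inf") for node in graph}
    dist[source] = 0
    heap = [(0, source)]                    # (tentative distance, node)
    while heap:
        d, u = heapq.heappop(heap)          # greedy choice: closest node so far
        if d > dist[u]:
            continue                        # stale entry, u was already improved
        for z, w in graph[u]:
            # Relaxation: if dist[z] > dist[u] + w[u,z], update dist[z].
            if dist[z] > dist[u] + w:
                dist[z] = dist[u] + w
                heapq.heappush(heap, (dist[z], z))
    return dist


# Hypothetical sample graph for illustration.
graph = {
    "A": [("B", 4), ("C", 1)],
    "B": [("D", 1)],
    "C": [("B", 2), ("D", 5)],
    "D": [],
}
print(dijkstra(graph, "A"))
```

In this sample, the direct edge A to B costs 4, but relaxing through C lowers dist[B] to 3, which in turn lowers dist[D] to 4.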