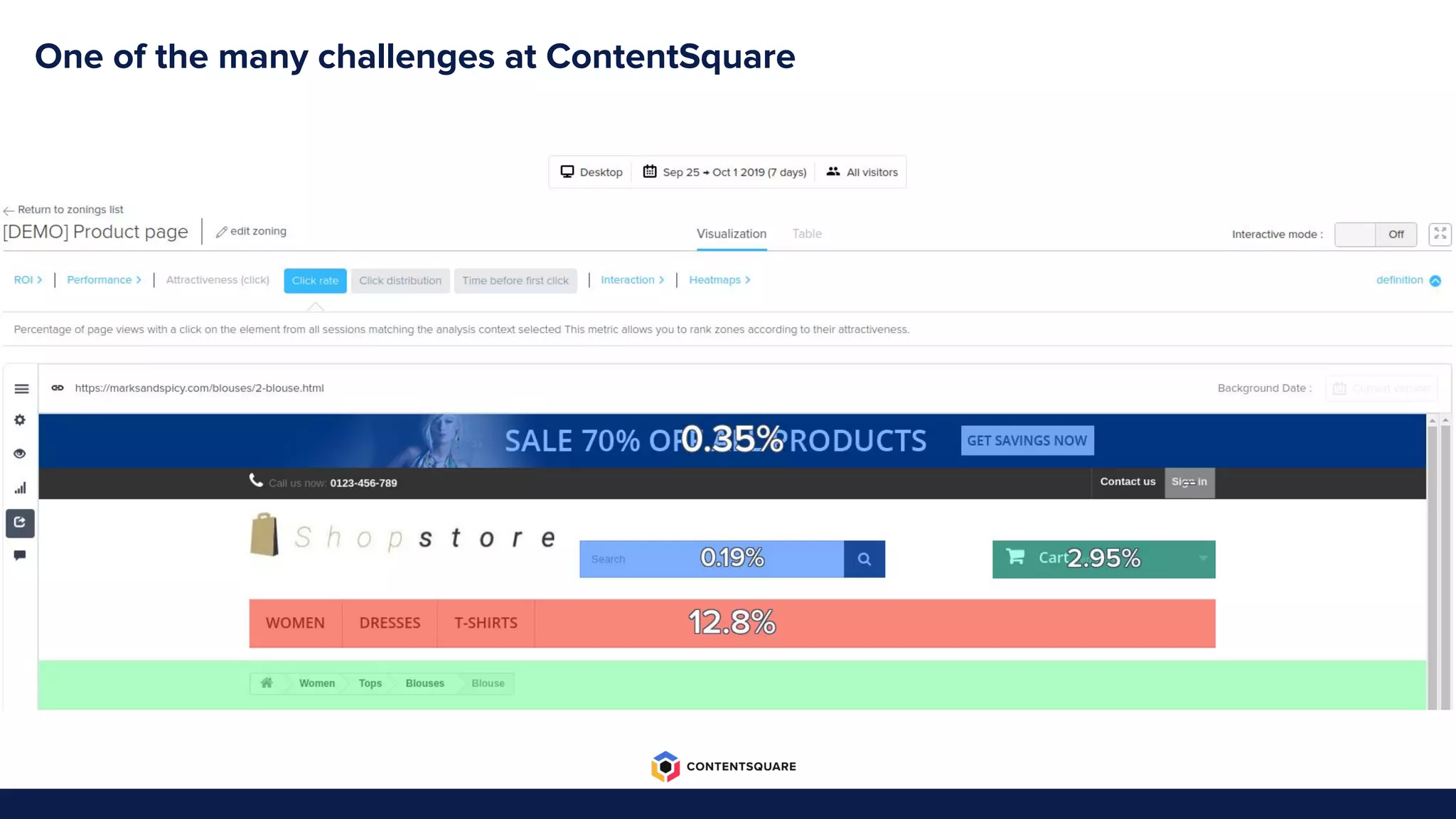

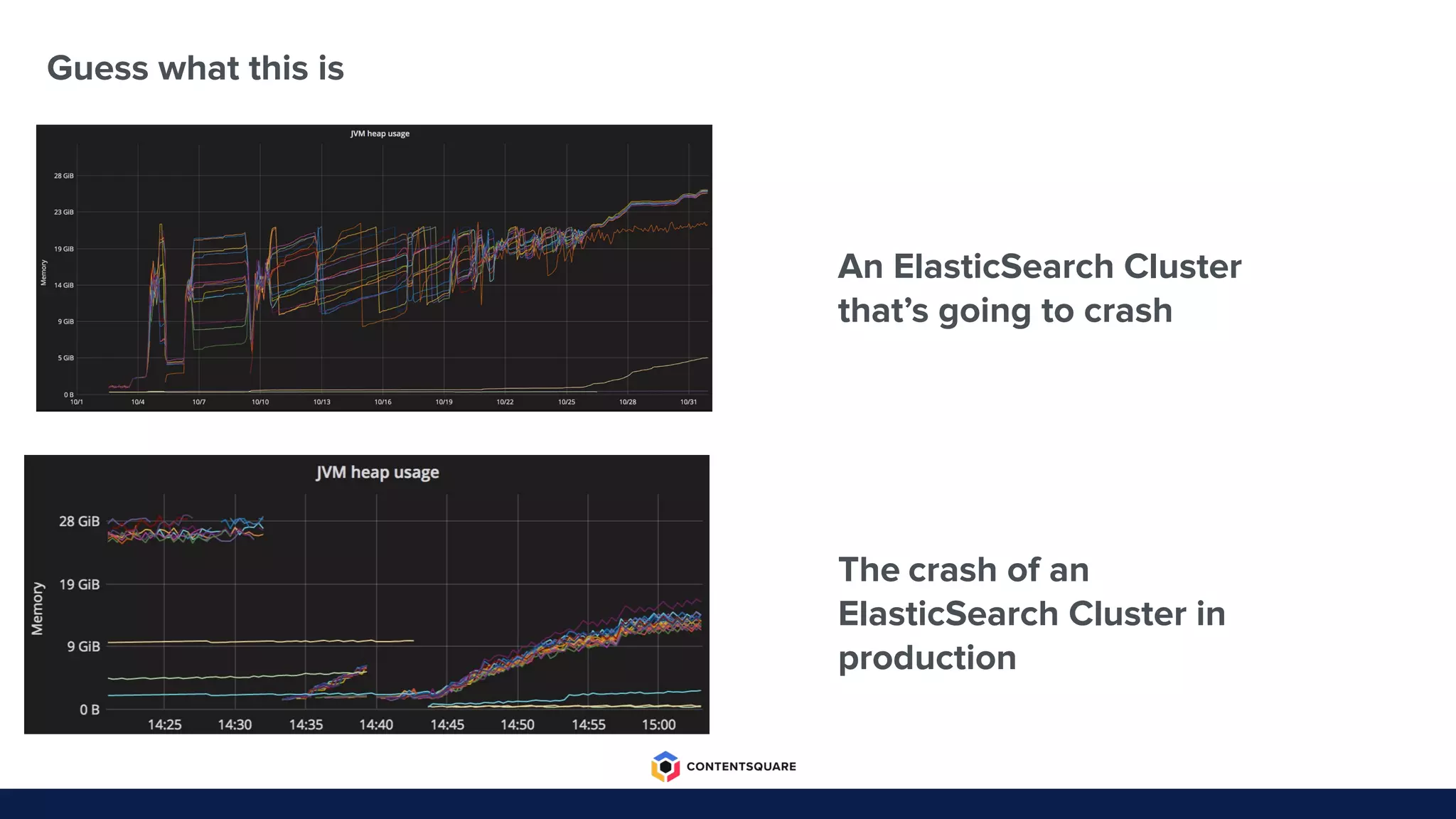

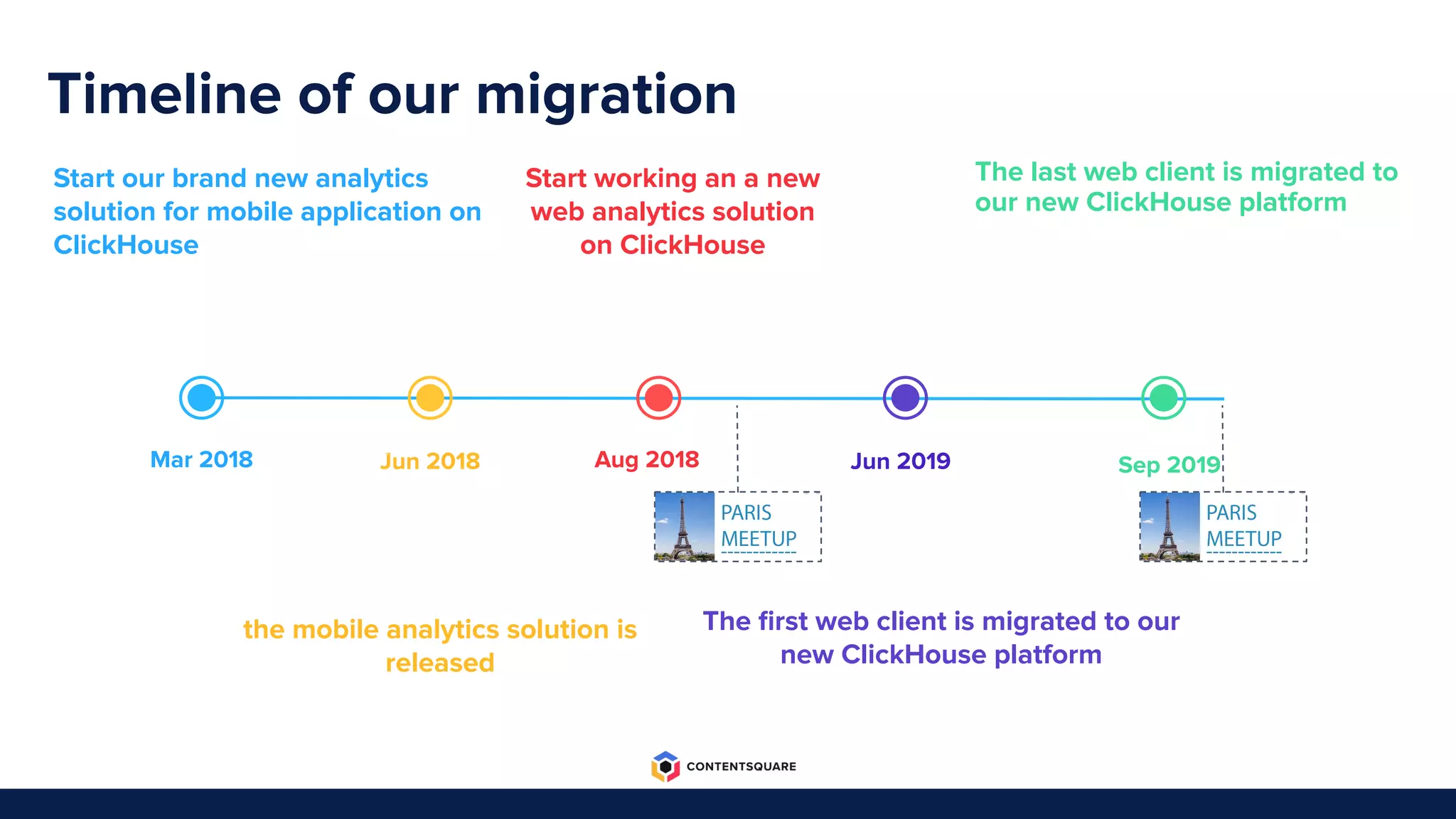

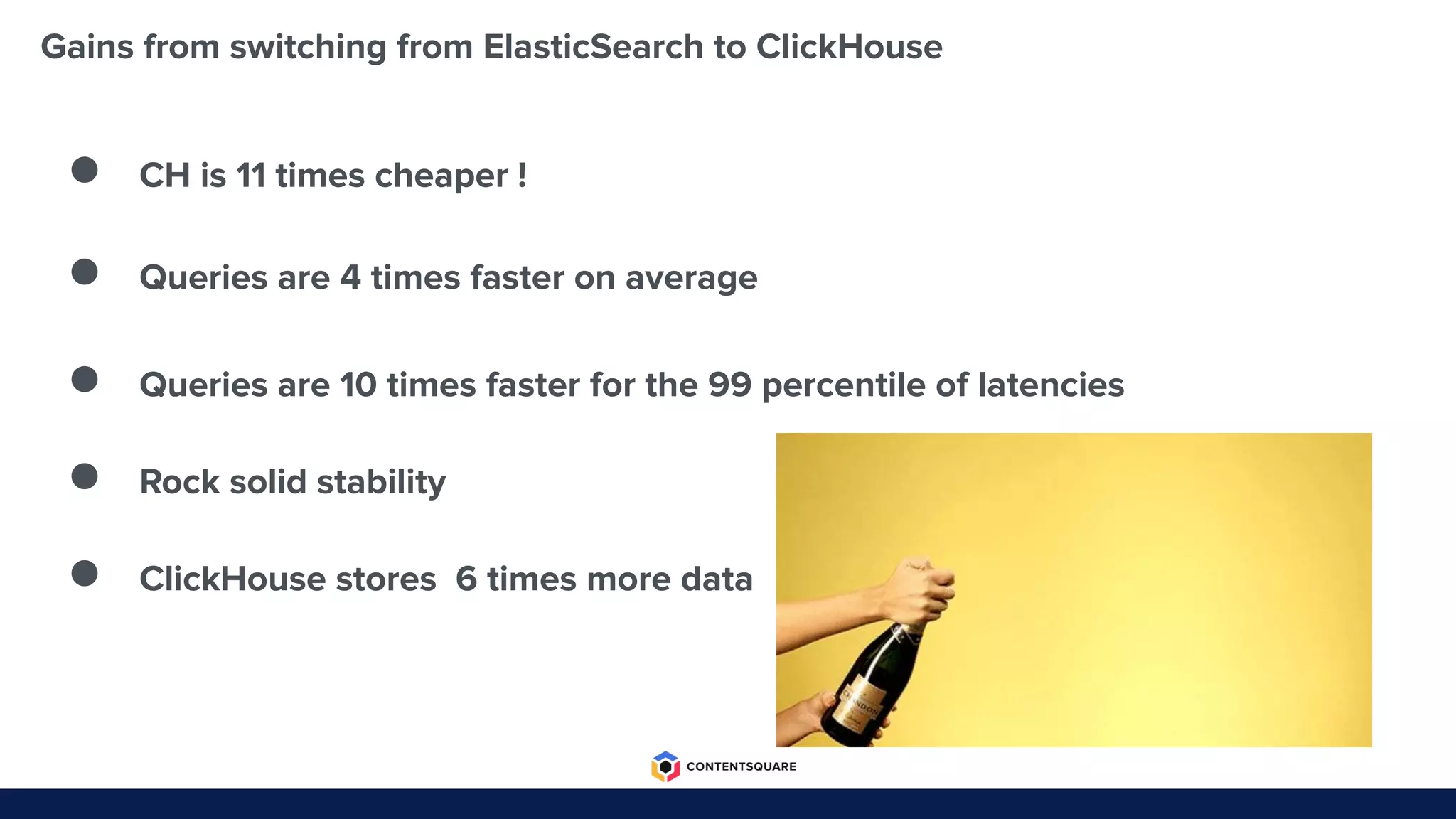

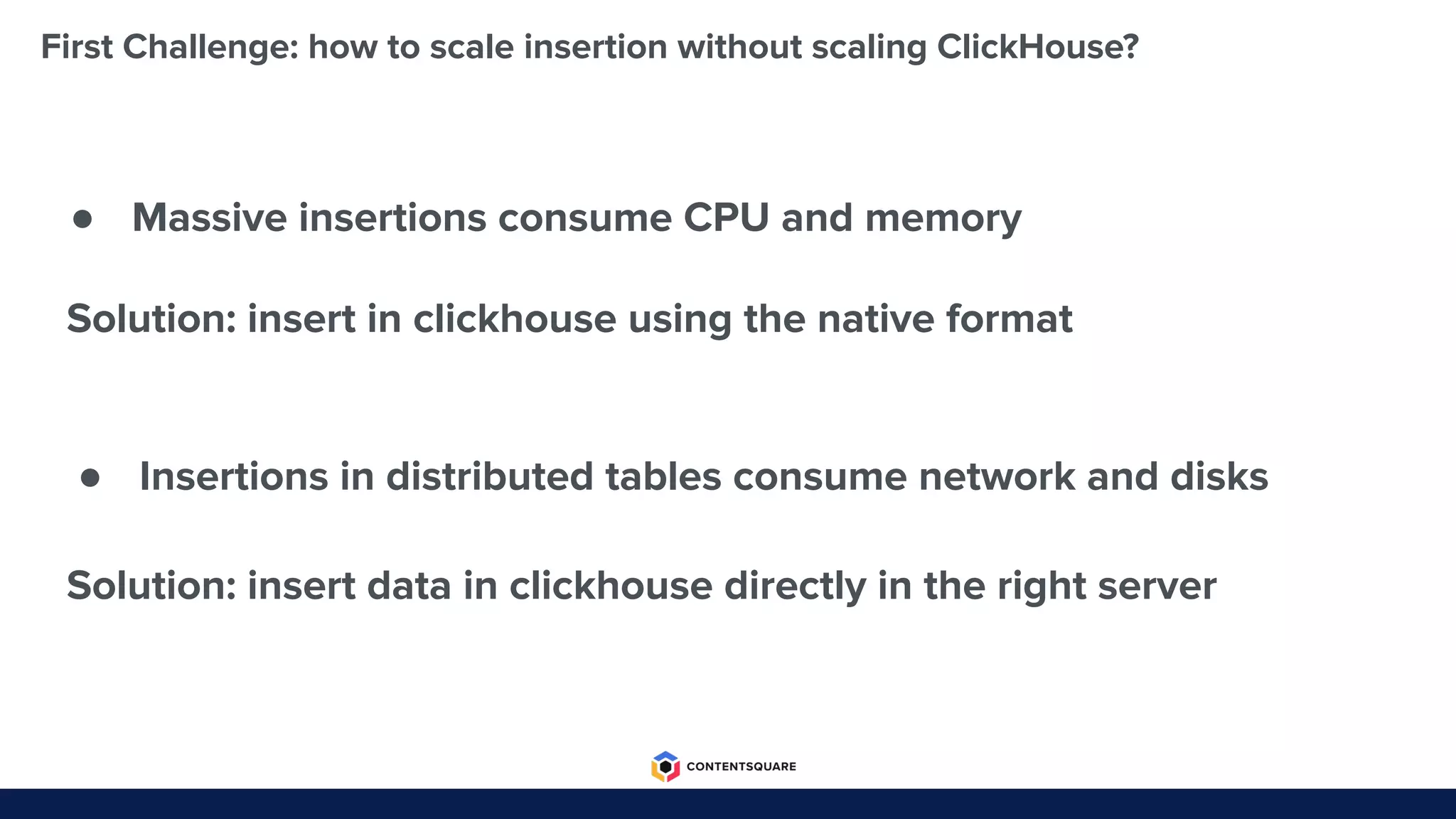

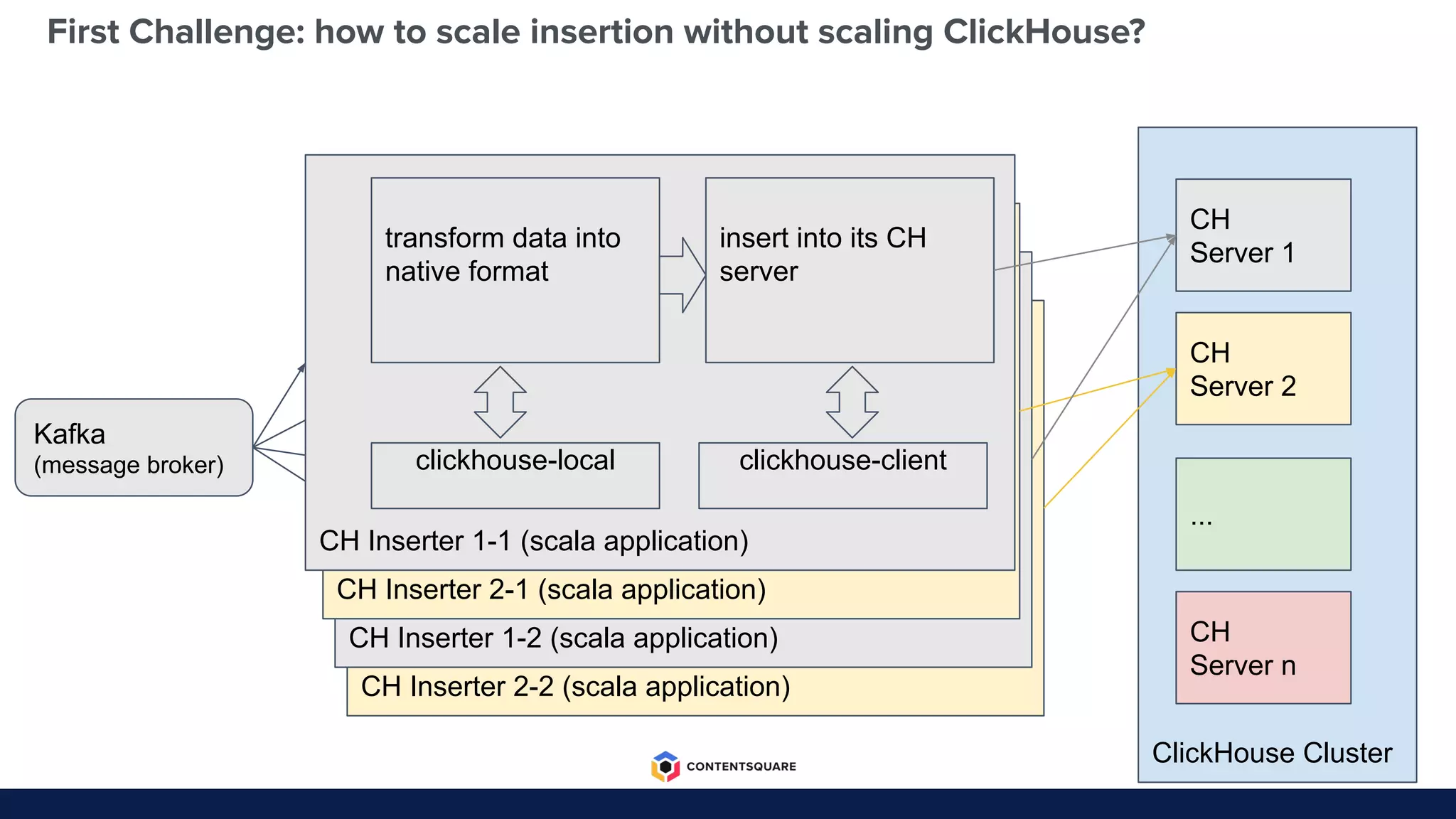

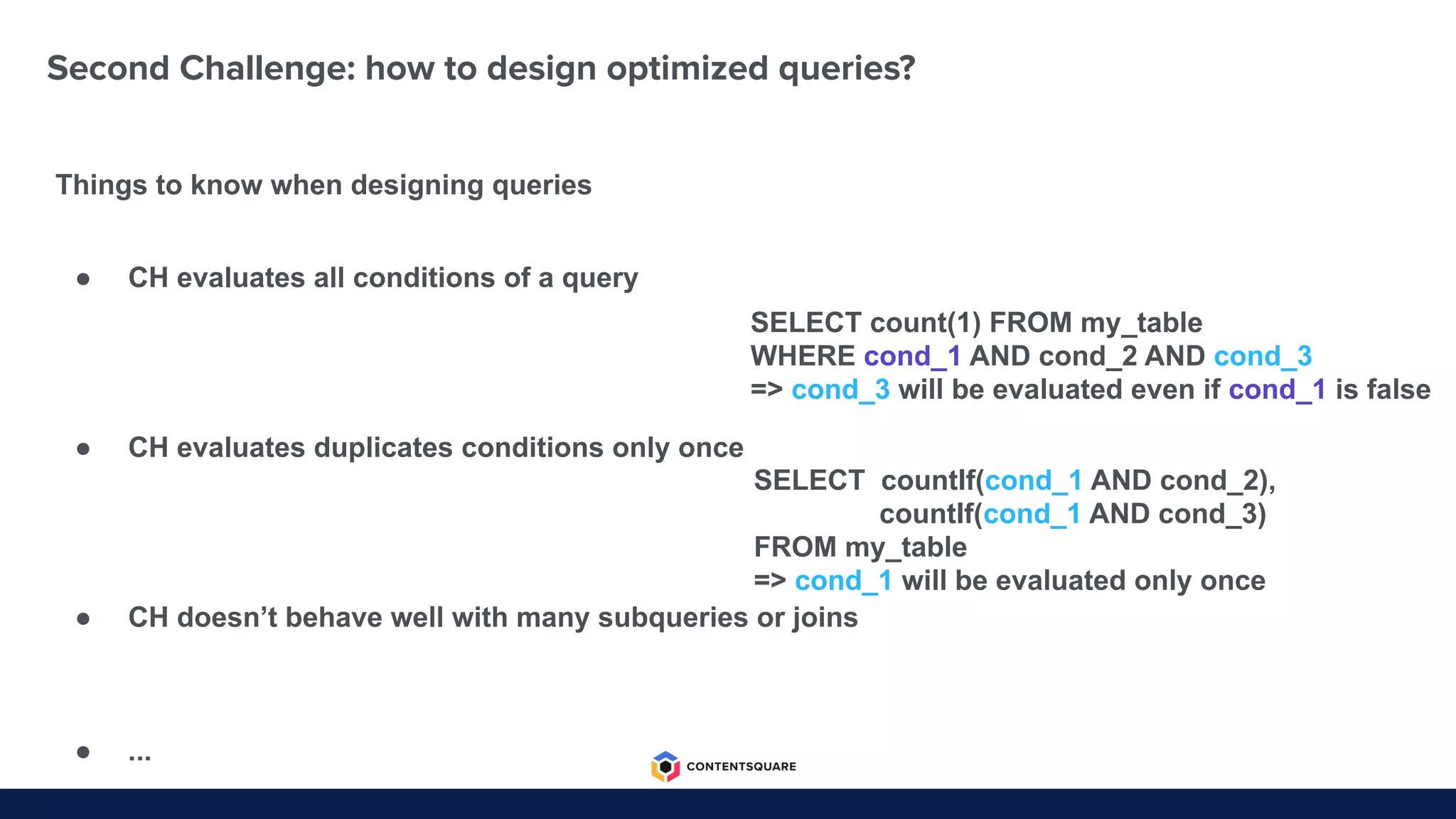

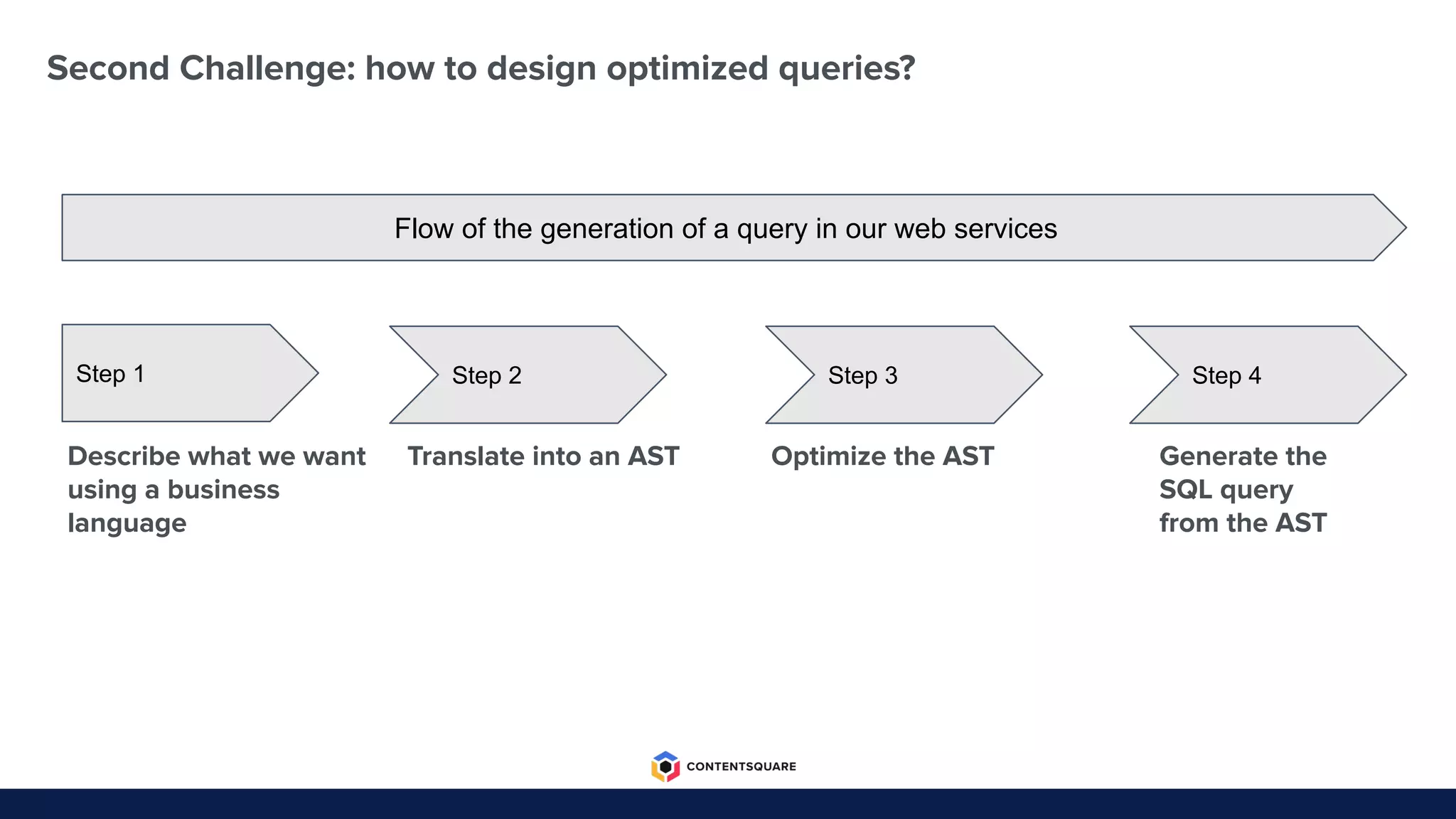

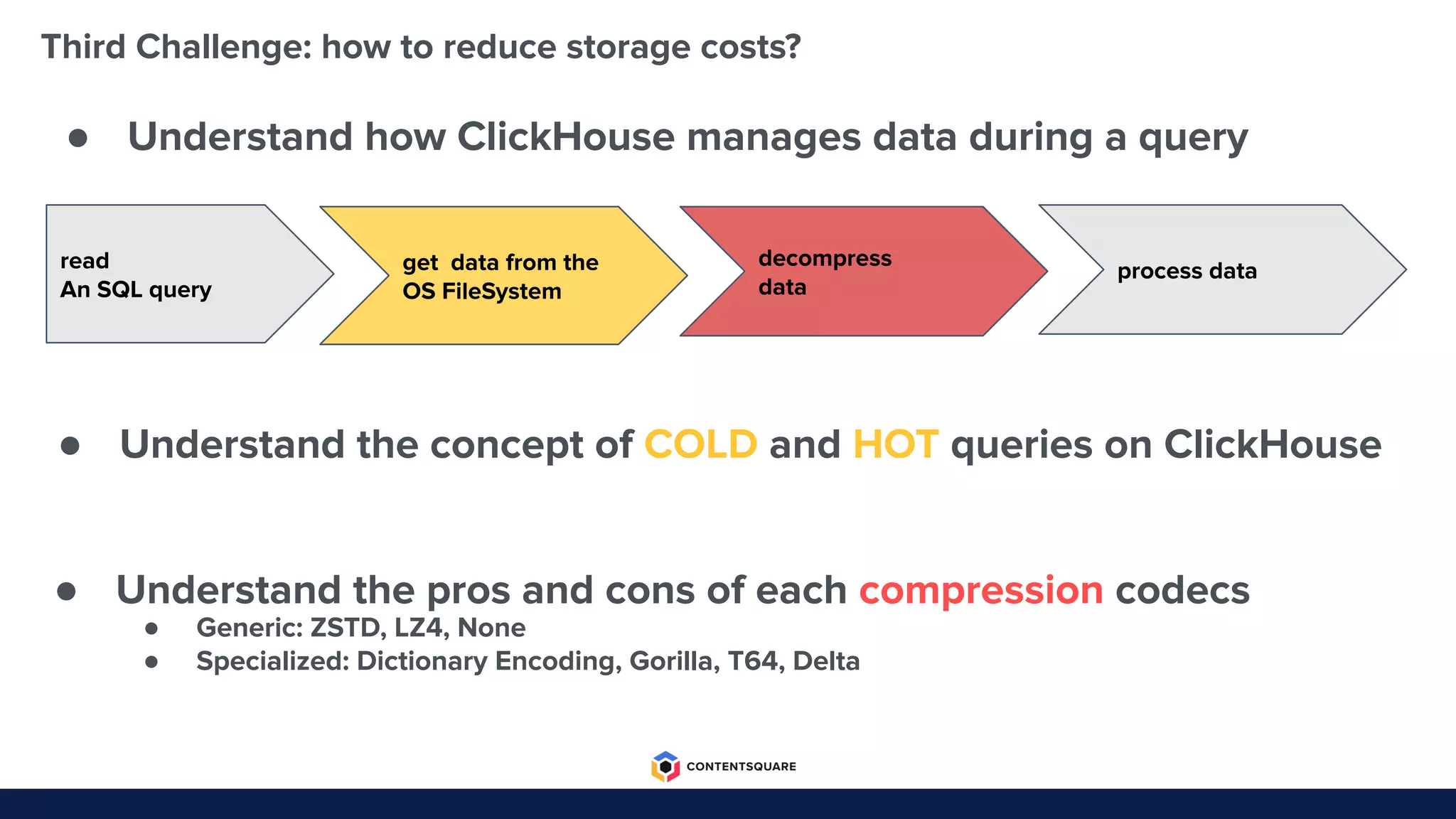

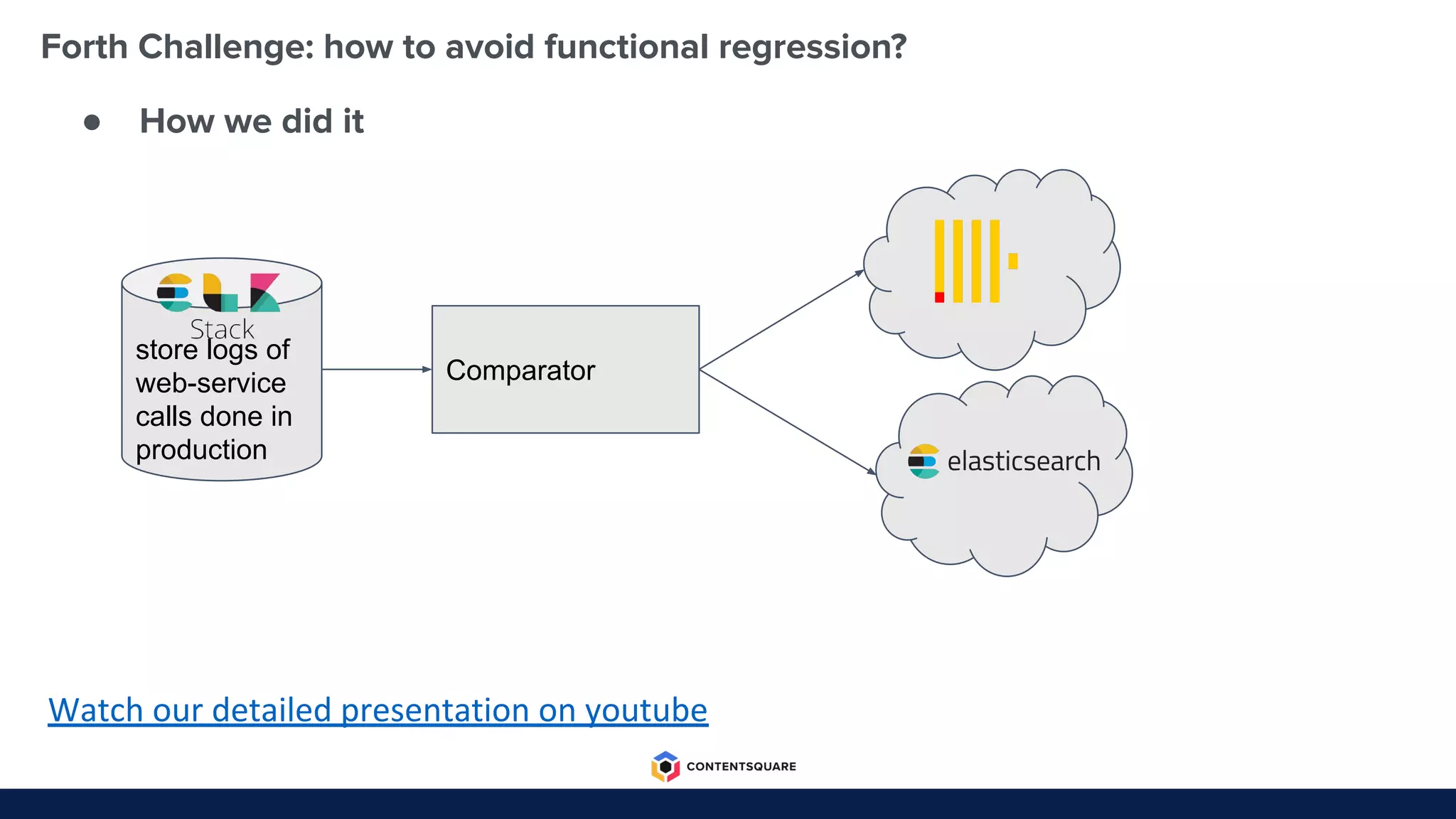

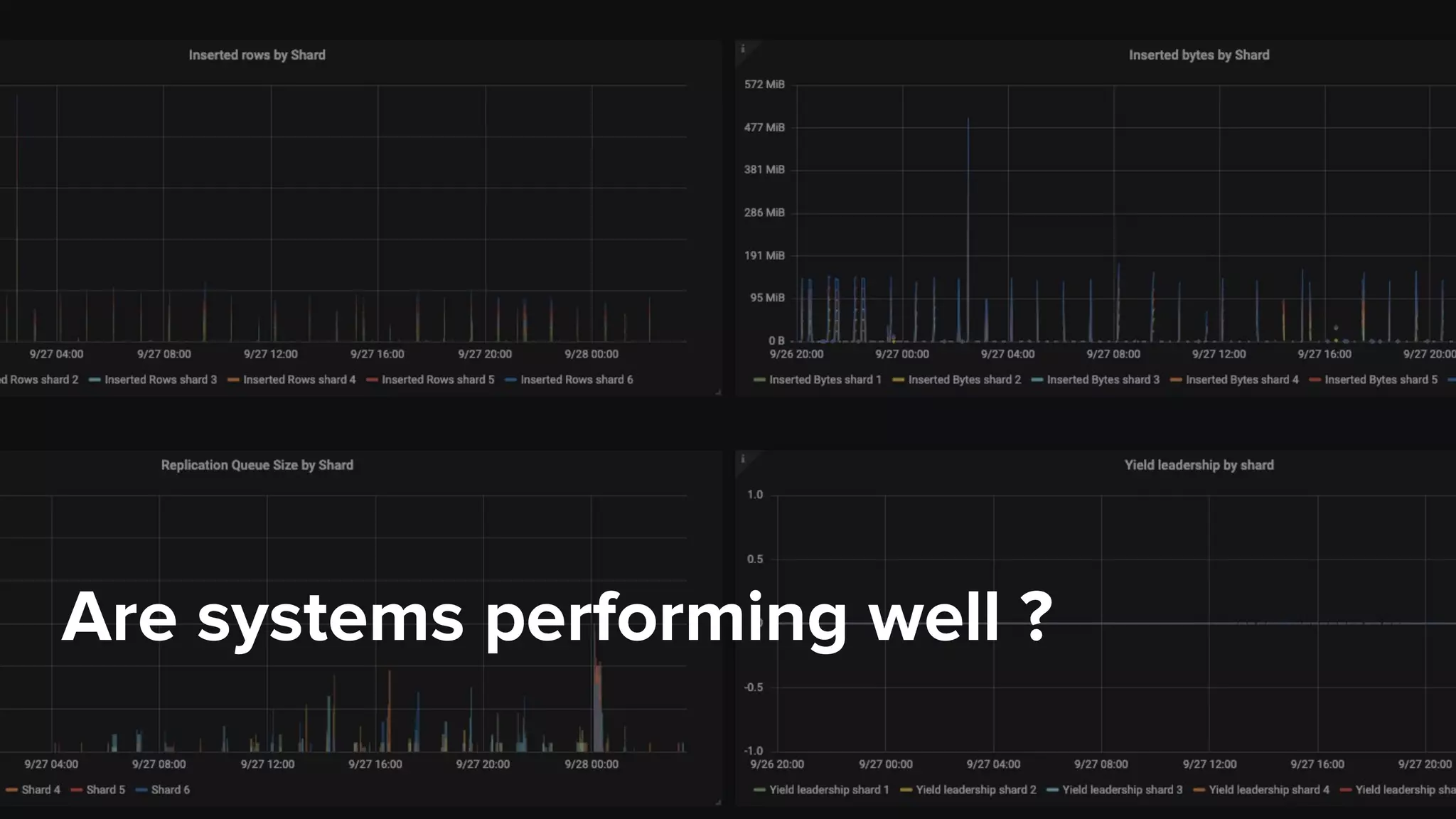

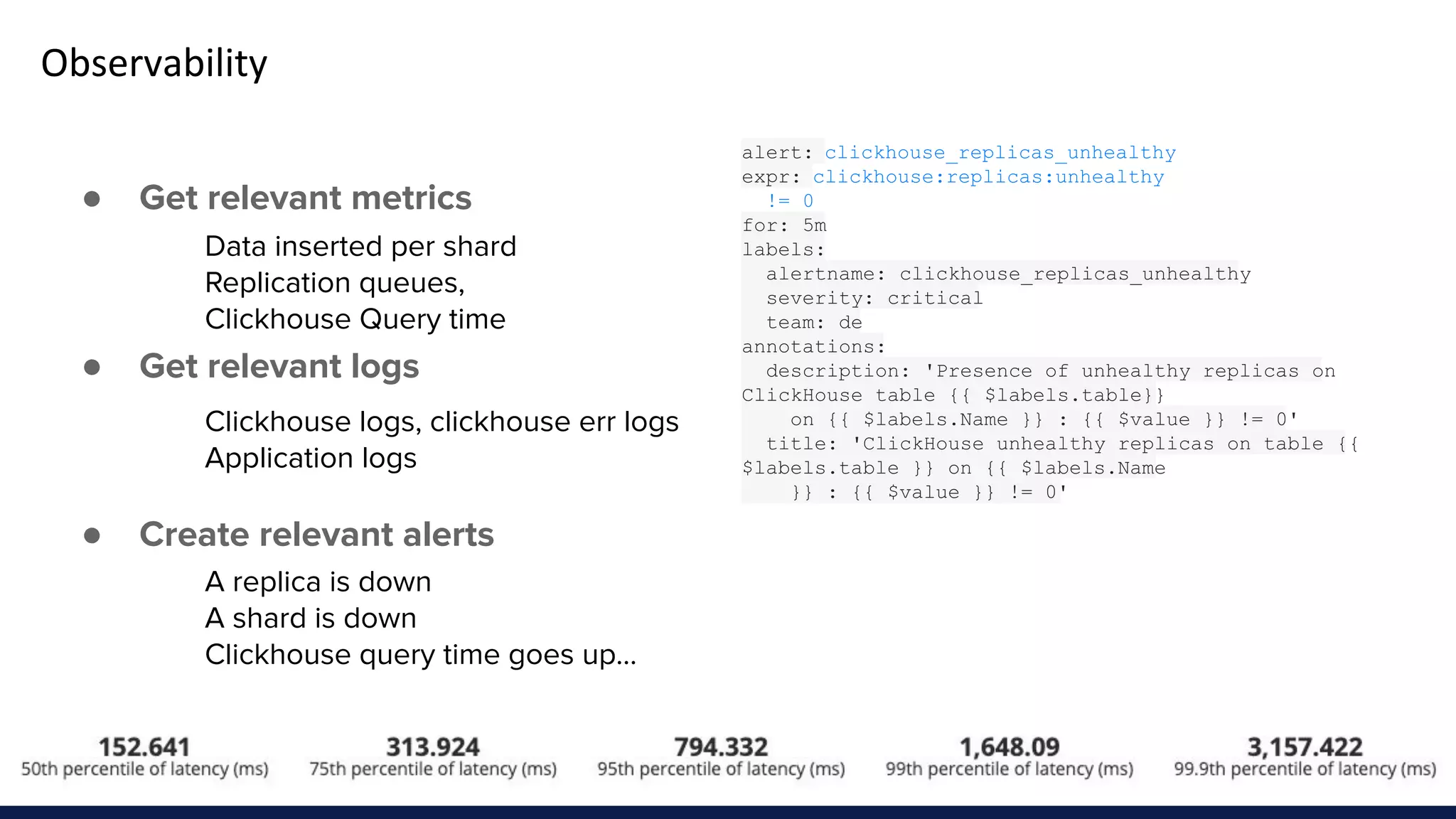

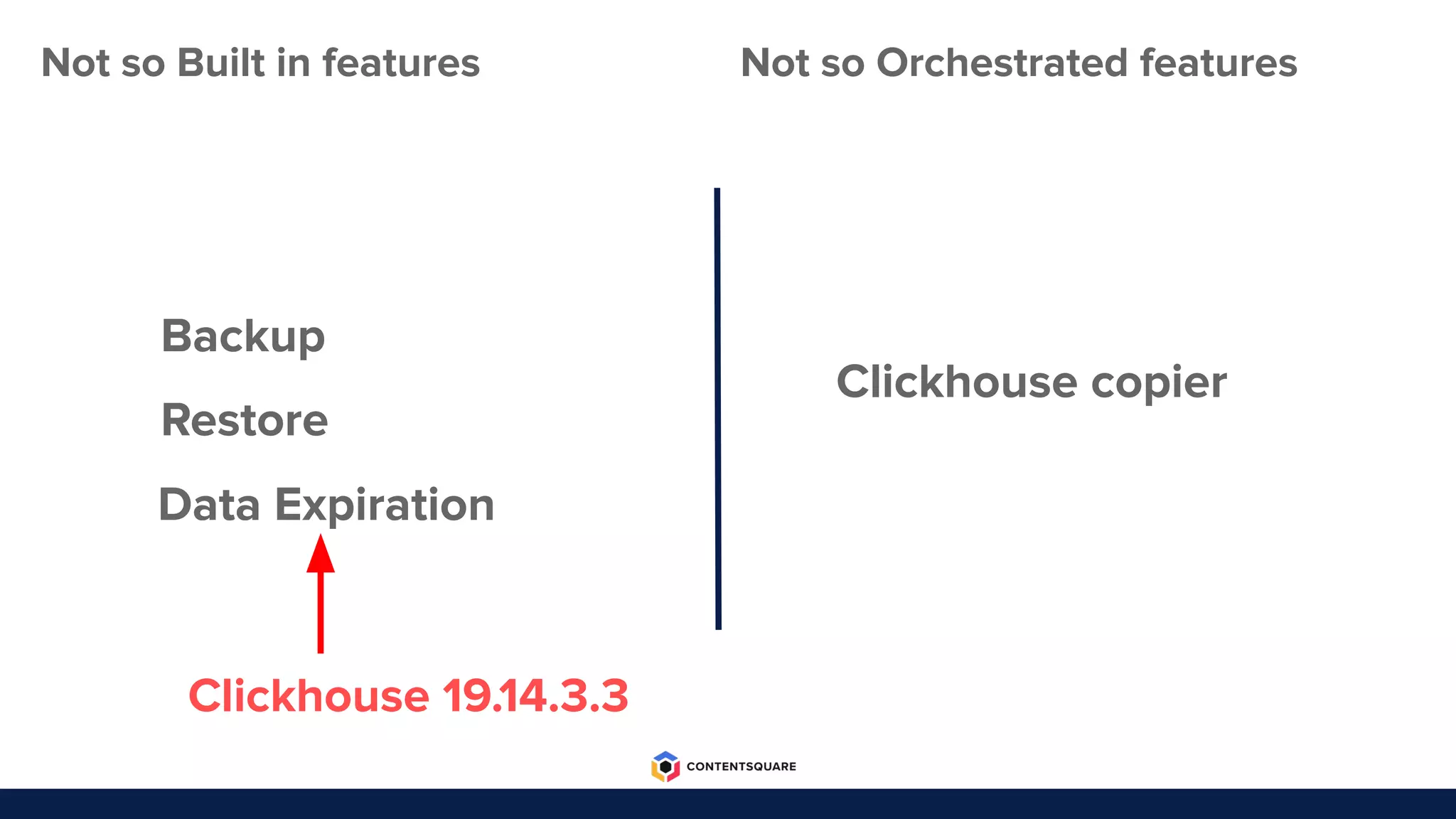

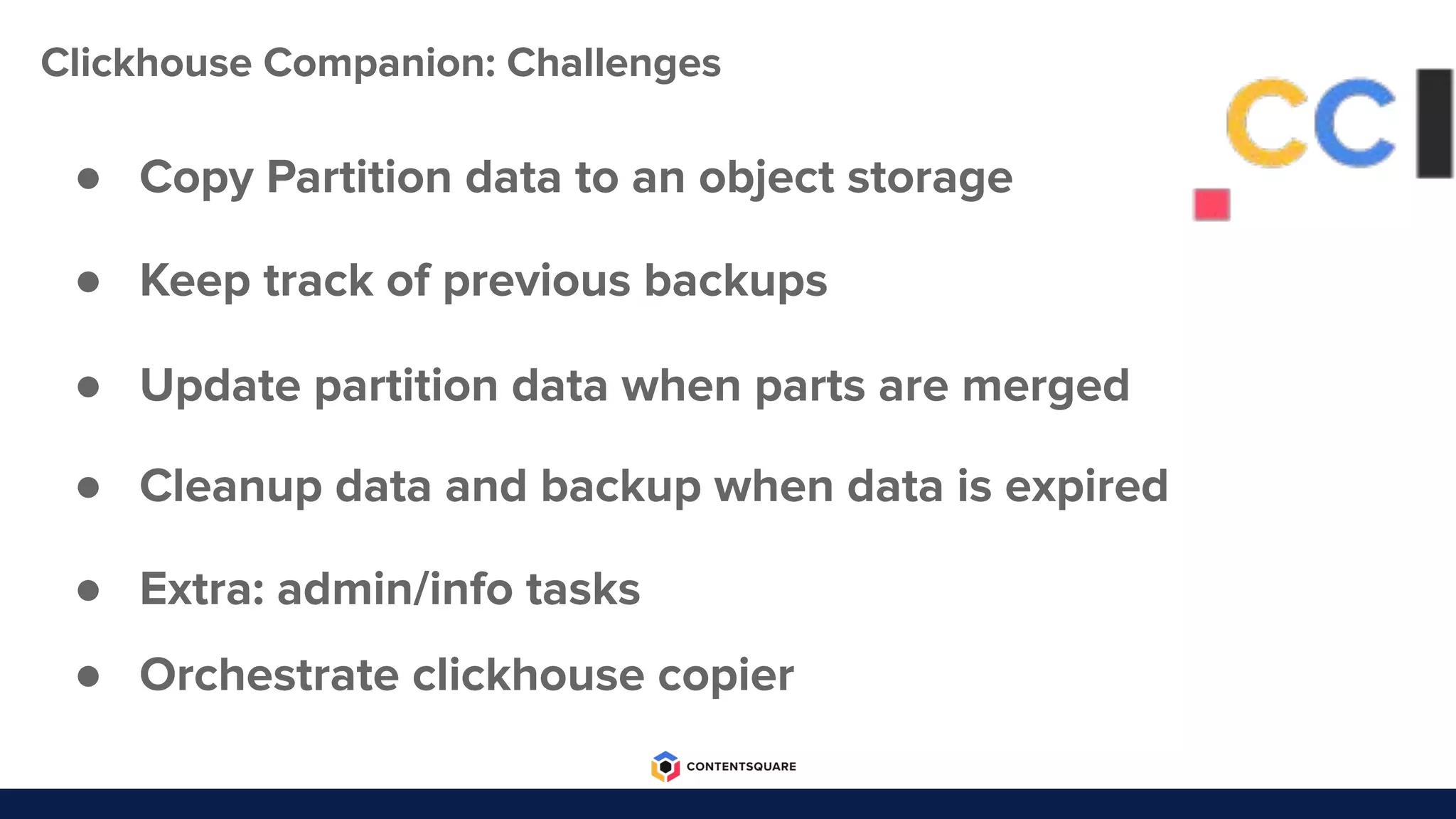

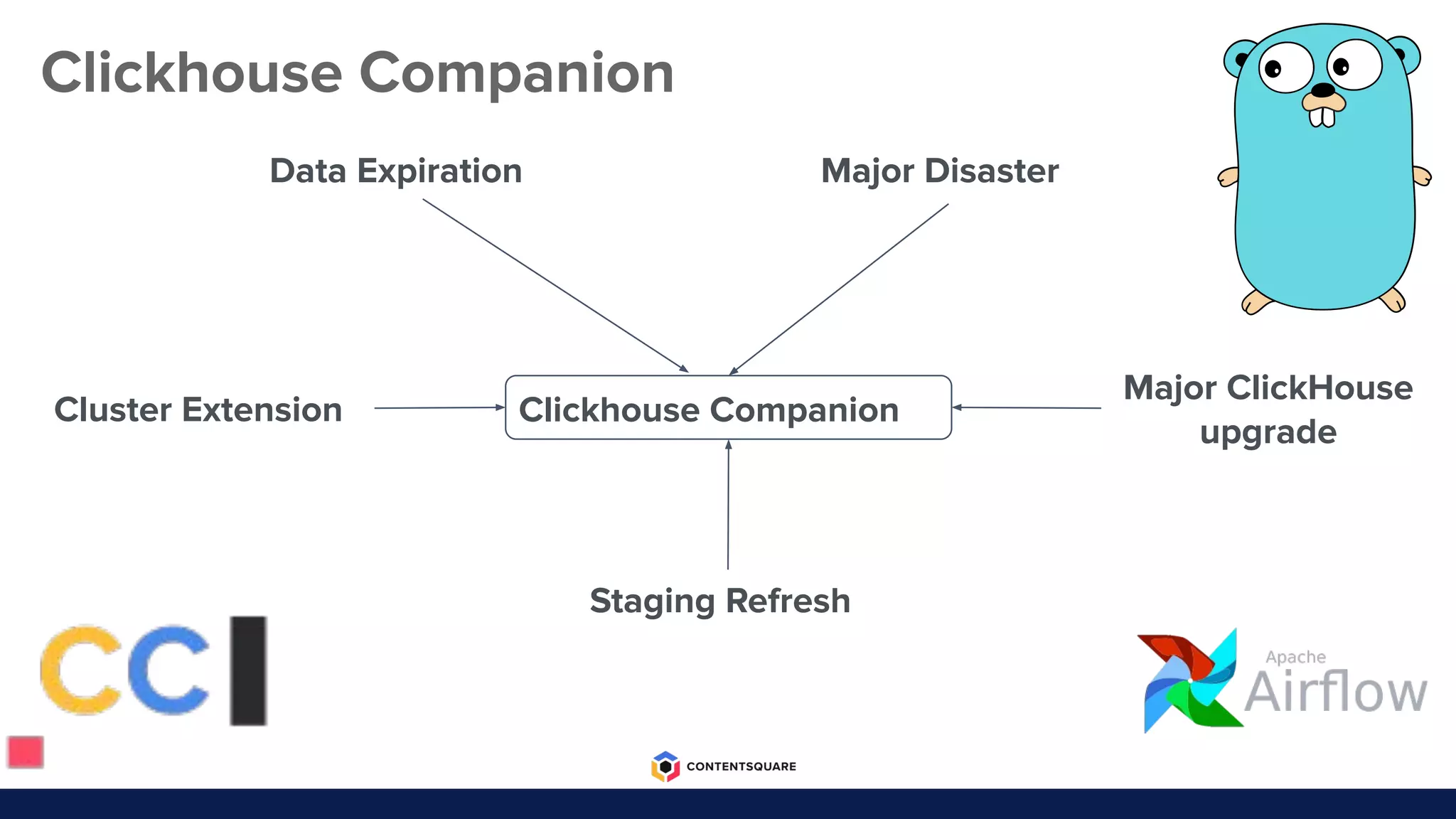

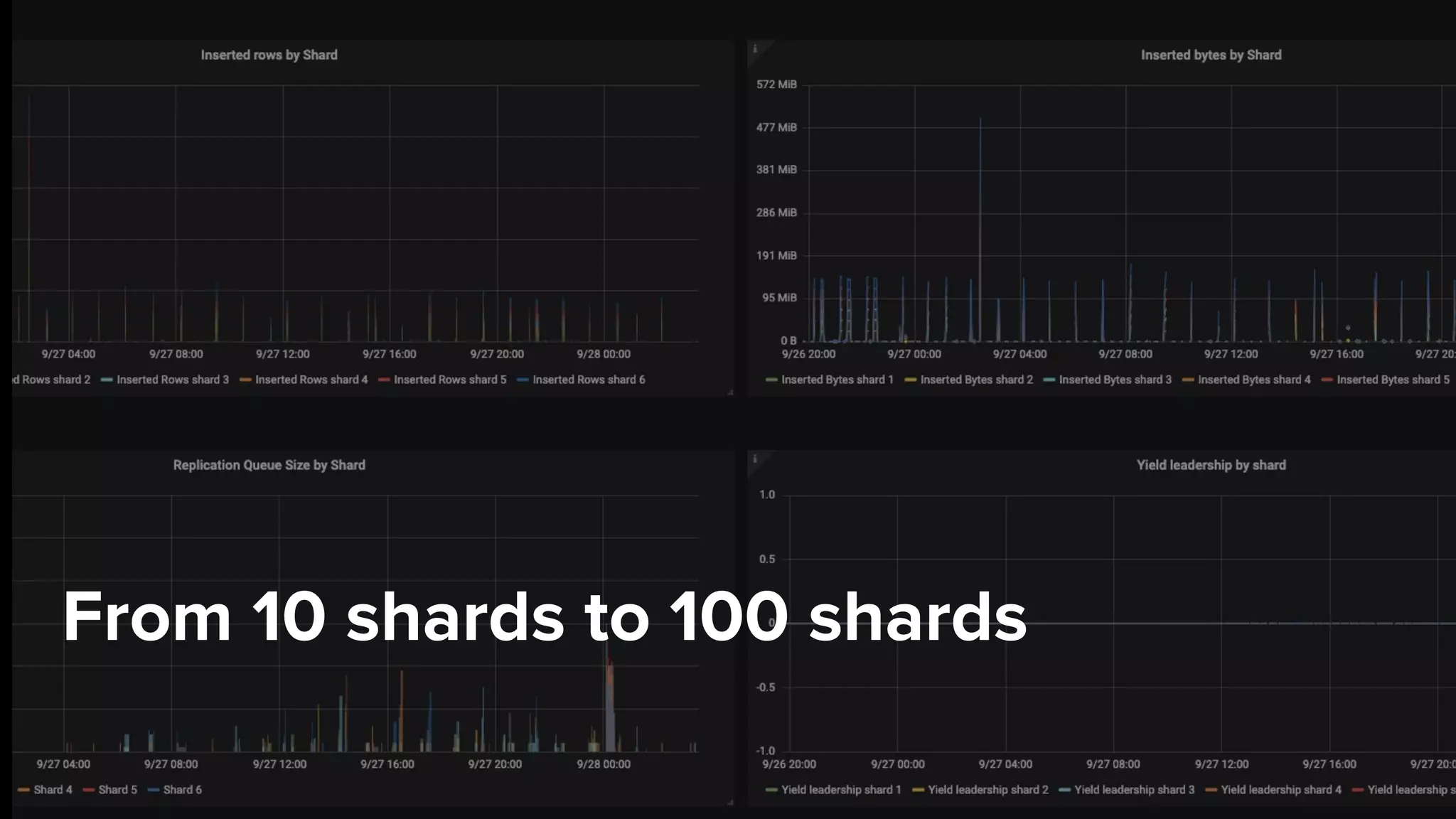

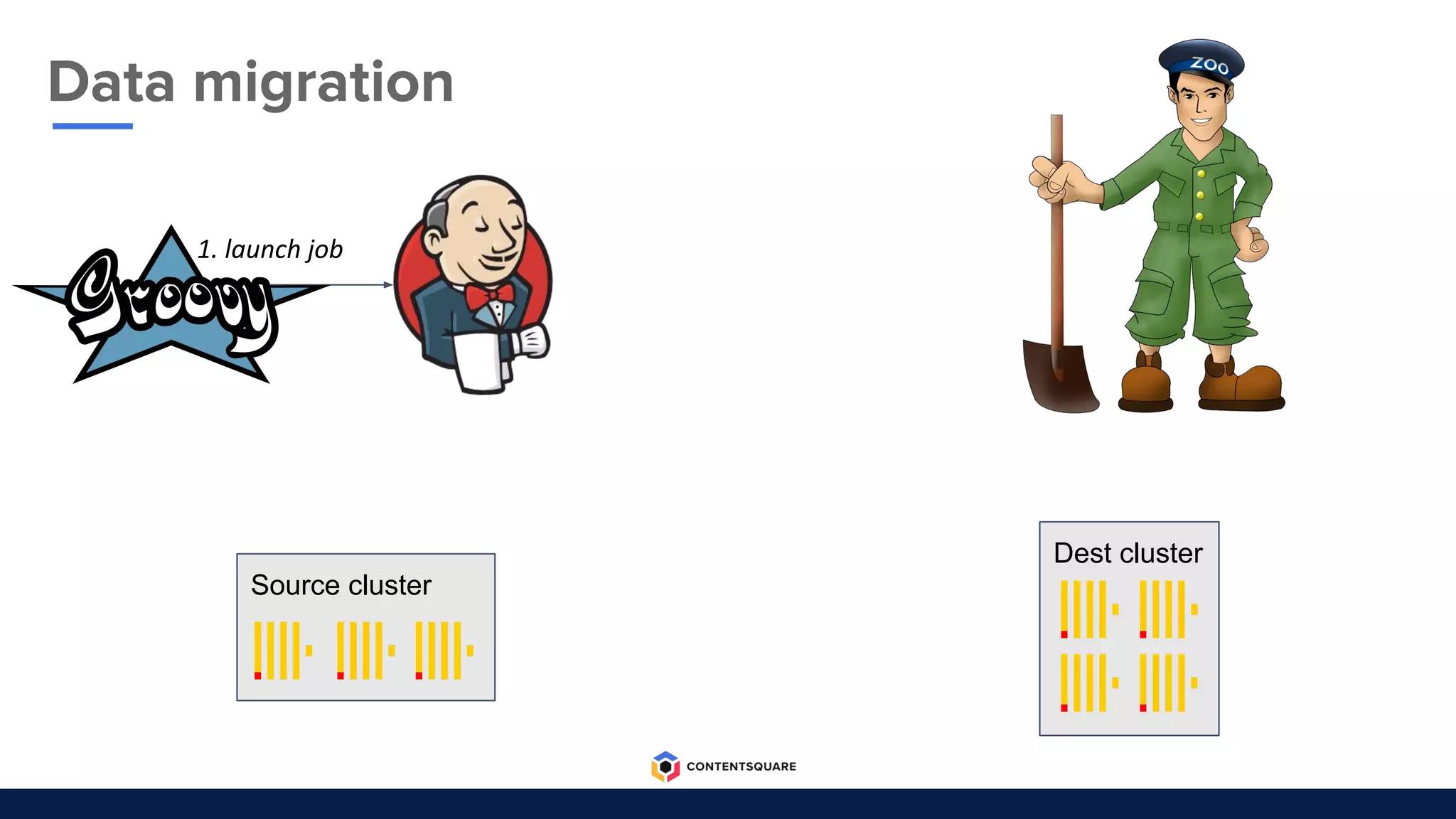

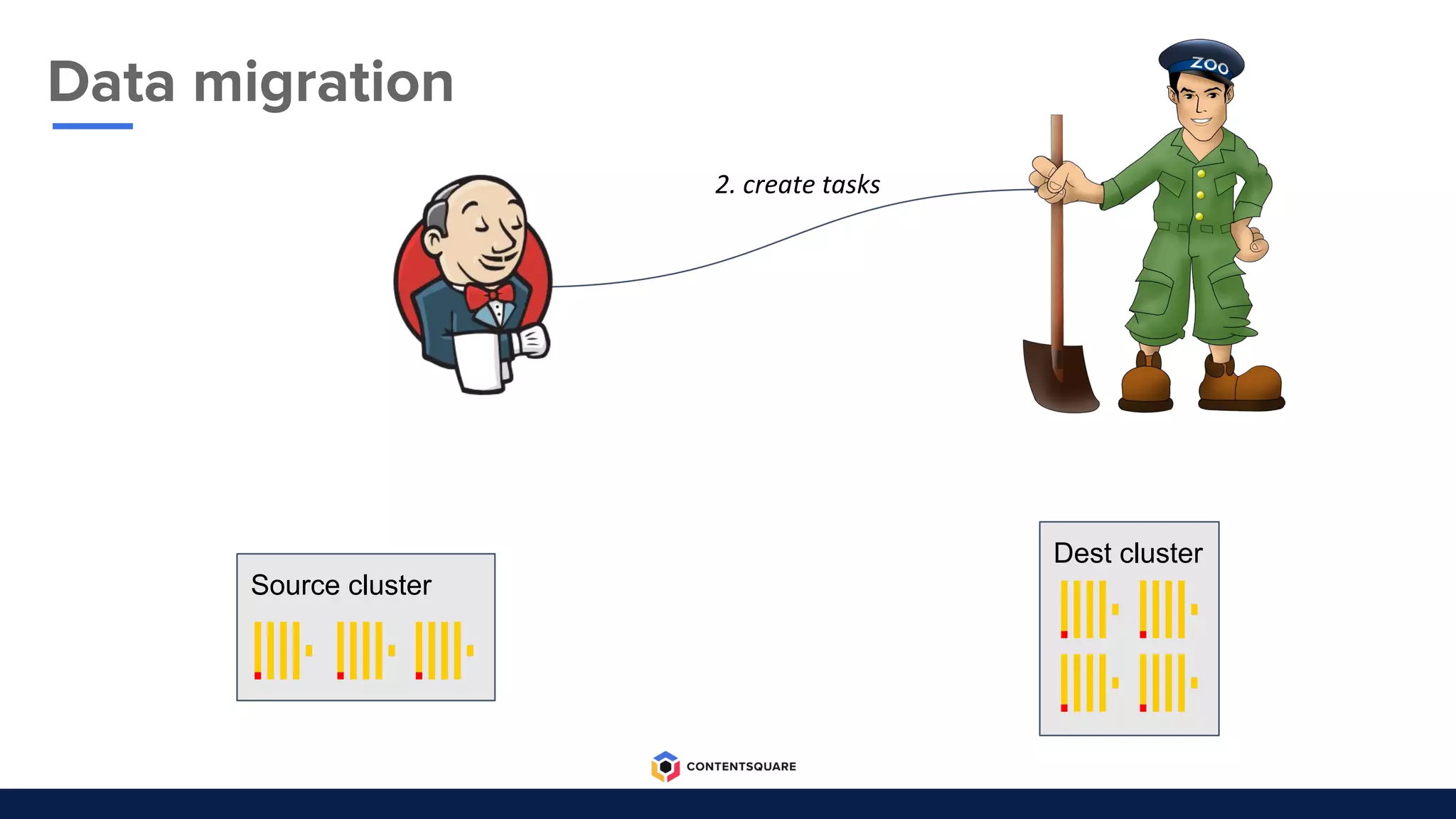

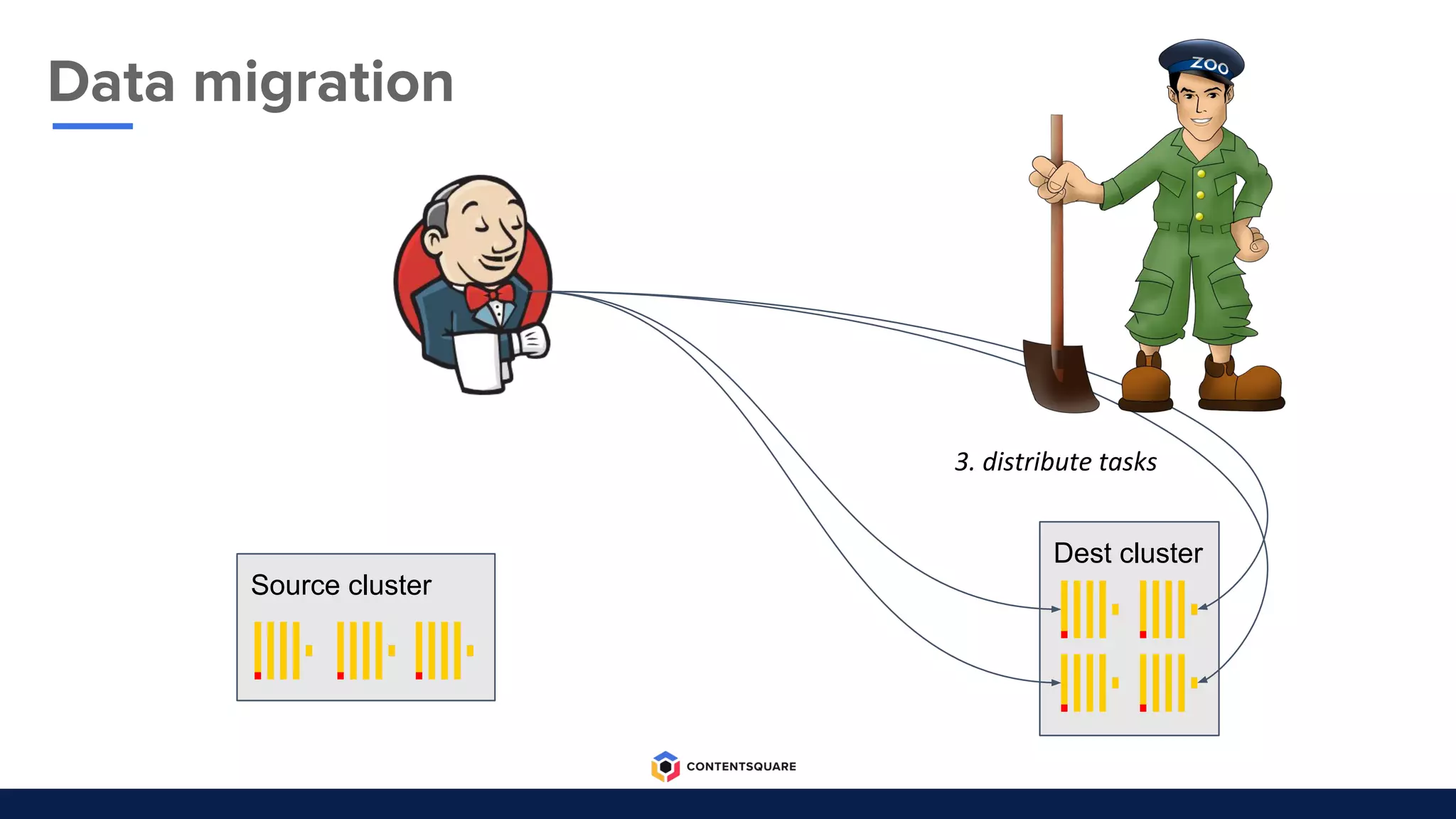

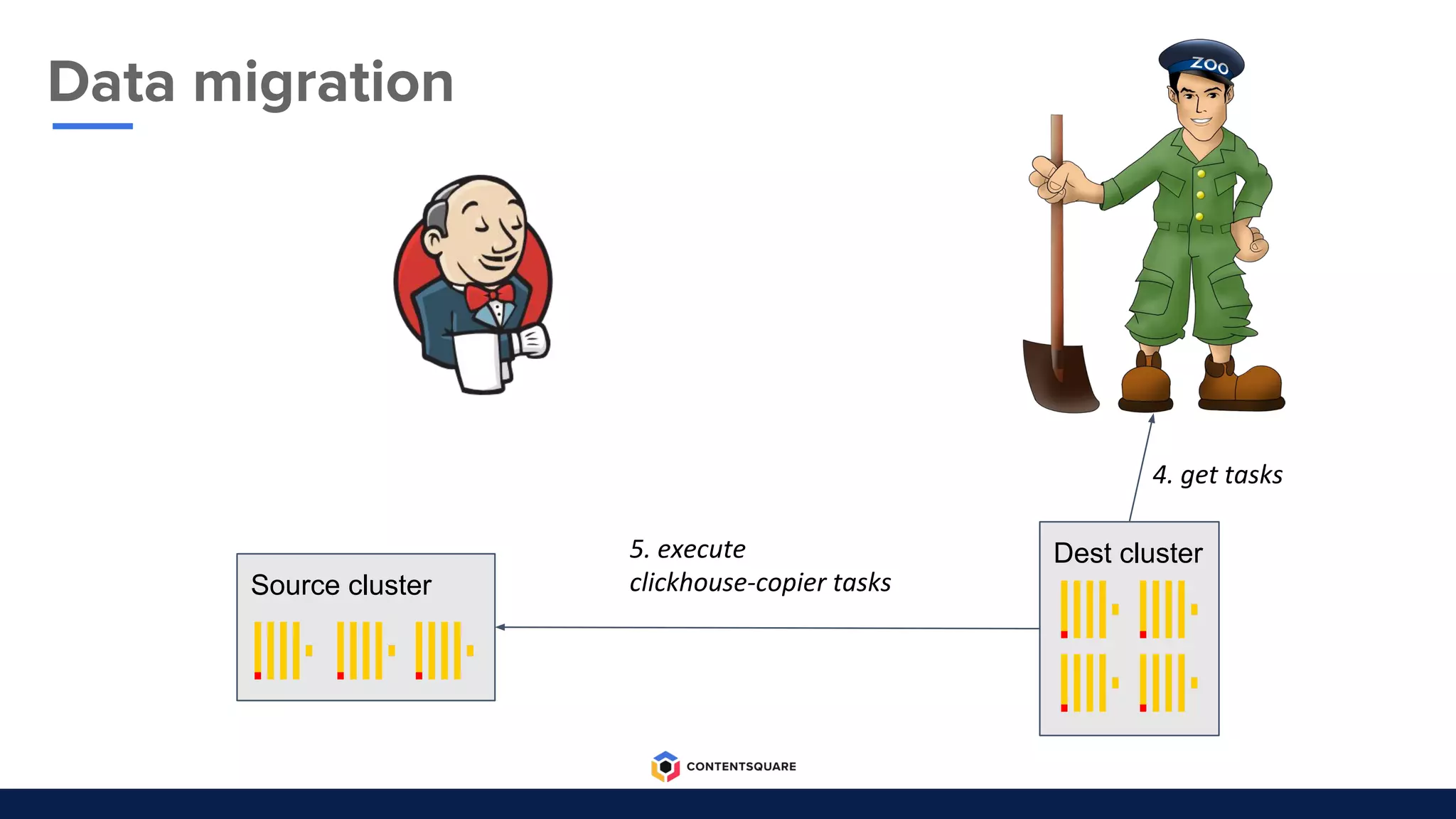

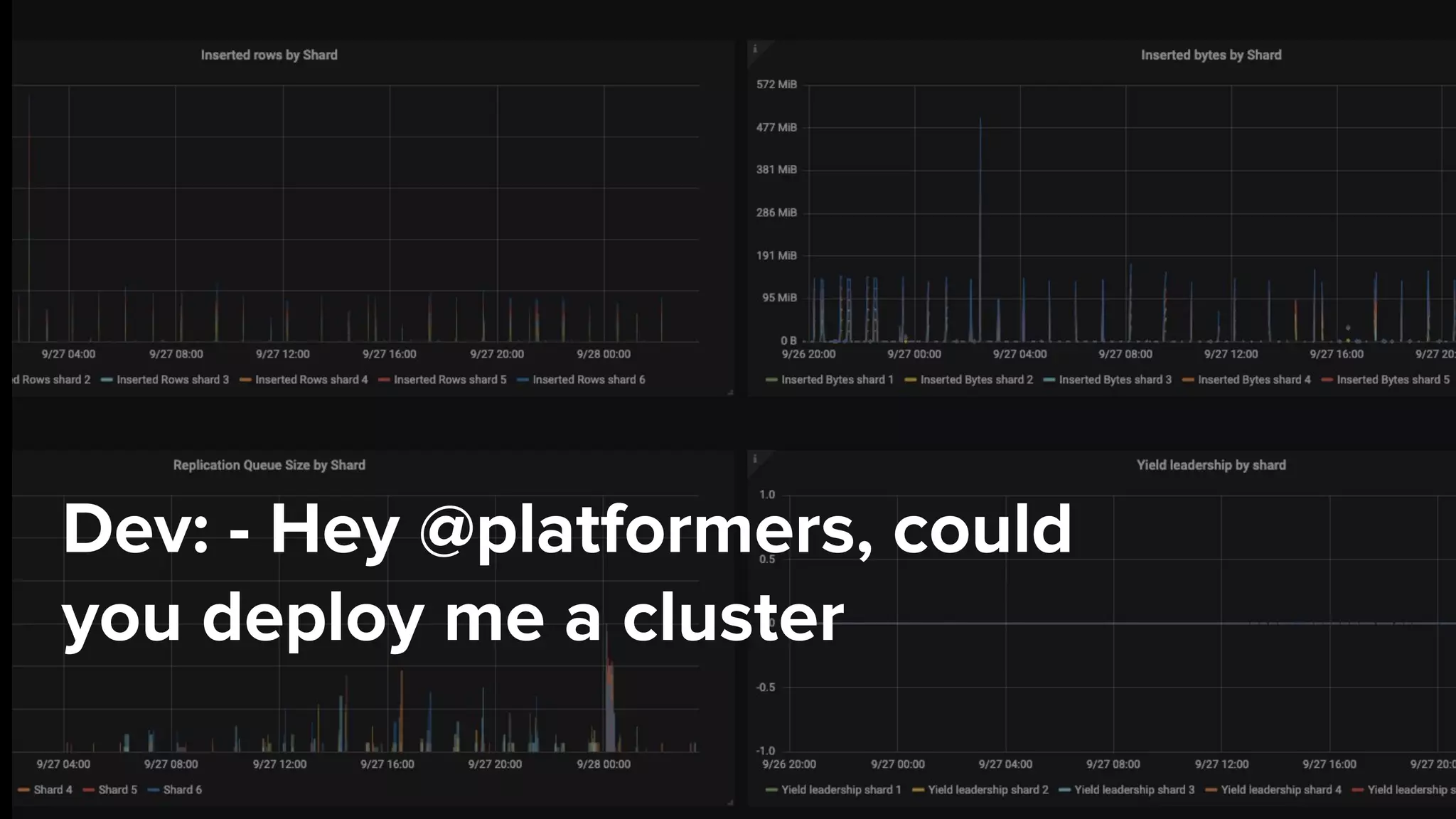

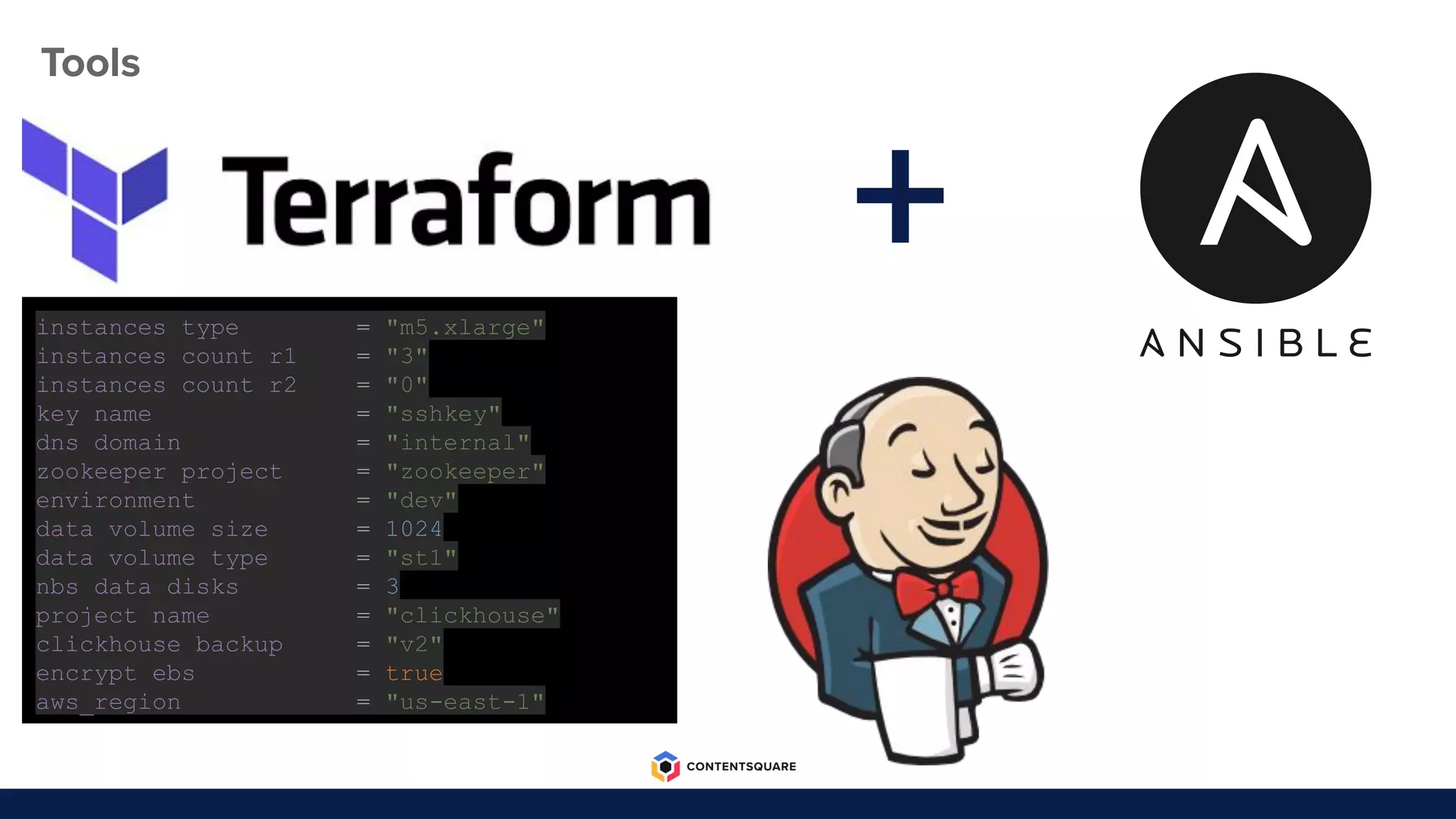

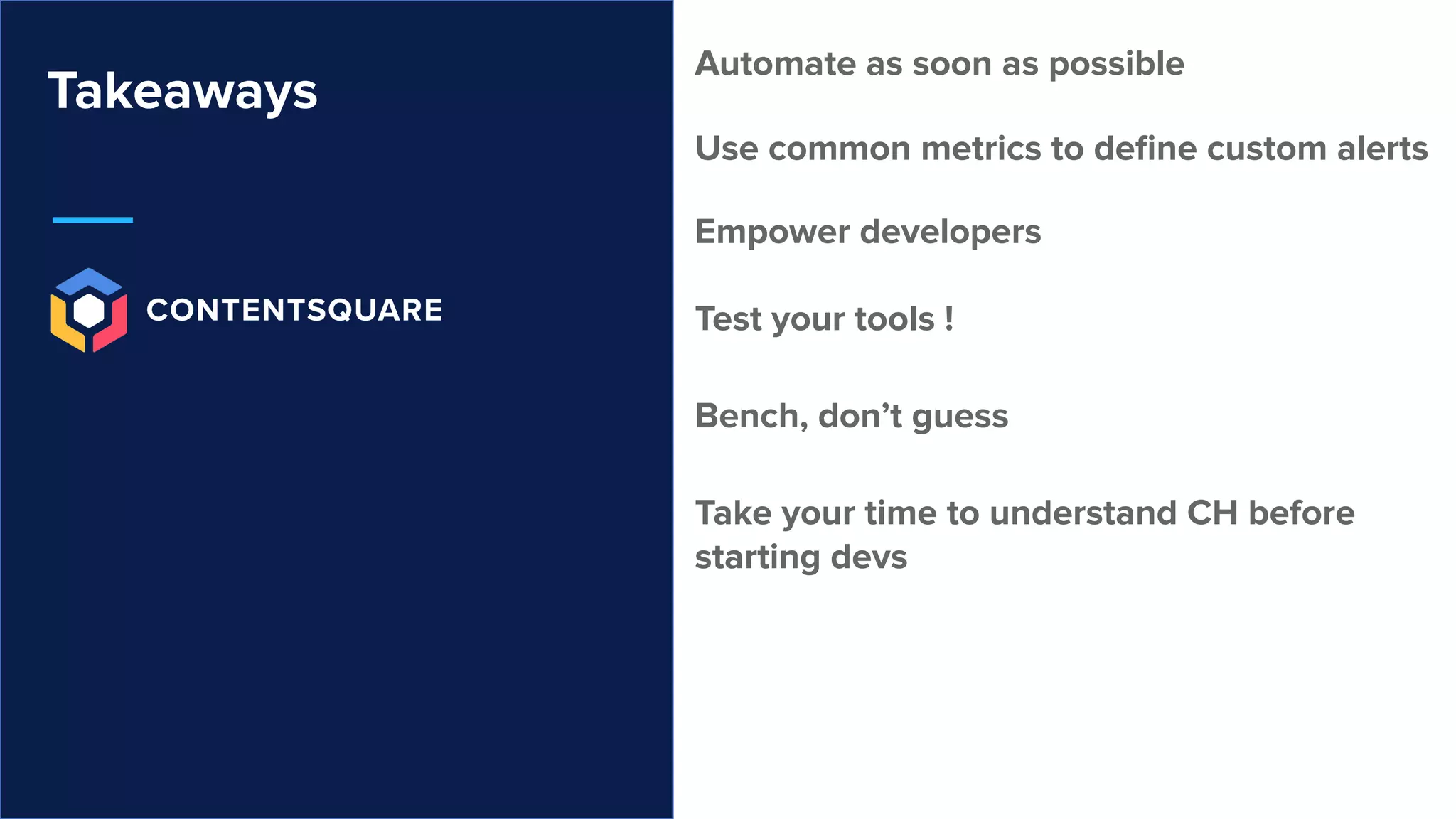

The document outlines Contentsquare's migration from Elasticsearch to ClickHouse: the reasons for the switch, the key challenges faced, and the benefits of ClickHouse, including cost efficiency and improved performance. It also covers the technical challenges encountered, such as optimizing query design, reducing storage costs, and ensuring system reliability after the migration. It concludes with takeaways that emphasize automation, metrics, and developer empowerment during the transition.