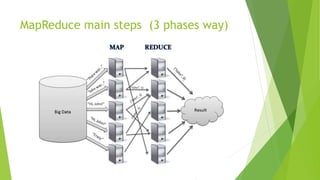

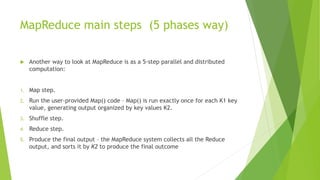

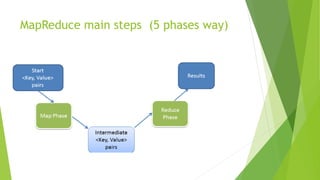

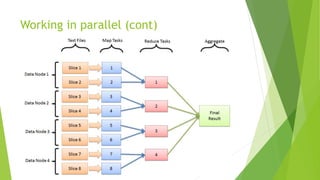

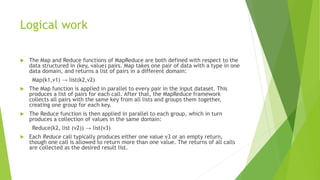

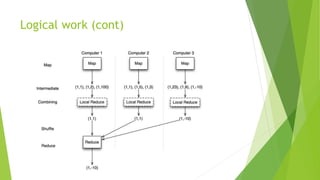

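MapReduce is a programming model for processing large datasets on a distributed cluster. A job consists of a map step, which filters and transforms input records into intermediate key-value pairs, and a reduce step, which performs a summary operation (such as counting or summing) over the values collected for each key. Hadoop is an open-source framework that implements MapReduce: it orchestrates tasks across distributed servers and manages communication and fault tolerance. The main steps are mapping the input data, shuffling the intermediate data between nodes so that all values for a given key reach the same reducer (this is also where the data is sorted by key), and reducing the shuffled data.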
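
The three steps can be illustrated with a small, single-process word count. This is only a sketch of the model, not Hadoop's API: the function names `map_phase`, `shuffle_phase`, `reduce_phase`, and `word_count` are hypothetical, and everything runs in memory on one machine, whereas a real framework would distribute each phase across nodes and handle failures.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(documents: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Map: emit an intermediate (word, 1) pair for every word in the input."""
    for doc in documents:
        for word in doc.lower().split():
            yield (word, 1)


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all values that share a key.

    In a cluster this is where data moves between nodes; here it is
    simulated with an in-memory dictionary (an assumption of this sketch).
    """
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Reduce: summarize each key's values, here by summing the counts."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(documents: Iterable[str]) -> Dict[str, int]:
    """Run the three phases in order: map, shuffle, reduce."""
    return reduce_phase(shuffle_phase(map_phase(documents)))


if __name__ == "__main__":
    docs = ["the quick brown fox", "the lazy dog", "the fox"]
    print(word_count(docs))
```

Keeping the phases as separate functions mirrors the division of labor in the model: the map and reduce functions are what a user would typically write, while grouping by key is the part a framework such as Hadoop performs on the user's behalf.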