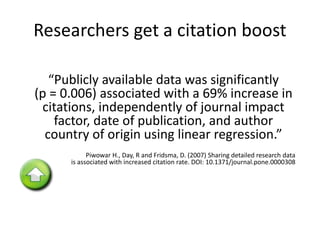

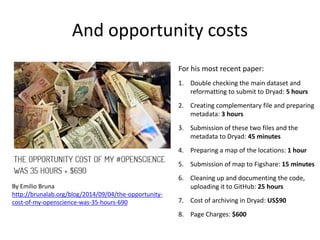

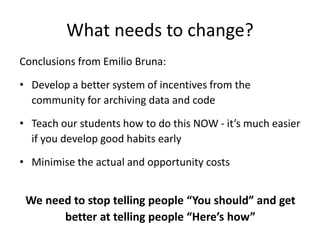

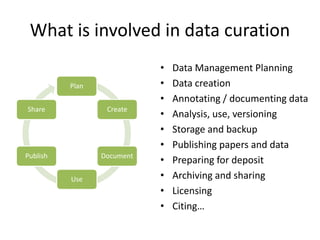

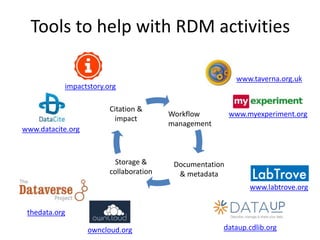

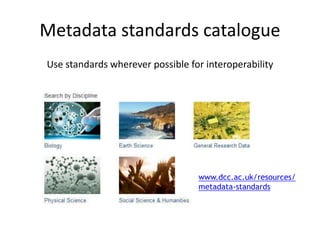

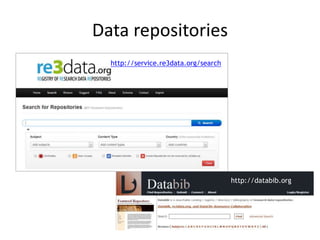

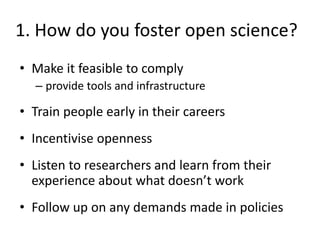

The document discusses the importance of managing and sharing research data, highlighting the European Research Council's commitment to open science. It outlines benefits of sharing data, such as increased citations and returns to institutions, and addresses barriers such as data ownership and sharing restrictions. It also emphasizes the need for better systems and for training that helps researchers develop good practices in data management and sharing.