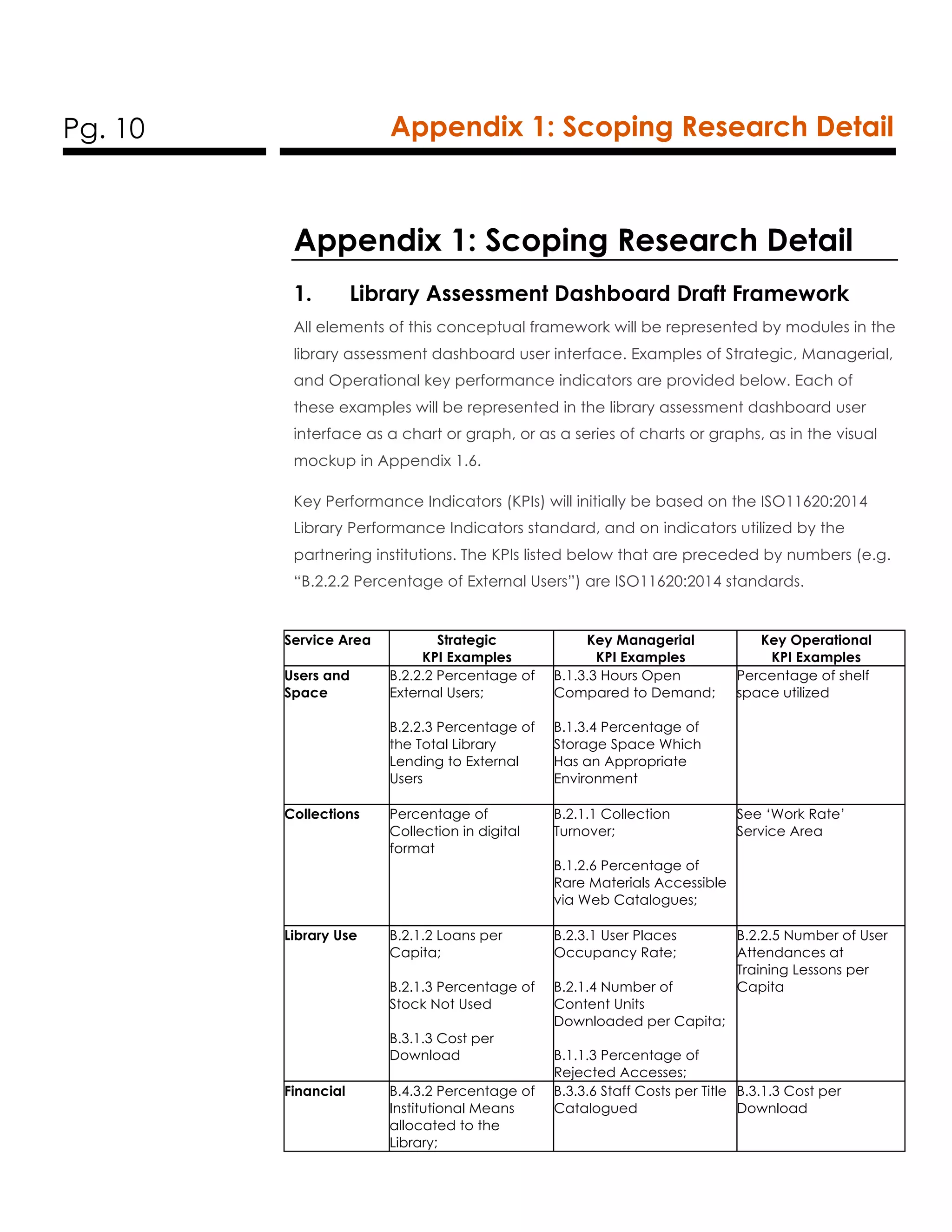

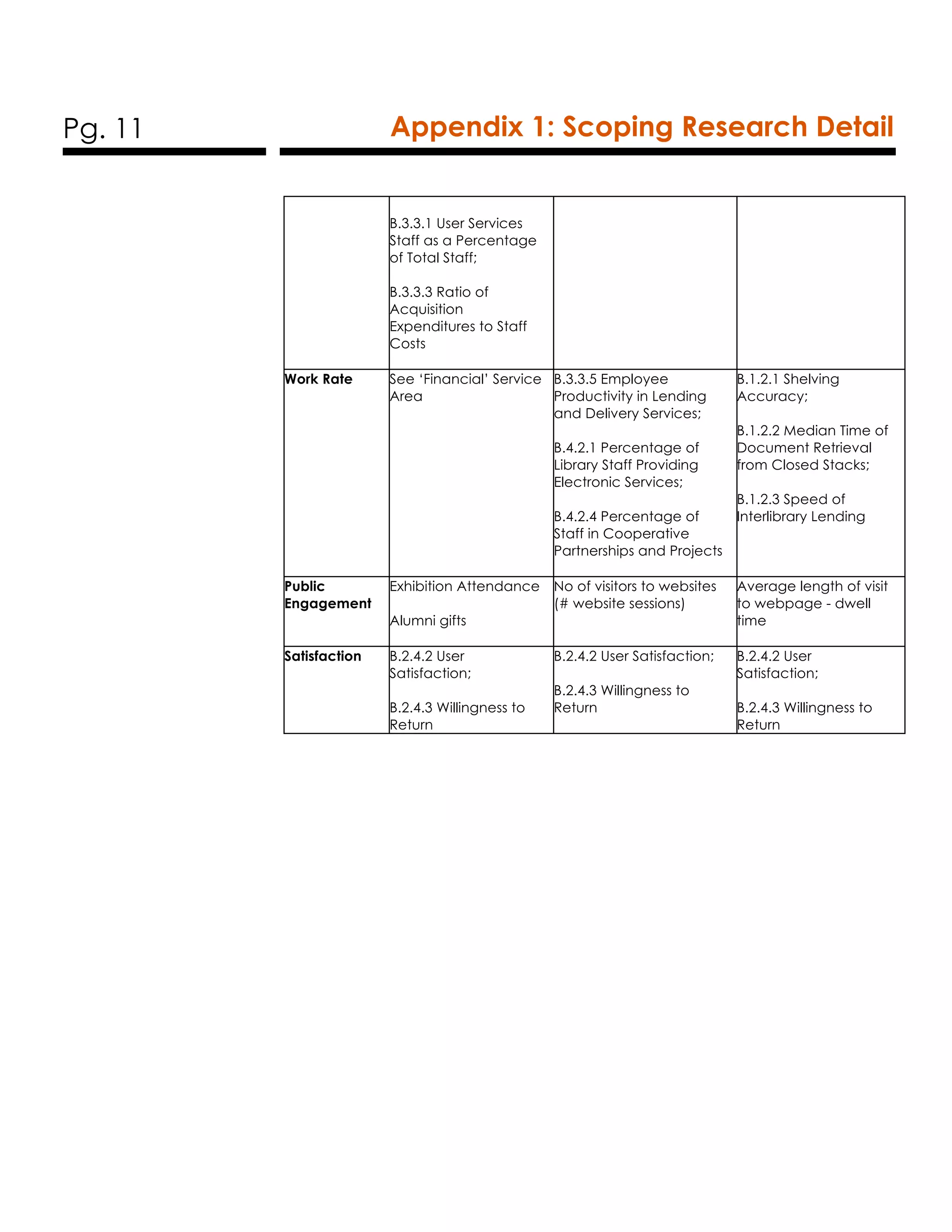

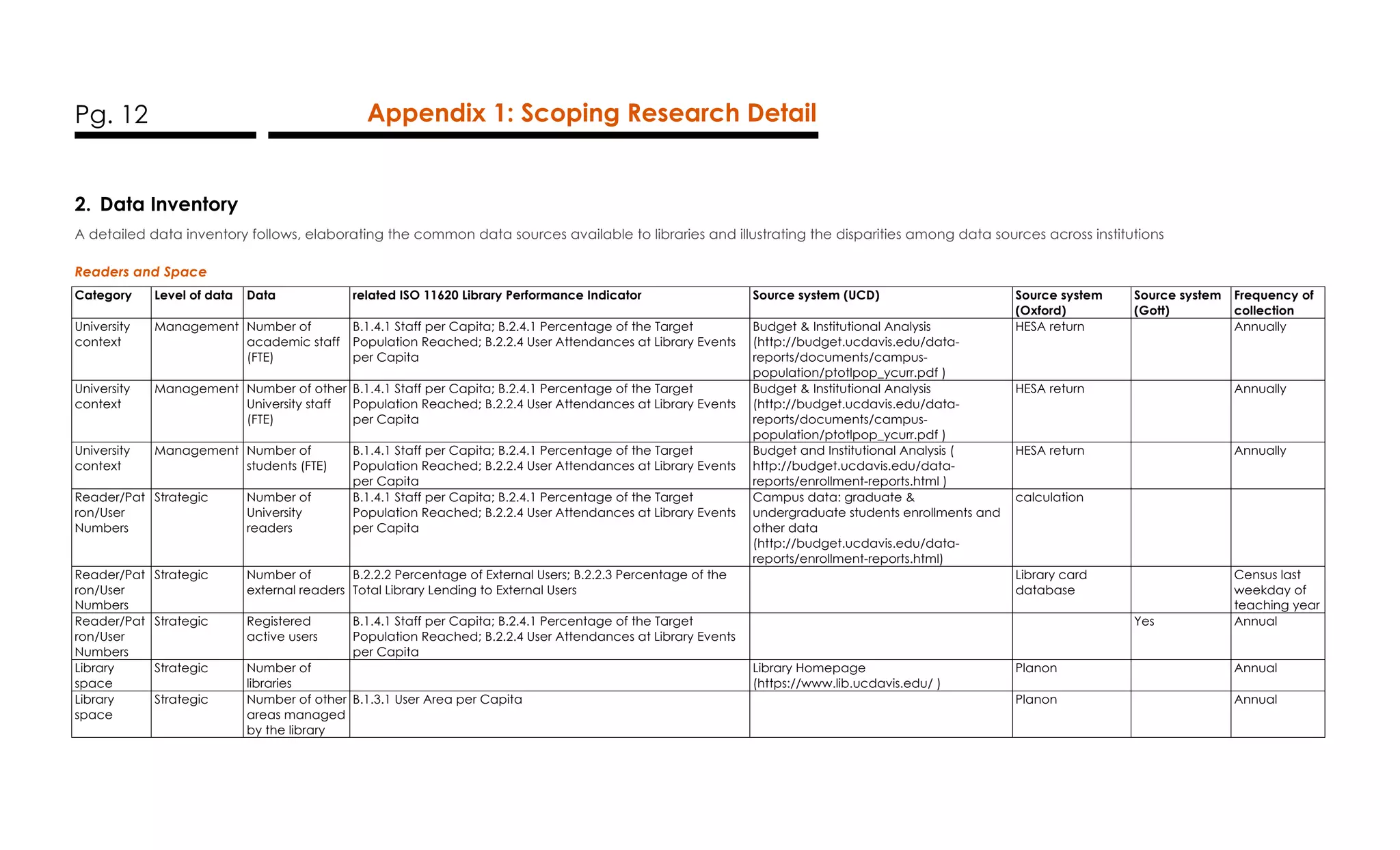

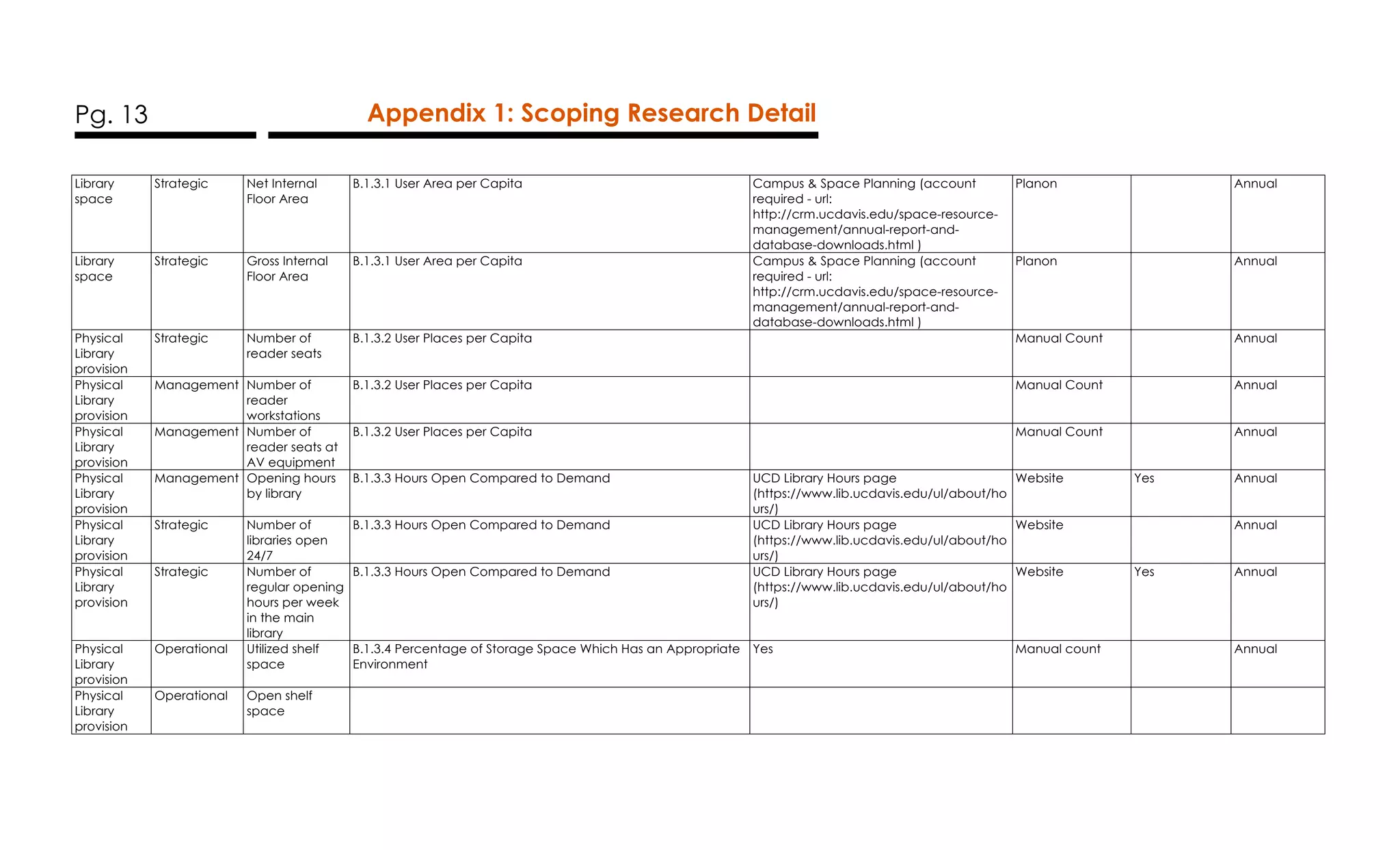

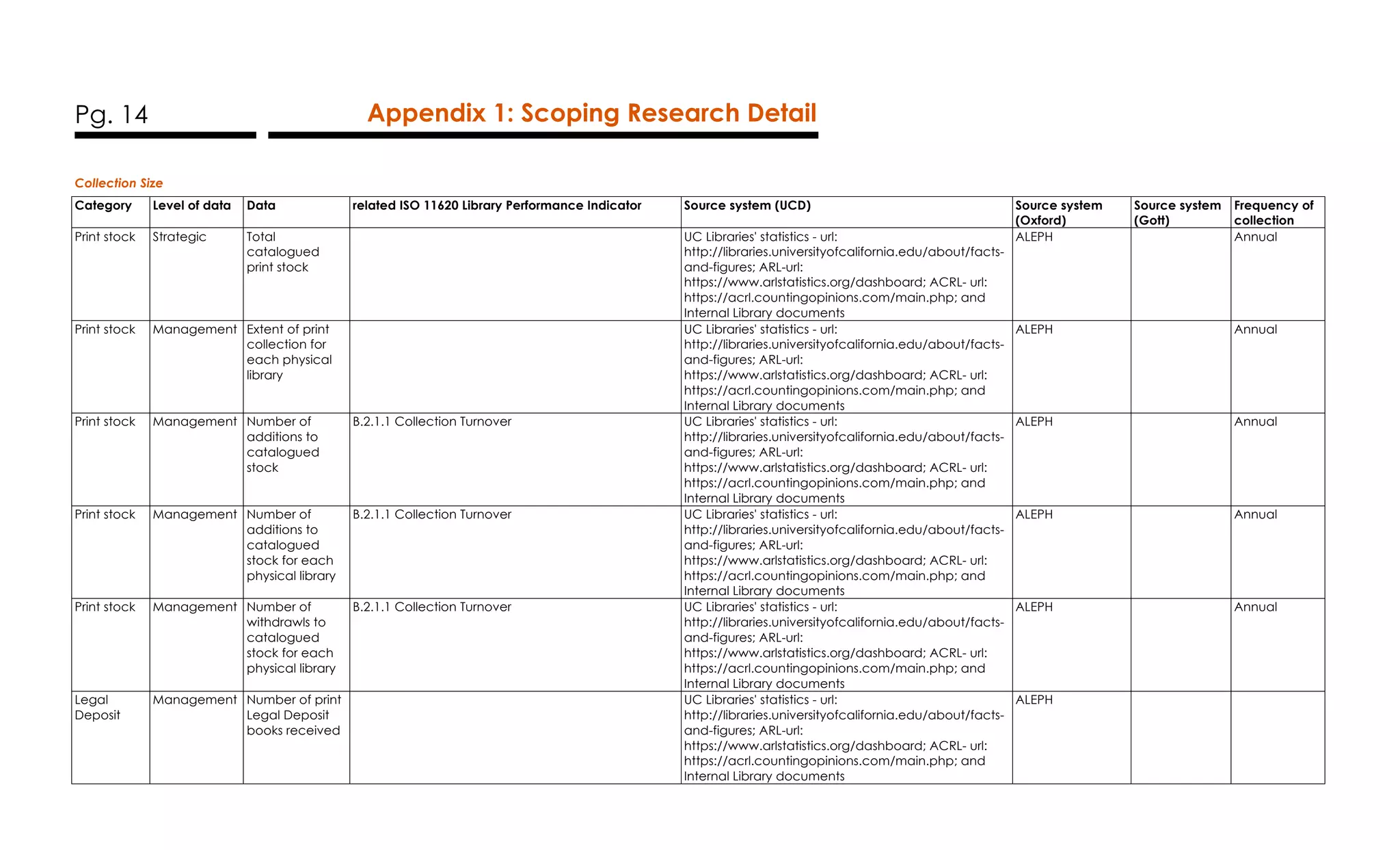

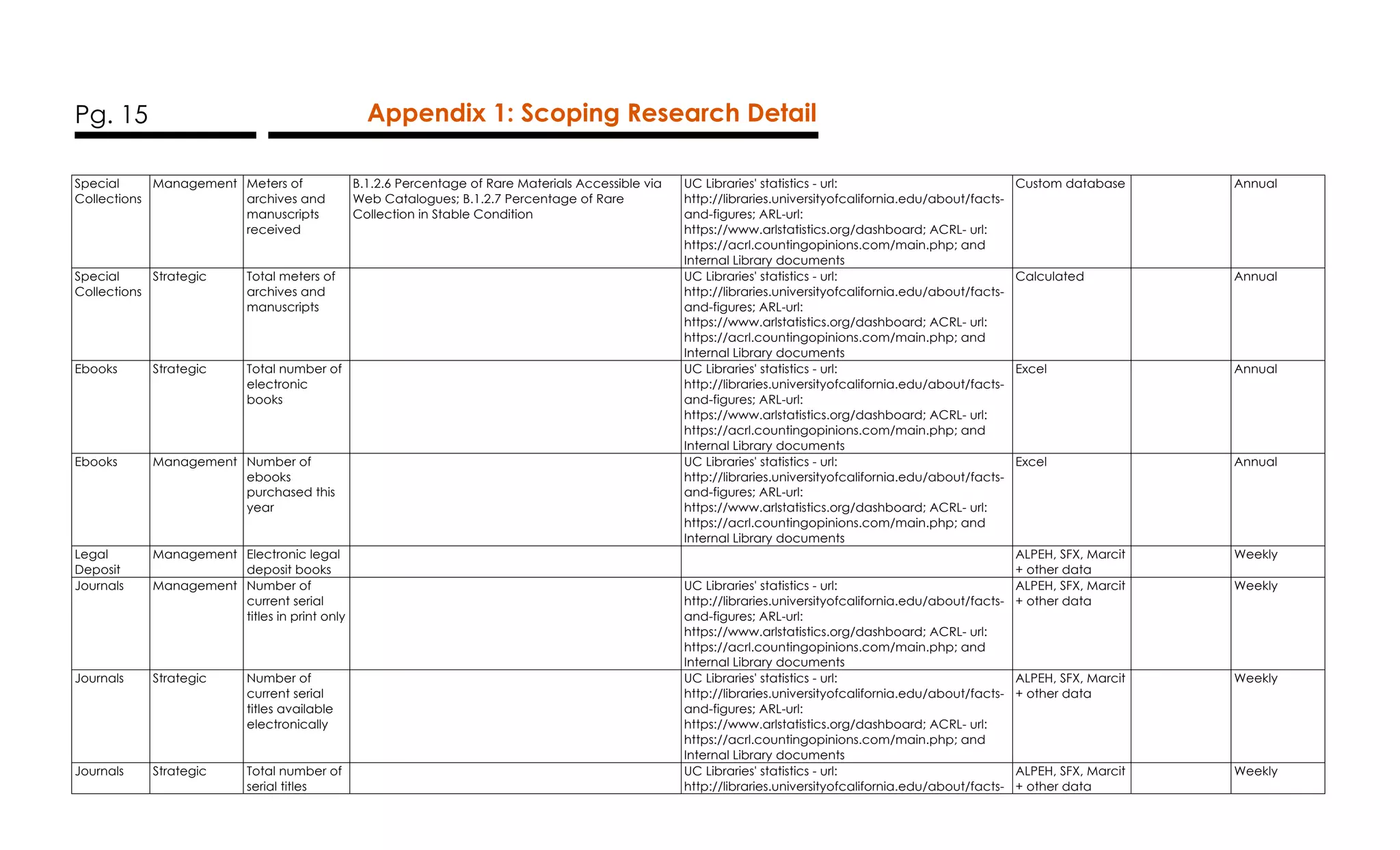

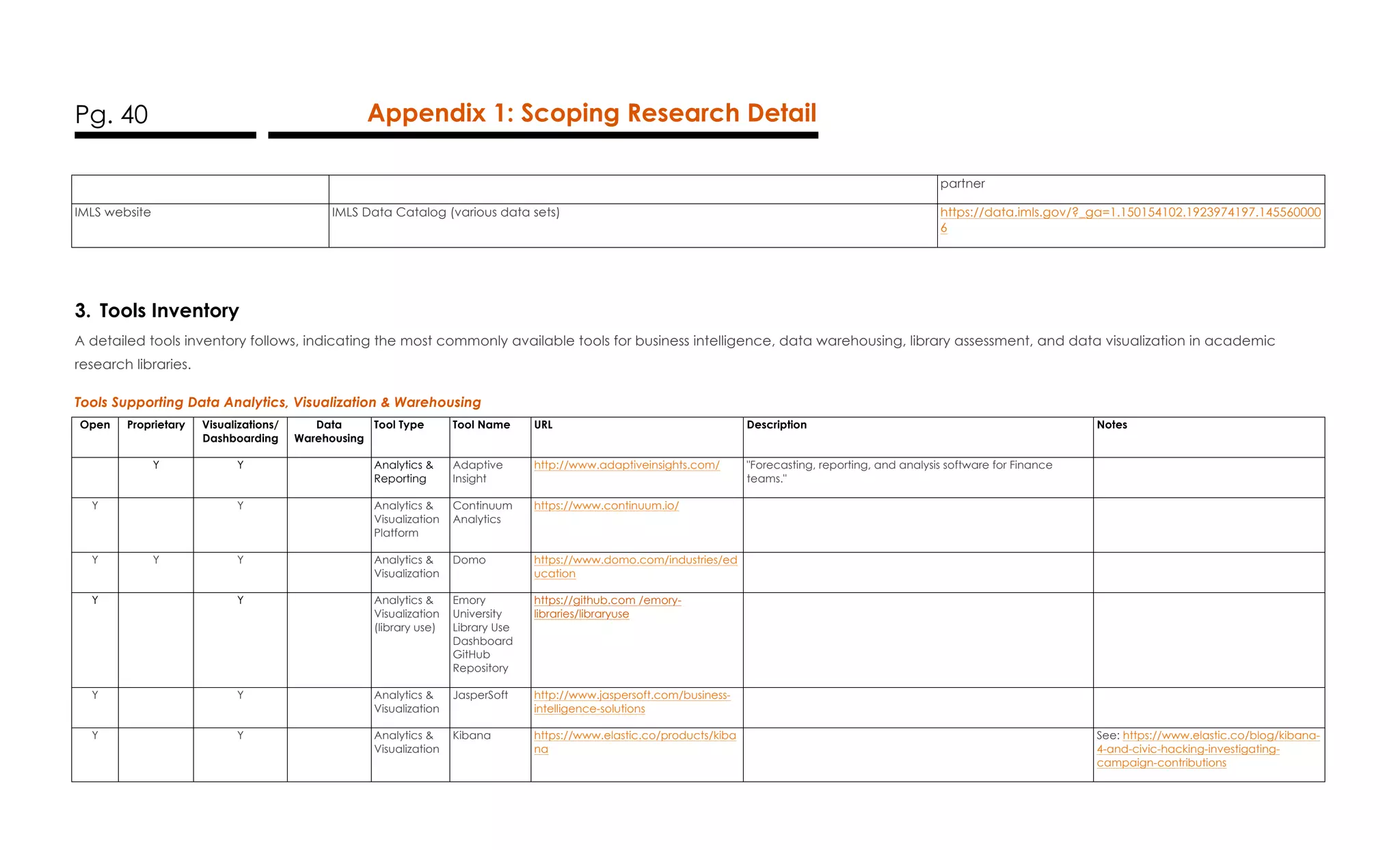

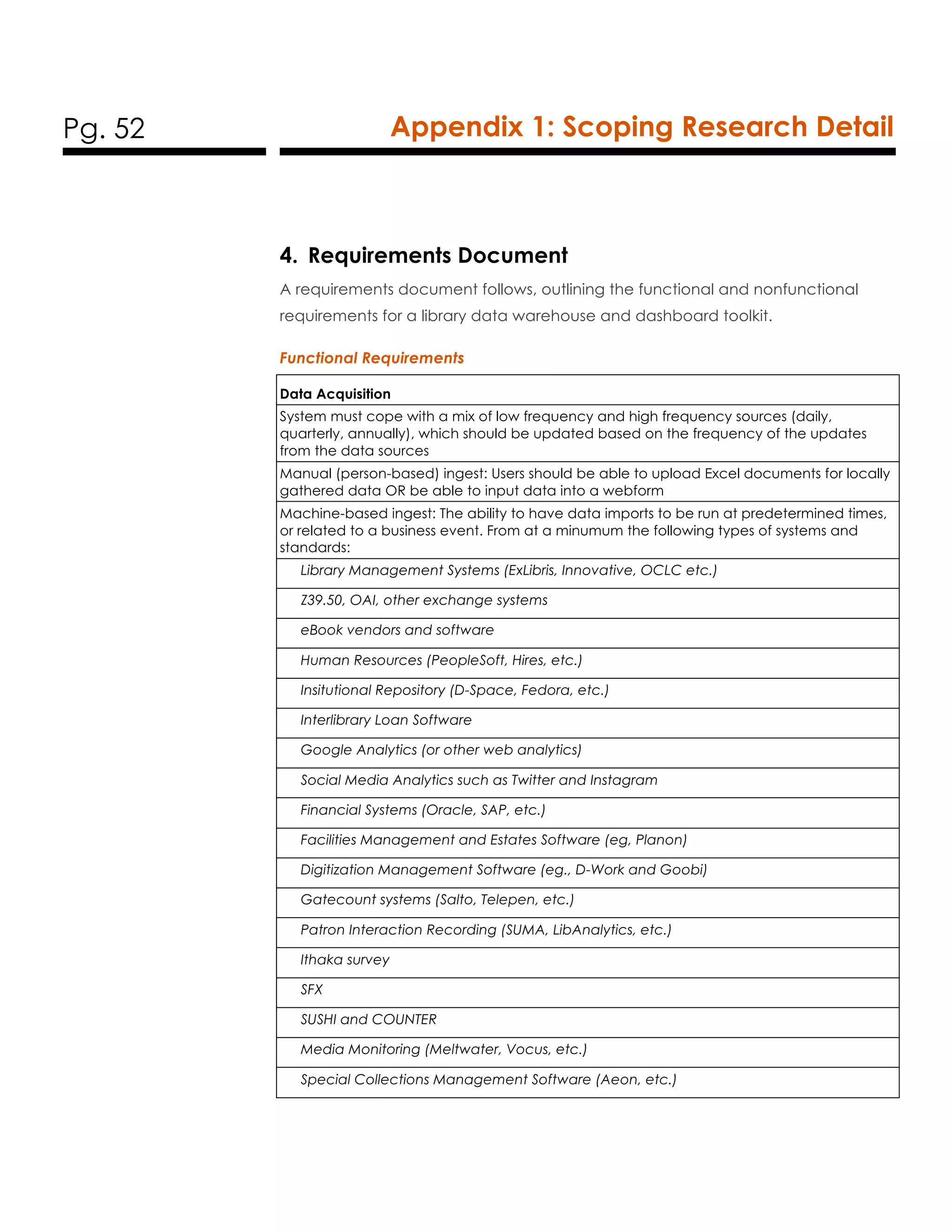

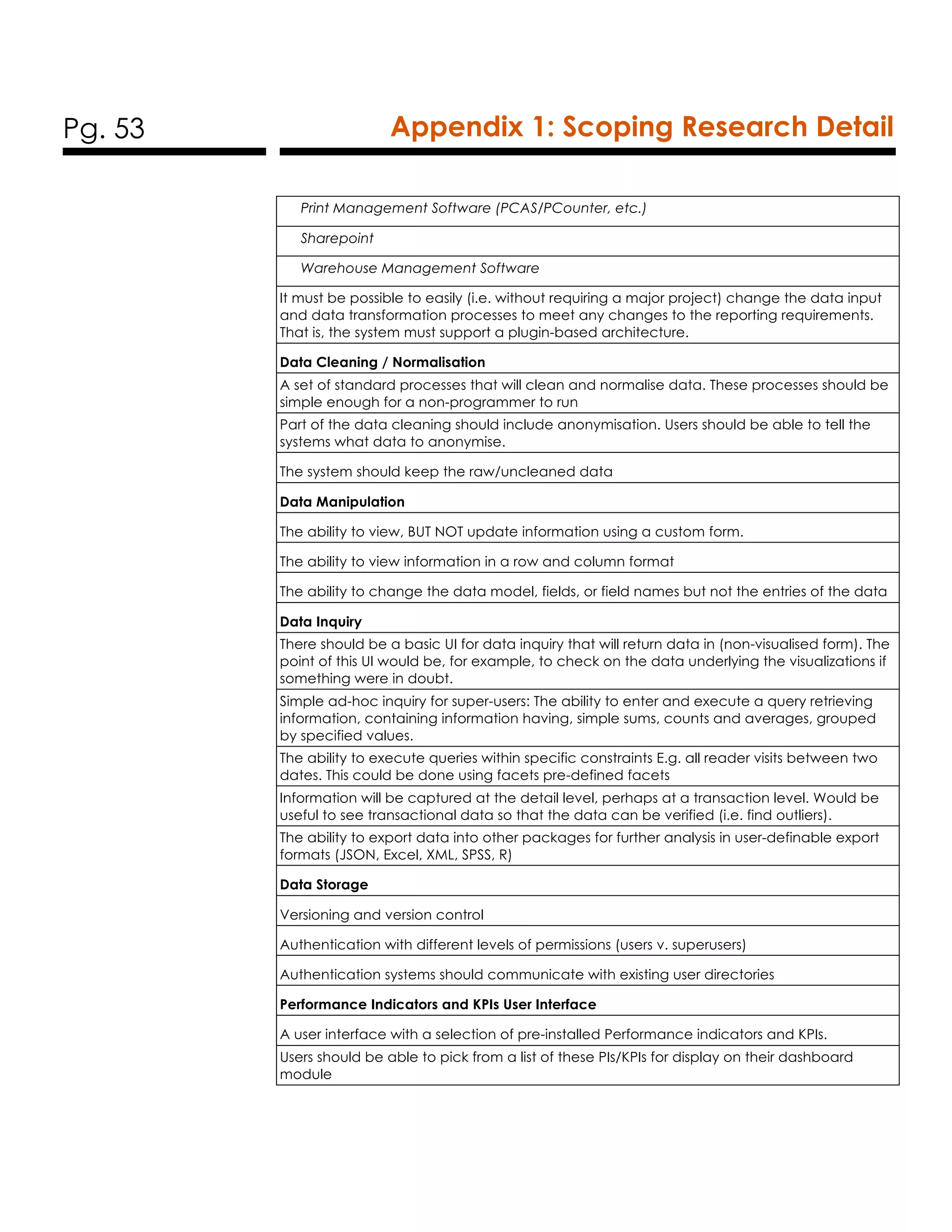

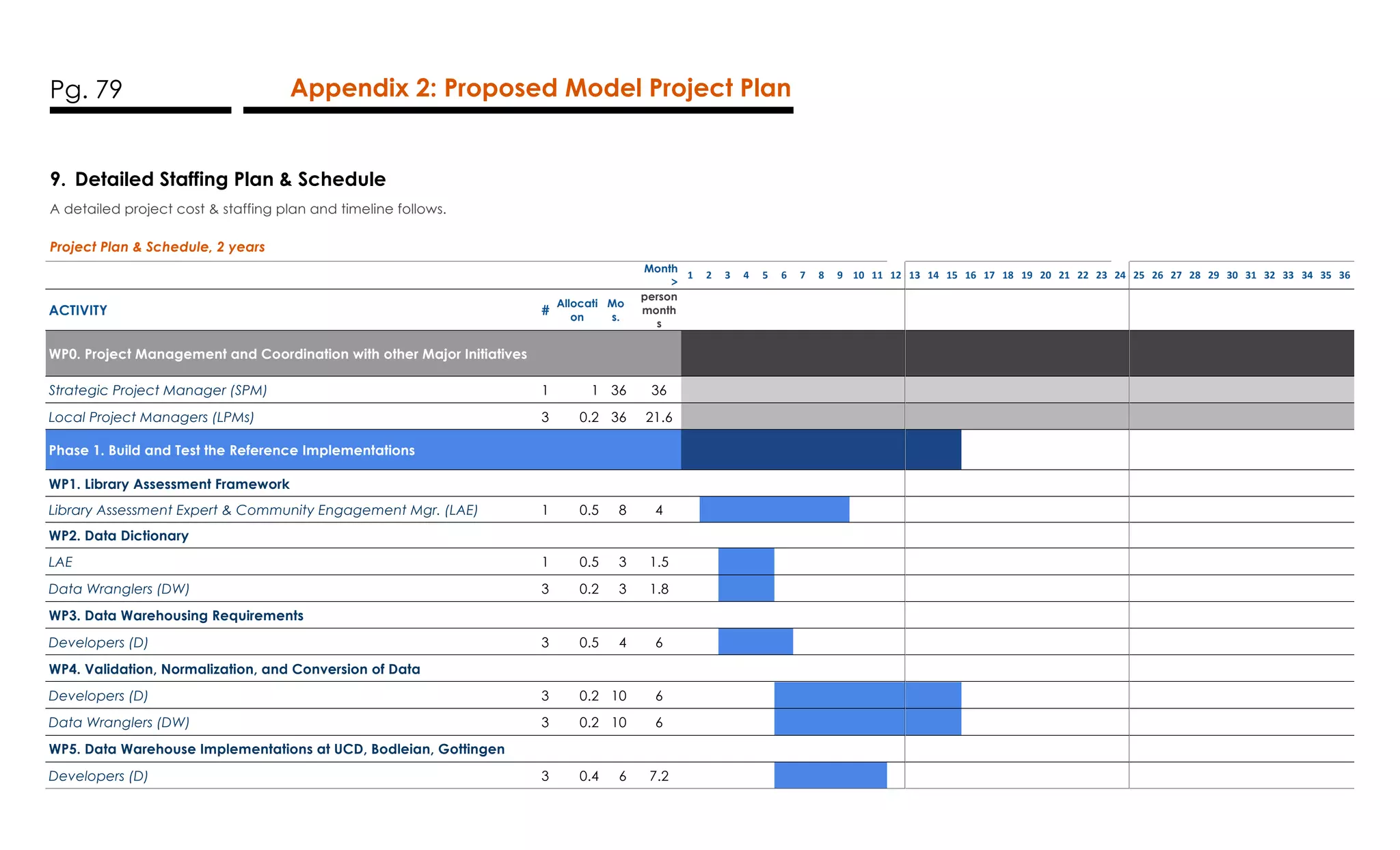

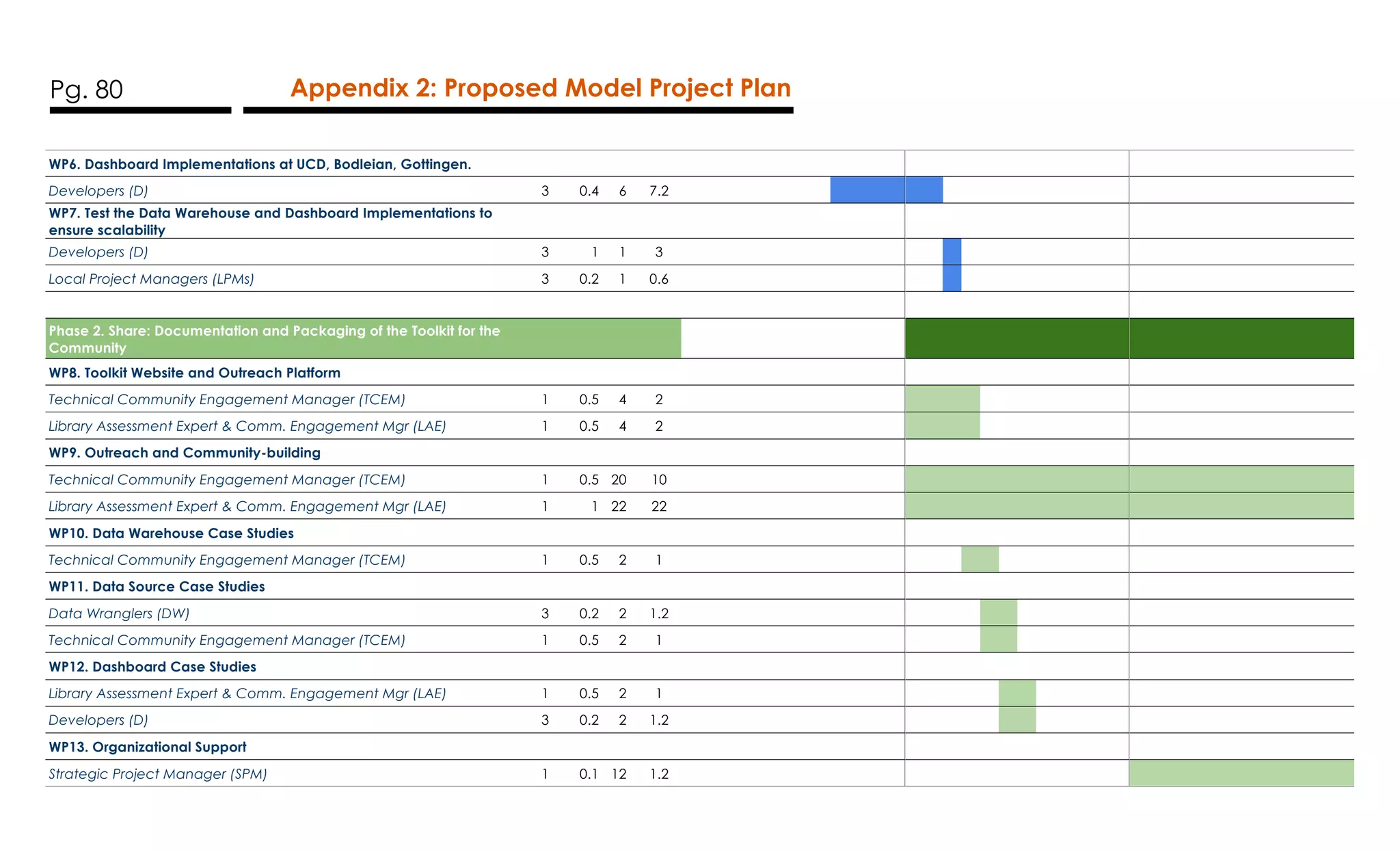

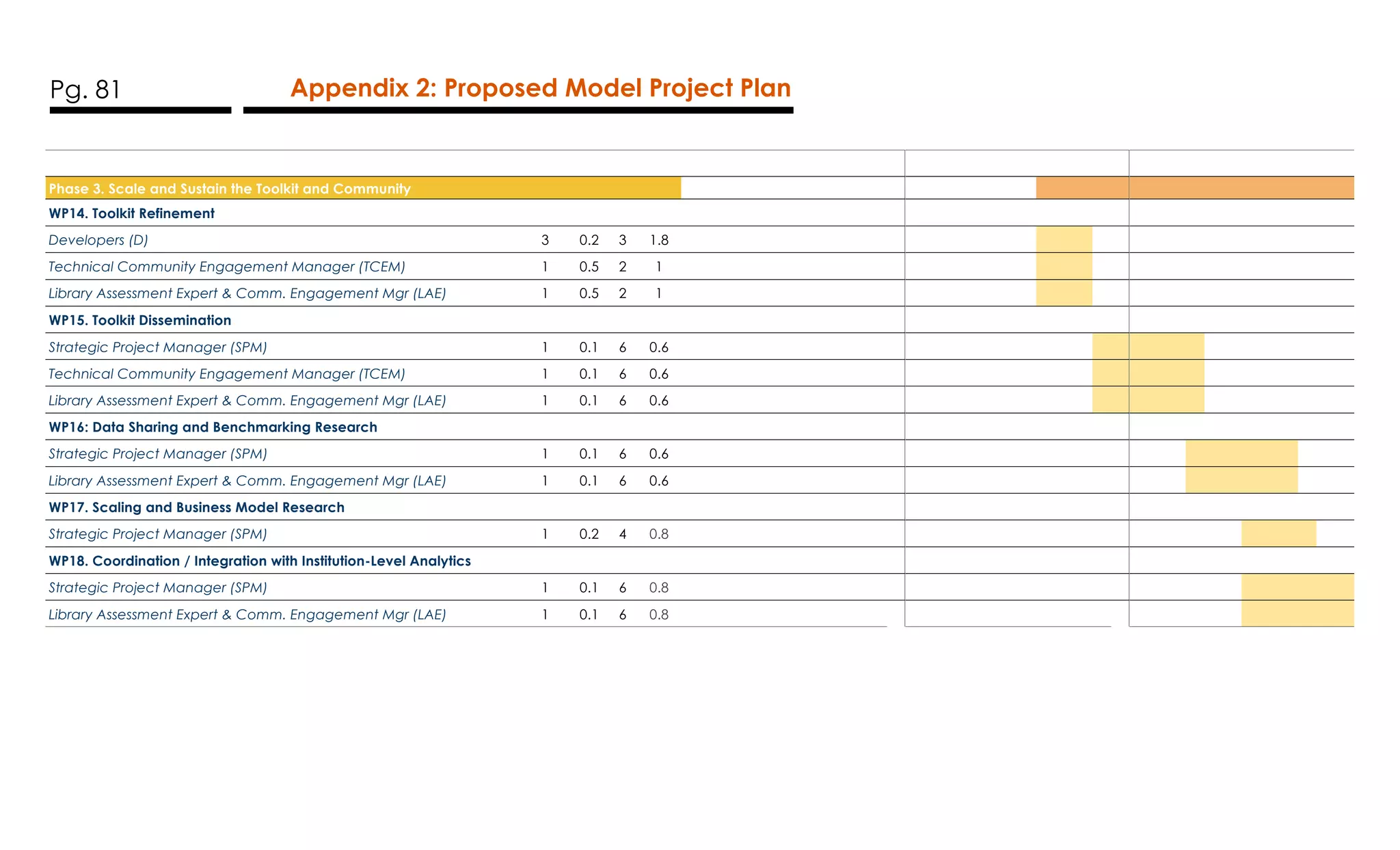

Scoping research conducted from January to June 2016 suggests a need for a comprehensive library assessment toolkit and dashboard; it found that most library managers currently approach assessment reactively. The proposed toolkit would provide key performance indicators, data visualization, and customizable features to support strategic decision-making across various types of libraries. A three-year project plan is recommended to develop the toolkit and to establish a membership consortium for sustainability and community support.