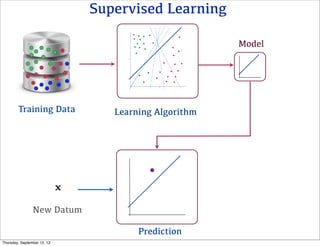

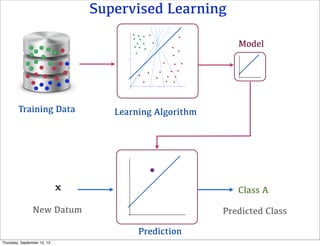

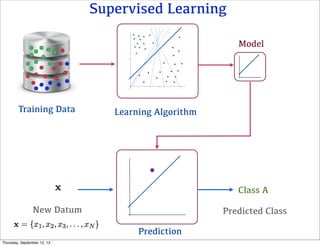

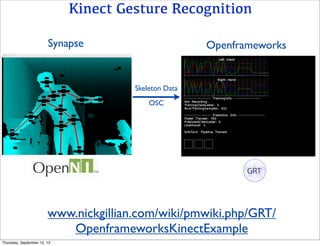

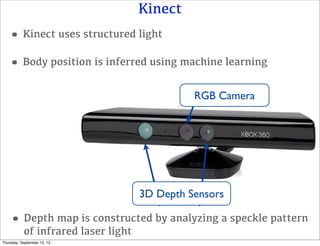

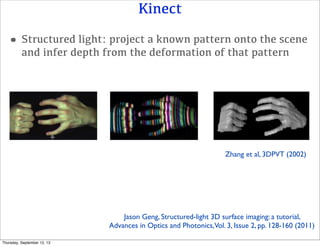

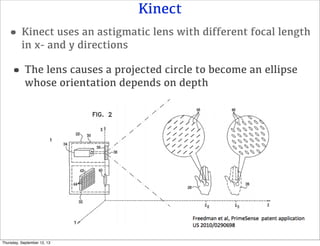

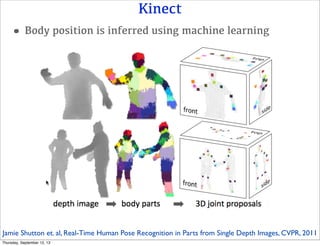

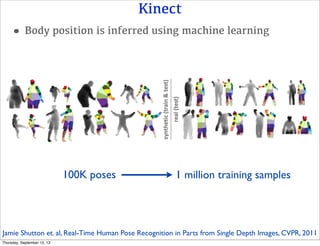

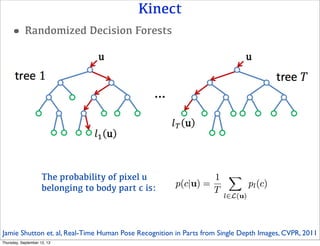

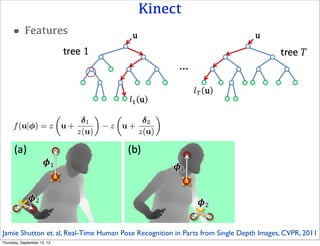

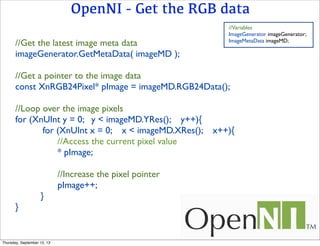

This tutorial presentation covers the Kinect and its 3D depth sensing capabilities. It describes how the Kinect uses structured light to construct depth maps and machine learning to infer body position from depth images. It also gives an overview of the OpenNI and NITE libraries for accessing Kinect sensor data, including RGB images, depth maps, and skeletal joint positions.

![OpenNI - Data](https://image.slidesharecdn.com/kinecttutorial-130916112651-phpapp01/85/Kinect-Tutorial-12-320.jpg)

OpenNI - Data

Images: RGB image, depth image, label image

Resolution: [640 480]

Depth: unsigned short

Label: unsigned short

RGB: unsigned char

Thursday, September 12, 13
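To make the data types on this slide concrete, here is a minimal sketch of how buffers of those sizes and types could be laid out and indexed. It does not use OpenNI: `Frame`, `pixelIndex`, `depthAt`, and `redAt` are hypothetical names introduced for illustration, and the row-major layout with three interleaved bytes per RGB pixel is an assumption, not something the slide states.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Frame size taken from the slide: [640 480].
constexpr std::size_t kWidth = 640;
constexpr std::size_t kHeight = 480;

// Hypothetical container (not an OpenNI type) holding one frame of each image.
struct Frame {
    std::vector<std::uint16_t> depth;  // depth image: one unsigned short per pixel
    std::vector<std::uint16_t> label;  // label image: one unsigned short per pixel
    std::vector<std::uint8_t> rgb;     // RGB image: unsigned char, assumed 3 bytes per pixel (R, G, B)

    Frame()
        : depth(kWidth * kHeight, 0),
          label(kWidth * kHeight, 0),
          rgb(kWidth * kHeight * 3, 0) {}
};

// Row-major index of pixel (x, y); the layout is an assumption.
inline std::size_t pixelIndex(std::size_t x, std::size_t y) {
    assert(x < kWidth && y < kHeight);
    return y * kWidth + x;
}

// Depth value stored at pixel (x, y).
inline std::uint16_t depthAt(const Frame& frame, std::size_t x, std::size_t y) {
    return frame.depth[pixelIndex(x, y)];
}

// Red channel at pixel (x, y), assuming interleaved R, G, B bytes.
inline std::uint8_t redAt(const Frame& frame, std::size_t x, std::size_t y) {
    return frame.rgb[pixelIndex(x, y) * 3];
}
```

With this layout, the depth and label buffers each hold 640 × 480 values, and the RGB buffer holds three times as many bytes.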

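![OpenNI - Skeleton data](https://image.slidesharecdn.com/kinecttutorial-130916112651-phpapp01/85/Kinect-Tutorial-20-320.jpg)

OpenNI - Skeleton data

    #include <XnCppWrapper.h>

    // Variables (userGenerator is assumed to be created and started elsewhere;
    // numTrackedUsers is a compile-time constant defined elsewhere)
    xn::UserGenerator userGenerator;
    XnUserID userIDs[ numTrackedUsers ];

    // GetUsers() reads numUsers as the array capacity and overwrites it
    // with the number of users found, so clamp it to the array size.
    XnUInt16 numUsers = userGenerator.GetNumberOfUsers();
    if( numUsers > numTrackedUsers ) numUsers = numTrackedUsers;
    userGenerator.GetUsers( userIDs, numUsers );

    for( int i = 0; i < numUsers; i++ ){
        if( userGenerator.GetSkeletonCap().IsTracking( userIDs[i] ) ){
            // Calibration state can be queried per user
            XnBool calibrating = userGenerator.GetSkeletonCap().IsCalibrating( userIDs[i] );
            XnBool calibrated = userGenerator.GetSkeletonCap().IsCalibrated( userIDs[i] );

            // Center of mass of the user
            XnPoint3D userCenterOfMass;
            userGenerator.GetCoM( userIDs[i], userCenterOfMass );

            // Position and orientation of a single joint (here, the head)
            XnSkeletonJointTransformation jointData;
            if( userGenerator.GetSkeletonCap().IsJointAvailable( XN_SKEL_HEAD ) ){
                userGenerator.GetSkeletonCap().GetSkeletonJoint( userIDs[i], XN_SKEL_HEAD, jointData );
            }
        }
    }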
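Once a joint has been read, a common next step is to check how reliable it is before using its position. The following is a minimal sketch of that idea, not part of the slides: `JointSample` is a hypothetical stand-in struct (not an OpenNI type) that holds a 3D position and a confidence value, and the 0.5 threshold is an arbitrary assumption.

```cpp
#include <cmath>

// Hypothetical stand-in for one joint reading: 3D position plus a confidence score.
struct JointSample {
    float x;
    float y;
    float z;
    float confidence;  // assumed to lie in [0, 1]
};

// True if the joint's confidence meets the (assumed) threshold.
inline bool isReliable(const JointSample& joint, float minConfidence = 0.5f) {
    return joint.confidence >= minConfidence;
}

// Euclidean distance between two joints, in the same units as their positions.
inline float jointDistance(const JointSample& a, const JointSample& b) {
    const float dx = a.x - b.x;
    const float dy = a.y - b.y;
    const float dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}
```

In real code the position and confidence would be copied out of the joint data returned by the skeleton capability; the stand-in struct only keeps the sketch independent of the library.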