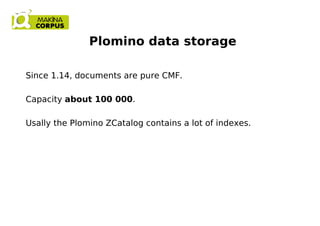

This document discusses using the souper.plone library to store large amounts of non-content data in Plone. Souper stores records containing any picklable data in BTrees in the ZODB, which scales to far more entries than keeping each item as a regular Plone content object. Records can be indexed and then queried. The document demonstrates importing hundreds of thousands to millions of records from Wikipedia into souper with good performance. Souper is an alternative to running a separate database for large structured datasets in Plone.
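To make "any picklable data" concrete, here is a minimal sketch that uses only the standard `pickle` module, the serialization the ZODB relies on. The plain dict `attrs` and the helper `is_picklable` are illustrative assumptions, not part of souper; the dict merely stands in for the attributes of a record.

```python
import pickle

# Hypothetical stand-in for a record's attributes: a plain dict.
attrs = {
    "user": "user1",
    "text": "foo bar baz",
    "keywords": ["1", "2", "ü"],
}

# Round-trip through pickle to confirm the values survive serialization.
restored = pickle.loads(pickle.dumps(attrs, protocol=pickle.HIGHEST_PROTOCOL))
assert restored == attrs


def is_picklable(value):
    """Return True if value can be serialized with pickle."""
    try:
        pickle.dumps(value)
    except (pickle.PicklingError, TypeError, AttributeError):
        return False
    return True
```

A check like this can be useful before a bulk import, since values such as lambdas or open file handles cannot be pickled and would fail at storage time.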

![Add a record

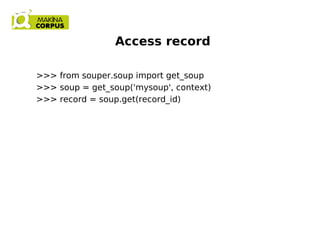

>>> soup = get_soup('mysoup', context)

>>> record = Record()

>>> record.attrs['user'] = 'user1'

>>> record.attrs['text'] = u'foo bar baz'

>>> record.attrs['keywords'] = [u'1', u'2', u'ü']

>>> record_id = soup.add(record)](https://image.slidesharecdn.com/importingwikipediainplone-131006122721-phpapp02/85/Importing-wikipedia-in-Plone-12-320.jpg)

Record in record

    >>> record['homeaddress'] = Record()
    >>> record['homeaddress'].attrs['zip'] = '6020'
    >>> record['homeaddress'].attrs['town'] = 'Innsbruck'
    >>> record['homeaddress'].attrs['country'] = 'Austria'

Query

    >>> from repoze.catalog.query import Eq, Contains
    >>> [r for r in soup.query(Eq('user', 'user1') &
    ...                        Contains('text', 'foo'))]
    [<Record object 'None' at ...>]

Or, using the CQE format:

    >>> [r for r in soup.query("user == 'user1' and 'foo' in text")]
    [<Record object 'None' at ...>]
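For readers unfamiliar with the query operators, the condition in the query above can be approximated in plain Python. This is only a sketch of what the condition means, not of how the catalog evaluates it: the catalog answers from its indexes rather than scanning records, and text matching is approximated here by a whitespace split. The `records` list and the `matches` helper are hypothetical stand-ins, not souper objects.

```python
# Hypothetical stand-ins for stored records: plain dicts, not souper Records.
records = [
    {"user": "user1", "text": "foo bar baz"},
    {"user": "user1", "text": "spam eggs"},
    {"user": "user2", "text": "foo only"},
]


def matches(record, user, term):
    """True if the record belongs to user and its text contains term as a word."""
    return record["user"] == user and term in record["text"].split()


# Same condition as Eq('user', 'user1') & Contains('text', 'foo'),
# applied by scanning every record.
hits = [r for r in records if matches(r, "user1", "foo")]
```

A linear scan like this is what the indexed query avoids, which matters once the soup holds hundreds of thousands of records.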