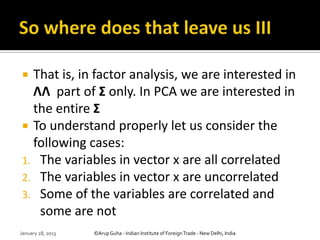

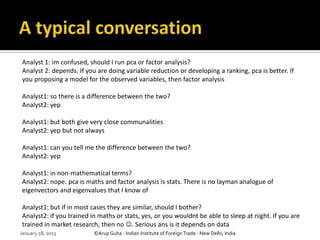

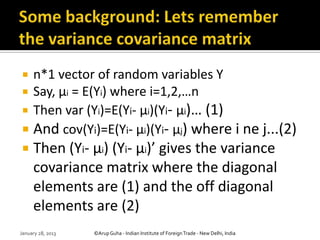

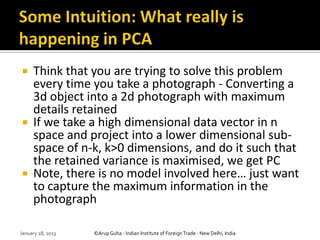

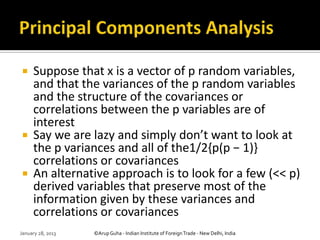

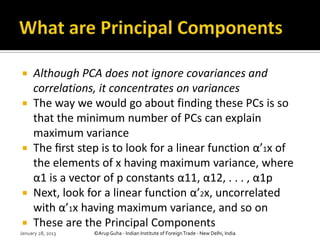

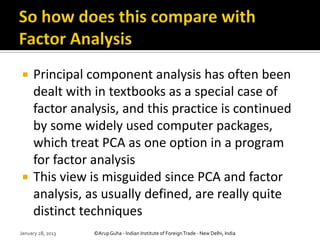

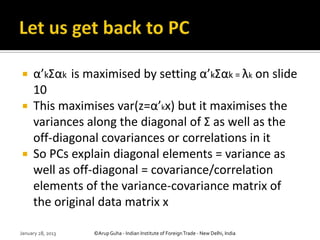

The document discusses the differences between Principal Component Analysis (PCA) and Factor Analysis, emphasizing that while both are used for dimension reduction, PCA focuses on maximizing variance without a model, whereas Factor Analysis aims to uncover common latent factors and involves specific modeling of the data. It highlights that PCA explains variance and covariance while Factor Analysis targets common variance only. Various mathematical aspects of both techniques are presented, illustrating their distinct nature despite occasional similar outcomes in specific situations.

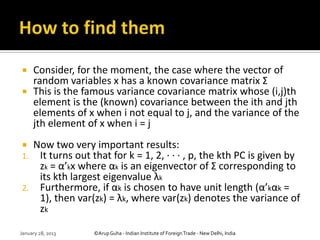

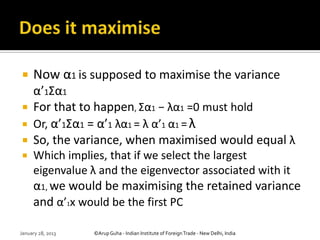

![Slide 10. January 28, 2013 ©Arup Guha - Indian Institute of Foreign Trade - New Delhi, India](https://image.slidesharecdn.com/howprincipalcomponentsanalysisisdifferentfromfactor-130127030052-phpapp01/85/How-principal-components-analysis-is-different-from-factor-10-320.jpg)

To derive the form of the PCs, consider first $\alpha_1' x$; the vector $\alpha_1$ maximizes

$$\operatorname{var}[\alpha_1' x] = \alpha_1' \Sigma \alpha_1$$

It is clear that the maximum will not be achieved for finite $\alpha_1$, so a normalization constraint must be imposed. The constraint used in the derivation is $\alpha_1' \alpha_1 = 1$, that is, the sum of squares of the elements of $\alpha_1$ equals 1.
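The slides that carry out this constrained maximization are not reproduced here. A common way to do it is with a Lagrange multiplier; the sketch below assumes that approach, and the multiplier symbol $\lambda$ and the identity matrix $I_p$ are notation introduced for illustration.

$$
\begin{aligned}
&\text{maximize over } \alpha_1: \quad \alpha_1' \Sigma \alpha_1 - \lambda\,(\alpha_1' \alpha_1 - 1) \\
&\text{differentiate and set to zero:} \quad 2\Sigma\alpha_1 - 2\lambda\alpha_1 = 0 \;\Longleftrightarrow\; (\Sigma - \lambda I_p)\,\alpha_1 = 0 \\
&\text{premultiply } \Sigma\alpha_1 = \lambda\alpha_1 \text{ by } \alpha_1': \quad \alpha_1' \Sigma \alpha_1 = \lambda\,\alpha_1' \alpha_1 = \lambda
\end{aligned}
$$

Under these assumptions $\lambda$ is an eigenvalue of $\Sigma$ with eigenvector $\alpha_1$, and the quantity being maximized equals $\lambda$ itself, so the maximum is reached at the largest eigenvalue $\lambda_1$.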

![Slide 13](https://image.slidesharecdn.com/howprincipalcomponentsanalysisisdifferentfromfactor-130127030052-phpapp01/85/How-principal-components-analysis-is-different-from-factor-13-320.jpg)

The second PC, $\alpha_2' x$, maximizes $\alpha_2' \Sigma \alpha_2$ subject to being uncorrelated with $\alpha_1' x$, or equivalently subject to $\operatorname{cov}[\alpha_1' x, \alpha_2' x] = 0$, where $\operatorname{cov}(x, y)$ denotes the covariance between the random variables $x$ and $y$.

Solving, we once again come down to maximising $\lambda$, but it cannot be equal to the largest eigenvalue since that is already taken by the first PC. So $\lambda = \lambda_2$, the second largest eigenvalue of $\Sigma$. And so on for the remaining PCs.
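The "solving" step for the second PC can be sketched with two Lagrange multipliers. The multipliers $\lambda$ and $\phi$, the unit-length constraint $\alpha_2' \alpha_2 = 1$, and the assumption $\lambda_1 > 0$ come from the usual textbook treatment rather than from the slide. Because $\Sigma\alpha_1 = \lambda_1\alpha_1$, the zero-covariance condition $\alpha_1' \Sigma \alpha_2 = \lambda_1 \alpha_1' \alpha_2 = 0$ can be written as $\alpha_1' \alpha_2 = 0$.

$$
\begin{aligned}
&\text{maximize over } \alpha_2: \quad \alpha_2' \Sigma \alpha_2 - \lambda\,(\alpha_2' \alpha_2 - 1) - \phi\,\alpha_2' \alpha_1 \\
&\text{differentiate and set to zero:} \quad 2\Sigma\alpha_2 - 2\lambda\alpha_2 - \phi\,\alpha_1 = 0 \\
&\text{premultiply by } \alpha_1': \quad 2\,\alpha_1' \Sigma \alpha_2 - 2\lambda\,\alpha_1' \alpha_2 - \phi\,\alpha_1' \alpha_1 = 0 \;\Longrightarrow\; \phi = 0 \\
&\text{hence:} \quad \Sigma\alpha_2 = \lambda\alpha_2, \qquad \alpha_2' \Sigma \alpha_2 = \lambda
\end{aligned}
$$

So $\lambda$ is again an eigenvalue of $\Sigma$. When the eigenvalues are distinct, the eigenvector for $\lambda_1$ is $\alpha_1$ itself, which violates $\alpha_1' \alpha_2 = 0$, so the best remaining choice is $\lambda_2$.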

![Slide 14](https://image.slidesharecdn.com/howprincipalcomponentsanalysisisdifferentfromfactor-130127030052-phpapp01/85/How-principal-components-analysis-is-different-from-factor-14-320.jpg)

It can be shown that for the first, second, third, fourth, ..., $p$th PCs, the vectors of coefficients $\alpha_1, \alpha_2, \alpha_3, \alpha_4, \ldots, \alpha_p$ are the eigenvectors of $\Sigma$ corresponding to $\lambda_1, \lambda_2, \lambda_3, \lambda_4, \ldots, \lambda_p$, the largest, second largest, third largest, fourth largest, ..., and smallest eigenvalues, respectively.

Also, $\operatorname{var}[\alpha_k' x] = \lambda_k$ for $k = 1, 2, \ldots, p$.
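The eigenvector result can be checked numerically. The following is a minimal sketch using NumPy; the 3×3 covariance matrix `Sigma` is a made-up example, not data from the slides, and the variable names are illustrative.

```python
import numpy as np

# Hypothetical covariance matrix (symmetric, positive definite), for illustration only.
Sigma = np.array([
    [4.0, 2.0, 0.5],
    [2.0, 3.0, 1.0],
    [0.5, 1.0, 2.0],
])

# eigh is meant for symmetric matrices and returns eigenvalues in ascending
# order, so reorder to get lambda_1 >= lambda_2 >= ... >= lambda_p.
eigvals, eigvecs = np.linalg.eigh(Sigma)
order = np.argsort(eigvals)[::-1]
lambdas = eigvals[order]
alphas = eigvecs[:, order]  # column k holds the coefficient vector of PC k+1

# var[alpha_k' x] = alpha_k' Sigma alpha_k, computed for every k.
pc_variances = np.array(
    [alphas[:, k] @ Sigma @ alphas[:, k] for k in range(Sigma.shape[0])]
)

# Covariance matrix of all PCs at once: diagonal if the PCs are uncorrelated.
pc_cov = alphas.T @ Sigma @ alphas

print("eigenvalues :", lambdas)
print("PC variances:", pc_variances)
```

The two printed vectors should agree, and `pc_cov` should be diagonal up to rounding, which reflects both $\operatorname{var}[\alpha_k' x] = \lambda_k$ and the zero covariance between different PCs.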

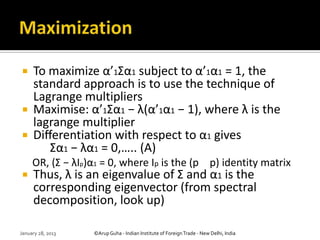

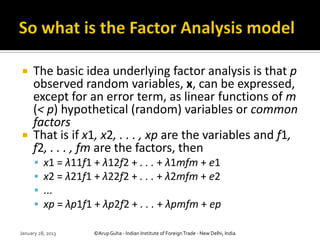

![Slide 23](https://image.slidesharecdn.com/howprincipalcomponentsanalysisisdifferentfromfactor-130127030052-phpapp01/85/How-principal-components-analysis-is-different-from-factor-23-320.jpg)

Recall the factor analysis model in matrix form:

$$x = \Lambda f + e$$

Along with the assumptions $E[ee'] = \Psi$ (diagonal), $E[fe'] = 0$ (a matrix of zeros), and $E[ff'] = I_m$ (an identity matrix), the above model implies that the variance-covariance matrix has the form

$$\Sigma = \Lambda \Lambda' + \Psi$$
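The implied covariance structure can be illustrated by simulation. This is a rough sketch, not part of the original slides: the loadings `Lambda`, the unique variances in `Psi`, the sample size, and the use of normally distributed factors and errors are all assumptions made for the example (the model itself only constrains second moments).

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical loadings for p = 4 observed variables and m = 2 common factors.
Lambda = np.array([
    [0.9, 0.0],
    [0.8, 0.3],
    [0.2, 0.7],
    [0.0, 0.6],
])
# Hypothetical diagonal matrix of unique (error) variances.
Psi = np.diag([0.19, 0.27, 0.47, 0.64])

# Covariance matrix implied by the model: Sigma = Lambda Lambda' + Psi.
Sigma_model = Lambda @ Lambda.T + Psi

# Simulate x = Lambda f + e with E[ff'] = I_m, E[ee'] = Psi, and f independent of e.
n = 200_000
f = rng.standard_normal((n, 2))
e = rng.standard_normal((n, 4)) * np.sqrt(np.diag(Psi))
x = f @ Lambda.T + e  # each row is one simulated observation of x

# Compare the sample covariance of x with the model-implied covariance.
Sigma_sample = np.cov(x, rowvar=False)
max_abs_diff = np.max(np.abs(Sigma_sample - Sigma_model))
print("largest absolute difference:", max_abs_diff)
```

Because $\Psi$ is diagonal, the off-diagonal entries of `Sigma_model` come entirely from $\Lambda \Lambda'$: in this model the common factors account for all covariance between variables, while $\Psi$ only adds to the variances.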