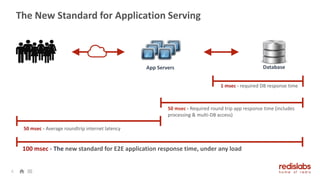

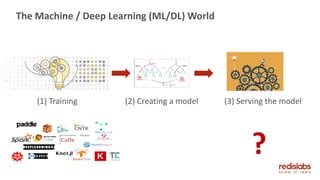

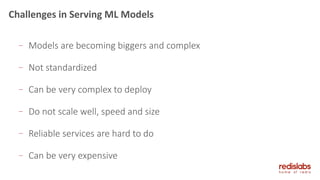

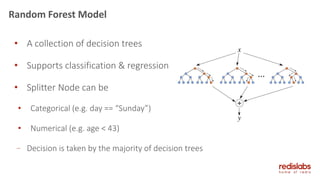

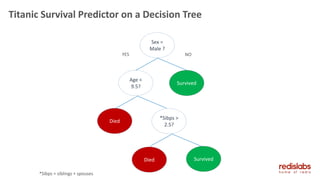

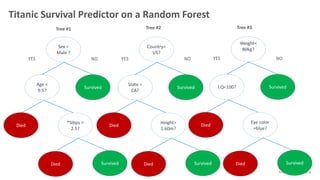

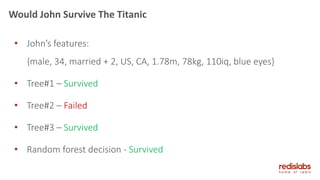

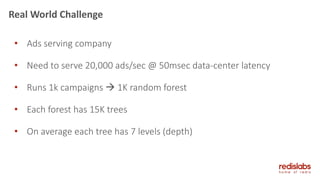

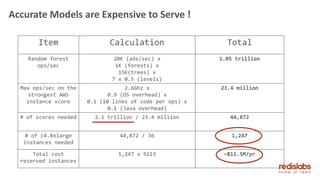

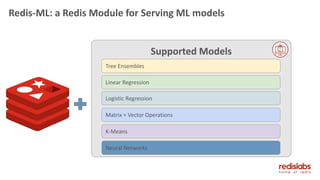

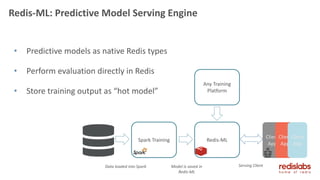

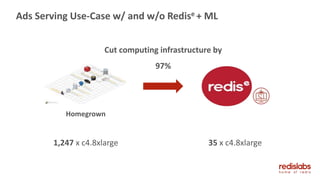

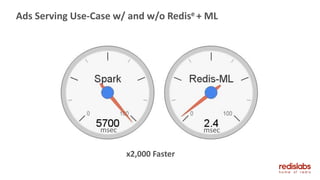

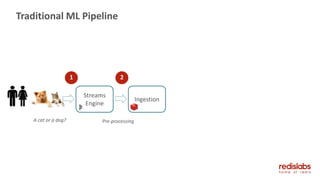

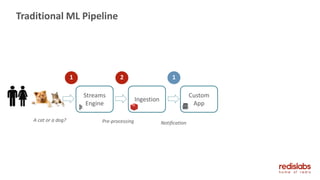

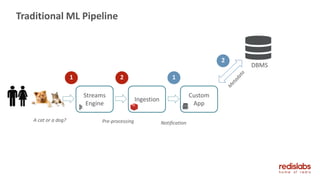

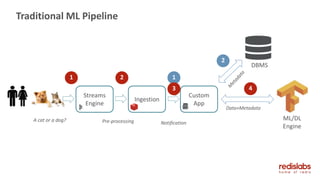

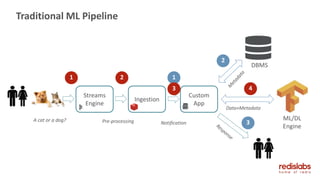

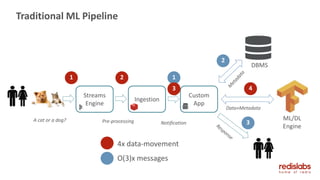

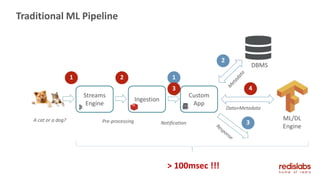

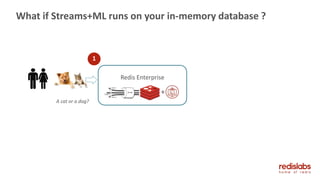

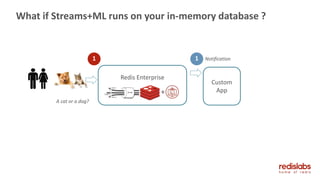

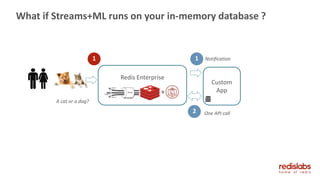

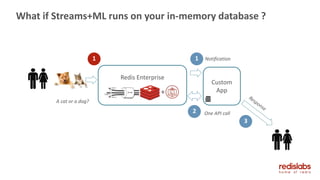

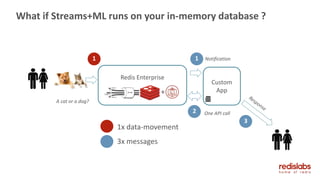

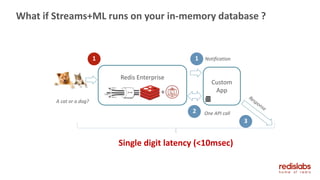

This document discusses how in-memory technology can affect machine learning and deep learning services, using Redis Labs as a case study. It describes how Redis can provide a simple, extensible, high-performance platform for serving machine learning models. Serving complex models at scale is challenging because of their size, the lack of standardization, and high costs. The Redis-ML module allows predictive models to be stored and evaluated directly in Redis; in an ad-serving use case, this reduced infrastructure needs by 97% compared with a homegrown solution. Co-locating streams, data, and machine learning engines in an in-memory database such as Redis can reduce data movement, message traffic, and latency relative to traditional machine learning pipelines.
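
The co-location argument can be illustrated with a small sketch. The code below is a toy, in-process stand-in for an in-memory store, not the Redis or Redis-ML API: the class `ToyInMemoryStore`, its methods, the linear model, and the key names are all assumptions made up for this illustration. It only counts simulated client requests to show why evaluating a model next to the data needs fewer round trips than fetching the model and features into the client first.

```python
from dataclasses import dataclass, field


@dataclass
class ToyInMemoryStore:
    """Hypothetical stand-in for an in-memory database (not the Redis-ML API)."""
    models: dict = field(default_factory=dict)
    features: dict = field(default_factory=dict)
    round_trips: int = 0  # simulated client-to-store requests

    def set_model(self, key, intercept, coefficients):
        self.round_trips += 1
        self.models[key] = (intercept, list(coefficients))

    def set_features(self, key, values):
        self.round_trips += 1
        self.features[key] = list(values)

    def get_model(self, key):
        self.round_trips += 1
        return self.models[key]

    def get_features(self, key):
        self.round_trips += 1
        return self.features[key]

    def predict(self, model_key, feature_key):
        """Evaluate the model where the data lives; only the score is returned."""
        self.round_trips += 1
        intercept, coefficients = self.models[model_key]
        values = self.features[feature_key]
        return intercept + sum(c * x for c, x in zip(coefficients, values))


def score_client_side(store, model_key, feature_key):
    """Traditional path: pull the model and the features, then score in the client."""
    intercept, coefficients = store.get_model(model_key)
    values = store.get_features(feature_key)
    return intercept + sum(c * x for c, x in zip(coefficients, values))


if __name__ == "__main__":
    store = ToyInMemoryStore()
    store.set_model("ctr_model", 2.0, [3.0, 4.0])
    store.set_features("user:1", [1.0, 1.0])

    start = store.round_trips
    client_score = score_client_side(store, "ctr_model", "user:1")
    client_trips = store.round_trips - start

    start = store.round_trips
    server_score = store.predict("ctr_model", "user:1")
    server_trips = store.round_trips - start

    print(client_score, client_trips)
    print(server_score, server_trips)
```

In this toy setup both paths produce the same score, but the co-located path issues a single request and never moves the model or the feature vector to the client. The gap grows with model size, which is the situation the summary describes for large models served at scale.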