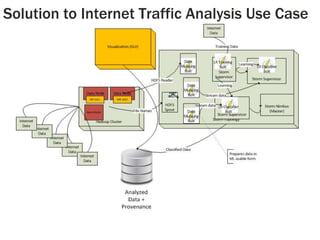

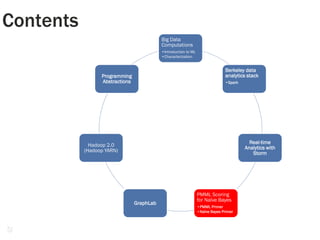

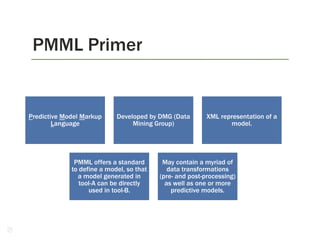

The document discusses big data analytics focusing on machine learning (ML) paradigms, tools, and techniques including Spark, Storm, and GraphLab. It covers various ML approaches such as decision trees, random forests, and naive Bayes with examples in applications like medical decision aids and recommendation systems. Additionally, it highlights the Berkeley Data Analytics Stack and the use of PMML for model sharing across different tools.

![11

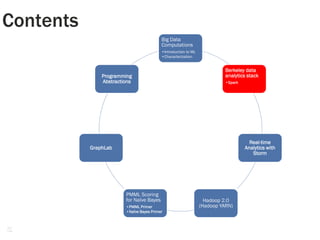

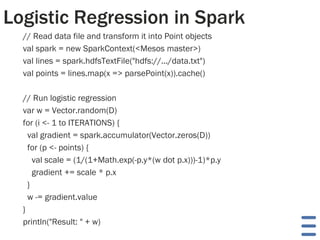

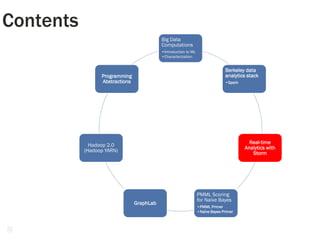

Big Data ComputationsComputations/Operations

Giant 1 (simple stats) is perfect

for Hadoop 1.0.

Giants 2 (linear algebra), 3 (N-

body), 4 (optimization) Spark

from UC Berkeley is efficient.

Logistic regression, kernel SVMs,

conjugate gradient descent,

collaborative filtering, Gibbs

sampling, alternating least squares.

Example is social group-first

approach for consumer churn

analysis [2]

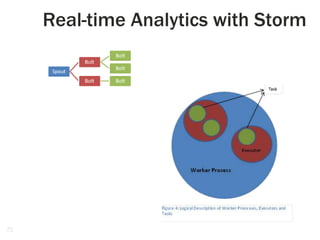

Interactive/On-the-fly data

processing – Storm.

OLAP – data cube operations.

Dremel/Drill

Data sets – not embarrassingly

parallel?

Deep Learning Artificial Neural Networks

Machine vision from Google [3]

Speech analysis from Microsoft

Giant 5 – Graph processing –

GraphLab, Pregel, Giraph

[1] National Research Council. Frontiers in Massive Data Analysis . Washington, DC: The National Academies Press, 2013.

[2] Richter, Yossi ; Yom-Tov, Elad ; Slonim, Noam: Predicting Customer Churn in Mobile Networks through Analysis of Social Groups.

In: Proceedings of SIAM International Conference on Data Mining, 2010, S. 732-741

[3] Jeffrey Dean, Greg Corrado, Rajat Monga, Kai Chen, Matthieu Devin, Quoc V. Le, Mark Z. Mao, Marc'Aurelio Ranzato, Andrew W.

Senior, Paul A. Tucker, Ke Yang, Andrew Y. Ng: Large Scale Distributed Deep Networks. NIPS 2012: 1232-1240](https://image.slidesharecdn.com/publicbigdataanalyticssparkstormisectutorial3mar2014ver1-140312042708-phpapp01/85/Big-Data-Analytics-with-Storm-Spark-and-GraphLab-11-320.jpg)

![Slide 12: Iterative ML Algorithms](https://image.slidesharecdn.com/publicbigdataanalyticssparkstormisectutorial3mar2014ver1-140312042708-phpapp01/85/Big-Data-Analytics-with-Storm-Spark-and-GraphLab-12-320.jpg)

Iterative ML Algorithms

What are iterative algorithms?

- Those that need communication among the computing entities.
- Examples: neural networks, PageRank algorithms, network traffic analysis.

Conjugate gradient descent

- Commonly used to solve systems of linear equations.
- [CB09] tried implementing CG on dense matrices.
- DAXPY: multiplies vector x by constant a and adds y.
- DDOT: dot product of two vectors.
- MatVec: multiplies a matrix by a vector, producing a vector.

Communication overhead

- One MR (MapReduce job) per primitive means 6 MRs per CG iteration and hundreds of MRs per CG computation, leading to tens of GBs of communication even for small matrices.

Other iterative algorithms

- Fast Fourier transform, block tridiagonal.

[CB09] C. Bunch, B. Drawert, M. Norman. Mapscale: a cloud environment for scientific computing. Technical Report, University of California, Computer Science Department, 2009.
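To make the "6 MRs per CG iteration" figure concrete, here is a minimal single-machine sketch of the conjugate gradient method written only in terms of the three primitives named on the slide. The function names (`daxpy`, `ddot`, `matvec`, `conjugate_gradient`) and the call counter are illustrative assumptions, not code from [CB09]; in a MapReduce setting each primitive call would correspond to one job.

```python
def daxpy(a, x, y):
    """DAXPY: return a * x + y for vectors x and y."""
    return [a * xi + yi for xi, yi in zip(x, y)]


def ddot(x, y):
    """DDOT: dot product of two vectors."""
    return sum(xi * yi for xi, yi in zip(x, y))


def matvec(A, x):
    """MatVec: multiply a dense matrix (list of rows) by a vector."""
    return [ddot(row, x) for row in A]


def conjugate_gradient(A, b, tol=1e-10, max_iter=100):
    """Solve A x = b for a symmetric positive-definite A.

    Returns the solution and the number of primitive calls made inside
    the loop (six per iteration: 1 MatVec, 2 DDOT, 3 DAXPY).
    """
    x = [0.0] * len(b)
    r = list(b)          # residual b - A x, with x = 0
    p = list(r)          # initial search direction
    rs_old = ddot(r, r)  # setup cost, not counted per iteration
    primitive_calls = 0
    for _ in range(max_iter):
        if rs_old < tol:
            break
        Ap = matvec(A, p)                  # primitive 1: MatVec
        alpha = rs_old / ddot(p, Ap)       # primitive 2: DDOT
        x = daxpy(alpha, p, x)             # primitive 3: DAXPY
        r = daxpy(-alpha, Ap, r)           # primitive 4: DAXPY
        rs_new = ddot(r, r)                # primitive 5: DDOT
        p = daxpy(rs_new / rs_old, p, r)   # primitive 6: DAXPY
        rs_old = rs_new
        primitive_calls += 6
    return x, primitive_calls


solution, calls = conjugate_gradient([[4.0, 1.0], [1.0, 3.0]], [1.0, 2.0])
print(solution, calls)  # solution is approximately [1/11, 7/11]
```

Because every iteration depends on the results of the previous one, the six primitives cannot be fused into a single independent pass, which is why a one-job-per-primitive mapping multiplies the communication cost.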

![Slide 18: BDAS: Spark](https://image.slidesharecdn.com/publicbigdataanalyticssparkstormisectutorial3mar2014ver1-140312042708-phpapp01/85/Big-Data-Analytics-with-Storm-Spark-and-GraphLab-18-320.jpg)

BDAS: Spark

| Transformations/Actions | Description |
| --- | --- |
| map(function f1) | Passes each element of the RDD through f1 in parallel and returns the resulting RDD. |
| filter(function f2) | Selects the elements of the RDD that return true when passed through f2. |
| flatMap(function f3) | Similar to map, but f3 returns a sequence, so a single input can map to multiple outputs. |
| union(RDD r1) | Returns the union of RDD r1 with self. |
| sample(flag, p, seed) | Returns a randomly sampled (with seed) fraction p of the RDD. |
| groupByKey(noTasks) | Can only be invoked on key-value paired data; returns the data grouped by key. The number of parallel tasks is given as an argument (default is 8). |
| reduceByKey(function f4, noTasks) | Aggregates the result of applying f4 on elements with the same key. The number of parallel tasks is the second argument. |
| join(RDD r2, noTasks) | Joins RDD r2 with self; computes all possible pairs for a given key. |
| groupWith(RDD r3, noTasks) | Joins RDD r3 with self and groups by key. |
| sortByKey(flag) | Sorts the self RDD in ascending or descending order based on flag. |
| reduce(function f5) | Aggregates the result of applying function f5 on all elements of the self RDD. |
| collect() | Returns all elements of the RDD as an array. |
| count() | Counts the number of elements in the RDD. |
| take(n) | Gets the first n elements of the RDD. |
| first() | Equivalent to take(1). |
| saveAsTextFile(path) | Persists the RDD in a file in HDFS or another Hadoop-supported file system at the given path. |
| saveAsSequenceFile(path) | Persists the RDD as a Hadoop sequence file. Can be invoked only on key-value paired RDDs that implement the Hadoop Writable interface or equivalent. |
| foreach(function f6) | Runs f6 in parallel on the elements of the self RDD. |

[MZ12] Matei Zaharia, Mosharaf Chowdhury, Tathagata Das, Ankur Dave, Justin Ma, Murphy McCauley, Michael J. Franklin, Scott Shenker, and Ion Stoica. 2012. Resilient distributed datasets: a fault-tolerant abstraction for in-memory cluster computing. In Proceedings of the 9th USENIX conference on Networked Systems Design and Implementation (NSDI'12). USENIX Association, Berkeley, CA, USA, 2-2.
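The way transformations chain into an action can be illustrated with a word count. The sketch below is a plain-Python imitation of the semantics of a few of the operations listed above; `MiniRDD` is a hypothetical in-memory stand-in introduced only for illustration and is not part of Spark. Unlike this eager toy, real Spark transformations are lazy and only run when an action such as `collect()` is called.

```python
from collections import defaultdict
from functools import reduce


class MiniRDD:
    """Hypothetical single-machine stand-in for an RDD (not Spark code)."""

    def __init__(self, items):
        self.items = list(items)

    # Transformations: each returns a new MiniRDD.
    def map(self, f):
        return MiniRDD(f(x) for x in self.items)

    def filter(self, f):
        return MiniRDD(x for x in self.items if f(x))

    def flatMap(self, f):
        # f returns a sequence, so one input can yield many outputs.
        return MiniRDD(y for x in self.items for y in f(x))

    def reduceByKey(self, f):
        # Group values by key, then fold each group with f.
        groups = defaultdict(list)
        for key, value in self.items:
            groups[key].append(value)
        return MiniRDD((k, reduce(f, vs)) for k, vs in groups.items())

    # Actions: each returns a plain Python value.
    def collect(self):
        return list(self.items)

    def count(self):
        return len(self.items)

    def take(self, n):
        return self.items[:n]


lines = MiniRDD(["big data", "big graphs", "fast data"])
word_counts = (
    lines.flatMap(lambda line: line.split())   # one line -> many words
    .map(lambda word: (word, 1))               # word -> (key, value) pair
    .reduceByKey(lambda a, b: a + b)           # sum the counts per word
    .collect()                                 # action: materialize result
)
print(sorted(word_counts))
# [('big', 2), ('data', 2), ('fast', 1), ('graphs', 1)]
```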

![Slide 43: GraphLab: Ideal Engine for Processing Natural Graphs](https://image.slidesharecdn.com/publicbigdataanalyticssparkstormisectutorial3mar2014ver1-140312042708-phpapp01/85/Big-Data-Analytics-with-Storm-Spark-and-GraphLab-43-320.jpg)

GraphLab: Ideal Engine for Processing Natural Graphs [YL12]

Goals: targeted at machine learning.

- Model graph dependencies; be asynchronous, iterative, dynamic.

Data is associated with edges (weights, for instance) and vertices (user profile data, current interests, etc.).

Update functions live on each vertex.

- Transform data in the scope of the vertex.
- Can choose to trigger neighbours (for example, only if the rank changes drastically).
- Run asynchronously until convergence; no global barrier.

Consistency is important in ML algorithms (some, such as collaborative filtering, do not even converge when there are inconsistent updates).

- GraphLab provides varying levels of consistency: parallelism vs. consistency.

Several algorithms have been implemented, including ALS, K-means, SVM, belief propagation, matrix factorization, Gibbs sampling, SVD, Co-EM, etc.

- Co-EM (Expectation Maximization) algorithm: 15x faster than Hadoop MR; on distributed GraphLab, only 0.3% of Hadoop execution time.

[YL12] Yucheng Low, Danny Bickson, Joseph Gonzalez, Carlos Guestrin, Aapo Kyrola, and Joseph M. Hellerstein. 2012. Distributed GraphLab: a framework for machine learning and data mining in the cloud. Proceedings of the VLDB Endowment 5, 8 (April 2012), 716-727.
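The idea of a per-vertex update function that re-triggers its neighbours only when its own value changes noticeably can be sketched as follows. This is a sequential simulation under stated assumptions, not the GraphLab API: `pagerank_dynamic`, the work queue, and the `tol` threshold are illustrative names, and every vertex is assumed to appear as a key of `out_edges` with at least one outgoing edge.

```python
from collections import deque


def pagerank_dynamic(out_edges, damping=0.85, tol=1e-6):
    """Run vertex updates from a work queue until no vertex is scheduled.

    out_edges maps each vertex to the list of vertices it links to.
    Returns the ranks and the total number of vertex updates executed.
    """
    vertices = list(out_edges)
    in_edges = {v: [] for v in vertices}
    for u, targets in out_edges.items():
        for v in targets:
            in_edges[v].append(u)

    rank = {v: 1.0 for v in vertices}
    queue = deque(vertices)      # initially every vertex is scheduled
    scheduled = set(vertices)
    updates = 0

    while queue:
        v = queue.popleft()
        scheduled.discard(v)

        # Update function: reads only data in the scope of v
        # (its in-neighbours' ranks) and rewrites v's own rank.
        incoming = sum(rank[u] / len(out_edges[u]) for u in in_edges[v])
        new_rank = (1.0 - damping) + damping * incoming
        changed = abs(new_rank - rank[v]) > tol
        rank[v] = new_rank
        updates += 1

        # Dynamic scheduling: trigger neighbours only on a real change.
        if changed:
            for w in out_edges[v]:
                if w not in scheduled:
                    queue.append(w)
                    scheduled.add(w)

    return rank, updates


graph = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
ranks, n_updates = pagerank_dynamic(graph)
print(ranks, n_updates)
```

There is no global iteration counter or barrier here: the computation ends when the queue drains, and vertices whose inputs have stabilized stop being recomputed.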

![Slide 44: GraphLab 2: PowerGraph](https://image.slidesharecdn.com/publicbigdataanalyticssparkstormisectutorial3mar2014ver1-140312042708-phpapp01/85/Big-Data-Analytics-with-Storm-Spark-and-GraphLab-44-320.jpg)

GraphLab 2: PowerGraph, Modeling Natural Graphs [1]

GraphLab could not scale to the AltaVista web graph of 2002 (1.4B vertices, 6.7B edges).

- Most graph-parallel abstractions assume small neighbourhoods, that is, low-degree vertices.
- But natural graphs (LinkedIn, Facebook, Twitter) are power-law graphs.
- Power-law graphs are hard to partition; high-degree vertices limit parallelism.

PowerGraph provides a new way of partitioning power-law graphs.

- Edges are tied to machines; vertices (especially high-degree ones) span machines.
- Execution is split into 3 phases: gather, apply, and scatter.

Triangle counting on the Twitter graph

- Hadoop MR took 423 minutes on 1536 machines.
- GraphLab 2 took 1.5 minutes on 1024 cores (64 machines).

[1] Joseph E. Gonzalez, Yucheng Low, Haijie Gu, Danny Bickson, and Carlos Guestrin (2012). "PowerGraph: Distributed Graph-Parallel Computation on Natural Graphs." Proceedings of the 10th USENIX Symposium on Operating Systems Design and Implementation (OSDI '12).
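As a rough illustration of edges being tied to machines while a vertex spans several of them, the sketch below runs one synchronous gather-apply-scatter round of PageRank over edge lists split across simulated machines. It is a simplified assumption-laden sketch, not the PowerGraph API: `gas_step`, `edges_by_machine`, and `tol` are hypothetical names, and the "machines" are just Python lists.

```python
def gas_step(edges_by_machine, rank, out_degree, damping=0.85, tol=1e-9):
    """One gather-apply-scatter round of PageRank.

    edges_by_machine: list of edge lists, one per simulated machine;
                      each edge is a (src, dst) pair.
    Returns the new ranks and the set of vertices activated by scatter.
    """
    # Gather: each machine sums contributions over its local edges only,
    # so a high-degree vertex is processed in parallel pieces.
    partial_sums = []
    for local_edges in edges_by_machine:
        local = {}
        for src, dst in local_edges:
            local[dst] = local.get(dst, 0.0) + rank[src] / out_degree[src]
        partial_sums.append(local)

    # Combine the partial sums of vertices that span several machines.
    total = {v: 0.0 for v in rank}
    for local in partial_sums:
        for v, s in local.items():
            total[v] += s

    # Apply: update each vertex value from its combined gather result.
    new_rank = {v: (1.0 - damping) + damping * total[v] for v in rank}

    # Scatter: along each edge, activate the destination if the source
    # value changed by more than tol.
    active = set()
    for local_edges in edges_by_machine:
        for src, dst in local_edges:
            if abs(new_rank[src] - rank[src]) > tol:
                active.add(dst)
    return new_rank, active


edges = [("a", "b"), ("a", "c"), ("b", "c"), ("c", "a")]
out_degree = {"a": 2, "b": 1, "c": 1}
start = {"a": 1.0, "b": 1.0, "c": 1.0}

# Same graph, placed on one machine versus split across two machines.
single, _ = gas_step([edges], start, out_degree)
split, active = gas_step([edges[:2], edges[2:]], start, out_degree)
print(split, active)
```

Vertex `c` receives edges stored on both simulated machines, yet the split run yields the same ranks as the single-machine run because the gather results are summed before apply.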

![Slide 51: Forge](https://image.slidesharecdn.com/publicbigdataanalyticssparkstormisectutorial3mar2014ver1-140312042708-phpapp01/85/Big-Data-Analytics-with-Storm-Spark-and-GraphLab-51-320.jpg)

Forge: Approach to build high performance Domain Specific Languages

- Domain-specific language approach from Stanford.
- Forge [AKS13]: a meta DSL for high-performance DSLs.
- 40X faster than Spark!
- OptiML: DSL for machine learning.

[AKS13] Arvind K. Sujeeth, Austin Gibbons, Kevin J. Brown, HyoukJoong Lee, Tiark Rompf, Martin Odersky, and Kunle Olukotun. 2013. Forge: generating a high performance DSL implementation from a declarative specification. In Proceedings of the 12th international conference on Generative programming: concepts & experiences (GPCE '13). ACM, New York, NY, USA, 145-154.